Summary

In dynamic environments, adaptive behavior requires striking a balance between harvesting currently available rewards (exploitation) and gathering information about alternative options (exploration) [1–4]. Such strategic decisions should incorporate not only recent reward history, but also opportunity costs and environmental statistics. Previous neuroimaging [5–8] and neurophysiological [9–13] studies have implicated several brain areas, including orbitofrontal cortex, anterior cingulate cortex, and ventral striatum, in distinguishing between bouts of exploration and exploitation. Nonetheless, the neuronal mechanisms that underlie selection between these strategies remain poorly understood. We hypothesized that posterior cingulate cortex (CGp), an area linking reward processing, attention [14], memory [15, 16], and motor control systems [17], mediates the integration of decision variables such as reward [18], uncertainty [19], and target location [20] that underlie the dynamic balance of exploration and exploitation. Here we show that CGp neurons distinguish between exploratory and exploitative decisions made by monkeys in a dynamic foraging task. Moreover, the firing rates of these neurons predict in graded fashion the strategy most likely to be selected on upcoming trials. This encoding is distinct from mere switching between spatial targets, and is independent of the absolute magnitudes of rewards. These observations implicate CGp in both the integration of individual outcomes across decision making and the modification of strategy in dynamic environments.

Results

To probe the neuronal processes mediating the strategic balance of immediate reward and information acquisition, we recorded the activity of single CGp neurons in two rhesus macaques performing a “restless” variant of the four-armed bandit for juice rewards [3, 5] (Figure 1). This variant provides a high level of environmental variability with a behaviorally tractable number of options. On each trial, monkeys chose one of four targets whose payoffs were randomly selected from distributions centered about their values on the previous trial. Once a target was chosen, monkeys in principle had perfect knowledge of its present value (there was no added variance in payouts), though the values of all targets changed each trial. As a result, monkeys had to select an option to learn its current value and integrate this information with their statistical knowledge of the environment to predict its relative value on upcoming trials.

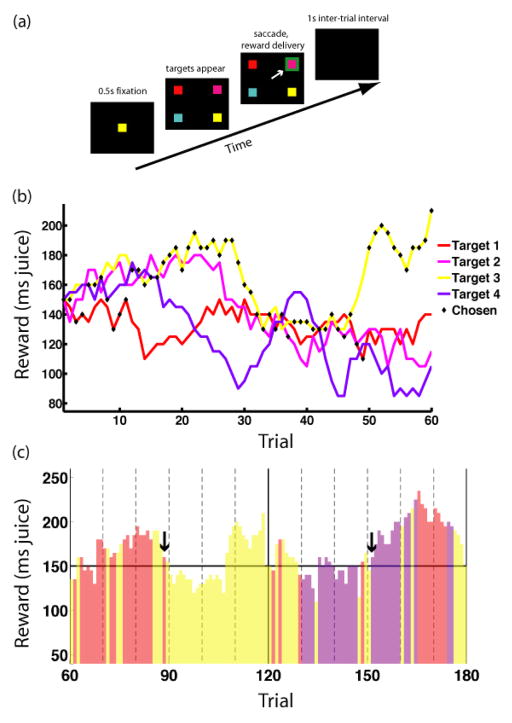

Figure 1.

Task and example reward schedule used to study the explore/exploit dilemma. (a) Schematic of the 4-armed bandit task. Following a 0.5 s fixation period, the central cue disappears, replaced by four colored targets. Subjects indicate choices by shifting gaze to targets, after which the chosen target is highlighted in green for 1 s and a juice reward is delivered. Consecutive trials are separated by a 1 s inter-trial interval. Every 60 trials, a block change cue appears, and all target values are reset to the mean reward value. (b) Sample payouts and choices for the four options over a single block. Reward values for each target follow a random walk with fixed standard deviation for step size, biased toward the mean of 0.15 s. Black diamonds indicate choices made by the monkey during the given block. (c) Sample monkey (B) choice behavior over two blocks of the 4-armed bandit task. Bar colors indicate target chosen, bar heights the values of rewards received. The horizontal line indicates the mean reward value. Monkeys exhibit bouts of exploitation of favorable targets interspersed with exploration of alternatives. Arrows indicate trials that might plausibly be classified as either exploratory or exploitative, depending on the behavioral model used. Both involve a change in target selected (action switch), but also a return to a target with high remembered value, and so might be classified as exploitative.

Both monkeys were highly adept at optimizing reward. They earned 92% and 91%, respectively, of the total reward that would have been earned by an omniscient observer. Nevertheless, despite this high level of performance, a perfectly greedy decision maker, focused on the option with highest immediate value, would have harvested more, though not all, available reward (see Supplementary Materials).

More importantly, nothing intrinsic to the task design serves to distinguish exploratory from exploitative decisions. On each trial, both monkeys simply selected among the four available options and received a reward. As a result, individual decisions must be classified as exploratory or exploitative according to a model-based analysis of each monkey’s behavior, with model parameters chosen to maximize the likelihood of observed choices. We report here only results based on our best-fitting Kalman filter model, though results were similar for other models as well (see Supplementary Materials).
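The classification step can be illustrated with a minimal sketch. It is not the fitted model reported here, whose details are in Supplementary Materials. The function name `kalman_classify`, the parameter values (`prior_mean`, `diffusion_var`, `decay`), and the rule that a choice counts as exploitative when it targets the option with the highest predicted value are all assumptions made for illustration, and the likelihood-based fitting of parameters to observed choices is omitted.

```python
def kalman_classify(choices, rewards, n_targets=4, prior_mean=200.0,
                    diffusion_var=400.0, decay=0.9, obs_var=0.0):
    """Label each trial 'explore' or 'exploit' from Kalman-filtered value estimates.

    All parameter values are illustrative placeholders, not fitted values.
    obs_var=0 reflects a task with no added variance in payouts.
    """
    means = [prior_mean] * n_targets      # predicted value of each target
    variances = [0.0] * n_targets         # uncertainty about each prediction
    labels = []
    for choice, reward in zip(choices, rewards):
        # Assumed rule: exploit = choosing a target with the highest predicted value.
        labels.append("exploit" if means[choice] >= max(means) else "explore")

        # Measurement update for the chosen target only.
        total_var = variances[choice] + obs_var
        gain = variances[choice] / total_var if total_var > 0 else 1.0
        means[choice] += gain * (reward - means[choice])
        variances[choice] *= (1.0 - gain)

        # Time update for every target: values drift toward the mean and
        # uncertainty grows because all targets change on each trial.
        for k in range(n_targets):
            means[k] = decay * means[k] + (1.0 - decay) * prior_mean
            variances[k] = decay ** 2 * variances[k] + diffusion_var
    return labels


# Hypothetical sequence: stay on target 0, sample target 1, return to target 0.
example_labels = kalman_classify([0, 0, 1, 0], [200.0, 260.0, 150.0, 250.0])
```

Under these placeholder parameters, only the trial that samples the poorer target is labeled exploratory; the return to the remembered high-value target is labeled exploitative even though it involves a switch of target.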

We analyzed the firing rates of 83 single neurons in CGp in both monkeys performing the 4-armed bandit task (59 from monkey N and 24 from monkey B). We focused on two trial epochs, a 2-second decision epoch (DE; 1s before trial initiation extending to juice delivery) and a 2-second post-reward evaluation epoch (EE; from the offset of juice delivery through the inter-trial interval). Analyses based on mean firing rates in each epoch readily identified neurons that discriminated between the two strategies (14%, n=12/83, DE; 16%, n=13/83, EE; p<0.05, Mann-Whitney U-test), with 22% of neurons doing so in at least one epoch (n=18/83; p<0.025, Bonferroni-corrected Mann-Whitney U-test).
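The per-neuron comparison of explore and exploit firing rates can be sketched as follows. This is a minimal illustration that uses the normal approximation to the Mann-Whitney U statistic with a tie correction and no continuity correction, so it is suitable only for reasonably large trial counts. The function names and the firing rates in the example are hypothetical and are not taken from the recorded data.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        stop = start
        while stop + 1 < len(order) and values[order[stop + 1]] == values[order[start]]:
            stop += 1
        shared = (start + stop) / 2.0 + 1.0
        for pos in range(start, stop + 1):
            ranks[order[pos]] = shared
        start = stop + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U for sample x, two-sided p-value from the normal approximation)."""
    n1, n2 = len(x), len(y)
    pooled = list(x) + list(y)
    ranks = average_ranks(pooled)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2.0
    n = n1 + n2
    counts = {}
    for v in pooled:
        counts[v] = counts.get(v, 0) + 1
    tie_term = sum(c ** 3 - c for c in counts.values())
    var_u = n1 * n2 / 12.0 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:
        return u1, 1.0                      # all values identical
    z = (u1 - n1 * n2 / 2.0) / math.sqrt(var_u)
    return u1, math.erfc(abs(z) / math.sqrt(2.0))


# Hypothetical mean firing rates (spikes/s) in one epoch for one neuron.
explore_rates = [1.0, 2.0, 3.0, 4.0, 5.0]
exploit_rates = [6.0, 7.0, 8.0, 9.0, 10.0]
u_stat, p_value = mann_whitney_u(explore_rates, exploit_rates)
```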

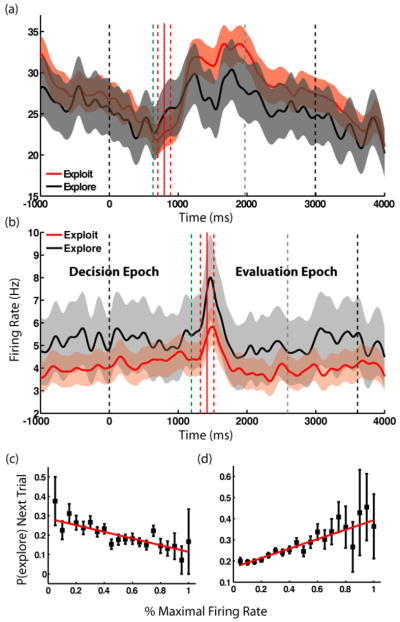

Figure 2a depicts the average firing rate of a single neuron on trials classified as either exploratory or exploitative. Responses on exploit trials were significantly higher in both decision and evaluation epochs (Mann-Whitney U-test, p<0.01). In contrast, the neuron whose activity is plotted in Figure 2b was more responsive on exploratory trials in both epochs (Mann-Whitney U-test, p<0.01). While the population as a whole exhibits slightly higher firing on exploratory trials in both epochs (modulation index=0.0084 (DE), 0.0026 (EE)), the population of cells with significant modulation is mixed (modulation index=0.046 (DE), −0.011 (EE)), indicating heterogeneity in single-cell responses to the different strategies. Thus firing rates in CGp distinguish between upcoming exploratory and exploitative decisions in the epoch leading up to selection and continue to reflect that choice during the post-reward delay.

Figure 2.

Neurons in CGp distinguish between exploration and exploitation. (a,b) Sample neurons (neurons #62 and #12) with significant differences in firing during explore and exploit trials for both the decision and evaluation epochs, with firing aligned to trial onset. Individual cells may prefer either explore (black) or exploit (red) trials. The task begins at time 0. Onset of “go” cue (green line), reward delivery (red line), beginning of inter-trial interval (gray line), and end of trial are mean times. Dashed red lines indicate ± 1 standard deviation in reward onset. Shaded areas represent S.E.M. (c,d) Neurons in CGp encode probability of exploring on the next trial. Points are probabilities of exploring next trial as a function of percent maximal firing rate in the decision epoch, averaged over negatively- and positively-tuned populations of neurons (c and d, respectively). Error bars are S.E.M.

We previously reported that responses of CGp neurons predict impending switches from one option to another on the next trial in a simple two-alternative task [11]. Based on that finding, we hypothesized that CGp neurons would also carry predictive information about more general impending choices of strategy. To test this hypothesis, we regressed the probability of exploration on the upcoming decision as a function of observed firing rate for each neuron. Of the 83 neurons in our sample, about 16% showed significant correlations between firing rate during the decision epoch and the probability of exploration on the ensuing choice (n=13, p<0.05, Mann-Whitney U-test; p(n>12)<0.001, binomial test). Even more importantly, 16% of neurons showed a correlation between firing during EE and the probability of exploration on the following trial (n=13, p<0.05, Mann-Whitney U-test; p(n>12)<0.001, binomial test), suggesting that CGp differentially signals the probability of strategic decisions within a block of trials. Figures 2c and d depict the separate population average firing rates for the subsets of cells whose activity correlated negatively (n=6) and positively (n=7) with exploration. The average response for each of these two groups of neurons strongly predicted the probability of impending strategic choices in a graded fashion.
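The population-level binomial test asks how likely it is that more than 12 of 83 neurons would pass a per-neuron criterion of 0.05 by chance alone. A minimal sketch of that tail probability follows; the function name `binomial_tail` is hypothetical, and the calculation assumes that neurons are independent.

```python
from math import comb


def binomial_tail(n_total, n_observed, alpha):
    """P(X >= n_observed) for X ~ Binomial(n_total, alpha)."""
    return sum(comb(n_total, k) * alpha ** k * (1.0 - alpha) ** (n_total - k)
               for k in range(n_observed, n_total + 1))


# Chance probability of 13 or more significant neurons out of 83 at alpha = 0.05.
p_chance = binomial_tail(83, 13, 0.05)
```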

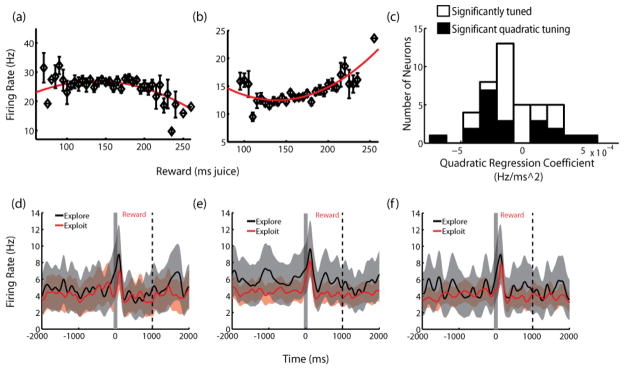

One potential confound of these results arises from the link between exploitation and the likelihood of increased reward. Because we might expect that exploitative choices, on average, yielded higher rewards, a possible alternative interpretation of the present data is that effects of strategy on neuronal activity are reducible entirely to neuronal sensitivity to reward value. To investigate this possibility, we calculated and fit reward size tuning curves for each of our 83 neurons (Figure 3a and b). Consistent with previous studies [11, 18], we found that the firing rates of CGp neurons during the decision epoch varied with the amount of reward received on the previous trial (DE, n=39, p<0.05, F-test for quadratic regression), and that firing rates in the evaluation epoch varied with the amount just received (EE, n=44, p<0.05, F-test). Over the range of experienced reward values (50 μL to 350 μL), we found a heterogeneity of tuning curves: some were linear (n=14 positive, n=6 negative, DE; n=18 positive, n=9 negative, EE; p<0.05 nonzero regression coefficient), while others were U-shaped, both concave up (n=8, DE; n=8, EE; p<0.05 nonzero regression coefficient) and concave down (n=11, DE; n=9, EE; p<0.05 nonzero regression coefficient). We therefore restricted our next series of analyses to those trials where monkeys adopted different strategies but received the same amount of reward. We found that 12% (n=10; p(n>9)<0.001, binomial test) of recorded neurons still showed different mean firing rates on explore versus exploit trials (p<0.01, Bonferroni-corrected Mann-Whitney U-test). Data for an example neuron showing this effect are shown in Figures 3d-f. Here, the middle third of reward values has been subdivided into three categories, medium-low, medium-medium, and medium-high, and neuronal firing plotted as a function of time for both explore and exploit trials, controlled for received reward. This neuron, like many others in our population, showed clear sensitivity to strategy even when we controlled for the value of the reward the monkeys received.

Figure 3.

Reward sensitivity of CGp neurons does not explain sensitivity to strategy. (a,b) Sample tuning curves from single neurons, showing firing as a function of reward received. Neurons display both positively- and negatively-monotonic tuning, as well as parabolic tuning (both concave up and concave down). (c) Histogram of quadratic regression coefficients. Positive values are concave up, as in b. Uncolored bars represent neurons with significant overall regressions, as determined by F-test. Black bars represent the subset of this population with significant coefficients for quadratic tuning. (d-f) Sample neuron (cell 12) firing rates on explore and exploit trials, controlled for low, medium, and high reward and aligned to reward onset. Bins are defined as: low: 115–135 ms, medium: 140–160 ms, high: 165–185 ms. In d, the low case, the difference in mean reward sizes between explore and exploit conditions is 5 ms; in e and f, less than 2 ms. Shaded regions are S.E.M.

Two other possible confounds arise from the known spatial tuning of CGp and the close relationship between exploration and simply switching between targets. As reported previously [21], we found that 63% of neurons were tuned for the location of the target chosen (n=52/83, p<0.05, one-way ANOVA of mean firing rates for each target over all trials; see Supplementary Materials). Across the population, 39% of neurons were significantly tuned for both reward size and target location [18], while 23% were tuned for neither (EE; 34% and 24%, respectively, in DE). However, the population as a whole showed no consistent target tuning across trials (p>0.2, one-sample t-test for contralateral and upper-hemifield tuning indices). In the case of target switching, since repeatedly choosing a poor target is not necessarily exploitative (if higher reward has recently been sampled elsewhere), there is not a strict one-to-one correspondence between exploitation and perseveration, or exploration and switching. As a result, our task design allows for the possibility of disambiguating these phenomena.

However, a partial correlation analysis of neuronal firing rates and upcoming decisions (firing rate in the DE for decision on current trial; firing rate in EE for decision in following trial) while controlling for the effects of reward tuning, spatial tuning, target switching, and previous explore/exploit decision revealed significant correlations in 12% of neurons (n=11, DE; n=10, EE; p<0.05 Spearman partial correlation; p(n>9)<0.01, binomial test). Thus, even when all known effects on firing rate are accounted for, a significant number of neurons still exhibited clear predictive correlations with upcoming strategy. Collectively, these results indicate that single neurons in CGp not only receive information about both previous rewards and previous choices [19] and maintain that information across trials [11, 19], as reported previously, but that these same neurons also carry signals related to dynamic changes in choice strategy in a multiplexed format.

Discussion

We found that, when choosing among multiple targets whose relative values changed dynamically, neurons in posterior cingulate cortex signaled the distinction between trials on which monkeys pursued an exploratory rather than an exploitative strategy. This signal was robust against classifications of trials based on differing models of behavior, including a perfectly greedy strategy and a simple heuristic based on comparison to a reward threshold (see Supplementary Material). More importantly, single neurons signaled in graded fashion the probability of pursuing each strategy on upcoming trials.

Previous work has shown that CGp neurons are sensitive to reward [18], risk [19], and option-switching [11], and integrate this information across multiple trials [11], but the current study generalizes the decision environment to one in which exploration and exploitation are distinguishable from a simple “win-stay/lose-shift” heuristic [11, 22] based only on the most recent reward received, and in which outcomes must be evaluated in light of multiple options with dynamically-changing rewards. As a result, relatively bad outcomes in rich environments may be acceptable under circumstances where all alternatives are poor, and searching for better options necessitates choosing among several competing alternatives, each with a distinct reward history. Strategic decisions in such an environment thus require greater abstraction and integration of information than in comparatively static contexts, since no single variable (or single trial) contains sufficient information on which to base a decision.

Together, these results invite the hypothesis that CGp is part of a network that monitors the outcomes of individual decisions and integrates that information into higher-level strategies spanning multiple choices. Thus, while the prolonged time courses of firing rate changes in CGp are unlikely to be responsible for decisions on individual trials, their tonic activity levels may be responsible for encoding the gradual accumulation of information that gives rise to changes in strategy. However, we do not expect that this integration of single-trial history with strategic information is limited to CGp, nor is this its sole function. For example, Daw et al. [5] found that exploratory decisions are associated with activation in frontal polar cortex and intraparietal sulcus, whereas exploitative decisions are associated with activity in striatum. We speculate that information about individual rewards and reward-predictions is initially computed in striatum, orbitofrontal cortex, and medial prefrontal cortex, subsequently combined with recent reward outcome and choice history and maintained online in CGp, and finally passed to ACC, where it is utilized in the selection of appropriate actions. Moreover, reciprocal connections between ACC and CGp may play a role in learning which combinations of single-trial variables are most relevant when deciding among strategies to maximize reward.

In this framework, recent reward history, computational difficulty, stimulus novelty, memory load, and the statistics of the environment are distilled into a small number of task-related decision variables for the purposes of encoding and selecting among potential actions. Thus, individual neurons that compose this network would be expected to display sensitivity to the many single-trial variables like risk, reward, and spatial location that serve as its inputs. We found that these variables are represented multi-modally by neurons in CGp. As a result, we suspect that CGp may play a key role in the process of learning the combinations of stimuli and accumulated statistics most relevant to making decisions, analogous to its role in simple conditioning [23–25]. This is in keeping with our observation of increased firing rates in response to block boundaries, near-threshold decisions, and aborted trials in the bandit task, as also observed in ACC [12]. If so, CGp dysfunction may be related to deficiencies in memory-guided learning and action selection observed in disorders like Alzheimer’s disease and obsessive-compulsive disorder, and its proper function may be crucial to the flexible adaptation of strategy in response to changing environments.

Experimental Procedures

Surgical Procedures

All procedures were approved by the Duke University Institutional Animal Care and Use Committee and were conducted in compliance with the Public Health Service’s Guide for the Care and Use of Animals. Two rhesus monkeys (Macaca mulatta) served as test subjects for recording. A small prosthesis and a stainless steel recording chamber were attached to the calvarium. The chamber was placed over CGp at the intersection of the interaural and midsagittal planes. Animals were habituated to laboratory conditions and trained to perform oculomotor tasks for liquid reward. Animals received analgesics and antibiotics after all surgeries. The chamber was kept sterile with antibiotic washes and sealed with sterile caps.

Behavioral Techniques

Monkeys were familiar with the task. Eye position was sampled at 1000 Hz (camera, SR Research). Data were recorded by a computer running Matlab (The Mathworks) with Psychtoolbox [26] and Eyelink [27]. Visual stimuli were squares (6° wide) on a computer monitor 50 cm away. A solenoid valve controlled juice delivery. Juice flavor was the same for each target.

On every trial, a central cue appeared and stayed on until the monkey fixated it. Fixation was maintained within a 1–2° window. After a brief delay, the central cue disappeared, and the four targets were displayed in the corners of the screen. Targets appeared in the same location each trial. After selection of a target, its border was illuminated and reward was delivered, followed by a 1 s inter-trial interval. Rewards varied from 40 ms to 280 ms of solenoid open time in 5 ms increments (50 μL to 350 μL, in 7.5 μL increments). Juice volumes were linear in solenoid open time, and we have previously shown that monkeys discriminate juice volumes as small as 20 μL [18]. All target values began at 200 μL and reset each block. Blocks were 60 trials long and cued by the appearance of a gray square in the center of the screen. Reward values for all targets changed each trial according to a biased random walk (see Supplementary Materials).
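The reward schedule described above can be sketched as a short simulation. This is not the task code used in the experiments. The reward range, increment, starting value, block length, and number of targets are taken from the text, but the step standard deviation (`step_sd_ul`) and the strength of the pull toward the mean (`pull`) are placeholder assumptions, since the actual random-walk parameters are given only in Supplementary Materials.

```python
import random

MIN_UL, MAX_UL, STEP_UL = 50.0, 350.0, 7.5   # reward range and increment (from the text)
START_UL = 200.0                              # value of every target at block start
BLOCK_LEN = 60                                # trials per block
N_TARGETS = 4


def quantize(value_ul):
    """Clip to the allowed range and snap to the nearest 7.5 uL increment."""
    clipped = min(MAX_UL, max(MIN_UL, value_ul))
    steps = round((clipped - MIN_UL) / STEP_UL)
    return MIN_UL + steps * STEP_UL


def simulate_block(rng, step_sd_ul=20.0, pull=0.1):
    """Return one block of payoffs as a list of per-trial lists (one value per target).

    step_sd_ul and pull are illustrative placeholders, not the study's parameters.
    """
    values = [START_UL] * N_TARGETS
    schedule = []
    for _ in range(BLOCK_LEN):
        schedule.append(list(values))
        # Every target takes a Gaussian step on every trial, biased toward the mean.
        values = [quantize(v + pull * (START_UL - v) + rng.gauss(0.0, step_sd_ul))
                  for v in values]
    return schedule


schedule = simulate_block(random.Random(0))
```

Because every target moves on every trial, the value of an unchosen target becomes progressively less certain the longer it goes unsampled, which is what makes exploration informative in this design.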

Microelectrode Recording Techniques

Single electrodes (Frederick Haer Co) were lowered under microdrive guidance (Kopf) until the waveforms of one to three neurons were isolated. Individual action potentials were identified by standard criteria and isolated on a Plexon system (Plexon Inc, Dallas, TX). Neurons were selected on the basis of the quality of isolation, but not on selectivity for the task. Recordings were made in areas 23 and 31 in the cingulate gyrus and ventral bank of the cingulate sulcus, anterior to the intersection of the marginal and horizontal rami.

Statistical Methods

We used an alpha of 0.05 as a criterion for significance. Peristimulus time histograms (PSTHs) were constructed by aligning spikes to trial events, averaging across trials, and smoothing with a Gaussian filter with 50 ms standard deviation. Shaded regions in PSTHs represent ± SEM, also Gaussian smoothed. Firing rate modulation indices were calculated in each epoch as $(f_{\text{explore}} - f_{\text{exploit}})/(f_{\text{explore}} + f_{\text{exploit}})$, where $f$ is the firing rate averaged over the relevant subset of trials. Behavioral parameters were fitted by custom scripts written using the Matlab Optimization Toolbox (The Mathworks). Details of modeling can be found in Supplementary Materials.
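The PSTH construction can be sketched as follows. This is an illustrative implementation, not the analysis code used in the study. The 1 ms bin width, the truncation of the kernel at three standard deviations, and the renormalization of the kernel at the edges of the analysis window are assumptions, and the function names are hypothetical.

```python
import math


def gaussian_kernel(sd_ms, bin_ms=1.0, n_sd=3):
    """Normalized Gaussian weights truncated at n_sd standard deviations."""
    half = int(round(n_sd * sd_ms / bin_ms))
    weights = [math.exp(-0.5 * ((k * bin_ms) / sd_ms) ** 2)
               for k in range(-half, half + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def psth(spike_times_by_trial, t_start_ms, t_stop_ms, bin_ms=1.0, sd_ms=50.0):
    """Trial-averaged firing rate (spikes/s), smoothed with a Gaussian filter.

    spike_times_by_trial: one list of spike times (ms, event-aligned) per trial.
    """
    n_bins = int(round((t_stop_ms - t_start_ms) / bin_ms))
    counts = [0.0] * n_bins
    for spikes in spike_times_by_trial:
        for t in spikes:
            idx = int((t - t_start_ms) // bin_ms)
            if 0 <= idx < n_bins:
                counts[idx] += 1.0
    n_trials = len(spike_times_by_trial)
    rate = [c / n_trials / (bin_ms / 1000.0) for c in counts]

    kernel = gaussian_kernel(sd_ms, bin_ms)
    half = len(kernel) // 2
    smoothed = []
    for i in range(n_bins):
        acc, norm = 0.0, 0.0
        for k, w in enumerate(kernel):
            j = i + k - half
            if 0 <= j < n_bins:          # renormalize where the kernel leaves the window
                acc += w * rate[j]
                norm += w
        smoothed.append(acc / norm)
    return smoothed
```

Smoothing the across-trial SEM trace with the same kernel would give the shaded regions; that step is omitted here for brevity.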

Firing Rate Analysis

Analyses utilized a binary, model-based classification of choices on each trial as exploratory or exploitative (see Supplementary Materials). We tested for significant differences in firing rates in both the decision and evaluation epochs as a function of the explore/exploit classification of the decision on the current trial (that is, effects on $\mathrm{DE}_n$ and $\mathrm{EE}_n$ as a function of $x_n$, where $x_n$ is the binary explore/exploit variable). We also tested for predictive correlations between firing in one epoch and upcoming decision ($\mathrm{DE}_n$ with $x_n$ and $\mathrm{EE}_n$ with $x_{n+1}$). To do this, we binned firing rates for each neuron into deciles of percent maximal firing and examined the percentage of exploratory decisions made subsequent to epochs with firing rates in each bin. This allowed us to construct a probability of exploration as a function of percent maximal firing, which we averaged across significant cells of each tuning.
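The binning procedure can be sketched for a single neuron as follows. The sketch assumes that "deciles of percent maximal firing" means ten equal-width bins spanning 0 to 100% of the neuron's maximal epoch firing rate; the function name and the example values are hypothetical.

```python
def explore_probability_by_decile(firing_rates, explored_next):
    """Fraction of exploratory decisions following epochs in each firing-rate bin.

    firing_rates: epoch firing rate on each trial for one neuron.
    explored_next: True where the associated upcoming decision was exploratory.
    Returns ten values (0-10%, 10-20%, ..., 90-100% of maximal firing);
    bins containing no trials are reported as None.
    """
    max_rate = max(firing_rates)
    n_explore = [0] * 10
    n_total = [0] * 10
    for rate, explored in zip(firing_rates, explored_next):
        pct = 100.0 * rate / max_rate if max_rate > 0 else 0.0
        bin_idx = min(int(pct // 10), 9)   # 100% falls in the top bin
        n_total[bin_idx] += 1
        if explored:
            n_explore[bin_idx] += 1
    return [ne / nt if nt else None for ne, nt in zip(n_explore, n_total)]


# Hypothetical neuron whose low firing rates precede exploration.
rates = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
explored = [True] * 5 + [False] * 5
curve = explore_probability_by_decile(rates, explored)
```

Averaging such curves across the positively tuned and negatively tuned cells separately would yield population curves of the kind plotted in Figure 2.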

Our reward controls were performed by grouping the 45 distinct reward values into 9 bins and comparing firing rates within each bin during the evaluation epoch on explore and exploit trials. Significance levels utilize a Bonferroni correction for the number of tests performed, which varied (not all bins contained an explore or exploit trial). Our reward-controlled plots grouped the 15 middle rewards into three groups of five, denoted “medium-high,” “medium-medium,” and “medium-low.”

Our partial correlation analyses correlated (raw, unbinned) firing rate in a given epoch ($\mathrm{DE}_n$ or $\mathrm{EE}_n$) with the upcoming explore/exploit decision ($x_n$ and $x_{n+1}$, respectively). In each case, the correlation is controlled for spatial location (split into two variables, one for upper vs. lower hemifield and one for left vs. right hemifield, each taking values ±1), previous received reward ($r_{n-1}$ and $r_n$, respectively), chosen target switch (binary; $s_n$ and $s_{n+1}$, respectively), and previous explore/exploit choice ($x_{n-1}$ and $x_n$, respectively). Correlations were calculated as Spearman rank correlations, and so allow for generic monotonic relations among variables.
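A Spearman partial correlation of this kind can be sketched by rank-transforming every variable and reading the partial correlation off the inverse of the rank correlation matrix. This is one standard way to compute it and is offered as an illustration, not as the code used in the study. The function names are hypothetical, significance testing is omitted, and the sketch assumes that no variable is constant and that the correlation matrix is invertible.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        stop = start
        while stop + 1 < len(order) and values[order[stop + 1]] == values[order[start]]:
            stop += 1
        shared = (start + stop) / 2.0 + 1.0
        for pos in range(start, stop + 1):
            ranks[order[pos]] = shared
        start = stop + 1
    return ranks


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (neither constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)


def invert(matrix):
    """Gauss-Jordan inverse with partial pivoting."""
    n = len(matrix)
    aug = [list(row) + [1.0 if i == j else 0.0 for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular correlation matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [v / scale for v in aug[col]]
        for r in range(n):
            if r != col:
                factor = aug[r][col]
                aug[r] = [v - factor * c for v, c in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def partial_spearman(x, y, covariates):
    """Spearman correlation of x and y, controlling for each covariate sequence."""
    columns = [average_ranks(c) for c in [x, y] + list(covariates)]
    k = len(columns)
    corr = [[1.0 if i == j else pearson(columns[i], columns[j]) for j in range(k)]
            for i in range(k)]
    precision = invert(corr)
    return -precision[0][1] / math.sqrt(precision[0][0] * precision[1][1])
```

In the setting described above, `x` would hold the firing rate in one epoch, `y` the binary explore/exploit decision, and `covariates` the two hemifield variables, the previous reward, the switch indicator, and the previous explore/exploit choice.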

Supplementary Material

Acknowledgments

This work was supported by a NIDA post-doctoral fellowship 023338-01 (BYH), NIH grant R01EY013496 (MLP), and the Duke Institute for Brain Studies (MLP). We thank Karli Watson for help in training the animals and Arwen Long for comments on the manuscript.

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1. Stephens DW, Krebs JR. Foraging theory. Princeton, N.J: Princeton University Press; 1986.

- 2. Gittins JC. Multi-armed bandit allocation indices. Chichester; New York: Wiley; 1989.

- 3. Whittle P. Restless bandits: activity allocation in a changing world. J Appl Probab. 1988:287–298.

- 4. Berry DA, Fristedt B. Bandit problems: sequential allocation of experiments. London; New York: Chapman and Hall; 1985.

- 5. Daw ND, O’Doherty JP, Dayan P, Seymour B, Dolan RJ. Cortical substrates for exploratory decisions in humans. Nature. 2006;441:876–879. doi: 10.1038/nature04766.

- 6. Wittmann BC, Daw ND, Seymour B, Dolan RJ. Striatal activity underlies novelty-based choice in humans. Neuron. 2008;58:967–973. doi: 10.1016/j.neuron.2008.04.027.

- 7. Walton ME, Devlin JT, Rushworth MF. Interactions between decision making and performance monitoring within prefrontal cortex. Nat Neurosci. 2004;7:1259–1265. doi: 10.1038/nn1339.

- 8. Yoshida W, Ishii S. Resolution of uncertainty in prefrontal cortex. Neuron. 2006;50:781–789. doi: 10.1016/j.neuron.2006.05.006.

- 9. Shima K, Tanji J. Role for cingulate motor area cells in voluntary movement selection based on reward. Science. 1998;282:1335–1338. doi: 10.1126/science.282.5392.1335.

- 10. Kennerley SW, Walton ME, Behrens TE, Buckley MJ, Rushworth MF. Optimal decision making and the anterior cingulate cortex. Nat Neurosci. 2006;9:940–947. doi: 10.1038/nn1724.

- 11. Hayden BY, Nair AC, McCoy AN, Platt ML. Posterior cingulate cortex mediates outcome-contingent allocation of behavior. Neuron. 2008;60:19–25. doi: 10.1016/j.neuron.2008.09.012.

- 12. Quilodran R, Rothe M, Procyk E. Behavioral shifts and action valuation in the anterior cingulate cortex. Neuron. 2008;57:314–325. doi: 10.1016/j.neuron.2007.11.031.

- 13. Rudebeck PH, Behrens TE, Kennerley SW, Baxter MG, Buckley MJ, Walton ME, Rushworth MF. Frontal cortex subregions play distinct roles in choices between actions and stimuli. J Neurosci. 2008;28:13775–13785. doi: 10.1523/JNEUROSCI.3541-08.2008.

- 14. Kobayashi Y, Amaral DG. Macaque monkey retrosplenial cortex: II. Cortical afferents. J Comp Neurol. 2003;466:48–79. doi: 10.1002/cne.10883.

- 15. Vogt BA, Finch DM, Olson CR. Functional heterogeneity in cingulate cortex: the anterior executive and posterior evaluative regions. Cereb Cortex. 1992;2:435–443. doi: 10.1093/cercor/2.6.435-a.

- 16. Vogt BA, Gabriel M, Society for Neuroscience. Neurobiology of cingulate cortex and limbic thalamus: a comprehensive handbook. Boston: Birkhäuser; 1993.

- 17. Vogt BA, Gabriel M, Vogt LJ, Poremba A, Jensen EL, Kubota Y, Kang E. Muscarinic receptor binding increases in anterior thalamus and cingulate cortex during discriminative avoidance learning. J Neurosci. 1991;11:1508–1514. doi: 10.1523/JNEUROSCI.11-06-01508.1991.

- 18. McCoy AN, Crowley JC, Haghighian G, Dean HL, Platt ML. Saccade reward signals in posterior cingulate cortex. Neuron. 2003;40:1031–1040. doi: 10.1016/s0896-6273(03)00719-0.

- 19. McCoy AN, Platt ML. Risk-sensitive neurons in macaque posterior cingulate cortex. Nat Neurosci. 2005;8:1220–1227. doi: 10.1038/nn1523.

- 20. Dean HL, Platt ML. Allocentric spatial referencing of neuronal activity in macaque posterior cingulate cortex. J Neurosci. 2006;26:1117–1127. doi: 10.1523/JNEUROSCI.2497-05.2006.

- 21. Dean HL, Crowley JC, Platt ML. Visual and saccade-related activity in macaque posterior cingulate cortex. J Neurophysiol. 2004;92:3056–3068. doi: 10.1152/jn.00691.2003.

- 22. Barraclough DJ, Conroy ML, Lee D. Prefrontal cortex and decision making in a mixed-strategy game. Nat Neurosci. 2004;7:404–410. doi: 10.1038/nn1209.

- 23. Gabriel M, Foster K, Orona E. Interaction of laminae of the cingulate cortex with the anteroventral thalamus during behavioral learning. Science. 1980;208:1050–1052. doi: 10.1126/science.7375917.

- 24. Gabriel M, Sparenborg SP, Stolar N. Hippocampal control of cingulate cortical and anterior thalamic information processing during learning in rabbits. Exp Brain Res. 1987;67:131–152. doi: 10.1007/BF00269462.

- 25. Gabriel M, Kubota Y, Sparenborg S, Straube K, Vogt BA. Effects of cingulate cortical lesions on avoidance learning and training-induced unit activity in rabbits. Exp Brain Res. 1991;86:585–600. doi: 10.1007/BF00230532.

- 26. Brainard DH. The Psychophysics Toolbox. Spat Vis. 1997;10:433–436.

- 27. Cornelissen FW, Peters EM, Palmer J. The Eyelink Toolbox: eye tracking with MATLAB and the Psychophysics Toolbox. Behav Res Methods Instrum Comput. 2002;34:613–617. doi: 10.3758/bf03195489.
