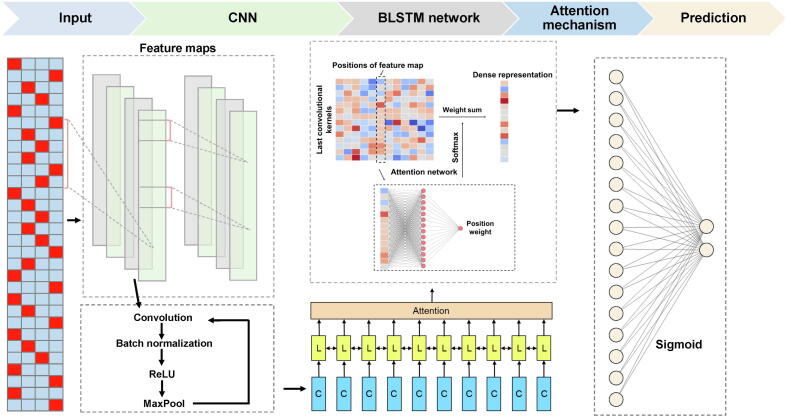

Figure 2.

Deep learning framework of NetBCE

NetBCE is built on a ten-layer deep learning framework. Epitope sequences were encoded as binary matrices and taken as input. A CNN module was then used for feature extraction and representation. The ReLU activation function was applied to the convolution results, so that positive values remain unchanged and negative values are set to 0. A BLSTM layer was added to retain features over a long duration and capture the combinations or dependencies among residues at different positions. A fully connected layer was used to integrate the outputs of the attention layer and learn the nonlinear relationship. The output layer consisted of one sigmoid neuron that calculates a prediction score for a given peptide. The sigmoid function is also referred to as a squashing function because its domain is the set of all real numbers and its range is (0, 1). CNN, convolutional neural network; ReLU, rectified linear unit; BLSTM, bidirectional long short-term memory; L, layer; C, convolution.
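The three elementwise operations named in the caption (binary encoding, ReLU, and the sigmoid output) can be illustrated with a minimal sketch. This is not the NetBCE implementation: the 20-letter alphabet, its ordering, and the function names `one_hot_encode`, `relu`, and `sigmoid` are assumptions made for illustration only, and the actual encoding used by NetBCE may differ (for example, it may include a padding symbol).

```python
import math

# Assumed alphabet: the 20 standard amino acids in an arbitrary fixed order.
# The ordering actually used by NetBCE is not given in the caption.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def one_hot_encode(peptide):
    """Encode a peptide as a binary matrix with one row per residue."""
    matrix = []
    for residue in peptide:
        row = [0] * len(AMINO_ACIDS)
        row[AMINO_ACIDS.index(residue)] = 1  # raises ValueError on unknown residues
        matrix.append(row)
    return matrix


def relu(x):
    """Keep positive values unchanged; set negative values to 0."""
    return x if x > 0 else 0.0


def sigmoid(x):
    """Squash any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


encoded = one_hot_encode("ACD")   # 3 residues -> 3 rows of 20 binary values
score = sigmoid(relu(-2.0))       # relu gives 0.0, sigmoid(0.0) gives 0.5
```

In the full model the sigmoid is applied once, at the output neuron, so the resulting score can be read as a value between 0 and 1 for the input peptide.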