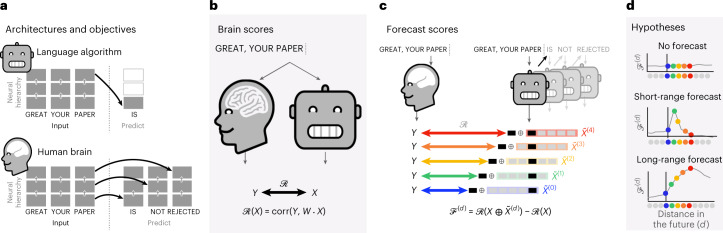

Fig. 1. Experimental approach.

a, Deep language algorithms are typically trained to predict words from their close contexts. According to predictive coding theory, the brain instead makes (1) long-range and (2) hierarchical predictions. b, To test this hypothesis, we first extracted the fMRI signals of 304 individuals, each listening to ≈26 min of short stories (Y), as well as the activations of a deep language algorithm (X) given the same stories as input. We then quantified the similarity between X and Y with a ‘brain score’: a Pearson correlation after an optimal linear projection W (Methods). c, To test whether adding representations of future words (or predicted words; Supplementary Fig. 4) improves this correlation, we concatenated (⊕) the network’s activations (X, depicted here as a black rectangle) with the activations of a ‘forecast window’ (depicted here as a coloured rectangle). We used PCA to reduce the dimensionality of the forecast window down to the dimensionality of X. Finally, the forecast score quantifies the gain in brain score obtained by enhancing the activations of the language algorithm with this forecast window. We repeated this analysis with variably distant windows (distance d; Methods). d, Top, a flat forecast score across distances indicates that forecast representations do not make the algorithm more similar to the brain. Bottom, by contrast, a forecast score peaking at d > 1 would indicate that the model lacks brain-like forecast. The peak of the forecast score indicates how far into the future the algorithm would need to forecast representations to be most similar to the brain.
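The pipeline in panels b and c can be sketched in a few lines of NumPy. This is a minimal illustration, not the procedure from the Methods. It assumes ridge regression as the linear projection W, a single train/test split instead of full cross-validation, and PCA fitted on all samples for brevity. The function names (`brain_score`, `pca_reduce`, `forecast_score`), the `alpha` penalty and the 80/20 split are hypothetical choices made for this example.

```python
import numpy as np


def ridge_fit(X, Y, alpha=1.0):
    """Closed-form ridge solution W = (X^T X + alpha I)^-1 X^T Y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ Y)


def pearson_columns(A, B):
    """Pearson correlation between matching columns of A and B."""
    A = A - A.mean(axis=0)
    B = B - B.mean(axis=0)
    denom = np.linalg.norm(A, axis=0) * np.linalg.norm(B, axis=0)
    return (A * B).sum(axis=0) / denom


def brain_score(X, Y, alpha=1.0, n_train=None):
    """Mean held-out Pearson correlation between X @ W and Y.

    X: (n_samples, n_features) model activations.
    Y: (n_samples, n_voxels) brain signals.
    Assumption: one contiguous train/test split (first 80% for fitting).
    """
    n = X.shape[0]
    n_train = n_train or int(0.8 * n)
    x_mean = X[:n_train].mean(axis=0)
    y_mean = Y[:n_train].mean(axis=0)
    W = ridge_fit(X[:n_train] - x_mean, Y[:n_train] - y_mean, alpha)
    pred = (X[n_train:] - x_mean) @ W
    return pearson_columns(pred, Y[n_train:]).mean()


def pca_reduce(Z, k):
    """Project Z onto its first k principal components (via SVD)."""
    Zc = Z - Z.mean(axis=0)
    _, _, Vt = np.linalg.svd(Zc, full_matrices=False)
    return Zc @ Vt[:k].T


def forecast_score(X, X_future, Y, alpha=1.0):
    """Gain in brain score from concatenating a forecast window to X.

    X_future holds the activations of the forecast window at one distance d.
    It is reduced to the dimensionality of X before concatenation.
    """
    window = pca_reduce(X_future, X.shape[1])
    enhanced = np.hstack([X, window])
    return brain_score(enhanced, Y, alpha) - brain_score(X, Y, alpha)
```

Calling `forecast_score` once per distance d, each time with the forecast window built at that distance, gives the curve drawn in panel d. A flat curve corresponds to the top case and a peak at d > 1 to the bottom case.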