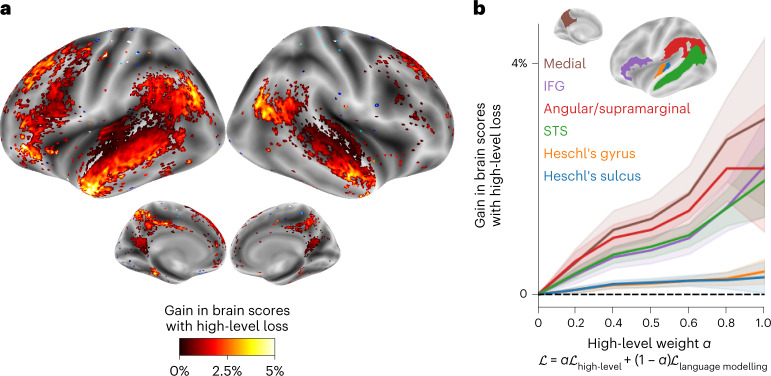

Fig. 5. Gain in brain score when fine-tuning GPT-2 with a mixture of language modelling and high-level prediction.

a, Gain in brain scores between GPT-2 fine-tuned with language modelling plus high-level prediction (with high-level weight α = 0.5) and GPT-2 fine-tuned with language modelling alone. Only the voxels with a significant gain are displayed (P < 0.05 with a two-sided Wilcoxon rank-sum test after FDR correction for multiple comparisons). b, Brain score gain as a function of the high-level weight α in the loss (equation (8)), from full language modelling (left, α = 0) to full high-level prediction (right, α = 1). Gains were averaged across voxels within six regions of interest (see Methods for the parcellation and Supplementary Fig. 7 for the other regions in the brain). Scores were averaged across individuals, and we display the 95% CIs across individuals (n = 304).
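
As an illustration of how the weight α in panel b moves between the two objectives, the following minimal sketch assumes that equation (8) is a convex combination of the two losses. That form is an assumption here, chosen because it matches the endpoints described above (α = 0 gives language modelling only, α = 1 gives high-level prediction only). The function name `mixed_loss` and its arguments are hypothetical and not taken from the paper.

```python
def mixed_loss(lm_loss: float, high_level_loss: float, alpha: float) -> float:
    """Combine two scalar losses with a high-level weight alpha in [0, 1].

    Assumed form (not confirmed by the caption itself):
        loss = (1 - alpha) * lm_loss + alpha * high_level_loss
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # alpha = 0 keeps only the language-modelling term;
    # alpha = 1 keeps only the high-level prediction term.
    return (1.0 - alpha) * lm_loss + alpha * high_level_loss
```

Under this assumption, the setting used in panel a (α = 0.5) weights the two terms equally.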