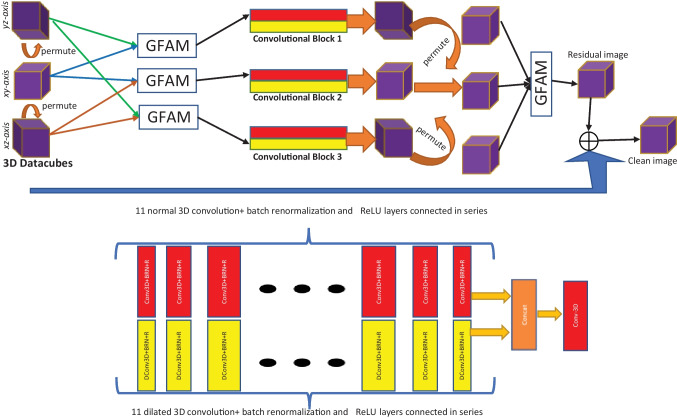

Fig. 1.

The architecture of the proposed generator model. The 3D data patches are permuted along the three dimensions to obtain three data patches such that , and . The global feature attention module (GFAM) blocks can accept an arbitrary number of inputs (see Fig. 2). The and arrows coming out of the three permuted 3D patches are fed as inputs to the three GFAM blocks. The top row shows the overall model, while the bottom row shows expanded views of the red and yellow convolutional blocks. The output features of the convolutional blocks are permuted back to their original dimension order (xyz) and fused into a single 3D image by the final GFAM block.
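The permute, process, un-permute and fuse data flow in the caption can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the authors' implementation. The three axis orderings (xyz, yzx, zxy) are an assumption, because the caption's permutation definitions are not recoverable. The per-branch functions are hypothetical stand-ins for the convolutional branches. The mean in `fuse` is a placeholder for the final GFAM block, whose internals are described in Fig. 2 and not here.

```python
import numpy as np

# ASSUMPTION: cyclic permutations of the (x, y, z) axes. The caption does not
# state which orderings the paper actually uses.
PERMS = [(0, 1, 2), (1, 2, 0), (2, 0, 1)]


def permute_patch(patch):
    """Return the three axis-permuted views of a 3D patch."""
    return [np.transpose(patch, p) for p in PERMS]


def unpermute(views):
    """Undo each permutation so every view is back in xyz order.

    np.argsort(p) is the inverse of the axis permutation p.
    """
    return [np.transpose(v, np.argsort(p)) for v, p in zip(views, PERMS)]


def fuse(views):
    """Hypothetical fusion: a plain mean, standing in for the final GFAM block."""
    return np.mean(np.stack(views), axis=0)


def generator_sketch(patch, branch_fns):
    """Permute, run one (hypothetical) branch per view, un-permute, then fuse."""
    views = permute_patch(patch)
    processed = [fn(v) for fn, v in zip(branch_fns, views)]
    return fuse(unpermute(processed))
```

With identity branches, the output reproduces the input patch. This confirms that each permutation is undone before fusion, so all three branch outputs are aligned voxel by voxel in the xyz frame.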