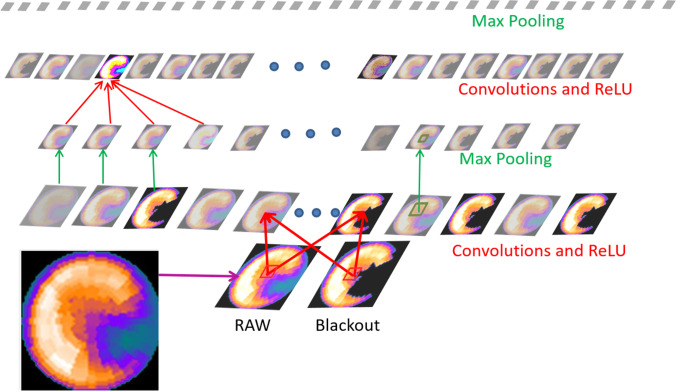

Fig. 5.

Example of the inner workings of a convolutional neural network. Shown are the outputs of each layer of a typical convolutional network architecture applied to two input images: a raw myocardial perfusion polar map and a blackout polar map obtained after comparison to normal limits, emulated from Betancur et al. [32]. Small matrix operators (kernels) are convolved with each image to extract the desired features. Two further operations are shown: the rectified linear unit (ReLU), which thresholds each value at zero before passing it to the next layer, and max pooling, which keeps the maximum of each 2 × 2 image patch, reducing the image dimensions. Each rectangular image is a feature map corresponding to the output for one of the learned features, detected at each image position. Information flows bottom-up, and a score is computed for each image class at the output. Illustration adapted from LeCun et al. [33].
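The three operations named in the caption can be illustrated with a minimal sketch in plain Python. This is not the network shown in the figure: the 5 × 5 toy image, the 2 × 2 edge kernel, and the function names `convolve_valid`, `relu`, and `max_pool_2x2` are all assumptions chosen for illustration, and the "convolution" is the unflipped sliding-window product commonly used in deep learning libraries.

```python
def convolve_valid(image, kernel):
    """Slide the kernel over the image (no padding) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def relu(feature_map):
    """Rectified linear unit: replace every negative value with zero."""
    return [[max(0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Keep the maximum of each non-overlapping 2 x 2 patch (stride 2)."""
    rows, cols = len(feature_map), len(feature_map[0])
    return [
        [
            max(feature_map[r][c], feature_map[r][c + 1],
                feature_map[r + 1][c], feature_map[r + 1][c + 1])
            for c in range(0, cols - 1, 2)
        ]
        for r in range(0, rows - 1, 2)
    ]


# Hypothetical toy input: a bright vertical band on a dark background.
toy_image = [[0, 0, 1, 1, 0] for _ in range(5)]

# Hypothetical kernel that responds to dark-to-bright transitions (left to right).
edge_kernel = [[-1, 1],
               [-1, 1]]

feature_map = convolve_valid(toy_image, edge_kernel)  # 4 x 4 feature map
activated = relu(feature_map)                         # negatives set to zero
pooled = max_pool_2x2(activated)                      # 2 x 2 after pooling
```

In this toy case the convolution responds positively at the left edge of the band and negatively at the right edge, ReLU discards the negative response, and max pooling halves each dimension while retaining the strongest response in each patch. In a trained network the kernel values are learned from data rather than chosen by hand.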