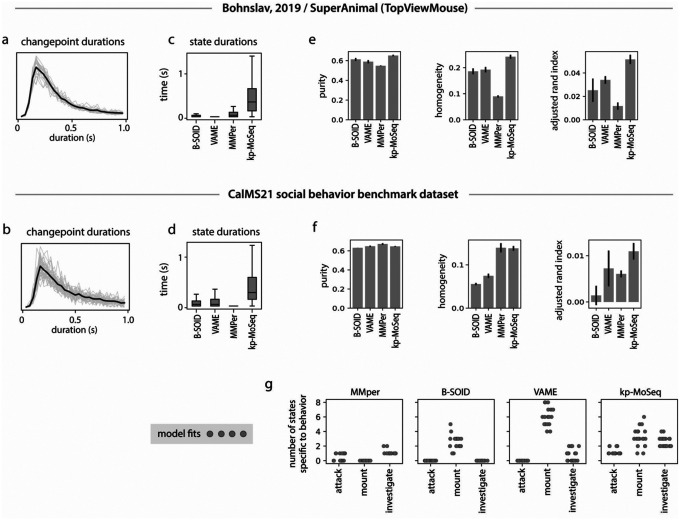

Extended Data Figure 7: Supervised behavior benchmark.

a-d) Keypoint-MoSeq captures sub-second syllable structure in two benchmark datasets. a,b) Distribution of inter-changepoint intervals for the open field dataset (Bohnslav, 2019) (a) and the CalMS21 social behavior benchmark (b), shown for each full dataset (black lines) and for each recording session (gray lines). c,d) Distribution of state durations from each behavior segmentation method. e-g) Keypoint-MoSeq matches or outperforms other methods in the agreement between human annotations and unsupervised behavior labels. e,f) Three different similarity measures applied to the output of each unsupervised behavior analysis method (see Methods). g) Number of unsupervised states specific to each human-annotated behavior in the CalMS21 dataset, shown for 20 independent fits of each unsupervised method. A state was defined as specific to a behavior if > 50% of its frames bore that annotation.
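
As a rough illustration of the specificity criterion in panel g, the sketch below counts, for a single fit, how many unsupervised states are specific to each annotated behavior. It assumes one state label and one human annotation per frame, aligned in two equal-length arrays. The function name `count_specific_states`, the `threshold` parameter, and the toy labels are hypothetical and are not taken from the paper's code.

```python
import numpy as np

def count_specific_states(states, annotations, threshold=0.5):
    """Count unsupervised states that are specific to each annotated behavior.

    A state counts as specific to a behavior when strictly more than
    `threshold` of the frames assigned to that state carry that annotation.
    """
    states = np.asarray(states)
    annotations = np.asarray(annotations)
    if states.shape != annotations.shape:
        raise ValueError("states and annotations must have the same length")

    # Start every annotated behavior at zero specific states.
    counts = {label.item(): 0 for label in np.unique(annotations)}

    for state in np.unique(states):
        # Annotations of all frames assigned to this state.
        labels, n_frames = np.unique(annotations[states == state], return_counts=True)
        top = np.argmax(n_frames)
        # Strict inequality: an exact 50/50 split is not specific.
        if n_frames[top] / n_frames.sum() > threshold:
            counts[labels[top].item()] += 1
    return counts

# Toy example (made-up labels): state 2 is split evenly, so it is not specific.
toy_states = [0, 0, 0, 1, 1, 1, 1, 2, 2]
toy_annotations = ["attack", "attack", "other",
                   "mount", "mount", "mount", "other",
                   "attack", "other"]
specific_counts = count_specific_states(toy_states, toy_annotations)
```

Repeating this count over independent fits would give one value per behavior per fit, which is the kind of quantity summarized in panel g.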