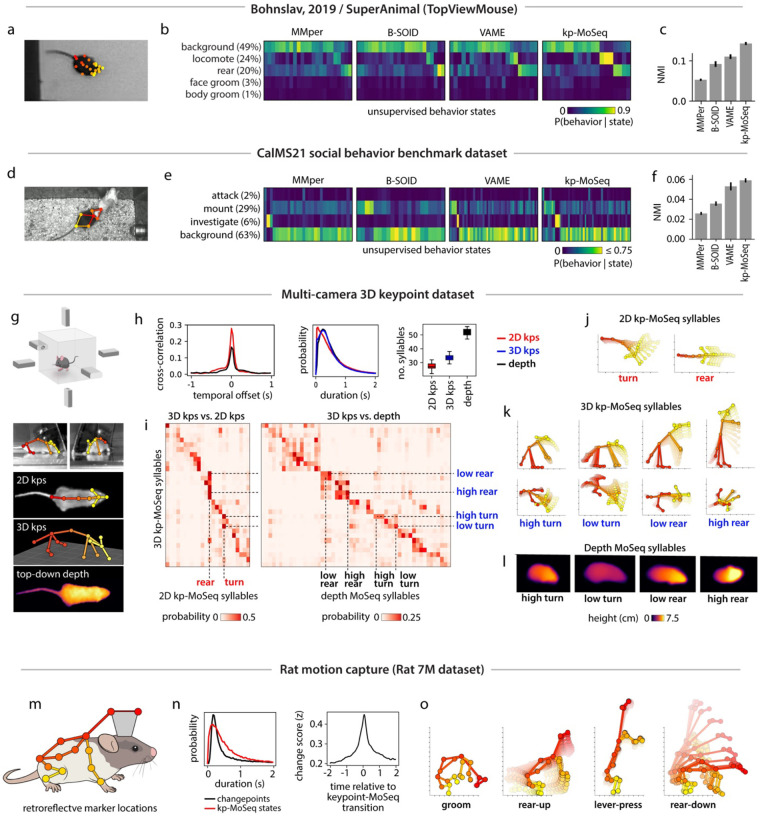

Figure 6: Keypoint-MoSeq generalizes across pose representations, behaviors, and rodent species.

a) Example frame from a benchmark open field dataset (Bohnslav, 2019). b) Overall frequency of each human-annotated behavior (as %) and conditional frequencies across states inferred from unsupervised analysis of 2D keypoints. c) Normalized mutual information (NMI, see Methods) between human annotations and unsupervised behavior labels from each method. d) Example frame from the CalMS21 social behavior benchmark dataset, showing 2D keypoint annotations for the resident mouse. e-f) Overlap between human annotations and unsupervised behavior states inferred from 2D keypoint tracking of the resident mouse, as in (b-c). g) Multi-camera arena for simultaneous recording of 3D keypoints (3D kps), 2D keypoints (2D kps) and depth videos. h) Comparison of model outputs across tracking modalities. 2D and 3D keypoint data were modeled using keypoint-MoSeq, and depth data were modeled using original MoSeq. Left: cross-correlation of transition rates, comparing 3D keypoints to 2D keypoints and to depth, respectively. Middle: distribution of syllable durations. Right: number of states with frequency > 0.5%. Boxplots represent the distribution of state counts across 20 independent runs of each model. i) Probability of syllables inferred from 2D keypoints (left) or depth (right) during each 3D keypoint-based syllable. j-l) Average pose trajectories for the syllables marked in (i). k) 3D trajectories are plotted in side view (first row) and top-down view (second row). l) Average pose (as depth image) 100 ms after syllable onset. m) Location of markers for rat motion capture. n) Left: Average keypoint change score (z-scored) aligned to keypoint-MoSeq transitions. Right: Duration distributions for keypoint-MoSeq states and inter-changepoint intervals. o) Average pose trajectories for example syllables learned from rat motion capture data.
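
For readers unfamiliar with the NMI score reported in panels (c) and (f), the following is a minimal sketch of how such a score can be computed from two frame-aligned label sequences. It is illustrative only: the function names are hypothetical, and normalizing by the arithmetic mean of the two entropies is an assumption (other normalizations exist); the Methods define the variant actually used.

```python
from collections import Counter
from math import log


def entropy(labels):
    """Shannon entropy (in nats) of a sequence of discrete labels."""
    n = len(labels)
    return -sum((c / n) * log(c / n) for c in Counter(labels).values())


def normalized_mutual_info(labels_a, labels_b):
    """NMI between two frame-aligned label sequences.

    Assumption: the mutual information is normalized by the arithmetic
    mean of the two entropies. This may differ from the paper's Methods.
    """
    if len(labels_a) != len(labels_b):
        raise ValueError("label sequences must have the same length")
    n = len(labels_a)
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    joint = Counter(zip(labels_a, labels_b))
    # Mutual information: sum over co-occurring label pairs of
    # p(a, b) * log(p(a, b) / (p(a) * p(b))).
    mi = sum(
        (c / n) * log(c * n / (count_a[a] * count_b[b]))
        for (a, b), c in joint.items()
    )
    denom = 0.5 * (entropy(labels_a) + entropy(labels_b))
    # If both sequences are constant, both entropies are zero; treat the
    # labelings as trivially identical.
    return mi / denom if denom > 0 else 1.0


# Toy data (not from the paper): human annotations vs. syllable labels.
human = ["rear", "rear", "walk", "walk", "groom", "groom"]
syllables = [3, 3, 7, 7, 1, 1]
nmi_matched = normalized_mutual_info(human, syllables)

# Two labelings that carry no information about each other.
nmi_independent = normalized_mutual_info([0, 0, 1, 1], [0, 1, 0, 1])
```

Under this normalization the score is 1 when the unsupervised states map one-to-one onto the human annotations, regardless of how the states are named, and 0 when the two labelings are statistically independent.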