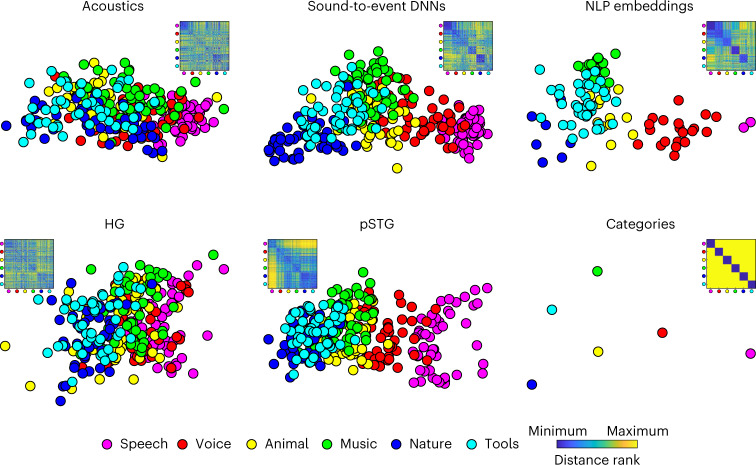

Fig. 2. Visualizing acoustic-to-semantic representations in computational models and in the brain.

Top, metric MDS of the distance between stimuli in acoustic models, sound-to-event DNNs and NLP models (MDS performed on the standardized distance averaged across all model components; for example, all layer-specific distances across all DNNs). Bottom, metric MDS of the distance between stimuli in the training-set fMRI data (averaged across CV folds and participants) and in the category model. All MDS solutions were Procrustes-rotated to the pSTG MDS (dimensions considered, N = 60; only translation and rotation considered). For each MDS solution, we also show the ranked dissimilarity matrix. Note the spatial overlap of category exemplars in the category model, which postulates zero within-category distances, and the corresponding graded representation of category exemplars in the other models. Note also how pSTG captures the intermediate step of the acoustic-to-semantic transformation emphasized in sound-to-event DNNs. See Supplementary Figs. 2 and 3 for the MDS representation of each model and ROI. fMRI participants, N = 5.
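
The two steps named in this caption, an MDS embedding of a distance matrix and a Procrustes alignment restricted to translation and rotation, can be illustrated with a minimal NumPy sketch. This is not the authors' pipeline: it assumes classical (Torgerson) MDS as a stand-in for the metric MDS variant used in the paper, and the function names `pairwise_distances`, `classical_mds` and `procrustes_rotate`, as well as the random toy data, are hypothetical.

```python
import numpy as np


def pairwise_distances(points):
    """Euclidean distance matrix between the rows of `points`."""
    diff = points[:, None, :] - points[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def classical_mds(dist, n_dims):
    """Classical (Torgerson) MDS: embed a distance matrix in `n_dims` dimensions.

    Assumption: used here as a simple stand-in for metric MDS.
    """
    n = dist.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    # Double-centre the squared distances to obtain a Gram matrix.
    gram = -0.5 * centering @ (dist ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(gram)
    # Keep the n_dims largest eigenvalues (eigh returns them in ascending order).
    order = np.argsort(eigvals)[::-1][:n_dims]
    scales = np.sqrt(np.clip(eigvals[order], 0.0, None))
    return eigvecs[:, order] * scales


def procrustes_rotate(source, target):
    """Align `source` to `target` using translation and rotation only.

    No scaling and no reflection are applied (Kabsch-style solution).
    """
    src_mean = source.mean(axis=0)
    tgt_mean = target.mean(axis=0)
    src_c = source - src_mean
    tgt_c = target - tgt_mean
    u, _, vt = np.linalg.svd(src_c.T @ tgt_c)
    # Flip the last singular direction if needed so that det(rotation) = +1.
    sign = np.sign(np.linalg.det(u @ vt))
    u[:, -1] *= sign
    rotation = u @ vt
    return src_c @ rotation + tgt_mean


# Toy data (hypothetical): 20 "stimuli" in a 3-dimensional space.
rng = np.random.default_rng(0)
points = rng.normal(size=(20, 3))
dist = pairwise_distances(points)
embedding = classical_mds(dist, n_dims=3)

# Build a target that is a rotated and shifted copy of `points`.
q, _ = np.linalg.qr(rng.normal(size=(3, 3)))
if np.linalg.det(q) < 0:
    q[:, 0] *= -1  # make it a proper rotation
target = points @ q + np.array([1.0, -2.0, 0.5])
aligned = procrustes_rotate(points, target)
```

In this sketch, `target` plays the role that the pSTG solution plays in the figure: each other configuration would be rotated and translated onto it before plotting. Excluding scaling and reflection keeps the inter-stimulus distances of each solution unchanged, so the alignment affects only orientation and position.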