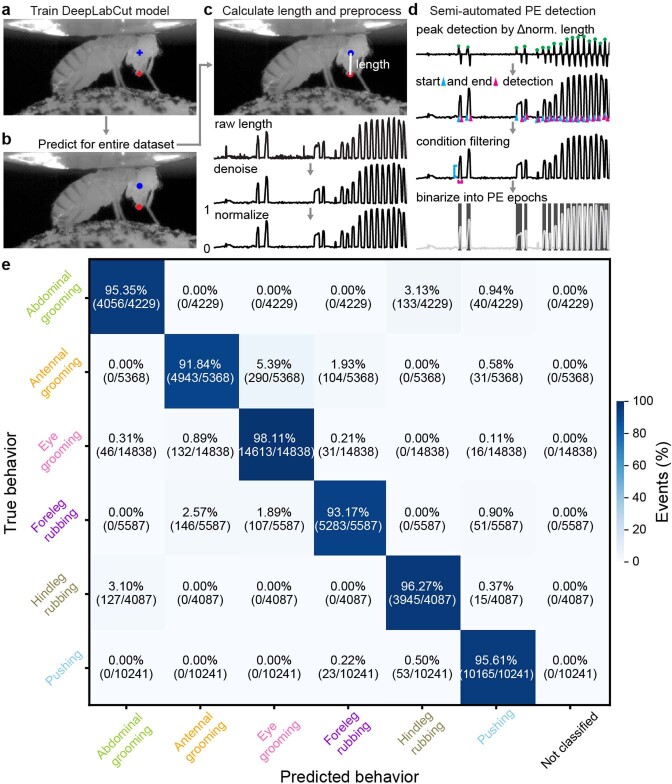

Extended Data Fig. 1. Semi-automated tracking of proboscis extensions, and the accuracy of the behavioral classifier.

We detected proboscis extensions using side-view camera images. (a) First, we trained a deep neural network model with manual annotations of landmarks on the ventral eye (blue cross) and distal proboscis tip (red cross). (b) Then we applied the trained model to estimate these locations throughout the entire dataset. (c) Proboscis extension length was calculated as the denoised and normalized distance between landmarks. (d) Using these data, we performed semi-automated detection of PE epochs by first identifying peaks from normalized proboscis extension lengths. Then we detected the start (cyan triangle) and end (magenta triangle) of these events. We removed false-positive detections by thresholding the amplitude (cyan line) and duration (magenta line) of events. Finally, we generated a binary trace of PE epochs (shaded regions). (e) A confusion matrix quantifies the accuracy of behavioral state classification using 10-fold, stratified cross-validation of a histogram gradient boosting classifier. Walking and resting are not included in this evaluation because they are predicted using spherical treadmill rotation data. The percentage of events in each category (‘predicted’ behavior versus ground-truth, manually-labelled ‘true’ behavior) is color-coded.
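The processing in panels (c) and (d) can be illustrated with a minimal sketch. It is not the pipeline used to produce this figure: the 3-point median filter, the min-max normalization, the function names, and all threshold values (`baseline`, `min_amplitude`, `min_duration`) are assumptions chosen for illustration, since the legend does not specify them. The sketch finds local peaks, walks outward from each peak to locate the event start and end, discards events that fail the amplitude or duration threshold, and returns a binary trace of PE epochs.

```python
from statistics import median


def normalize(distances):
    """Denoise with a 3-point median filter, then min-max scale to [0, 1].

    Both the filter and the scaling are assumed choices, not taken from the legend.
    """
    n = len(distances)
    smoothed = [median(distances[max(0, i - 1):min(n, i + 2)]) for i in range(n)]
    lo, hi = min(smoothed), max(smoothed)
    if hi == lo:
        return [0.0] * n
    return [(d - lo) / (hi - lo) for d in smoothed]


def detect_pe_epochs(length, baseline=0.2, min_amplitude=0.5, min_duration=3):
    """Return (binary_trace, epochs); epochs are inclusive (start, end) frame indices.

    All three thresholds are hypothetical values for illustration.
    """
    n = len(length)
    epochs = []
    for p in range(1, n - 1):
        # Step 1: identify a local peak in the normalized extension length.
        if not (length[p] > length[p - 1] and length[p] >= length[p + 1]):
            continue
        # Step 2: reject peaks below the amplitude threshold.
        if length[p] < min_amplitude:
            continue
        # Step 3: walk outward from the peak to find the event start and end.
        start = p
        while start > 0 and length[start - 1] > baseline:
            start -= 1
        end = p
        while end < n - 1 and length[end + 1] > baseline:
            end += 1
        # Step 4: reject events shorter than the duration threshold.
        if end - start + 1 < min_duration:
            continue
        # Several peaks inside one event yield the same bounds; keep one copy.
        if epochs and epochs[-1] == (start, end):
            continue
        epochs.append((start, end))

    # Step 5: build the binary trace of PE epochs.
    binary = [0] * n
    for start, end in epochs:
        for i in range(start, end + 1):
            binary[i] = 1
    return binary, epochs


trace = [0.0, 0.1, 0.6, 0.9, 0.7, 0.1, 0.0, 0.3, 0.1, 0.0, 0.8, 0.1]
binary_trace, pe_epochs = detect_pe_epochs(trace)
```

In this toy trace, the bump at frame 7 fails the amplitude threshold and the single-frame spike at frame 10 fails the duration threshold, so only the event spanning frames 2 to 4 is kept. In a semi-automated workflow, the resulting epochs would still be reviewed manually.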