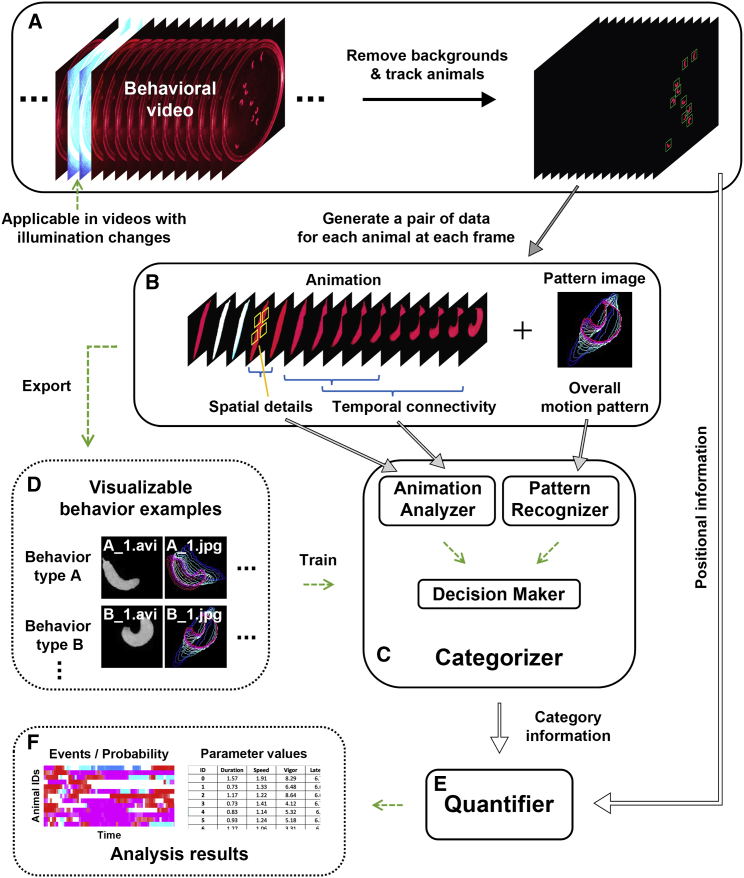

Figure 1. The pipeline of LabGym

(A) Static backgrounds are removed in each video frame and individual animals are tracked.
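As a rough illustration of step (A), the sketch below estimates a static background as the per-pixel median over time and flags pixels that deviate from it as animal foreground. The median estimator, the threshold value, and the function names `estimate_background`, `foreground_mask`, and `centroid` are assumptions made for this example; the legend does not specify how LabGym removes backgrounds or tracks animals.

```python
from statistics import median

# Hypothetical helpers: frames are 2D lists of grayscale intensities.

def estimate_background(frames):
    """Per-pixel median over time, a common estimate of a static background."""
    height, width = len(frames[0]), len(frames[0][0])
    return [[median(frame[y][x] for frame in frames) for x in range(width)]
            for y in range(height)]

def foreground_mask(frame, background, threshold=30):
    """True where a pixel differs from the background by more than threshold."""
    return [[abs(pixel - bg) > threshold for pixel, bg in zip(row, bg_row)]
            for row, bg_row in zip(frame, background)]

def centroid(mask):
    """Mean (x, y) of the foreground pixels, or None if the mask is empty."""
    points = [(x, y) for y, row in enumerate(mask)
              for x, on in enumerate(row) if on]
    if not points:
        return None
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)
```

Following the centroid from frame to frame gives a minimal single-animal track; tracking several animals would additionally require separating and matching foreground regions, which this sketch does not attempt.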

(B) At each frame of the video, LabGym generates a pair of data for each animal: a short animation spanning a user-defined time window, and a paired pattern image representing the animal's movement pattern within that animation.
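The pairing in step (B) can be sketched as a sliding window over per-frame foreground masks. In this sketch the pattern image stores, for each pixel, the 1-based step of the latest frame in the window in which the animal covered it; that encoding, the choice to emit pairs only once a full window is available, and the names `make_pairs` and `build_pattern_image` are assumptions for illustration, not LabGym's actual representation.

```python
# Hypothetical helpers: each mask is a 2D list of booleans for one animal.

def build_pattern_image(animation):
    """Collapse a window of masks into one image encoding when each pixel was covered."""
    height, width = len(animation[0]), len(animation[0][0])
    pattern = [[0] * width for _ in range(height)]
    for step, mask in enumerate(animation, start=1):
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    pattern[y][x] = step  # later frames overwrite earlier ones
    return pattern

def make_pairs(masks, window):
    """Return one (animation, pattern image) pair per frame that ends a full window."""
    pairs = []
    for end in range(window, len(masks) + 1):
        animation = masks[end - window:end]
        pairs.append((animation, build_pattern_image(animation)))
    return pairs
```

For a video of `n` frames and a window of `w` frames, this yields `n - w + 1` pairs, each covering the `w` frames that end at the current frame.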

(C) The Categorizer uses both the animations and pattern images to categorize behaviors during the user-defined time window (i.e., the duration of the animation). The Categorizer comprises three submodules: the “Animation Analyzer,” the “Pattern Recognizer,” and the “Decision Maker.” The Animation Analyzer analyzes all spatiotemporal details in the animation for each animal, whereas the Pattern Recognizer evaluates the overall movement patterns in the paired pattern image. The Decision Maker integrates the outputs from both the Animation Analyzer and the Pattern Recognizer to determine which user-defined behavioral category is present in the animation for each animal.
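The two-branch structure in step (C) can be illustrated with a toy Decision Maker that merges one score vector from each branch. The legend does not say how the outputs are integrated, so the weighted average used here is only a stand-in, and the function `decide`, its `animation_weight` parameter, and the example category names are hypothetical.

```python
def decide(animation_scores, pattern_scores, categories, animation_weight=0.5):
    """Combine per-category scores from both branches and pick the best category.

    A weighted average stands in for whatever integration the real
    Decision Maker performs (an assumption of this sketch).
    """
    if not (len(animation_scores) == len(pattern_scores) == len(categories)):
        raise ValueError("score vectors and categories must have equal length")
    combined = [animation_weight * a + (1.0 - animation_weight) * p
                for a, p in zip(animation_scores, pattern_scores)]
    best = max(range(len(categories)), key=combined.__getitem__)
    return categories[best], combined

# Example: the two branches disagree, and the combined score settles it.
label, scores = decide([0.7, 0.3], [0.2, 0.8], ["crawl", "roll"])
```

The point of the sketch is the data flow: each animation and pattern image pair produces two independent assessments, and a single category per animal per time window comes out of their combination.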

(D) The animations and pattern images can be exported to build visualizable, sharable behavior examples for training the Categorizer.

(E and F) After behavioral categorization, the quantification module (“Quantifier”) uses information from the behavioral categories and the animal foregrounds to compute specific quantitative measurements for different aspects of each behavior.
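To make the Quantifier step concrete, the sketch below derives three example measurements from per-frame behavior labels and per-frame animal centroids: the number of bouts, the total duration, and the distance traveled while the behavior is ongoing. The specific measurements, the function name `quantify`, and the input layout are assumptions chosen for illustration; the legend does not list which quantities LabGym computes.

```python
from itertools import groupby
from math import hypot

def quantify(labels, centroids, behavior, fps):
    """Summarize one behavior from per-frame labels and (x, y) centroids."""
    # Each run of consecutive frames with the target label counts as one bout.
    bouts = [len(list(run)) for label, run in groupby(labels) if label == behavior]
    # Sum centroid displacement only across frame pairs that both show the behavior.
    distance = sum(
        hypot(x1 - x0, y1 - y0)
        for i, ((x0, y0), (x1, y1)) in enumerate(zip(centroids, centroids[1:]))
        if labels[i] == behavior and labels[i + 1] == behavior
    )
    return {
        "count": len(bouts),
        "duration_s": sum(bouts) / fps,
        "distance_px": distance,
    }
```

Here the labels stand for the behavioral categories and the centroids stand for information taken from the animal foregrounds, the two inputs the legend names for the Quantifier.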

See also Data S1.