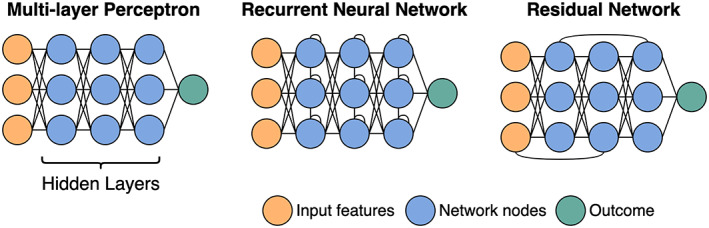

Figure 1.

Neural network architectures. The first layer of a neural network is the input data. These data are then passed to the first “hidden layer.” Each node, represented by a circle, is a weighted linear combination of all the nodes in the preceding layer. The weights are what the model “learns” during training. In a classic neural network, also known as a multilayer perceptron, every node in one layer is connected to every node in the next. Other common architectures include recurrent networks, which have connections between nodes within a layer and are usually used for sequence data (e.g., time series or text), and residual networks, in which information from one layer can “skip” the next layer, giving the network a way to bypass inefficient layers.
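The three architectures described in the caption can be illustrated with a minimal sketch in plain Python. The function names, the toy weights, and the choice of ReLU and tanh activations are assumptions made for illustration and do not come from the figure. In practice a nonlinear activation is usually applied after the weighted sum, and the weights would be learned rather than fixed by hand.

```python
import math

def dense(inputs, weights, biases):
    """Fully connected layer: each output node is a weighted linear
    combination of all input nodes, plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Nonlinear activation (an assumed choice): negative values become zero."""
    return [max(0.0, v) for v in values]

def residual_block(inputs, weights, biases):
    """Residual layer: the input is added back to the layer's output,
    so information can skip the layer."""
    hidden = relu(dense(inputs, weights, biases))
    return [h + x for h, x in zip(hidden, inputs)]

def recurrent_step(x, state, w_in, w_state, biases):
    """One step of a simple recurrent layer: the new state depends on the
    current input and on the layer's own previous state."""
    from_input = dense(x, w_in, biases)
    from_state = dense(state, w_state, [0.0] * len(w_state))
    return [math.tanh(a + b) for a, b in zip(from_input, from_state)]

# Toy values, chosen only for illustration.
x = [1.0, 2.0]
W = [[0.5, -1.0], [0.25, 0.75]]
b = [0.0, 0.5]

hidden = relu(dense(x, W, b))        # multilayer perceptron layer
print(hidden)                        # [0.0, 2.25]

skipped = residual_block(x, W, b)    # same layer with a skip connection
print(skipped)                       # [1.0, 4.25]

# With all-zero weights the residual block passes its input through unchanged.
zero_W = [[0.0, 0.0], [0.0, 0.0]]
print(residual_block(x, zero_W, [0.0, 0.0]))  # [1.0, 2.0]

# A recurrent layer carries its state across the steps of a sequence.
U = [[0.1, 0.0], [0.0, 0.1]]
state = [0.0, 0.0]
for x_t in [[1.0, 2.0], [0.5, -0.5]]:
    state = recurrent_step(x_t, state, W, U, b)
```

The zero-weight case shows how a skip connection provides a bypass: when the layer itself contributes nothing, the block returns its input unchanged.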