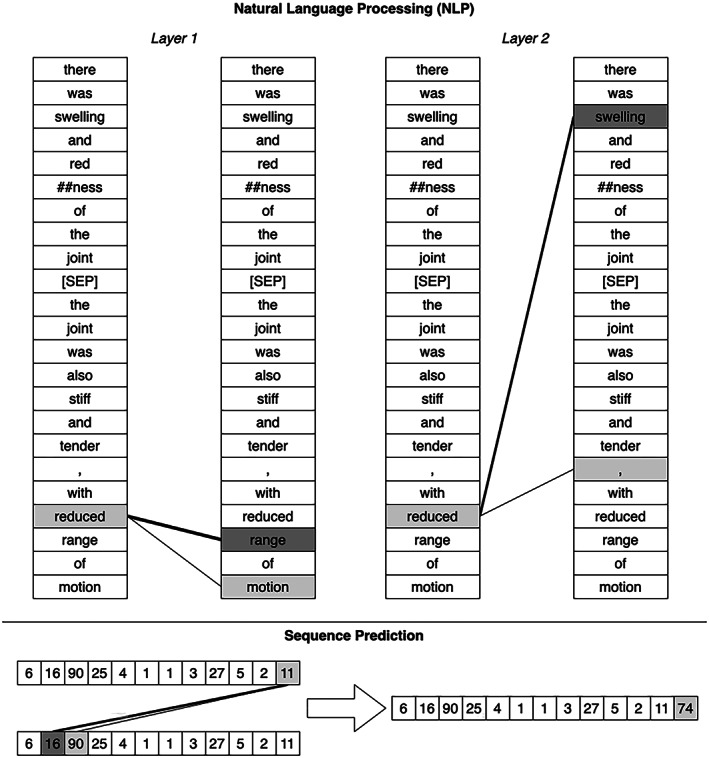

Figure 2.

Visualization of an attention model (ref. 94). Two attention layers are shown with text input for NLP (top). The original input text reads, “There was swelling and redness of the joint. The joint was also stiff and tender, with reduced range of motion.” This text is converted into tokens, and some words are split into more than one token (here “redness” is split into “red” and “##ness”; the “##” prefix signals that the token continues the preceding token). On the left, a lower layer of the attention-based model relied on the words “range” and “motion” to interpret the word “reduced.” On the right, at a higher layer, the word “reduced” also depends strongly on the word “swelling” in the previous sentence. An attention model can be applied to any sequence data (bottom). Here, the numbers could be laboratory values, and the task is to predict the next value in the sequence. The attention layer used the values “16” and “90” to predict the next value. In this instance, attention focuses on a similar earlier pattern to anticipate a future value.
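The idea in the bottom panel, where attention looks back for a similar earlier pattern and reuses what followed it, can be illustrated with standard scaled dot-product attention, in which each earlier position gets a weight softmax(q·k/√d) and the output is the weighted sum of the values. The sketch below is a hand-built toy under stated assumptions, not the model from ref. 94: the function names (`softmax`, `scaled_dot_product_attention`, `predict_next`), the one-hot embedding, the `scale` parameter, and the example sequence are all hypothetical choices made for illustration.

```python
import math
from typing import List, Sequence, Tuple


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(
    query: Sequence[float],
    keys: Sequence[Sequence[float]],
    values: Sequence[Sequence[float]],
) -> Tuple[List[float], List[float]]:
    """Attend from one query vector over (key, value) pairs.

    weights_i = softmax(q . k_i / sqrt(d)); output = sum_i weights_i * v_i
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return output, weights


def predict_next(seq: Sequence[int], scale: float = 4.0) -> Tuple[float, List[float]]:
    """Hypothetical toy predictor: find earlier positions whose value matches
    the latest value and return (a weighted average of) what came after them.

    Assumption: values are embedded as one-hot vectors times `scale`, so the
    dot product is large only when two values are equal.
    """
    vocab = sorted(set(seq))

    def embed(x: int) -> List[float]:
        return [scale if v == x else 0.0 for v in vocab]

    query = embed(seq[-1])                       # the most recent value asks the question
    keys = [embed(x) for x in seq[:-1]]          # earlier positions only (causal)
    values = [[float(x)] for x in seq[1:]]       # value i = the number that followed position i
    output, weights = scaled_dot_product_attention(query, keys, values)
    return output[0], weights
```

For the hypothetical sequence `[7, 16, 90, 12, 16]`, the latest value 16 matches the earlier 16, almost all of the attention weight lands on that position, and `predict_next` returns a value close to 90 (about 89.9), the number that followed the earlier 16. A trained model learns its query, key, and value projections from data rather than using a fixed one-hot match, so this only shows the mechanism, not how a clinical model would behave.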