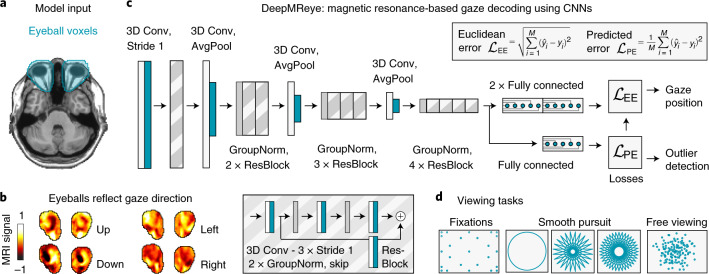

Fig. 1. Model architecture and input.

a, Manually delineated eye masks superimposed on a T1-weighted structural template (Colin27) at MNI coordinate Z = –36. b, Eyeball MR signal reflects gaze direction. The normalized MR signal of eye mask voxels is plotted for a sample participant who fixated on a target on the left (X, Y = –10, 0°), right (10, 0°), top (0, 5.5°) or bottom (0, –5.5°) of the screen. Source data are provided. c, CNN architecture. The model takes the eye mask voxels as three-dimensional (3D) input and predicts gaze position as a two-dimensional (2D; X, Y) regression target. It performs a series of 3D convolutions (3D Conv) with group normalization (GroupNorm) and spatial downsampling via average pooling (AvgPool) in between. Residual blocks (ResBlock) comprise an additional skip connection. The model is trained across participants using a combination of two loss functions: (1) the Euclidean error (EE) between the predicted and the true gaze position and (2) the error between the EE and a predicted error (PE). For each repetition time (TR), it outputs the gaze position and the PE, which serves as a decoding confidence measure. d, Schematics of viewing priors. We trained and tested the model on data from 268 participants performing fixations<sup>11</sup>, smooth pursuit on circular or star-shaped trajectories<sup>12–14</sup> and free viewing<sup>15</sup>.
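The two-term training objective described in panel c can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The caption does not say which metric compares EE with PE or how the two terms are weighted, so the absolute difference and the `err_weight` factor below are hypothetical choices, as are the function names.

```python
import numpy as np


def euclidean_error(pred_xy, true_xy):
    """Per-sample Euclidean distance between predicted and true (X, Y) gaze."""
    return np.linalg.norm(pred_xy - true_xy, axis=-1)


def combined_loss(pred_xy, true_xy, pred_err, err_weight=1.0):
    """Hypothetical two-term loss: mean EE plus a penalty on the EE/PE mismatch.

    Assumptions (not stated in the source): the mismatch is the absolute
    difference |EE - PE|, and err_weight is an illustrative weighting factor.
    Returns the scalar loss and the per-sample EE.
    """
    ee = euclidean_error(pred_xy, true_xy)
    # Term 1: how far the predicted gaze is from the true gaze.
    gaze_term = ee.mean()
    # Term 2: how well the predicted error (PE) matches the actual EE.
    confidence_term = np.abs(ee - pred_err).mean()
    return gaze_term + err_weight * confidence_term, ee


# Two samples, in degrees of visual angle, loosely echoing the targets in panel b.
pred = np.array([[-9.0, 0.0], [10.0, 4.0]])
true = np.array([[-10.0, 0.0], [10.0, 5.5]])
pe = np.array([1.0, 1.0])
loss, ee = combined_loss(pred, true, pe)
print(ee, loss)
```

Here the per-sample EEs are 1.0 and 1.5, and the PE of 1.0 underestimates the second error by 0.5, so the total loss is 1.25 + 0.25 = 1.5. Training the PE against the realized EE is what lets the PE act as a per-TR confidence estimate at test time, when the true gaze is unknown.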