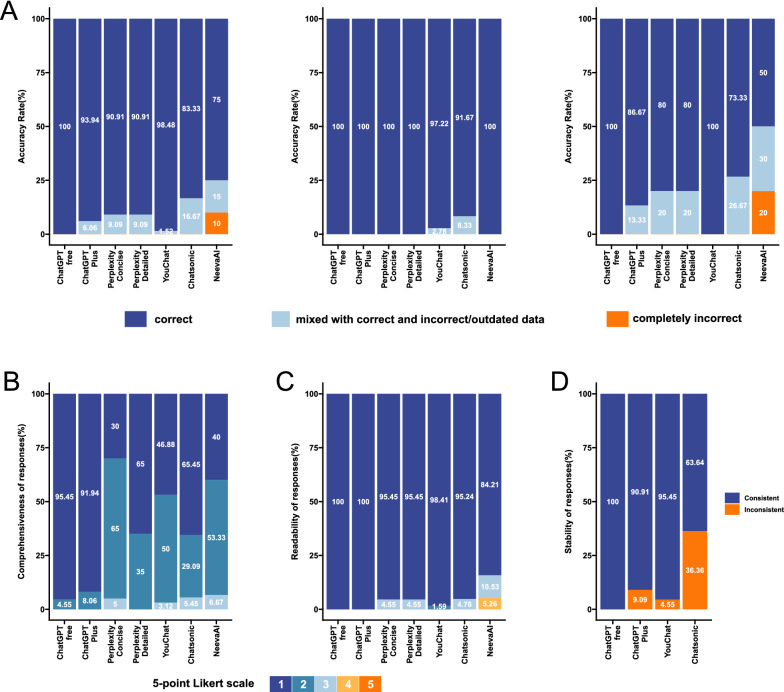

Fig. 1.

Performance of several large language models (LLMs) in answering different questions. All responses were generated and recorded on February 19, 2023. Three experienced urologists worked together to complete the ratings. A Accuracy of responses, rated on a 3-point scale: 1 for correct, 2 for a mix of correct and incorrect or outdated data, and 3 for completely incorrect. From left to right: performance on all questions, on basic questions, and on difficult questions. B Comprehensiveness of correctly answered responses, rated on a 5-point Likert scale, with 1 representing “very comprehensive” and 5 representing “very inadequate”. C Readability of answers, rated on a 5-point Likert scale, with 1 representing “very easy to understand” and 5 representing “very difficult to understand”. D Stability of responses, judged by whether the model’s accuracy is consistent across different responses to the same question. All models except NeevaAI and Perplexity generated different responses each time, so we generated three responses per question for these models to examine their stability.