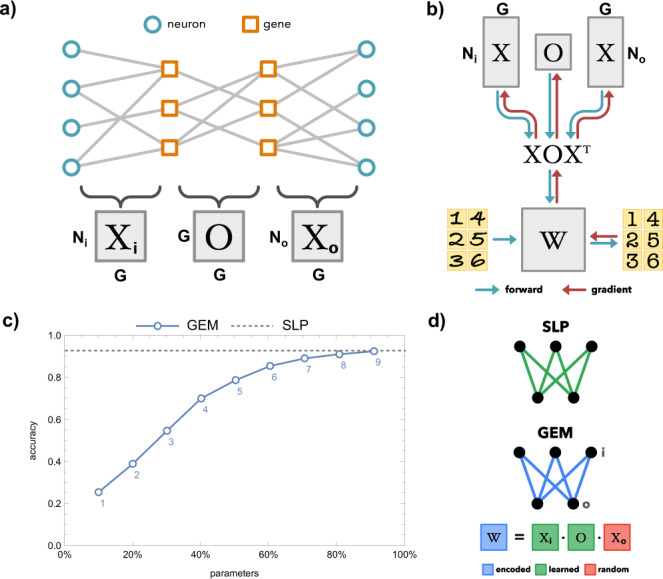

Fig. 1. The genetic neuroEvolution model.

**a** Visualization of the Genetic Connectome Model (refs. 18,19). Matrices Xi and Xo represent the gene expression of input and output neurons, respectively. The O matrix corresponds to the genetic interactions that underlie neuronal partner selection. **b** Traditionally, AI techniques define an architecture (W), which receives data as inputs (handwritten digits, left of W) and produces predictions (digit labels, right of W). The weights (W) of the architecture are updated (leftward red arrow) based on the distance of the predictions from known values, so the system becomes more accurate with training. In the Genetic neuroEvolution Model (GEM), the architecture (W) is instead produced from a small set of wiring rules defined by the X matrices and O (downward blue arrows). At each training step, the data is passed through W to make predictions (rightward blue arrows). However, rather than altering the architecture weights directly, gradients are computed to update the X matrices and O (upward red arrows). At the next training step, the revised wiring rules generate a revised W (downward blue arrows). **c** Mean performance of the GEM on the MNIST task. The accuracy of a single-layer perceptron (SLP; a linear classifier with 784 by 10 nodes) is shown, either with learned weights (dashed line) or with weights encoded by the GEM’s wiring rules (blue line, with the number of genes labeled below each marker). Parameter counts are expressed as a percentage of the number of weights in a learned SLP. **d** Visualization of a learned SLP and an SLP encoded by the GEM in Fig. 1c. We do not learn Xo, as we find it adds parameters without increasing task performance.
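The training loop in panel b can be illustrated with a minimal NumPy sketch. It rests on several assumptions that the caption does not state: that the wiring rules generate the weights as W = Xi O Xoᵀ, that the loss is a softmax cross-entropy, and that the update is plain gradient descent. All function names (`gem_weights`, `loss_and_grads`, `train_step`, `learned_param_percent`) are hypothetical, the data are random stand-ins rather than MNIST, and the parameter count assumes that only Xi and O are counted as learned.

```python
import numpy as np

def gem_weights(X_i, O, X_o):
    # Assumed wiring rule (not stated in the caption): W = X_i O X_o^T.
    return X_i @ O @ X_o.T

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # stabilise the exponentials
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def loss_and_grads(X_i, O, X_o, data, labels):
    """Cross-entropy loss and its gradients with respect to X_i and O."""
    n = data.shape[0]
    W = gem_weights(X_i, O, X_o)          # wiring rules -> architecture
    probs = softmax(data @ W)             # data -> predictions
    loss = -np.log(probs[np.arange(n), labels]).mean()
    d_logits = probs.copy()
    d_logits[np.arange(n), labels] -= 1.0
    dW = data.T @ d_logits / n            # gradient w.r.t. W (W itself is never updated)
    # Chain rule through W = X_i O X_o^T.
    dX_i = dW @ X_o @ O.T
    dO = X_i.T @ dW @ X_o
    return loss, dX_i, dO

def train_step(X_i, O, X_o, data, labels, lr):
    """One gradient-descent step on the wiring rules; X_o stays fixed."""
    loss, dX_i, dO = loss_and_grads(X_i, O, X_o, data, labels)
    return X_i - lr * dX_i, O - lr * dO, loss

def learned_param_percent(n_in, n_out, n_genes):
    # Assumption: learned parameters are X_i (n_in x g) and O (g x g),
    # expressed relative to the n_in x n_out weights of a learned SLP.
    return 100.0 * (n_in * n_genes + n_genes**2) / (n_in * n_out)

# Toy run with the SLP dimensions from panel c and an arbitrary gene count.
rng = np.random.default_rng(0)
n_in, n_out, n_genes = 784, 10, 5
X_i = 0.1 * rng.standard_normal((n_in, n_genes))
O = 0.1 * rng.standard_normal((n_genes, n_genes))
X_o = rng.standard_normal((n_out, n_genes))   # fixed, not learned
data = rng.random((32, n_in))                 # random stand-in for images
labels = rng.integers(0, n_out, size=32)

X_i, O, loss = train_step(X_i, O, X_o, data, labels, lr=1e-3)
print(gem_weights(X_i, O, X_o).shape, loss)
print(learned_param_percent(n_in, n_out, n_genes))
```

Under these assumptions, W is always rebuilt from the wiring rules and is never stored or updated as a free parameter, which is why the learned parameter count scales with the number of genes rather than with the 784 by 10 weight matrix.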