Abstract

How do humans learn from raw sensory experience? Throughout life, but most obviously in infancy, we learn without explicit instruction. We propose a detailed biological mechanism for the widely-embraced idea that learning is driven by the differences between predictions and actual outcomes (i.e., predictive error-driven learning). Specifically, numerous weak projections into the pulvinar nucleus of the thalamus generate top-down predictions, and sparse driver inputs from lower areas supply the actual outcome, originating in layer 5 intrinsic bursting (5IB) neurons. Thus, the outcome representation is only briefly activated, roughly every 100 ms (i.e., 10 Hz, alpha), resulting in a temporal difference error signal, which drives local synaptic changes throughout the neocortex. This results in a biologically-plausible form of error backpropagation learning. We implemented these mechanisms in a large-scale model of the visual system, and found that the simulated inferotemporal (IT) pathway learns to systematically categorize 3D objects according to invariant shape properties, based solely on predictive learning from raw visual inputs. These categories match human judgments on the same stimuli, and are consistent with neural representations in IT cortex in primates.

The fundamental epistemological conundrum of how knowledge emerges from raw experience has challenged philosophers and scientists for centuries. Although there have been significant advances in cognitive and computational models of learning (Ashby & Maddox, 2011; LeCun, Bengio, & Hinton, 2015; Watanabe & Sasaki, 2015) and in our understanding of the detailed biochemical basis of synaptic plasticity (Cooper & Bear, 2012; Lüscher & Malenka, 2012; Shouval, Bear, & Cooper, 2002; Urakubo, Honda, Froemke, & Kuroda, 2008), there is still no widely-accepted answer to this puzzle that is clearly supported by known biological mechanisms and also produces effective learning at the computational and cognitive levels. The idea that we learn via an active predictive process was advanced by Helmholtz in his recognition by synthesis proposal (von Helmholtz, 1867), and has been widely embraced in a range of different frameworks (Clark, 2013; Dayan, Hinton, Neal, & Zemel, 1995; de Lange, Heilbron, & Kok, 2018; J. Elman et al., 1996; J. L. Elman, 1990; Friston, 2005; George & Hawkins, 2009; Hawkins & Blakeslee, 2004; Kawato, Hayakawa, & Inui, 1993; Mumford, 1992; Rao & Ballard, 1999; Summerfield & de Lange, 2014).

Here, we propose a detailed biological mechanism for a specific form of predictive error-driven learning based on distinctive patterns of connectivity between the neocortex and the higher-order nuclei of the thalamus (i.e., the pulvinar) (S. M. Sherman & Guillery, 2006; Usrey & Sherman, 2018). We hypothesize that learning is driven by the difference between top-down predictions, generated by numerous weak projections into the thalamic relay cells (TRCs) in the pulvinar, and the actual outcomes supplied by sparse, strong driver inputs from lower areas. Because these driver inputs originate in layer 5 intrinsic bursting (5IB) neurons, the outcome is only briefly activated, roughly every 100 ms (i.e., 10 Hz, alpha). Thus, the prediction error is a temporal difference in activation states over the pulvinar, from an earlier prediction to a subsequent burst of outcome. This temporal difference can drive local synaptic changes throughout the neocortex, supporting a biologically-plausible form of error backpropagation that improves the predictions over time (Ackley, Hinton, & Sejnowski, 1985; Bengio, Mesnard, Fischer, Zhang, & Wu, 2017; Hinton & McClelland, 1988; Lillicrap, Santoro, Marris, Akerman, & Hinton, 2020; O’Reilly, 1996; Whittington & Bogacz, 2019). The temporal-difference form of error-driven learning contrasts with prevalent alternative hypotheses that require a separate population of neurons to compute a prediction error explicitly and transmit it directly through neural firing (Friston, 2005, 2010; Kawato et al., 1993; Lotter, Kreiman, & Cox, 2016; Ouden, Kok, & Lange, 2012; Rao & Ballard, 1999).

In the following, our primary objective is to describe the hypothesized biologically based mechanism for predictive error-driven learning, contrast it with other existing proposals regarding the functions of this thalamocortical circuitry and other ways that the brain might support predictive learning, and evaluate it relative to a wide range of existing anatomical and electrophysiological data. We provide a number of specific empirical predictions that follow from this functional view of the thalamocortical circuit, which could potentially be tested by current neuroscientific methods. Thus, this work proposes a clear functional interpretation of this distinctive thalamocortical circuitry that contrasts with existing ideas in testable ways.

A second major objective is to implement this predictive error-driven learning mechanism in a large-scale computational model that faithfully captures its essential biological features, to test whether the proposed learning mechanism can drive the formation of cognitively useful representations. In particular, we ask a critical question for any predictive-learning model: can it develop high-level, abstract representations while learning from nothing but the prediction of low-level visual inputs? Most visual object recognition models that provide a reasonable fit to neurophysiological data rely on large human-labeled datasets to explicitly train abstract category information via error-backpropagation (Cadieu et al., 2014; Khaligh-Razavi & Kriegeskorte, 2014; Rajalingham et al., 2018). Thus, it is perhaps not too surprising that the higher layers of these models, which are closer to these category output labels, exhibit a greater degree of categorical organization.

Through large-scale simulations based on the known structure of the visual system, we found that our biologically based predictive learning mechanism developed high-level, abstract representations that significantly diverge from the similarity structure present in the lower layers of the network, and systematically categorize 3D objects according to invariant shape properties. Furthermore, we found in an experiment using the same stimuli that these categories match human similarity judgments, and that they are also qualitatively consistent with neural representations in inferotemporal (IT) cortex in primates (Cadieu et al., 2014). In addition, we show that comparison predictive backpropagation models lacking these biological features (Lotter et al., 2016) did not learn object categories that go beyond the visual input structure. Thus, there may be some important features of the biologically based model that enable this ability to learn higher-level structure beyond that of the raw inputs.

It is important to emphasize that our objective for these simulations is not to produce a better machine-learning (ML) algorithm per se, but rather to test whether our biologically based model can capture some of the known high-level, cognitive phenomena that the mammalian brain learns. Thus, we explicitly dissuade readers from the inevitable desire to evaluate the importance of our model based on differences in narrow, performance-based ML metrics. As discussed later, there are various engineering-level issues regarding the biologically based model’s computational cost and performance that currently limit its ability to compete with simpler, much larger-scale backpropagation models, but we do not think these are relevant to the scientific questions at issue here. In short, this model is an instantiation of a scientific theory, and it should be evaluated on its ability to explain a wide range of data across multiple levels of analysis, just as every other scientific theory is evaluated.

The remainder of the paper is organized as follows. First, we provide a concise overview of the biologically based predictive error-driven learning framework, including the most relevant neural data. Then, we present a small-scale implementation of the model that learns a probabilistic grammar, to illustrate the basic computational mechanisms of the theory. This is followed by the large-scale model of the visual system, which learns by predicting over brief movies of 3D objects rotating and translating in space. We evaluate this model and compare it to two other predictive learning models that directly use error-backpropagation, based on current deep convolutional neural network (DCNN) mechanisms. Then, we circle back to discuss the relevant biological data in greater detail, along with testable predictions that can differentiate this account from other existing ideas. Finally, we conclude with a discussion of related models and outstanding issues.

Predictive Error-driven Learning in the Neocortex and Pulvinar

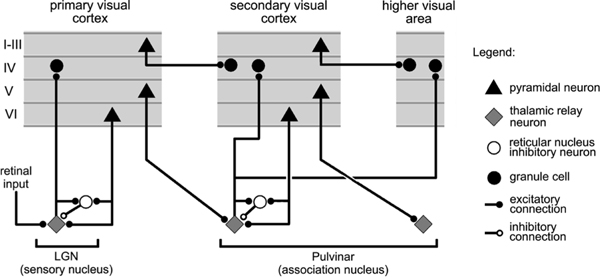

Figure 1 shows the thalamocortical circuits characterized by S. M. Sherman and Guillery (2006) (see also S. M. Sherman & Guillery, 2013; Usrey & Sherman, 2018), which have two distinct projections converging on the principal thalamic relay cells (TRCs) of the pulvinar, the primary thalamic nucleus that is interconnected with higher-level posterior cortical visual areas (Arcaro, Pinsk, & Kastner, 2015; Halassa & Kastner, 2017; Shipp, 2003). One projection consists of numerous, weaker connections originating in deep layer VI of the neocortex (the 6CT corticothalamic projecting cells), which we hypothesize generate a top-down prediction on the pulvinar. The other is a sparse (Rockland, 1996, 1998) and strong driver pathway that originates from lower-level layer 5 intrinsic bursting cells (5IB), which we hypothesize provide the outcome. These 5IB neurons fire discrete bursts with intrinsic dynamics having a period of roughly 100 ms between bursts (Connors, Gutnick, & Prince, 1982; Franceschetti et al., 1995; Larkum, Zhu, & Sakmann, 1999; Saalmann, Pinsk, Wang, Li, & Kastner, 2012; Silva, Amitai, & Connors, 1991), which is thought to drive the widely-studied alpha frequency of ∼ 10 Hz that originates in cortical deep layers and has important effects on a wide range of perceptual and attentional tasks (Buffalo, Fries, Landman, Buschman, & Desimone, 2011; Clayton, Yeung, & Kadosh, 2018; Jensen, Bonnefond, & VanRullen, 2012; K. Mathewson, Gratton, Fabiani, Beck, & Ro, 2009; VanRullen & Koch, 2003). Critically, unlike many other such bursting phenomena, this 5IB bursting occurs in awake animals (Luczak, Bartho, & Harris, 2009, 2013; Sakata & Harris, 2009, 2012), consistent with the presence of alpha in awake behaving states.

Figure 1: Summary figure from Sherman & Guillery (2006) showing the strong feedforward driver projection emanating from layer 5IB cells in lower layers (e.g., V1), and the much more numerous feedback “modulatory” projection from layer 6CT (corticothalamic) cells. We interpret these same connections as providing a prediction (6CT) vs. outcome (5IB) activity pattern over the pulvinar.

The existing literature generally characterizes the 6CT projection as modulatory (S. M. Sherman & Guillery, 2013; Usrey & Sherman, 2018), but a number of electrophysiological recordings from awake, behaving animals clearly show sustained, continuous patterns of neural firing in pulvinar TRC neurons, which is not consistent with the idea that they are only being driven by their phasic bursting 5IB inputs (Bender, 1982; Bender & Youakim, 2001; Komura, Nikkuni, Hirashima, Uetake, & Miyamoto, 2013; Petersen, Robinson, & Keys, 1985; Robinson, 1993; Saalmann et al., 2012; Zhou, Schafer, & Desimone, 2016). Indeed, these recordings show that pulvinar neural firing generally resembles that of the visual areas with which they interconnect, in terms of neural receptive field properties, tuning curves, etc. This is important because our predictive learning framework requires that these 6CT top-down projections be capable of directly driving TRC activity. Specifically, in contrast to the standard view, the core idea behind our theory is that the top-down 6CT projections drive a predicted activity pattern across the extent of the pulvinar, which precedes the subsequent outcome activation state driven by the strong 5IB inputs.

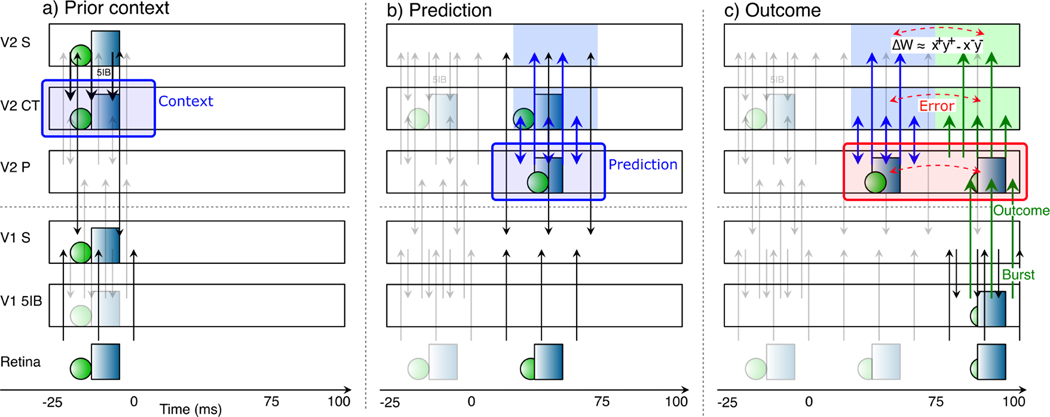

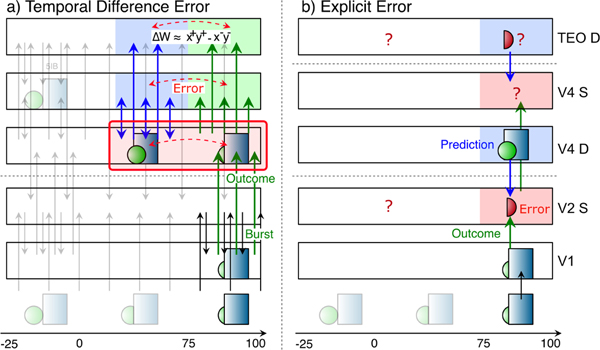

Figure 2 illustrates the temporal evolution of activity states according to our predictive learning theory, which is somewhat challenging to convey because the critical signals driving learning unfold over time (Kachergis, Wyatte, O’Reilly, de Kleijn, & Hommel, 2014; O’Reilly, Wyatte, & Rohrlich, 2014, 2017). We hypothesize that synaptic plasticity throughout the cortex is sensitive to the resulting temporal differences that emerge initially in the pulvinar. Thus, unlike other models (as we discuss in depth later) the prediction error here is not captured directly in the firing of a special population of error-coding neurons, but rather remains as a temporal difference error signal.

Figure 2: Corticothalamic information flow under our predictive learning hypothesis, shown as a sequence of movie frames (Retina), illustrating the three key steps taking place within a single 125 ms time window, broken out separately across the three panels: a) prior context is updated in the V2 CT layer; b) which is then used to generate a prediction over the pulvinar (V2 P); c) against which the outcome, driven by bottom-up 5IB bursting, represents the prediction error as a temporal difference between the prediction and outcome states over the pulvinar. Changes in synaptic weights (learning) in all superficial (S) and CT layers are driven by the local temporal difference experienced by each neuron, using a form of the contrastive hebbian learning (CHL) term as shown, where the ‘+’ superscripts indicate outcome activations, and ‘−’ superscripts indicate prediction. CHL approximates the backpropagated prediction error gradient experienced by each neuron (O’Reilly, 1996), reflecting both direct pulvinar error signals and indirect corticocortical error signals. Specifically: a) CT context updating occurs via 5IB bursting (not shown) in the higher layer (V2) during the prior alpha (100 ms) cycle; this context is maintained in the CT layer and used to generate predictions. b) The prediction over the pulvinar is generated via numerous top-down CT projections. This prediction state also projects up to S and CT layers, and from S to all other S layers via extensive bidirectional connectivity, so their activation state reflects this prediction as well. c) The subsequent outcome drives pulvinar activity bottom-up via V1 5IB bursting, and is likewise projected to S and CT layers, ensuring that the relevant temporal difference error signal is available locally in cortex. The difference in activation values across these two time points, in S and CT layers throughout the network, drives learning to reduce prediction errors. Note that the single most important property of the 5IB bursting is that these driver cells are not active during the prediction phase; the bursting itself may also be useful in the driving property, but that is a secondary consideration to the critical feature of having a time when the prediction alone can be projected onto the pulvinar.

The figure shows a single 125 ms time window of a 100 ms alpha cycle for the purposes of illustration (the actual timing is likely to be more dynamic as discussed next). The activity state in pulvinar TRC neurons, representing a prediction, as driven by the top-down 6CT projections, should develop during the first ∼ 75 ms, when the 5IB neurons are paused between bursting. Then the final ∼ 25 ms largely reflects the strong 5IB bottom-up ground-truth driver inputs when they burst. Thus, the prediction error signal is reflected in the temporal difference of these activation states as they develop over time. In other words, our hypothesis is that the pulvinar is directly representing either the top-down prediction or the bottom-up outcome at any given time, and the temporal difference between these states implicitly encodes a prediction error. While the deep 6CT layer is involved in generating a top-down prediction over the pulvinar, the superficial layer neurons continuously represent the current state, simultaneously incorporating bottom-up and top-down constraints via their own connections with other areas. To ensure that the prediction is not directly influenced by this current state representation (i.e., “peeking at the right answer”), it is important that the 6CT neurons encode temporally delayed information, consistent with available data (Harris & Shepherd, 2015; Sakata & Harris, 2009; Thomson, 2010).
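The idealized timing described above can be made concrete with a minimal sketch. It assumes the rigid 100 ms cycle with a ∼ 75 ms prediction phase followed by a ∼ 25 ms outcome phase; the helper names, the toy activity vectors, and the sampling of each phase at its final millisecond are illustrative assumptions, not part of the model implementation.

```python
# Illustrative sketch only: idealized, rigid alpha-cycle timing (an assumption;
# the biological timing is likely to be more dynamic).
PREDICTION_MS = 75   # 5IB neurons paused; top-down 6CT input drives the pulvinar
OUTCOME_MS = 25      # 5IB burst; bottom-up driver input dominates the pulvinar
CYCLE_MS = PREDICTION_MS + OUTCOME_MS  # 100 ms, i.e., roughly 10 Hz


def pulvinar_phase(t_ms):
    """Return which signal the pulvinar represents at time t_ms (hypothetical helper)."""
    return "prediction" if (t_ms % CYCLE_MS) < PREDICTION_MS else "outcome"


def pulvinar_state(t_ms, prediction, outcome):
    """The pulvinar holds either the prediction or the outcome, never an explicit error."""
    return prediction if pulvinar_phase(t_ms) == "prediction" else outcome


def temporal_difference(prediction, outcome):
    """Implicit prediction error: outcome-phase state minus prediction-phase state."""
    minus = pulvinar_state(PREDICTION_MS - 1, prediction, outcome)  # end of prediction phase
    plus = pulvinar_state(CYCLE_MS - 1, prediction, outcome)        # end of outcome phase
    return [p - m for p, m in zip(plus, minus)]


# Toy activity patterns over four pulvinar units (made-up numbers).
predicted = [0.9, 0.1, 0.0, 0.5]
observed = [1.0, 0.0, 0.0, 1.0]
td_error = temporal_difference(predicted, observed)
```

In this sketch no unit ever carries the error itself; the error exists only as the difference between two successive activity states of the same units, which is the sense in which the prediction error is implicit.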

The actual biological system is likely to be much more dynamic than the simplistic cartoon with rigid 100 ms timing as shown in Figure 2, based on a set of neural mechanisms that can work together to enable it to more flexibly entrain the predictive learning cycle to the environment. These mechanisms would also tend to increase activity and learning associated with unexpected outcomes relative to expected ones, consistent with the observed expectation suppression phenomena (Bastos et al., 2012; Meyer & Olson, 2011; Summerfield, Trittschuh, Monti, Mesulam, & Egner, 2008; Todorovic, van Ede, Maris, & de Lange, 2011).

Specifically, various underlying mechanisms result in neural adaptation, which is generally thought to increase neural activity and learning associated with novel inputs relative to recently familiar ones (Abbott, Varela, Sen, & Nelson, 1997; Brette & Gerstner, 2005; Grill-Spector, Henson, & Martin, 2006; Hennig, 2013; Müller, Metha, Krauskopf, & Lennie, 1999). In the case where outcomes are consistent with prior predictions (i.e., the predictions are accurate), the same population of neurons across pulvinar and cortex should be active over time, whereas unpredicted outcomes will generally activate new subsets of neurons in superficial cortical layers representing the current state. Thus, due to adaptation, there should be a phasic increase in activity in these superficial neurons at the onset of unpredicted stimuli relative to predicted ones. Furthermore, the 5IB neurons downstream of these superficial neurons may be particularly responsive to these phasic activity increases, causing their bursting to coincide preferentially with unexpected outcomes, thereby driving the phase resetting of the alpha cycle to such events. Thus, during a sequence of predicted states, the pulvinar may experience relatively weaker or even absent 5IB driving inputs, until an unpredicted stimulus arises. At this point, error-driven learning would be more strongly engaged as a function of the phasic release from adaptation and 5IB burst activation. We discuss these dynamics further below, in the context of the comparison with explicit error coding models.

We also hypothesize that 5IB bursting preferentially drives the synaptic plasticity processes to take place at that time, due to the strong driving nature of the outputs from these neurons. In computational terms originating with the Boltzmann Machine (Ackley et al., 1985; Hinton & Salakhutdinov, 2006), this anchors the target or plus phase to be at this point of 5IB bursting. Furthermore, this means that the predictive nature of the prior minus phase naturally emerges just by virtue of it being the state prior to 5IB bursting: the learning rule automatically causes that prior state to better anticipate the subsequent state. Thus, even if no prediction was initially generated, learning over multiple iterations will work to create one, to the extent that a reliable prediction can be generated based on internal states and environmental inputs. Likewise, assuming relevant activity traces naturally persist over timescales longer than the alpha cycle, this predictive learning process can take advantage of any such remaining traces to learn across these longer timescales, even though it is operating at the faster alpha scale.
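The following toy sketch illustrates how a prediction can emerge simply because it is the state that precedes the outcome. It trains a single weight matrix with a delta-rule-style update, in which each weight change is proportional to the difference between plus-phase (outcome) and minus-phase (prediction) activity. The repeating three-state sequence, the linear units, the one-hot codes, and the learning rate are all assumptions chosen for illustration; this is not the model described in this paper.

```python
# Toy sketch (assumptions: linear units, one-hot states, repeating A->B->C sequence).
N = 3                       # number of distinct states
LRATE = 0.5                 # hypothetical learning rate
SEQUENCE = [0, 1, 2] * 20   # indices of states A, B, C, repeated


def one_hot(i):
    """One-hot activity pattern for state index i."""
    return [1.0 if j == i else 0.0 for j in range(N)]


def predict(weights, context):
    """Minus phase: prediction generated from the prior state (the context)."""
    return [sum(weights[k][j] * context[k] for k in range(N)) for j in range(N)]


def train(sequence):
    weights = [[0.0] * N for _ in range(N)]   # no prediction is generated initially
    errors = []
    for prev, cur in zip(sequence, sequence[1:]):
        context = one_hot(prev)
        minus = predict(weights, context)     # prediction state
        plus = one_hot(cur)                   # outcome state
        errors.append(sum((p - m) ** 2 for p, m in zip(plus, minus)))
        # Update each weight from the temporal difference between the two phases.
        for k in range(N):
            for j in range(N):
                weights[k][j] += LRATE * context[k] * (plus[j] - minus[j])
    return weights, errors


weights, errors = train(SEQUENCE)
```

The network starts with zero weights, so its first minus phase contains no prediction at all. Because every update moves the prior state toward the state that followed it, the squared error in `errors` shrinks with each pass through the sequence.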

In short, learning always happens whenever something unexpected occurs, at any point, and drives the development of predictions immediately prior, to the extent such predictions are possible to generate. In the typical lab experiment where phasic stimuli are presented without any predictable temporal sequence (which is uncharacteristic of the natural world), there may often be no significant prediction prior to stimulus onset, and we would expect such stimuli to reliably drive 5IB bursting, which is consistent with available electrophysiological data (Bender, 1982; Bender & Youakim, 2001; Komura et al., 2013; Luczak et al., 2009, 2013; Petersen et al., 1985; Robinson, 1993; Zhou et al., 2016). Thus, unlike Figure 2, such situations would start with a 5IB-triggered plus phase, without a significant minus phase prior to that.

As may be evident by this point, we are mainly focused on prediction in the sense of the humorous quote: “prediction is very difficult, especially about the future” (attributable to Danish author Robert Storm Petersen), whereas this term is potentially confusingly used in a much broader sense in most Bayesian-inspired predictive coding frameworks (de Lange et al., 2018; Friston, 2005; Rao & Ballard, 1999). These frameworks use “prediction” to encompass everything from genetic biases to the results of learning in the feedforward synaptic pathways to top-down filling-in or biasing of the current stimulus properties, and fairly rarely use it in the “about the future” sense. We think these different phenomena are each associated with different neural mechanisms at different time scales (O’Reilly, Hazy, & Herd, 2016; O’Reilly, Munakata, Frank, Hazy, & Contributors, 2012; O’Reilly, Wyatte, Herd, Mingus, & Jilk, 2013), and thus prefer to treat them separately, while also recognizing that they can clearly interact as well.

Thus, our use of the term prediction here refers specifically to anticipatory neural firing that predicts subsequent stimuli. We use the term postdiction to refer to the operation of this predictive mechanism after a stimulus has been initially processed (to consolidate and more deeply encode, as in an auto-encoder model), and distinguish both from top-down excitatory biasing, which directly influences the online superficial layer neural representations of the current stimulus (Desimone & Duncan, 1995; E. K. Miller & Cohen, 2001; O’Reilly et al., 2013; Reynolds, Chelazzi, & Desimone, 1999). Finally, many discussions of prediction error in the literature include late, frontally-associated processes such as those associated with the P300 ERP component (Holroyd & Coles, 2002). We specifically exclude these from the scope of the mechanisms described here, which are anticipatory, fast, and low-level, as is appropriate for the posterior cortical sensory processing areas that interconnect with the pulvinar.

Computational Properties of Predictive Learning in the Thalamocortical Circuits

We next elaborate the connections between the computational properties required for predictive learning, and the properties of the circuits interconnecting cortex and the pulvinar, which appear to be notably well suited for their hypothesized role in predictive learning. We begin with a relatively established interpretation of superficial layer processing, to contextualize subsequent points about the special functions required of the deep layers and the thalamus.

The superficial cortical layers continuously represent the current state: The superficial layer pyramidal neurons are densely and bidirectionally interconnected with other cortical areas, and update quickly to new stimulus inputs, with continuous, relatively rapid firing (i.e., up to about 100 Hz for preferred stimuli). These neurons integrate higher-level top-down information with bottom-up sensory information to resolve ambiguities, focus attention, fill in missing information, and generally enhance the consistency and quality of the online representations (Desimone & Duncan, 1995; Hopfield, 1984; E. K. Miller & Cohen, 2001; O’Reilly et al., 2016, 2012, 2013; Reynolds et al., 1999; Rumelhart & McClelland, 1982). As noted above, we distinguish this form of top-down processing, which is often most evident during the period after stimulus onset (Lee & Mumford, 2003), from the specifically predictive, anticipatory sort.

Predictions must be insulated against receiving current state information (it isn’t prediction if you already know what happens): Given that the superficial layers are continuously updating and representing the current state, some kind of separate neural system insulated from this current state information must be used to generate predictions, otherwise the prediction system can just “cheat” and directly report the current state. It may seem counter-intuitive, but making the prediction task harder is actually beneficial, because that pushes the learning to capture deeper, more systematic regularities about how the environment evolves over time. In other words, like any kind of cheating, the cheater itself is cheated because of the reduced pressure to learn, and learning is the real goal.

Predictions take time and space to generate: Non-trivial predictions likely require the integration of multiple converging inputs from a range of higher-level cortical areas, each encoding different dimensions of relevance (e.g., location, motion, color, texture, shape, etc). Thus, sufficient time and space (i.e., neural substrates with relevant connectivity) must be available to integrate these signals into a coherent predicted state, and per the above point, these substrates must be separated from the influence of current state information. This fits with the properties of the layer 6CT neurons and their deep layer inputs, which we hypothesize are insulated from superficial-layer firing by virtue of being driven locally by the 5IB bursting within their own cortical microcolumn, such that the inter-bursting pause period provides a time window when these deep layers can integrate and generate the prediction.

Biologically, this is consistent with the delayed responses of 6CT neurons (Harris & Shepherd, 2015; Sakata & Harris, 2009; Thomson, 2010). Computationally, these neurons function much like the simple recurrent network (SRN) context layer updating (J. L. Elman, 1990; Jordan, 1989) which reflects the prior trial’s state, as discussed in detail in the Appendix. The overall duration of the alpha cycle may represent a reasonable compromise between the prediction integration time and the need to keep up with predictions tracking changes in the world. Notably, films are typically shown at just over 2 times the alpha frequency (24 Hz), suggesting a Nyquist sampling relative to the underlying alpha processing.

The predicted state must be directly aligned with the outcome state it predicts: A prediction error is a difference between two states, so these prediction and outcome states must be directly comparable such that their difference meaningfully represents the actual prediction error, and not some other kind of irrelevant encoding differences. In other words, the prediction and the outcome must be represented in the same “language”, so that the “words” from the prediction can be directly compared against those of the outcome — if the prediction was in Japanese and the outcome in English, it would be hard to tell whether the prediction was correct or not! Thus, a common neural substrate with two different input pathways is required, one reflecting the prediction and the other the outcome, so that both converge onto the same representational system within this common neural substrate. This fits well with the two pathways converging into the pulvinar: the 6CT top-down prediction-generation pathway and the lower-level 5IB driving inputs.

The outcome signal should be as veridical as possible (i.e., directly reflecting the bottom-up outcome), and should arise from lower areas in the hierarchy relative to the corresponding predictive 6CT inputs: Given that the outcome is the driver of learning, if it were to be corrupted or inaccurate, then everything that is learned would be suspect. To the extent that delusional thinking is present in all people (some more so than others, perhaps), this principle must be violated at some level, but for the lowest levels of the perceptual system at least, it is important that strongly grounded, accurate training signals drive learning. The bottom-up, sparse, strongly driving nature of the 5IB projections to the pulvinar can directly convey such veridical outcome signals, and ensure that they dominate the activation of their TRC targets. Based on indirect available data, it is likely that each pulvinar TRC neuron receives only roughly 1–6 driver inputs (S. M. Sherman & Guillery, 2006, 2011), such that these sparse inputs directly convey the signal from lower layers, without much further mixing or integration (which could distort the nature of the signal). Furthermore, these inputs are likely not plastic (Usrey & Sherman, 2018), again consistent with a need for unaltered, veridical signals. Lastly, the TRC neurons are distinctive in having no significant lateral interconnectivity (S. M. Sherman & Guillery, 2006), enabling them to faithfully represent their inputs. These properties led Mumford (1991) to characterize the pulvinar as a blackboard, and we further suggest the metaphor of a projection screen upon which the predictions are projected.

The prediction error must drive learning to reduce subsequent prediction errors: Obviously, this is the goal of prediction error learning in the first place, and given that the cortex is what generates predictions, it must be capable of learning based on prediction error signals represented over the pulvinar. Computationally, the critical problem here is credit assignment: how do the error signals direct learning in the proper direction for each individual neuron, to reduce the overall prediction error? The error backpropagation procedure solves this problem (Rumelhart, Hinton, & Williams, 1986), but requires biologically implausible retrograde signaling across the entire network of neural communication (Crick, 1989), to propagate the error proportionally back along the same channels that drive forward activation. Bidirectional connections, which are ubiquitous in the cortex (Felleman & Van Essen, 1991; Markov, Ercsey-Ravasz, et al., 2014) and computationally beneficial for other reasons as noted earlier, can eliminate that problem by “implicitly” propagating error signals via standard neural communication mechanisms along both directions of connectivity (O’Reilly, 1996).

This solution to the credit assignment problem relies on a temporal difference error signal, as originally developed for the Boltzmann machine (Ackley et al., 1985). The bidirectional neural communication at one point in time is encoding and sharing the prediction among the entire network of neurons. Then, this same network of connections is reused at another point in time to encode and communicate the outcome. Mathematically, the difference in activation state across these two points in time, locally at each individual neuron, provides an accurate estimate of the error backpropagation gradient (O’Reilly, 1996). In effect, this temporal difference tells each neuron which direction it needs to change its activation state to reduce the overall error. The reuse of the very same network of connections across both points in time ensures the overall alignment of the two activation states, as noted above, such that this temporal difference precisely represents the error signal. While various other schemes for error-driven learning in biologically-plausible networks have been proposed (e.g., Bengio et al., 2017; Lillicrap et al., 2020; Whittington & Bogacz, 2019), the temporal-difference framework with bidirectional connectivity provides a particularly good fit with the natural temporal ordering of predictive learning (prediction then outcome) and the extensive bidirectional connectivity of the thalamocortical circuits (Shipp, 2003).
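For reference, the standard contrastive hebbian learning rule that expresses this temporal difference can be written as below, using the same superscript convention as Figure 2 (plus for the outcome state, minus for the prediction state). The symbols are notation assumed here for illustration: x_i is the sending-neuron activation, y_j is the receiving-neuron activation, w_ij is the weight between them, and ε is a learning rate.

```latex
\Delta w_{ij} = \epsilon \left( x_i^{+} y_j^{+} - x_i^{-} y_j^{-} \right)
```

In the special case where the sending activation is the same in both phases (x_i^+ = x_i^- = x_i), this reduces to ε x_i (y_j^+ − y_j^-), which makes the link to the delta rule explicit: each weight changes in proportion to the sending activity times the local temporal difference at the receiving neuron.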

Temporal differences in activation state across the alpha cycle, between prediction and outcome states, must drive synaptic plasticity: The final step needed to connect all of the elements above is that neurons actually modify their synaptic strengths in proportion to the temporal-difference error signal. We have recently provided a fully explicit mechanism for this form of learning (O’Reilly et al., 2012), based on a biologically-detailed model of spike timing dependent plasticity (STDP) (Urakubo et al., 2008). We showed that when activated by realistic Poisson spike trains, this STDP model produces a nonmonotonic learning curve similar to that of the BCM model (Bienenstock, Cooper, & Munro, 1982), which results from competing calcium-driven postsynaptic plasticity pathways (Cooper & Bear, 2012; Shouval et al., 2002). As in the BCM framework, we hypothesized that the threshold crossover point in this nonmonotonic curve moves dynamically. If this happens on the alpha timescale (Lim et al., 2015), then the threshold can reflect the prediction phase of activity, producing a net error-driven learning rule based on a subsequent calcium signal reflecting the outcome state. The resulting learning mechanism naturally supports a combination of both BCM-style Hebbian learning and error-driven learning, where the BCM component acts as a kind of regularizer or bias, similar to weight decay (O’Reilly & Munakata, 2000; O’Reilly et al., 2012).
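To make the nonmonotonic curve and its moving threshold concrete, here is a minimal Python sketch of a piecewise-linear, BCM-like learning function. The specific piecewise form and the reversal parameter `d_rev = 0.1` are assumptions modeled on the XCAL function described for Leabra (O’Reilly et al., 2012). Using the prediction-phase coproduct as the threshold is likewise a simplification for illustration.

```python
def xcal(activity, threshold, d_rev=0.1):
    """Piecewise-linear, BCM-like nonmonotonic learning function (assumed form).

    Returns a negative value (depression) for activity below the threshold,
    a positive value (potentiation) above it, and zero at activity == 0.
    """
    if activity > threshold * d_rev:
        return activity - threshold
    return -activity * (1.0 - d_rev) / d_rev


def error_driven_dwt(x_minus, y_minus, x_plus, y_plus, lrate=0.1):
    """Assumed usage: the prediction phase sets the floating threshold,
    and the outcome phase provides the driving signal."""
    return lrate * xcal(x_plus * y_plus, x_minus * y_minus)
```

With the threshold tied to the prediction phase, an outcome stronger than predicted yields potentiation and a weaker one yields depression, which is the error-driven behavior the text describes.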

Thus, remarkably, the pulvinar and associated thalamocortical circuitry appears to provide precisely the necessary ingredients to support predictive error-driven learning, according to the above analysis. Interestingly, although S. M. Sherman and Guillery (2006) did not propose a predictive learning mechanism as just described, they did speculate about a potential role for this circuit in motor forward-model learning and the predictive remapping phenomenon (S. M. Sherman & Guillery, 2011; Usrey & Sherman, 2018). In addition, Pennartz, Dora, Muckli, and Lorteije (2019) also suggested that the pulvinar may be involved in predictive learning, but within the explicit error-coding framework and not involving the detailed aspects of the above-described circuitry.

It bears emphasizing the synergy between the various considerations above for the benefits of the pause in 5IB firing between bursts. First, this pause is critical for creating the time window when the predictive network is representing and communicating the prediction state, without influence from the outcome state. Further, it creates the temporal difference in activation state in the pulvinar between prediction and outcome, which is needed for driving error-driven learning. Thus, for both the 6CT and pulvinar layers, the periodic pausing of 5IB neurons is essential for creating the predictive learning dynamic. Interestingly, by these principles, the lack of such burst / pause dynamics in the driver inputs to first-order sensory thalamus areas such as the LGN and MGN (S. M. Sherman & Guillery, 2006) means that these areas should not be directly capable of error-driven predictive learning. This is consistent with a number of models and theoretical proposals suggesting that primary sensory areas may learn predominantly through Hebbian-style self-organizing mechanisms (Bednar, 2012; K. D. Miller, 1994). Nevertheless, primary sensory areas do receive “collateral” error signals from the pulvinar (Shipp, 2003), which could provide some useful indirect error-driven learning signals.

Note that this form of temporal-difference learning signal is distinct from the widely-used TD (temporal-difference) model in reinforcement learning (Sutton & Barto, 1998), which is scalar, and applies to reward expectations, not sensory predictions (although see Gardner, Schoenbaum, & Gershman, 2018 and Dayan, 1993 for potential connections between these two forms of prediction error). Finally, as we discuss later, this proposed predictive role for the pulvinar is compatible with the more widely-discussed role it may play in attention (Bender & Youakim, 2001; Fiebelkorn & Kastner, 2019; LaBerge & Buchsbaum, 1990; Saalmann & Kastner, 2011; Snow, Allen, Rafal, & Humphreys, 2009; Zhou et al., 2016). Indeed, we think these two functions are synergistic (i.e., you predict what you attend, and vice-versa; Richter & de Lange, 2019), and have initial computational results consistent with this idea.

Predictive Learning of Temporal Structure in a Probabilistic Grammar

To illustrate and test the predictive learning abilities of this biologically based model, we first ran a classical test of sequence learning (Cleeremans & McClelland, 1991; Reber, 1967) that has been explored using simple recurrent networks (SRNs) (J. L. Elman, 1990; Jordan, 1989). The biologically based model was implemented using the Leabra algorithm, which is a comprehensive framework that uses conductance-based point neuron equations, inhibitory competition, bidirectional connectivity, and the biologically plausible temporal difference learning mechanism described above (O’Reilly, 1996, 1998; O’Reilly et al., 2016; O’Reilly & Munakata, 2000; O’Reilly et al., 2012). Leabra serves as a model of the bidirectionally connected processing in the cortical superficial layers, and has been used to simulate a large number of different cognitive neuroscience phenomena. It is described in the Appendix, which also provides a detailed mapping between the SRN and our biological model.

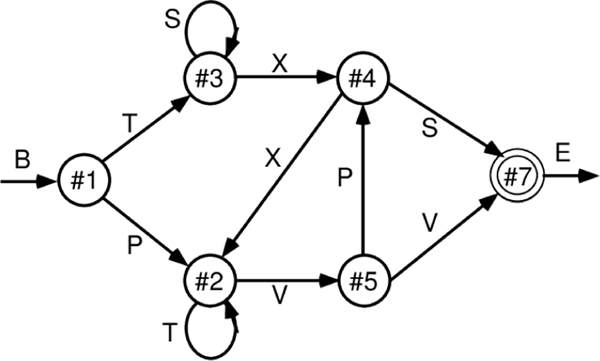

As shown in Figure 3, sequences were generated according to a finite state automaton (FSA) grammar, as used in implicit sequence learning experiments by Reber (1967). Each node has a 50% random branching to two different other nodes, and the labels generated by node transitions are locally ambiguous (except for the B=begin and E=end states). Thus, integration over time and across many iterations is required to infer the systematic underlying grammar. It is a reasonably challenging task for SRNs and people to learn, and it provides an important validation of the power of these predictive learning mechanisms. Given the random branching, accurately predicting the specific path taken is impossible, but we can score the model’s output as correct if it activates either or both of the possible branches for each state.
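The sequence generation and scoring just described can be sketched in Python as follows. The transition table is the standard Reber grammar as commonly used in the SRN literature and is assumed here rather than read from Figure 3. The state numbering, function names, and the rule that a prediction must not include any invalid label are also illustrative assumptions.

```python
import random

# Assumed transition table: state -> [(label, next_state), (label, next_state)]
REBER = {
    0: [("T", 1), ("P", 2)],
    1: [("S", 1), ("X", 3)],
    2: [("T", 2), ("V", 4)],
    3: [("X", 2), ("S", 5)],
    4: [("P", 3), ("V", 5)],
}
END_STATE = 5


def generate_sequence(rng):
    """Return (labels, valid) for one pass through the grammar.

    valid[i] is the set of labels that could legally occur at position i.
    """
    labels, valid = ["B"], [{"B"}]
    state = 0
    while state != END_STATE:
        options = REBER[state]
        valid.append({lab for lab, _ in options})
        lab, state = rng.choice(options)  # 50% branching between two links
        labels.append(lab)
    labels.append("E")
    valid.append({"E"})
    return labels, valid


def prediction_correct(predicted, valid):
    """Correct if either or both valid labels are active and no others
    (excluding invalid labels is an assumption of this sketch)."""
    return len(predicted) > 0 and predicted <= valid
```

For example, `generate_sequence(random.Random(0))` yields one letter sequence together with the valid label set at each step, which is what the scoring function needs.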

Figure 3:

Finite state automaton (FSA) grammar used in implicit sequential learning experiments (Reber, 1967) and in early simple recurrent networks (SRNs) (Cleeremans & McClelland, 1991). It generates a sequence of letters according to the link transitioned between state nodes, where each outgoing link to another node has a 50% probability of being selected. Each letter (except for the B=begin and E=end) appears at 2 different points in the grammar, making them locally ambiguous. This combination of randomness and ambiguity makes it challenging for a learning system to infer the true underlying structure of the grammar.

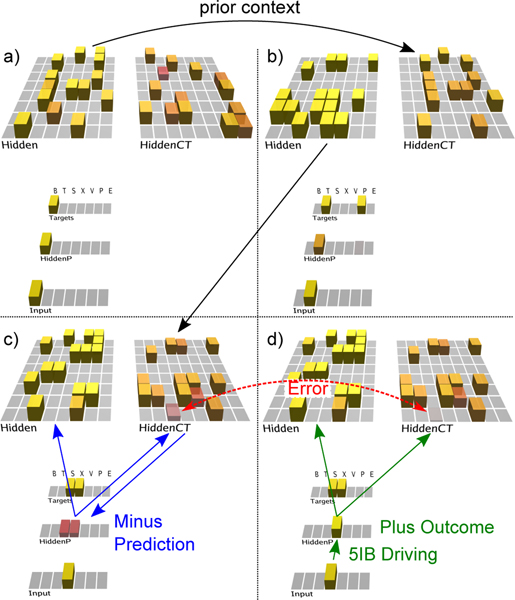

The model (Figure 4) required around 20 epochs of 25 sequences through the grammar to learn it to the point of making no prediction errors for 5 epochs in a row (which guarantees that it had completely learned the task). This model is available in the standard emergent distribution, at https://github.com/emer/leabra/tree/master/examples/deepfsa. A few steps through a sequence are shown in the figure, illustrating how the CT context layer, which drives the P pulvinar layer prediction, represents the information present on the previous alpha cycle time step. Thus, the network is attempting to predict the current Input state, which then drives the pulvinar plus phase at the end of each alpha cycle, as shown in the last panel. On each trial, the difference between plus and minus phases locally over each cortical neuron drives its synaptic weight changes, which accumulate over trials to allow accurate prediction of the sequences, to the extent possible given their probabilistic nature.
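The stopping criterion mentioned above (no prediction errors for 5 epochs in a row) can be expressed as a small helper. This is a hypothetical sketch; the function name and the error counts in the example are made up for illustration and are not results from the model.

```python
def epochs_to_criterion(errors_per_epoch, n_in_a_row=5):
    """Return the 1-based epoch at which the criterion is first met, or None."""
    run = 0
    for epoch, n_err in enumerate(errors_per_epoch, start=1):
        run = run + 1 if n_err == 0 else 0  # reset the run on any error
        if run >= n_in_a_row:
            return epoch
    return None


# Made-up error counts per epoch, purely for illustration.
example = [3, 1, 0, 0, 1, 0, 0, 0, 0, 0]
criterion_epoch = epochs_to_criterion(example)
```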

Figure 4:

Predictive learning model applied to the FSA grammar shown in previous figure. The first three panels (a, b, c) show the prediction state (end of the minus phase, e.g., the first 75 ms of an alpha cycle) of the trained model on the first three steps of the sequence ‘BTX’ (plus phases also occurred, but are not shown). The last panel (d) shows the plus phase after the third step. The Input layer provides the 5IB drivers for the corresponding HiddenP pulvinar layer, so the plus phase is always based on the specific randomly-selected path taken. The Targets layer is purely for display, showing the two valid possible labels that could have been predicted. To track learning, the model’s prediction is scored as accurate if either or both targets are activated. Computationally, the model is similar to an SRN, where the CT layer that drives the prediction over the pulvinar encodes the activation state from the previous time step (alpha cycle), due to the phasic bursting of the 5IB neurons that drive CT updating. Note how the CT layer in b) reflects the Hidden activation state in a), and likewise for c) reflecting b). This is evident because we’re using one-to-one connectivity between Hidden and HiddenCT layers (which works well in general, along with full lateral connectivity within the CT layer). Thus, even though the correct answer is always present on the Input layer for each step, the CT layer is nevertheless attempting to predict this Input based on the information from the prior time step. a) In the first step, the B label is unambiguous and easily predicted (based on prior E context). b) In the 2nd step, the network correctly guesses that the T label will come next, but there is a faint activation of the other P alternative, which is also activated sometimes based on prior learning history and associated minor weight tweaks. c) In the 3rd step, both S and X are equally predicted. 
d) In the plus phase, only the Input pattern (‘X’ on this trial) drives HiddenP activations, and the projections from pulvinar back to the cortex convey both the minus-phase prediction and plus-phase actual input. You can see one HiddenCT neuron, just above the arrow, visibly changes its activation as a result (and all neurons experience smaller changes), and learning in all these cortical (Hidden) layer neurons is a function of their local temporal difference between minus and plus phases.

Predictive Learning of Object Categories in IT Cortex

Now we describe a large-scale, systems-neuroscience implementation of the proposed thalamocortical predictive error-driven learning framework, in a model of visual predictive learning (Figure 5). Our second major objective, and a critical question for predictive learning, is determining whether the model can develop high-level, abstract ways of representing the raw sensory inputs, while learning from nothing but predicting these low-level visual inputs. We showed the model brief movies of 156 3D object exemplars drawn from 20 different basic-level categories (e.g., car, stapler, table lamp, traffic cone, etc.) selected for their overall shape diversity from the CU3D-100 dataset (O’Reilly et al., 2013). The objects moved and rotated in 3D space over 8 movie frames, where each frame was sampled at the alpha frequency (Figure 5b). Because the motion and rotation parameters were generated at random on each sequence, this dataset consists of 512,000 unique images, and there is no low-dimensional object category training signal, so the usual concerns about overfitting and training vs. testing sets are not applicable: our main question is what kind of representations self-organize as a result of this purely visual experience.

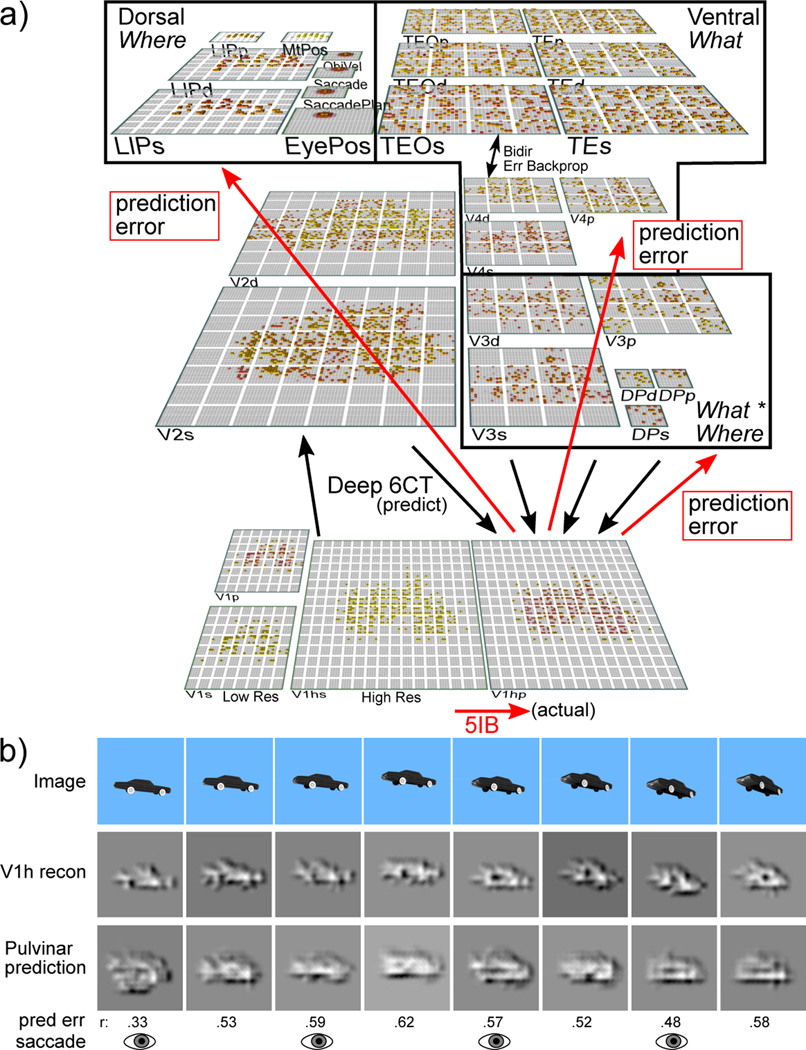

Figure 5:

a) The What-Where-Integration (WWI) deep predictive learning model. The dorsal Where pathway learns first, using easily-abstracted spatial blobs, to predict object location based on prior motion, visual motion, and saccade efferent copy signals. This drives strong top-down inputs to lower areas with accurate spatial predictions, leaving the residual error concentrated on What and What * Where integration. The V3 and DP (dorsal prelunate) areas constitute the What * Where integration pathway, binding features and locations. V4, TEO, and TE are the What pathway, learning abstracted object category representations, which also drive strong top-down inputs to lower areas. Suffixes: s = superficial, d = deep, p = pulvinar. b) Example sequence of 8 alpha cycles that the model learned to predict, with the reconstruction of each image based on the V1 gabor filters (V1h recon), and model-generated prediction (correlation r prediction error shown). The low resolution and reconstruction distortion impair visual assessment, but r values are well above the r’s for each V1 state compared to the previous time step (mean = .38, min of .16 on frame 4; see Appendix for more analysis). Eye icons indicate when a saccade occurred.

There were also saccadic eye movements every other frame, introducing an additional, realistic, predictive-learning challenge. An efferent copy signal enabled full prediction of the effects of the eye movement, and allowed the model to capture the signature predictive remapping phenomenon (Cavanagh, Hunt, Afraz, & Rolfs, 2010; Duhamel, Colby, & Goldberg, 1992; Neupane, Guitton, & Pack, 2017). The only learning signal available to the model was the prediction error generated by the temporal difference between what it predicted to see in the V1 input in the next frame and what was actually seen.

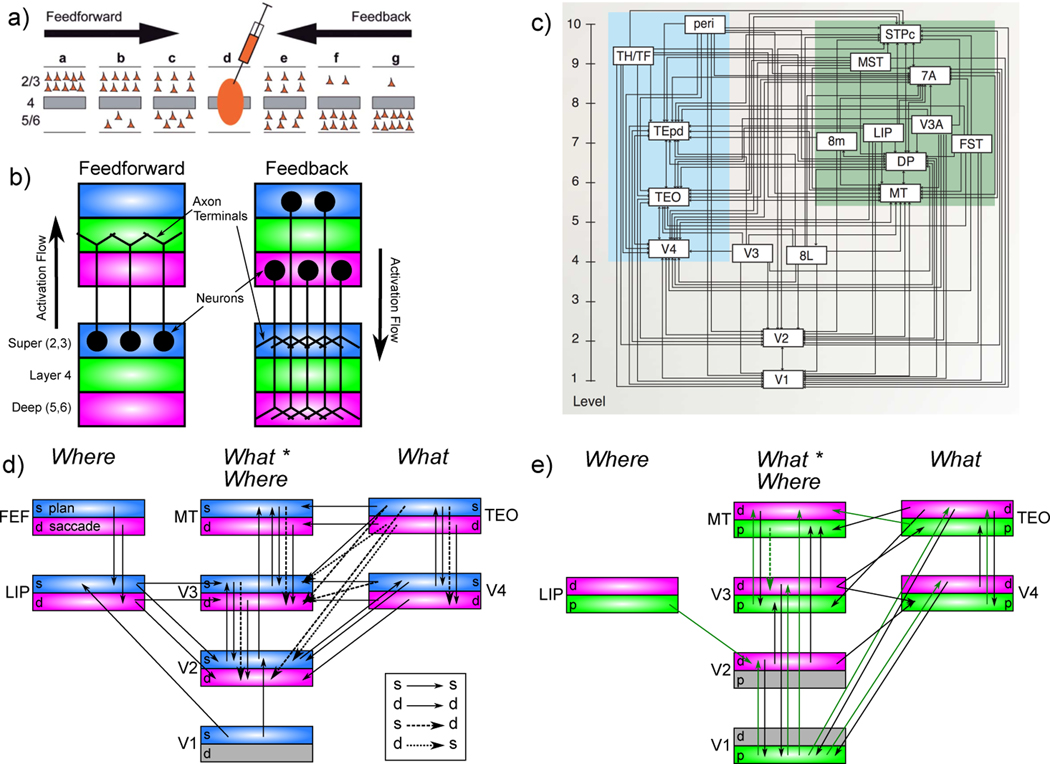

As described in detail in the Appendix, our model was constructed to capture critical features of the visual system, including the major division between a dorsal Where and ventral What pathway (Ungerleider & Mishkin, 1982), and the overall hierarchical organization of these pathways derived from detailed connectivity analyses (Felleman & Van Essen, 1991; Markov, Ercsey-Ravasz, et al., 2014; Markov, Vezoli, et al., 2014; Rockland & Pandya, 1979). In addition to these biological constraints, we conducted extensive exploration of the connectivity and architecture space, and found a remarkable convergence between what worked functionally and the known properties of these pathways (O’Reilly et al., 2017). For example, the feedforward pathway has projections from lower-level superficial layers to superficial layers of higher levels, while feedback originates in both the superficial and deep layers and projects back to both (Felleman & Van Essen, 1991; Rockland & Pandya, 1979). Also, consistent with the core features of the pulvinar pathways discussed above, deep layer predictive (6CT) inputs originate in higher levels, while driver (5IB) inputs originate in lower levels. For simplicity we organized the model layers in terms of these driver inputs, whereas the topographic organization of pulvinar in the brain is organized more according to the 6CT projection loops (Shipp, 2003).

Another important set of parameters is the strength of deep-layer recurrent projections, which influences the timescale of temporal integration, producing a simple biologically based version of slow feature analysis (Foldiak, 1991; Wiskott & Sejnowski, 2002). We followed the biological data suggesting that recurrence increases progressively up the visual hierarchy (Chaudhuri, Knoblauch, Gariel, Kennedy, & Wang, 2015). It was essential that the Where pathway learn first, consistent with extant data (Bourne & Rosa, 2006; Kiorpes, Price, Hall-Haro, & Anthony Movshon, 2012), including early pathways interconnecting LIP and pulvinar (Bridge, Leopold, & Bourne, 2016), and a rare asymmetric pathway, from V1 to LIP (Markov, Ercsey-Ravasz, et al., 2014), providing a direct short-cut for high-level spatial representations in LIP. Results from various informative model architecture and parameter manipulations are discussed below after the primary results from the standard intact model.

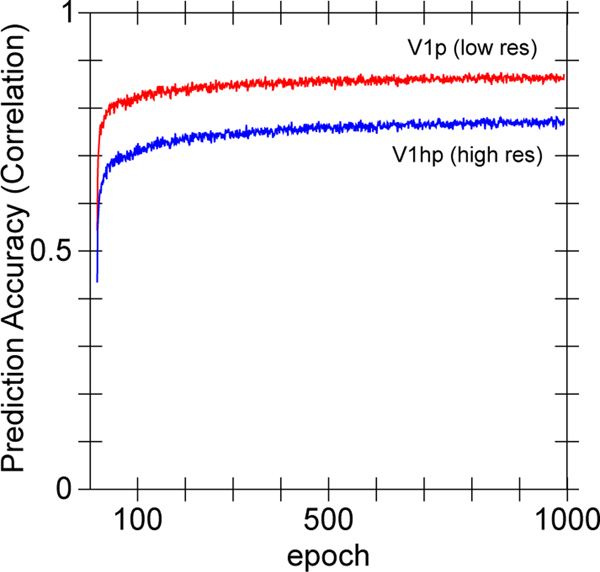

Learning curves and other model details are shown in the Appendix. We have also implemented a full de-novo replication of the model in a new modeling framework, which also replicated the results shown here. Furthermore, much of the model was originally developed in the context of a set of object-like patterns generated systematically from a set of simple line features (O’Reilly et al., 2017), and the parameters that work best in terms of combinatorial generalization on those patterns also worked well for these 3D objects. Thus, we are confident that the model’s learning behavior is not idiosyncratic to the particular set of objects used here, and represents a general capacity of the system to develop abstract representations through predictive learning. Other ongoing work, to be reported in an upcoming publication, is applying the model to prediction of auditory speech inputs, which have a natural temporal structure, and is finding similar results in terms of learning higher-level abstract encoding of these auditory signals.

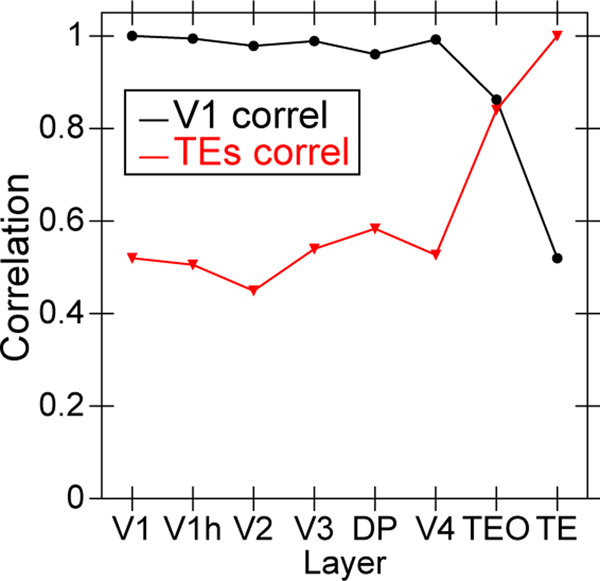

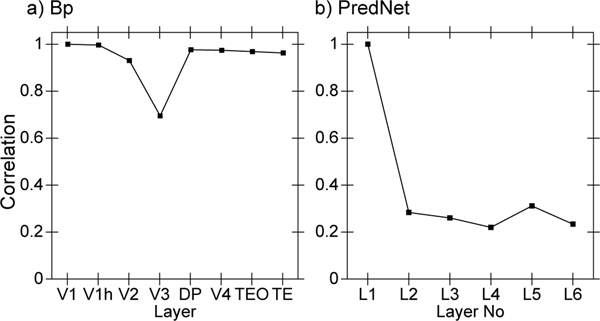

To directly address the question of whether the hierarchical structure of the network supports the development of abstract, higher-level representations that go beyond the information present in the visual inputs, we applied a second-order similarity measure across the object-level similarity matrices computed at each layer in the network (Figure 6). This shows the extent to which the similarity matrix across objects in one layer is itself similar to the object similarity matrix in another layer, in terms of a correlation measure across these similarity matrices. Critically, this measure does not depend on any kind of subjective interpretation of the learned representations — it just tells us whether whatever similarity structure was learned differs across the layers. Starting from either V1 compared to all higher layers, or the highest TE layer compared to all lower layers, we found a consistent pattern of progressive emergence of the object categorization structure in the upper IT pathway (TEO, TE).
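A minimal Python sketch of such a second-order similarity measure is given below. It assumes that each layer's activity is available as one activation vector per object, that similarity is a Pearson correlation, and that only the off-diagonal upper triangle of each similarity matrix is compared. These details and all function names are illustrative assumptions; the exact procedure is in the Appendix.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation between two equal-length sequences."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / sqrt(va * vb)


def similarity_matrix(patterns):
    """Object-by-object correlation matrix for one layer."""
    return [[pearson(p, q) for q in patterns] for p in patterns]


def upper_triangle(matrix):
    """Off-diagonal upper-triangle entries, flattened row by row."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]


def second_order_similarity(patterns_a, patterns_b):
    """Correlation between the similarity structures of two layers."""
    sim_a = upper_triangle(similarity_matrix(patterns_a))
    sim_b = upper_triangle(similarity_matrix(patterns_b))
    return pearson(sim_a, sim_b)
```

Because the measure compares similarity matrices rather than raw activity, the two layers may have different numbers of units, as long as they are probed with the same objects in the same order.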

Figure 6:

Emergence of abstract category structure over the hierarchy of layers, comparing similarity structure in each layer vs that present in V1 (black line) or in TE (red line). Both cases, which are roughly symmetric, clearly show that IT layers (TEO, TE) progressively differentiate from raw input similarity structure present in V1, and, critically, that the model has learned structure beyond that present in the input. This is the simplest, most objective summary statistic showing this progressive emergence of structure, while subsequent figures provide a more concrete sense of what kinds of representations actually developed.

This analysis confirms that indeed the IT category structure is significantly different from that present at the level of the V1 primary visual input. Thus the model, despite being trained only to generate accurate visual input-level predictions, has learned to represent these objects in an abstract way that goes beyond the raw input-level information. We further verified that at the highest IT levels in the model, a consistent, spatially-invariant representation is present across different views of the same object (e.g., the average correlation across frames within an object was .901).

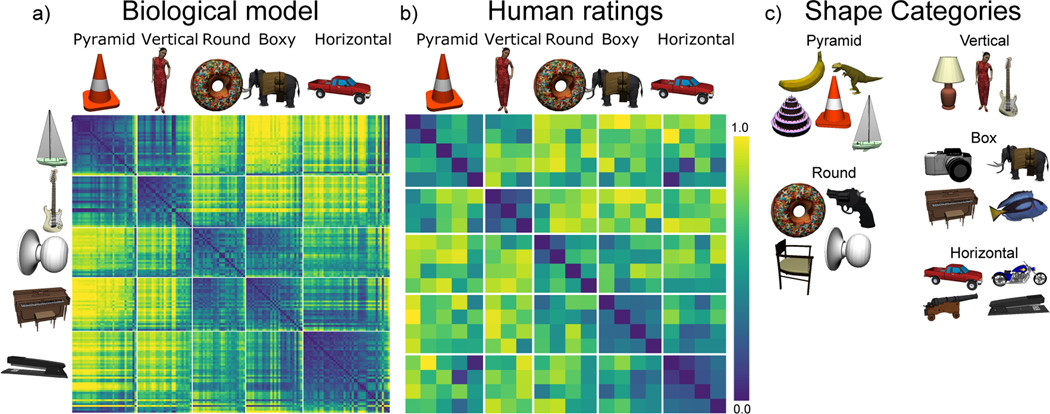

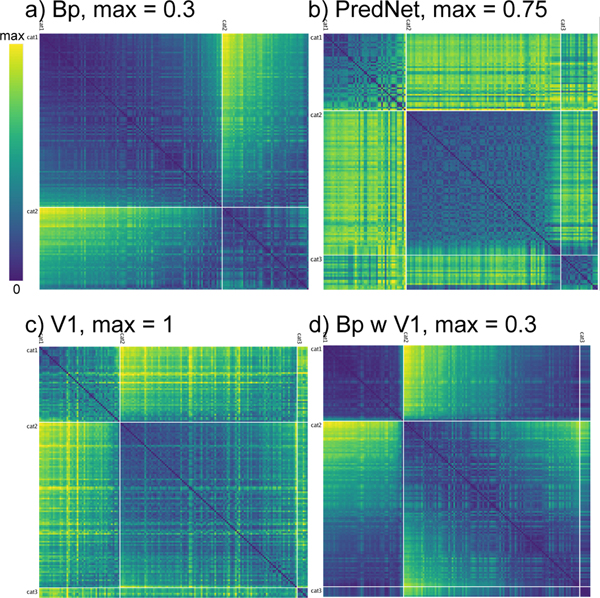

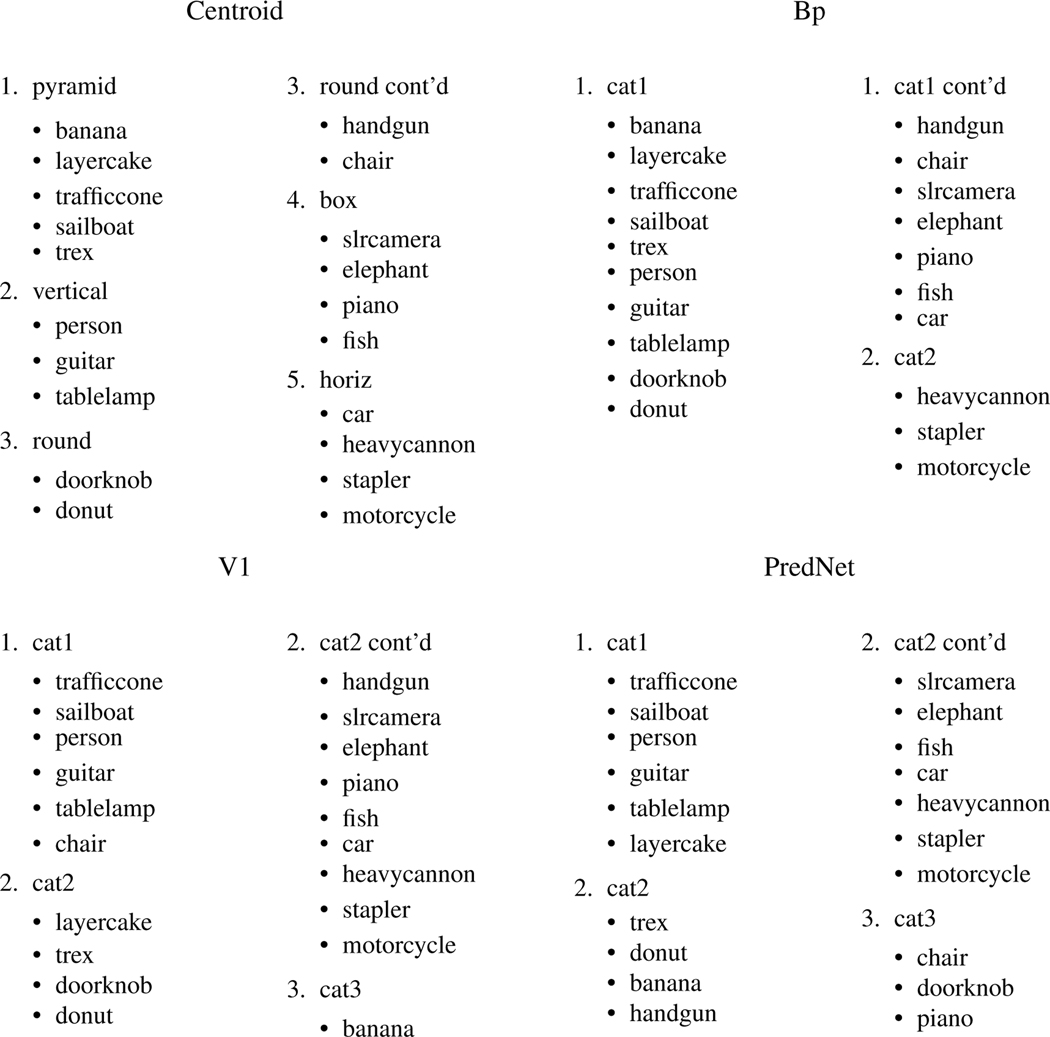

To better understand the nature of these learned representations, Figure 7 shows a representational similarity analysis (RSA) on the activity patterns at the highest IT layer (TE), which reveals the explicit categorical structure of the learned representations (Cadieu et al., 2014; Kriegeskorte, Mur, & Bandettini, 2008). Specifically, we found that the highest IT layer (TE) produced a systematic organization of the 156 3D objects into 5 categories. In our admittedly subjective judgment, these categories seemed to correspond to the overall shape of the objects, as shown by the object exemplars in the figure (pyramid-shaped, vertically-elongated, round, boxy / square, and horizontally-elongated). Furthermore, the basic-level categories were subsumed within these broader shape-level categories, so the model appears to be sensitive to the coherence of these basic-level categories as well, but apparently their shapes were not sufficiently distinct between categories to drive differentiated TE-level representations for each such basic-level category.

Figure 7:

a) Category similarity structure that emerged in the highest layer, TE, of the biologically based predictive learning model, showing dissimilarity (1-correlation) of the TE representation for each 3D object against every other 3D object (156 total objects). Blue cells have high similarity. The model has learned block-diagonal clusters or categories of high-similarity groupings, contrasted against dissimilar off-diagonal other categories. Clustering maximized average within – between dissimilarity (see Appendix), and clearly corresponded to the shown shape-based categories, with exemplars from each category shown. Also, all items from the same basic-level object categories (N=20) are reliably subsumed within learned categories. b) Human similarity ratings for the same 3D objects, presented with the V1 reconstruction (see Fig 5b) to capture coarse perception in the model, aggregated by 20 basic-level categories (156 × 156 matrix was too large to sample densely experimentally). Each cell is 1 - proportion of times a given object pair was rated more similar than another pair (see Appendix). The human matrix shares the same centroid categorical structure as the model (confirmed by permutation testing and agglomerative cluster analysis, see Appendix), indicating that human raters used the same shape-based category structure. c) One object from each of the 20 basic level categories, organized into the shape-based categories. The Vertical, Box and Horizontal categories are fairly self-evident and the model was most consistent in distinguishing those, along with subsets of the Pyramid (layer-cake, traffic-cone, sailboat) and Round (donut, doorknob) categories, while banana, trex, chair, and handgun were more variable.
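For readers who want a concrete sense of the within vs. between contrast used to evaluate a clustering, here is a hedged Python sketch. It takes a precomputed dissimilarity matrix (such as 1 - correlation) and a category label per object, and returns mean between-category dissimilarity minus mean within-category dissimilarity. The sign convention, function name, and toy values are assumptions of this sketch; the exact measure is defined in the Appendix.

```python
def within_between_contrast(dissim, labels):
    """Mean between-category minus mean within-category dissimilarity.

    dissim: square, symmetric dissimilarity matrix (list of lists)
    labels: category label for each row/column of dissim
    Larger values indicate tighter, better separated categories.
    """
    within, between = [], []
    n = len(labels)
    for i in range(n):
        for j in range(i + 1, n):  # each unordered pair once, no diagonal
            (within if labels[i] == labels[j] else between).append(dissim[i][j])
    return sum(between) / len(between) - sum(within) / len(within)


# Toy 4-object example with two clear clusters (values are made up).
toy = [
    [0.0, 0.1, 0.9, 0.9],
    [0.1, 0.0, 0.9, 0.9],
    [0.9, 0.9, 0.0, 0.2],
    [0.9, 0.9, 0.2, 0.0],
]
good = within_between_contrast(toy, [0, 0, 1, 1])
bad = within_between_contrast(toy, [0, 1, 0, 1])
```

A clustering procedure can then search over label assignments for the one that maximizes this contrast.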

Given that the model only learns from a passive visual experience of the objects, it has no access to any of the richer interactive multi-modal information that people and animals would have. Furthermore, as evident in Figure 5b, the relatively low resolution of the V1 layers (required to make the model tractable computationally) means that complex visual details are not reliably encoded (and even so, are not generally reliable across object exemplars), such that the overall object shape is the most salient and sensible basis for categorization for this model.

Although these object shape categories appeared sensible to us, we ran a simple experiment to test whether a sample of 30 human participants would use the same category structure in evaluating the pairwise similarity of these objects. Figure 7b shows the results, confirming that indeed this same organization of the objects emerged in their similarity judgments. These judgments were based on the V1 reconstruction as shown in Figure 5b to capture the model’s coarse-grained perception; see Appendix for methods and further analysis.

The progressive emergence of increasingly abstract category structure across visual areas, evident in Figure 6, has been investigated in recent comparisons between monkey electrophysiological recordings and deep convolutional neural networks (DCNNs), which provide a reasonably good fit to the overall progressive pattern of increasingly categorical organization (Cadieu et al., 2014). However, these DCNNs were trained on large datasets of human-labeled object categories, and it is perhaps not too surprising that the higher layers closer to these category output labels exhibited a greater degree of categorical organization. In contrast, because the only source of learning in our model comes from prediction errors over the V1 input layers, the graded emergence of an object hierarchy here reflects a truly self-organizing learning process.

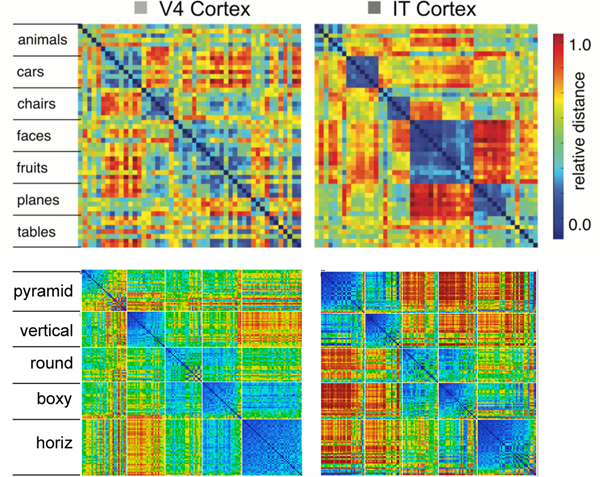

Figure 8 compares the similarity structures in layers V4 and IT in macaque monkeys (Cadieu et al., 2014) with those in corresponding layers in our model. In both the monkeys and our model, the higher IT layer builds upon and clarifies the noisier structure that is emerging in the earlier V4 layer, showing that our model replicates the essential qualitative hierarchical progression in the brain. As noted, we would not expect our model to exactly replicate the detailed object-specific similarity structure found in macaques, due to the impoverished nature of our model’s experience, so this comparison remains qualitative in terms of the respective differences between V4 and IT in each model, rather than a direct comparison of the similarity structure between corresponding layers in the model and the macaque. In the future, when we can scale up our model and tune the attentional processing dynamics necessary to deal with cluttered visual scenes, we will be able to train our model on the same images presented to the macaques, and can provide this more direct comparison.

Figure 8:

Comparison of progression from V4 to IT in macaque monkey visual cortex (top row, from Cadieu et al., 2014) versus same progression in model (replotted using comparable color scale). Although the underlying categories are different, and the monkeys have a much richer multi-modal experience of the world to reinforce categories such as foods and faces, the model nevertheless shows a similar qualitative progression of stronger categorical structure in IT, where the block-diagonal highly similar representations are more consistent across categories, and the off-diagonal differences are stronger and more consistent as well (i.e., categories are also more clearly differentiated). Note that the critical difference in our model versus those compared in Cadieu et al. 2014 and related papers is that they explicitly trained their models on category labels, whereas our model is entirely self-organizing and has no external categorical training signal.

Finally, we did not use analyses based on decoding techniques, because with high-dimensional distributed neural representations, it is generally possible to decode many different features that are not otherwise compactly and directly represented (Fusi, Miller, & Rigotti, 2016). In preliminary work using decoding in the context of the simpler feature-based input patterns, we indeed found that decoding was not a very sensitive measure of the differentiation of representations across layers, which is so clearly evident in Figure 6. Thus, as advocates of the RSA approach have argued, measuring similarity structure evident in the activity patterns over a given layer generally provides a clearer picture of what that layer is explicitly encoding (Kriegeskorte et al., 2008).

In summary, the model learned an abstract category organization that reflects the overall visual shapes of the objects as judged by human participants, in a way that is invariant to the differences in motion, rotation, and scaling that are present in the V1 visual inputs. We are not aware of any other model that has accomplished this signature computation of the ventral What pathway in a purely self-organizing manner operating on realistic 3D visual objects, without any explicit supervised category labels. Furthermore, our model does this using a learning algorithm directly based on detailed properties of the underlying biological circuits in this pathway, providing a coherent overall account.

Backpropagation Comparison Models

To help discern some of the factors that contribute to the categorical learning in our model, and provide a comparison with more widely-used error backpropagation models, we tested a backpropagation-based (Bp) version of the same What vs. Where architecture as our biologically based predictive error model, and we also tested a standard PredNet model (Lotter et al., 2016) with extensive hyperparameter optimization (see Appendix). Due to the constraints of backpropagation, we had to eliminate any bidirectional connectivity loops in the Bp version, but we were able to retain a form of predictive learning by configuring the V1p pulvinar layer as the final target output layer, with the target being the next visual input relative to the current V1 inputs.
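The predictive target arrangement for the Bp version can be illustrated with a short Python sketch that pairs each frame of a sequence with the frame that follows it. The function name and placeholder frames are hypothetical, and how the actual comparison models handled sequence boundaries is not specified here.

```python
def next_frame_pairs(frames):
    """Pair each frame with its successor as (input, target)."""
    return list(zip(frames[:-1], frames[1:]))


# Toy 8-frame sequence; strings stand in for V1 input patterns.
sequence = [f"frame{t}" for t in range(8)]
pairs = next_frame_pairs(sequence)
```

An 8-frame movie therefore yields 7 input-target pairs, since the final frame has no successor within the sequence.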

Figure 9 shows the same second-order similarity analysis as Figure 6, to determine the extent to which these comparison networks also developed more abstract representations in the higher layers that diverge from the similarity structure present in the lowest layers. According to this simple objective analysis, they did not — the higher layers showed no significant, progressive divergence in their similarity structure. The PredNet model did show a larger difference between the first layer and the rest of the layers, due to the subsequent layers encoding errors while the first layer has a positive representation of the image, but there was no progressive difference beyond that up into the higher layers.

Figure 9:

Similarity of similarity structure across layers for the comparison backprop models, comparing each layer to the first layer. a) Backpropagation (Bp) model with the same What / Where structure as the biological model. Unlike the biologically based model (Figure 6) the higher IT layers (TE, TEO) do not diverge significantly from the similarity structure present in V1, indicating that the model has not developed abstractions beyond the structure present in the visual input. Layer V3 is most directly influenced by spatial prediction errors, so it differs from both in strongly encoding position information. b) PredNet model, which has 6 layers. Layers 2–6 diverge from layer 1, but there is no progressive change in the higher layers as we see in our model moving from V4 to TEO. The divergence in correlation starting at layer 2 is likely due to the fact that higher layers only encode errors, not stimulus-driven positive representations of the input. Aside from this large distinction (which is inconsistent with the similarity in neural coding seen in actual V1 and V2 recordings), there is no evidence of a cumulative development of abstraction in higher layers.

Next, we examined the RSA matrices for the highest (TE) layer in the comparison models, also in comparison with the same for the V1 layer (Figure 10). This shows that the TE layer in the Bp model formed a simple binary category structure overall, which is similar to the RSA for the V1 input layer. It is also important to emphasize that the scales on these figures are different (as shown in their headers), such that these comparison models had much less differentiated representations overall. Similar results were found in the PredNet model. Because existing work with these models has typically relied on additional supervised learning and decoder-based analyses (which are essentially equivalent to an additional layer of supervised learning), these RSA-based analyses provide an important, more sensitive way of determining what they learn purely through predictive learning.

Figure 10:

a) Best-fitting category similarity for TE layer of the backpropagation (Bp) model with the same What / Where structure as the biological model. Only two broad categories are evident, and the lower max distance (0.3 vs. 1.5 in biological model) means that the patterns are much less differentiated overall. b) Best-fitting similarity structure for the PredNet model, in the highest of its layers (layer 6), which is more differentiated than Bp (max = 0.75) but also less cleanly similar within categories (i.e., less solidly blue along the block diagonal), and overall follows a broad category structure similar to V1. c) The best fitting V1 structure, which has 2 broad categories and banana is in a third category by itself. The lack of dark blue on the block diagonal indicates that these categories are relatively weak, and every item is fairly dissimilar from every other. d) The Bp TE similarity values from panel a shown in the same ordering as V1 from panel c, demonstrating how the similarity structure has not diverged very much, consistent with the results shown in Figure 9 — the within – between contrast differences are 0.0838 for panel a and 0.0513 for d — see Appendix for details.

These results show that the additional biologically derived properties in our model are playing a critical role in the development of abstract categorical representations that go beyond the raw visual inputs. These properties include: excitatory bidirectional connections, inhibitory competition, and an additional Hebbian form of learning that serves as a regularizer (similar to weight decay) on top of predictive error-driven learning (O’Reilly, 1998; O’Reilly & Munakata, 2000). Each of these properties could promote the formation of categorical representations. Bidirectional connections enable top-down signals to consistently shape lower-level representations, creating significant attractor dynamics that cause the entire network to settle into discrete categorical attractor states. Another indication of the importance of bidirectional connections is that a greedy layer-wise pretraining scheme, consistent with a putative developmental cascade of learning from the sensory periphery on up (Bengio, Yao, Alain, & Vincent, 2013; Hinton & Salakhutdinov, 2006; Shrager & Johnson, 1996; Valpola, 2014), did not work in our model. Instead, we found it essential that higher layers, with their ability to form more abstract, invariant representations, interact and shape learning in lower layers right from the beginning.

Furthermore, the recurrent connections within the TEO and TE layers likely play an important role by biasing the temporal dynamics toward longer persistence (Chaudhuri et al., 2015). By contrast, backpropagation networks typically lack these kinds of attractor dynamics, and this could contribute significantly to their relative lack of categorical learning. Hebbian learning drives the formation of representations that encode the principal components of activity correlations over time, which can help more categorical representations coalesce (and results below already indicate its importance). Inhibition, especially in combination with Hebbian learning, drives representations to specialize on more specific subsets of the space.

Ongoing work is attempting to determine which of these properties is essential in this case (perhaps all of them) by systematically introducing some of them into the backpropagation model. This is difficult because full bidirectional recurrent activity propagation, which is essential for conveying error signals top-down in the biological network, is incompatible with the standard efficient form of error backpropagation, and instead requires significantly more computationally intensive and unstable forms of fully recurrent backpropagation (Pineda, 1987; Williams & Zipser, 1992). Furthermore, Hebbian learning requires dynamic inhibitory competition, which is difficult to incorporate within the backpropagation framework.

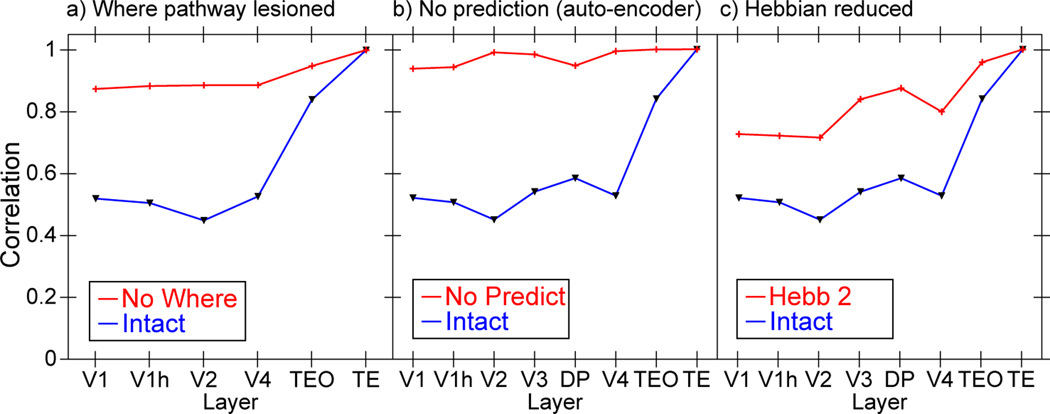

Architecture and Parameter Manipulations

Figure 11 shows just a few of the large number of parameter manipulations that have been conducted to develop and test the final architecture. For example, we hypothesized that separating the overall prediction problem between a spatial Where vs. non-spatial What pathway (Goodale & Milner, 1992; Ungerleider & Mishkin, 1982) would strongly benefit the formation of more abstract, categorical object representations in the What pathway. Specifically, the Where pathway can learn relatively quickly to predict the overall spatial trajectory of the object (and anticipate the effects of saccades), and thus effectively regress out that component of the overall prediction error, leaving the residual error concentrated in object feature information, which can train the ventral What pathway to develop abstract visual categories.
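The "regress out" intuition can be illustrated with a purely linear toy analogy. This is not the model's mechanism: the signals, weights, and the use of least squares are all assumptions made for illustration. A synthetic prediction error is built from a large location-driven component plus a smaller feature-driven component, the location component is removed by a least-squares fit, and the share of the remaining error attributable to features is compared before and after.

```python
import numpy as np

rng = np.random.default_rng(1)
n_samples = 500

# Hypothetical latent signals: spatial trajectory (Where) and object features (What).
location = rng.normal(size=(n_samples, 2))
features = rng.normal(size=(n_samples, 3))

# Synthetic prediction error over 10 units, dominated by the location component.
w_loc = rng.normal(size=(2, 10))
w_feat = rng.normal(size=(3, 10))
total_error = 3.0 * location @ w_loc + features @ w_feat

def explained_fraction(target, regressors):
    """Fraction of the target's sum of squares captured by a least-squares fit."""
    coef, *_ = np.linalg.lstsq(regressors, target, rcond=None)
    resid = target - regressors @ coef
    return 1.0 - np.sum(resid**2) / np.sum(target**2)

# Stand-in for a fast-learning Where pathway: fit and subtract the location part.
coef_loc, *_ = np.linalg.lstsq(location, total_error, rcond=None)
residual_error = total_error - location @ coef_loc

frac_before = explained_fraction(total_error, features)
frac_after = explained_fraction(residual_error, features)
print(f"feature share of error before: {frac_before:.2f}, after: {frac_after:.2f}")
```

In this toy setting, nearly all of the residual error is feature-related once the location component is removed, which is the sense in which the residual could provide a cleaner training signal for a What pathway.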

Figure 11:

Effects of various manipulations on the extent to which TE representations differentiate from V1. For all plots, Intact is the same result shown in Figure 6 from the intact model for ease of comparison (panel a is missing V3 and DP dorsal pathway layers). All of the following manipulations significantly impair the development of abstract TE categorical representations (i.e., TE is more similar to V1 and the other layers). a) Dorsal Where pathway lesions, including lateral inferior parietal sulcus (LIP), V3, and dorsal prelunate (DP). This pathway is essential for regressing out location-based prediction errors, so that the residual errors are concentrated in the feature-encoding errors that train the What pathway. b) Allowing the deep layers full access to current-time information, thus effectively eliminating the prediction demand and turning the network into an auto-encoder. This significantly impairs representation development, and supports the importance of the challenge of predictive learning for developing deeper, more abstract representations. c) Reducing the strength of Hebbian learning by 20% (from 2.5 to 2), demonstrating the essential role played by this form of learning in shaping categorical representations. Eliminating Hebbian learning entirely (not shown) prevented the model from learning anything at all, as it also plays a critical regularization and shaping role in learning.

Figure 11a shows that, indeed, when the Where pathway is lesioned, the formation of abstract categorical representations in the intact What pathway is significantly impaired. We also hypothesized that full predictive learning (about the future), as compared to just encoding and decoding the current state (i.e., an auto-encoder, which is much easier computationally), is also critical for the formation of abstract categorical representations — prediction is a “desirable difficulty” (Bjork, 1994). Figure 11b shows that this was the case. Finally, consistent with our hypothesis that Hebbian learning provides an important bias on learning, Figure 11c shows the impairment associated with reducing this learning bias. The significant reduction in differentiation across all of these manipulations shows that this differentiation property is not a simple consequence of the neural architecture, but rather depends critically on the learning process, unfolding over time with appropriate parameter values and other architectural components. Furthermore, the Bp comparison model shares the same architecture, and does not show the differentiation across layers.

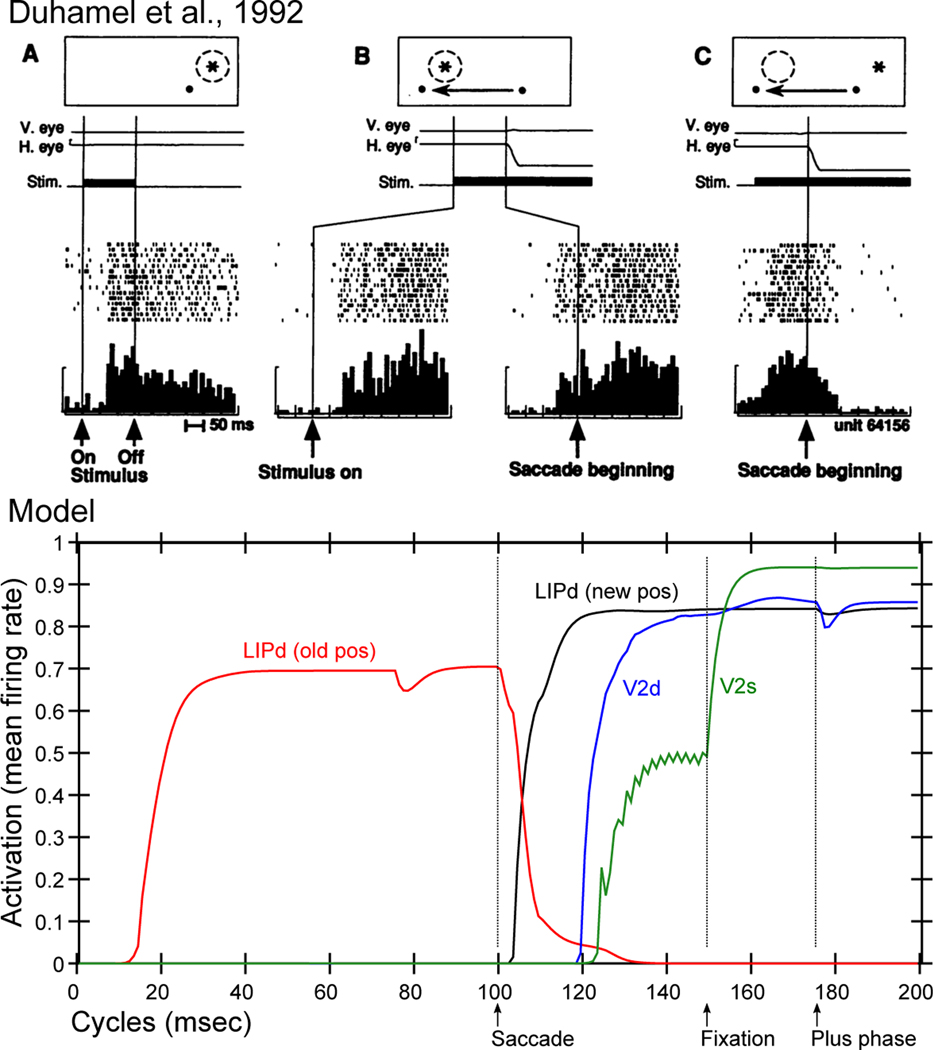

Predictive Behavior

A signature example of predictive behavior at the neural level in the brain is the predictive remapping of visual space in anticipation of a saccadic eye movement (Colby, Duhamel, & Goldberg, 1997; Duhamel et al., 1992; Gottlieb, Kusunoki, & Goldberg, 1998; Marino & Mazer, 2016; Nakamura & Colby, 2002) (Figure 12a). Here, parietal neurons start to fire at the future receptive field location where a currently-visible stimulus will appear after a planned saccade is actually executed. Remapping has also been shown for border ownership neurons in V2 (O’Herron & von der Heydt, 2013) and in area V4 (Neupane, Guitton, & Pack, 2016, 2020). These are examples, we believe, of a predictive process operating throughout the neocortex to predict what will be experienced next. A major consequence of this predictive process is the perception of a stable, coherent visual world despite constant saccades and other sources of visual change.

Figure 12:

Predictive Remapping. top: Original remapping data in LIP from Duhamel et al. (1992). A) shows stimulus (star) response within receptive field (dashed circle) relative to fixation dot (upper right of fixation). B) Just prior to the monkey making a saccade to the new fixation (moving left), the stimulus is turned on in the receptive field location that will be upper right of the new fixation point, and the LIP neuron responds to that stimulus in advance of the saccade completing. The neuron does not respond to the stimulus in that location if the monkey is not about to make a saccade that puts the stimulus within its receptive field (not shown). This is predictive remapping. C) Response to the old stimulus location goes away as the saccade is initiated. bottom: Data from our model, from individual units in LIPd, V2d, and V2s, showing that the LIP deep neurons respond to the saccade first, activating in the new location and deactivating in the old, and this LIP activation goes top-down to V3 and V2 to drive updating there, generally at a longer latency and with less activation, especially in the superficial layers. When the new stimulus appears at the point of fixation (after a 50 ms saccade here), the primed V2s units get fully activated by the incoming stimulus. But the deep neurons are insulated from this superficial input until the plus phase, when the cascade of 5IB firing drives activation of the actual stimulus location into the pulvinar, which then reflects up into all the other layers.

Figure 12b shows that our model exhibits this predictive remapping phenomenon. Specifically, LIP, which is most directly interconnected with the saccade efferent copy signals, is the first to predict the new location, and it then drives top-down activation of lower layers. This top-down dynamic is consistent with the account of predictive remapping given by Wurtz (2008) and Cavanagh et al. (2010), who argue that the key remapping takes place at the high levels of the dorsal stream, which then drive top-down activation of the predicted location in lower areas, instead of the alternative where lower levels remap themselves based on saccade-related signals. The lower-level visual layers are simply too large and distributed to be able to remap across the relevant degrees of visual angle: the extensive lateral connectivity needed to communicate across these areas would be prohibitive.

Neural Data and Predictions

Having tested the computational and functional learning properties of this biologically based predictive learning mechanism, we now return to consider some of the most important neural data of relevance to our hypotheses, beyond that summarized in the introduction, including contrasts with a widely-discussed alternative framework for predictive coding, and some of the extensive data on alpha frequency effects, followed by a discussion of predictions that would clearly test the validity of this framework.

Additional Neuroscience Data

We begin with data relevant to the basic neural-level properties of the framework. First, a central element of the proposed model is the alpha cycle bursting, and subsequent inter-burst pauses, in the 5IB neurons. Direct electrophysiological recording of deep layer neurons shows periodic alpha-scale bursting for continuous tones in awake animals (Luczak et al., 2009, 2013; Sakata & Harris, 2009, 2012). In vitro, a variety of potential mechanisms behind the generation and synchronization of the 5IB bursts driving this alpha cycle have been identified (Connors et al., 1982; Franceschetti et al., 1995; Silva et al., 1991). Furthermore, the pulvinar has been shown to drive alpha-frequency synchronization of cortical activity across areas in awake behaving animals (Saalmann et al., 2012). We review the larger alpha frequency literature in more detail below, but it is critical to emphasize that this alpha bursting dynamic is actually found in awake, behaving animals, because so many other bursting and up / down state phenomena have recently been shown to occur only in anesthetized brains, including bursting in the thalamic TRC neurons.

In contrast to the 5IB bursting, the 6CT neurons exhibit regular spiking behavior (Thomson, 2010; Thomson & Lamy, 2007), providing consistent activation to the pulvinar. Also, they do not have axonal branches that project to other cortical areas: the subpopulation that projects to the pulvinar projects only there and not to other cortical areas (Petrof, Viaene, & Sherman, 2012), whereas there are other layer 6 neurons that do project to other cortical areas. This distinct connectivity is consistent with a specific role of this neuron type in generating predictions in the pulvinar. The 6CT synaptic inputs on pulvinar TRCs have metabotropic glutamate receptors (mGluR) that have longer time-scale temporal dynamics consistent with the alpha period (100 ms) and even longer (S. M. Sherman, 2014), and the 6CT neurons themselves also have temporally-delayed responding (Harris & Shepherd, 2015; Sakata & Harris, 2009; Thomson, 2010). Furthermore, they have significantly more plasticity-inducing NMDA receptors compared to the 5IB projections (Usrey & Sherman, 2018). These properties are consistent with the 6CT inputs driving a longer-integrated prediction signal that is subject to learning, whereas the 5IB inputs are likely non-plastic and their effects are tightly localized in time.

The 5IB inputs often have distinctive glomeruli structures at their synapses onto pulvinar neurons, which contain a complete feedforward inhibition circuit involving a local inhibitory interneuron, in addition to the direct strong excitatory driver input (Wilson, Bose, Sherman, & Guillery, 1984). Computationally, this can provide a balanced level of excitatory and inhibitory drive so as to not overly excite the receiving neuron, while still dominating its firing behavior.

Although there are well-documented and widely-discussed burst vs. tonic firing modes in pulvinar neurons (S. M. Sherman & Guillery, 2006), there is not much evidence of these playing a clear role in the awake, behaving state, and as noted earlier the growing electrophysiological evidence shows a remarkable correspondence between cortical and pulvinar response properties across multiple different pulvinar areas in this awake state. Nevertheless, there may be important dynamics arising from these firing modes that are more subtle or emerge in particular types of state transitions that may have yet to be identified.

Contrast with Explicit Error (EE) Frameworks

To further clarify the nature of the present theory, and introduce a body of relevant data, we contrast it with the widely-discussed explicit error (EE) framework for predictive coding (Bastos et al., 2012; Friston, 2005, 2010; Kawato et al., 1993; Lotter et al., 2016; Ouden et al., 2012; Rao & Ballard, 1999) (Figure 13). The hypothesized locus for computing errors in this framework is in the superficial layers of the neocortex, which are suggested to directly compute the difference between bottom-up inputs from lower layers and top-down inputs from higher areas. Despite many attempts to identify such explicit error-coding neurons in the cortex, no substantial body of unambiguous evidence has been discovered (Kok & de Lange, 2015; Kok, Jehee, & de Lange, 2012; Lee & Mumford, 2003; Summerfield & Egner, 2009; Walsh, McGovern, Clark, & O’Connell, 2020). Furthermore, due to the positive-only firing rate nature of neural coding, two separate populations would be required to convey both signs of prediction error signals, or it would have to be encoded as a variation from tonic firing levels, which are generally low in the neocortex.
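The sign problem noted at the end of this paragraph can be made concrete with a small sketch. Because firing rates cannot be negative, a signed prediction error either has to be split across two rectified populations, or carried as a deviation from a tonic baseline, in which case negative errors larger than the baseline are clipped. The function names, the example values, and the baseline of 0.1 are illustrative assumptions, not quantities from the paper.

```python
import numpy as np

def split_signed_error(prediction, outcome):
    """Encode a signed error with two non-negative (rectified) populations."""
    error = np.asarray(outcome, dtype=float) - np.asarray(prediction, dtype=float)
    positive_pop = np.maximum(error, 0.0)   # fires when outcome > prediction
    negative_pop = np.maximum(-error, 0.0)  # fires when outcome < prediction
    return positive_pop, negative_pop

def tonic_offset_code(prediction, outcome, baseline):
    """Encode a signed error as a deviation from a tonic firing baseline."""
    error = np.asarray(outcome, dtype=float) - np.asarray(prediction, dtype=float)
    return np.maximum(baseline + error, 0.0)  # rates cannot go below zero

prediction = np.array([0.2, 0.8, 0.5])
outcome = np.array([0.6, 0.3, 0.5])
true_error = outcome - prediction

# Two-population code: the signed error is recoverable as a difference.
pos, neg = split_signed_error(prediction, outcome)
recovered = pos - neg

# Single-population code with a low (assumed) tonic baseline.
baseline = 0.1
rates = tonic_offset_code(prediction, outcome, baseline)
decoded = rates - baseline

print("true error:          ", true_error)
print("two-population code: ", recovered)
print("low-baseline decoded:", decoded)
```

With the low baseline, the negative error on the second unit is truncated at minus the baseline, which illustrates why generally low tonic firing levels in the neocortex would limit a single-population encoding of signed errors.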

Figure 13: