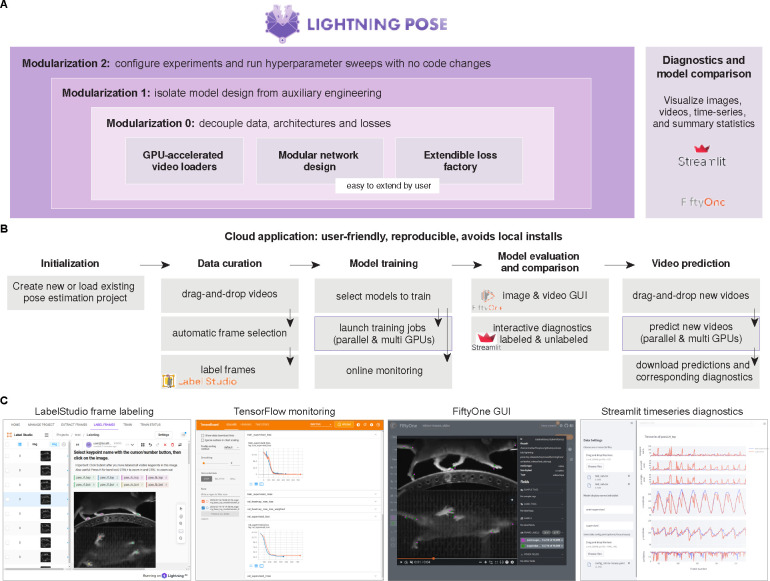

Extended Data Figure 8: Lightning Pose enables easy model development, fast training, and is accessible via a cloud application.

A. Our software package outsources many tasks to existing tools within the deep learning ecosystem, resulting in a lighter, modular package that is easy to maintain and extend. The innermost purple box indicates the core components: accelerated video reading (via NVIDIA DALI), modular network design, and our general-purpose loss factory. The middle purple box denotes the training and logging operations which we outsource to PyTorch Lightning, and the outermost purple box denotes our use of the Hydra job manager. The right box depicts a rich set of interactive diagnostic metrics which are served via Streamlit and FiftyOne GUIs. B. A diagram of our cloud application. The application’s critical components are dataset curation, parallel model training, interactive performance diagnostics, and parallel prediction of new videos. C. Screenshots from our cloud application. From left to right: LabelStudio GUI for frame labeling, TensorFlow monitoring of training performance overlaying two different networks, FiftyOne GUI for comparing these two networks’ predictions on a video, and a Streamlit application that shows these two networks’ time series of predictions, confidences, and spatiotemporal constraint violations.