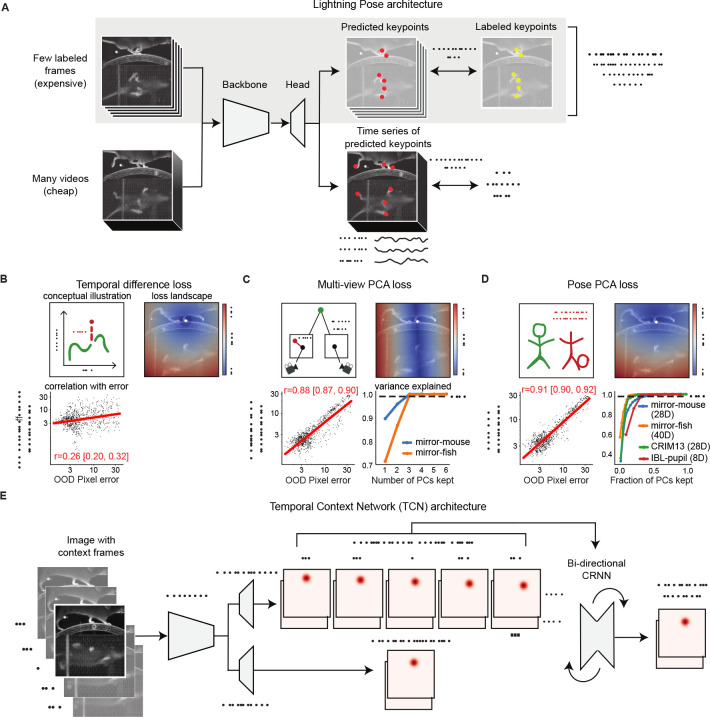

Figure 2: Lightning Pose exploits unlabeled data in pose estimation model training.

A. Diagram of our semi-supervised model, which contains supervised (top row) and unsupervised (bottom row) components. B. Temporal difference loss penalizes jump discontinuities in predictions. Top left: illustration of a jump discontinuity. Top right: loss landscape for frame t given the prediction at t − 1 (white diamond), for the left front paw (top view). The loss increases with distance from the previous prediction; the dark blue circle marks the maximum allowed jump, below which the loss is set to zero. Bottom left: correlation between temporal difference loss and pixel error on labeled test frames. C. Multi-view PCA loss constrains each multi-view prediction of the same body part to lie on a three-dimensional subspace found by Principal Component Analysis (PCA). Top left: illustration of a 3D keypoint detected on the imaging planes of two cameras. The left detection is inconsistent with the right. Top right: loss landscape for the left front paw (top view; white diamond) given its predicted location in the bottom view. The blue band of low loss values is an “epipolar line” on which the top-view paw could be located. Bottom left: multi-view PCA loss is strongly correlated with pixel error. Bottom right: three PCs explain >99% of label variance on multi-view datasets. D. Pose PCA loss constrains predictions to lie on a low-dimensional subspace of plausible poses, found by PCA. Top left: illustration of plausible and implausible poses. Top right: loss landscape for the left front paw (top view; white diamond) given all other keypoints, which is minimized around the paw’s actual position. Bottom left: Pose PCA loss is strongly correlated with pixel error. Bottom right: cumulative variance explained versus fraction of PCs kept. Across four datasets, >99% of the variance in the pose vectors can be explained with <50% of the PCs. E. The Temporal Context Network processes each labeled frame together with its adjacent unlabeled frames, using a bi-directional convolutional recurrent neural network. It forms two sets of location heatmap predictions, one using single-frame information and another using temporal context.
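
The unsupervised losses in panels B to D can be sketched in a few lines of NumPy. This is a minimal illustration rather than the Lightning Pose implementation: the function names, array shapes, and the hinge form of the temporal loss are assumptions made here for clarity, and the PCA loss is simplified to the distance between a prediction vector and its projection onto the subspace fitted to the labels. For the multi-view loss, each row would hold one body part's coordinates across views (for example, 4 values for two cameras, with 3 components kept); for the pose loss, each row would hold all keypoint coordinates of one frame.

```python
import numpy as np


def temporal_difference_loss(preds, epsilon):
    """Hinge-style penalty on frame-to-frame jumps (assumed form).

    preds:   (T, K, 2) array of predicted (x, y) for K keypoints over T frames.
    epsilon: maximum allowed jump in pixels; smaller jumps cost nothing.
    Returns a (T - 1, K) array of per-keypoint losses.
    """
    jumps = np.linalg.norm(np.diff(preds, axis=0), axis=-1)
    return np.maximum(jumps - epsilon, 0.0)


def fit_pca_subspace(labels, n_components):
    """Fit a linear subspace to labeled vectors.

    labels: (N, D) array, one labeled vector per row.
    Returns the mean (D,) and the top principal directions (n_components, D).
    """
    mean = labels.mean(axis=0)
    # Rows of vt are orthonormal principal directions, sorted by variance.
    _, _, vt = np.linalg.svd(labels - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_reprojection_loss(preds, mean, components):
    """Distance between each prediction and its projection onto the subspace.

    preds: (..., D) array of predicted vectors.
    Returns an array of shape (...) that is zero for predictions on the subspace.
    """
    centered = preds - mean
    reconstructed = centered @ components.T @ components
    return np.linalg.norm(centered - reconstructed, axis=-1)


# One keypoint over three frames: a 5-pixel jump, then no movement.
track = np.array([[[0.0, 0.0]], [[3.0, 4.0]], [[3.0, 4.0]]])
# With a 2-pixel tolerance the first jump costs 3 and the second costs 0.
temporal = temporal_difference_loss(track, epsilon=2.0)
```

The same `fit_pca_subspace` and `pca_reprojection_loss` pair serves both PCA losses in this sketch; only the layout of the input vectors and the number of components kept differ.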