Abstract

The correct identification of facial expressions is critical for understanding the intention of others during social communication in the daily life of all primates. Here we used ultra-high-field fMRI at 9.4 T to investigate the neural network activated by facial expressions in awake New World common marmosets of both sexes, and to determine the effect of facial motions on this network. We further explored how the face-patch network is involved in the processing of facial expressions. Our results show that dynamic and static facial expressions activate face patches in temporal and frontal areas (O, PV, PD, MD, AD, and PL) as well as in the amygdala, with stronger responses for negative faces, which were also associated with an increase in the respiratory rate of the monkeys. Processing of dynamic facial expressions involves an extended network recruiting additional regions not known to be part of the face-processing network, suggesting that face motions may facilitate the recognition of facial expressions. We report for the first time in New World marmosets that the perception and identification of changeable facial expressions, vital for social communication, recruit face-selective brain patches also involved in face detection processing and are associated with an increase of arousal.

SIGNIFICANCE STATEMENT Recent research in humans and nonhuman primates has highlighted the importance of correctly recognizing and processing facial expressions to understand others' emotions in social interactions. The current study focuses on the fMRI responses to emotional facial expressions in the common marmoset (Callithrix jacchus), a New World primate species sharing several similarities of social behavior with humans. Our results reveal that temporal and frontal face patches are involved in both basic face detection and facial expression processing. The specific recruitment of these patches for negative faces, associated with an increase of the arousal level, shows that marmosets process the facial expressions of their conspecifics, which are vital for social communication.

Keywords: awake marmosets, faces, facial expressions, fMRI, respiration rate, social communication

Introduction

Social cognition requires the ability to perceive, process, and recognize the emotional expressions of conspecifics (Ferretti and Papaleo, 2019). This ability is crucial not only for humans but also many other social species (Parr et al., 1998; Tate et al., 2006; Albuquerque et al., 2016), allowing them to communicate intentions and to better adapt to environmental challenges (Russell, 1997). Facial expressions are particularly necessary during social interactions, constituting a powerful route of rapid nonverbal communication in all primates (Darwin, 2004).

Functional magnetic resonance imaging (fMRI) studies have identified the neural networks underlying faces and facial expression processing in humans and nonhuman primates. In humans, faces elicit activity in a cortical and subcortical network that includes the fusiform gyrus, amygdala, and frontotemporal areas in anterior and posterior regions of the superior temporal sulcus (STS) and in the inferotemporal cortex as well as the orbitofrontal cortex (Kanwisher et al., 1997; Puce et al., 1998; Haxby et al., 2000; Fusar-Poli et al., 2009). Distinct patterns of activation have also been associated with the processing of facial expressions. Overall, the processing of emotional faces is associated with increased activation in most occipitotemporal face-selective regions, prefrontal and orbitofrontal areas as well as subcortical areas including the amygdala and cerebellum (Kanwisher et al., 1997; Puce et al., 1998; Haxby et al., 2000; Blair, 2003; Murphy et al., 2003; Posamentier and Abdi, 2003; Strauss et al., 2005; Hennenlotter and Schroeder, 2006; Vuilleumier and Pourtois, 2007; Fusar-Poli et al., 2009; Arsalidou et al., 2011; Zinchenko et al., 2018). These brain areas respond even more when dynamic facial expressions are used compared with static faces, which also seem to facilitate their recognition (Ceccarini and Caudek, 2013; Zinchenko et al., 2018).

Comparable face-selective regions have been identified in Old World macaque monkeys (Tsao et al., 2003) and in New World marmosets (Hung et al., 2015). In macaques, face-selective patches are located along the occipitotemporal axis in occipital cortex, along the STS, in ventral and medial temporal lobes, in some subregions of the inferior temporal cortex, as well as in frontal cortex and some subcortical regions such as amygdala and hippocampus (Tsao et al., 2003; Pinsk et al., 2005; Hadj-Bouziane et al., 2008; Bell et al., 2009; Weiner and Grill-Spector, 2015). In marmosets, face patches are found along the occipitotemporal axis and in frontal cortex (Hung et al., 2015; Schaeffer et al., 2020). Some fMRI studies have also revealed a stronger modulation of face-selective regions and surrounding cortex for emotional compared with neutral faces in Old World macaques (Hoffman et al., 2007; Hadj-Bouziane et al., 2008; Liu et al., 2017). Furthermore, lesion studies in both humans and macaques suggest that the amygdala plays a key role in the recognition of facial expressions (Aggleton and Passingham, 1981; Adolphs et al., 1994; Hadj-Bouziane et al., 2012).

Here, we investigated the neural circuitry involved in the processing of dynamic facial expressions in marmosets using ultra-high-field (9.4 T) fMRI. We acquired whole-brain fMRI in six awake marmosets while they viewed videos of marmoset faces with neutral or negative facial expressions. To further explore the physiological state of the monkeys during the observation of these different emotional contexts, we also recorded the respiratory rate (RR) of the animals inside the scanner during the task. For comparison, we performed a similar task with static images of neutral and negative marmoset faces to determine the potential differences obtained during the processing of dynamic and static facial expressions. By running a face-localizer task contrasting pictures of faces of marmosets with nonface objects, a method commonly used in the literature (Kanwisher et al., 1997; Tsao et al., 2003; Hung et al., 2015), we were able to relate the dedicated facial expression processing networks to the face-processing system in marmosets.

Materials and Methods

Common marmoset subjects

For this study, we acquired ultra-high-field fMRI data from awake common marmoset monkeys (Callithrix jacchus). For the main fMRI dynamic facial expressions experiment, we scanned the following six animals: three females (MGR, MHE, and MKO: weight range, 345–462 g; age range, 30–34 months) and three males (MMI, MMA, and MGU: weight range, 348–440 g; age range, 30–55 months). For the two additional fMRI experiments, the static facial expressions task and the face-localizer task, we scanned, respectively six animals (four females: MHE, MKO, MAL, and MAN; weight range, 328–462 g; age range, 36–45 months; two males: MMA and MGU; weight range, 409–440 g; age range, 40–48 months) and seven animals (three females: MHE, MAL, MAN; weight range, 328–462 g; age range, 36–45 months; four males: MMA, MGU, MAF, and MMA; weight range, 352–440 g; age range, 36–48 months).

All experimental procedures followed the guidelines of the Canadian Council on Animal Care policy and a protocol approved by the Animal Care Committee of the University of Western Ontario Council on Animal Care #2021–111.

Surgical procedure

To prevent head motion during MRI acquisition, the marmosets were surgically implanted with an MR-compatible head restraint/recording chamber (Johnston et al., 2018; Schaeffer et al., 2019) or with an MR-compatible machined PEEK (polyetheretherketone) head post (Gilbert et al., 2023); the implantation was performed under anesthesia and aseptic conditions. For the dynamic facial expressions task, five animals were implanted with the head restraint/recording chamber (monkeys MGR, MHE, MMI, MMA, and MGU), whereas one animal was implanted with the head post (monkey MKO). For the face-localizer and the static facial expressions tasks, all the head restraint/recording chambers on animals were replaced by head posts.

During the surgical procedure, the animals were first sedated and intubated so that they could be maintained under gas anesthesia with a mixture of O2 and air (isoflurane, 0.5–3%). With their head immobilized in a stereotactic apparatus, the head fixation device was positioned on the skull after a midline incision of the skin along the skull and maintained in place using a resin composite (Core-Flo DC Lite, Bisco). Additional resin was added as needed to cover the skull surface and ensure an adequate seal around the device. For optimal adhesion, the skull surface was prepared before the application of the resin by applying two coats of an adhesive resin (All-Bond Universal, Bisco) with a microbrush, which were then air dried and cured with an ultraviolet dental curing light (King Dental; for details, see Johnston et al., 2018). Throughout the surgery, heart rate, oxygen saturation, and body temperature were continuously monitored. Two weeks after the surgery, the monkeys were acclimated to the head fixation system and MRI environment with 3 weeks of training in a mock scanner described in the study by Gilbert et al. (2021).

Experimental setup

During the scanning sessions, monkeys sat in a sphinx position in the MRI-compatible restraint system (Schaeffer et al., 2019; Gilbert et al., 2023) positioned within a horizontal MR scanner (9.4 T). Their head was restrained by fixation of the head chamber or the head post. An MR-compatible camera (sampling rate, 60 Hz; model 12 M-i, MRC Systems) was positioned in front of the animal, allowing us to monitor the animal during the acquisition. Horizontal and vertical eye movements were monitored at 60 Hz using a video eye tracker (ETL-200 System, ISCAN). Data from functional runs with more stable eye signals were analyzed using an in-house R script (RStudio software; for the dynamic facial expressions task, n = 54; for the static facial expressions task, n = 27; for the face-localizer task, n = 67). The distance between the eye-tracking camera and the eyes of the marmosets was constant in our setup. At the onset of the study, we determined the mapping of horizontal and vertical eye position signals to visual degrees by performing 5-point calibrations using small movies with faces (4° × 2°) that were presented at the center and at 5° eccentricity to the left, right, up, and down. For each run of the experimental data, we identified the central eye position using the eye position density plots during the baseline periods in which only a filled circle was presented at the center of the screen. We then applied our previously determined scaling to the horizontal and vertical eye position signals. Finally, for the different conditions, we calculated the percentage of time when the eye positions of animals were within the stimuli presented on the screen (i.e., a 14° × 10° screen for the dynamic facial expressions task or a 10° × 6° screen for the static facial expressions task and the face-localizer task). This calibration procedure was sufficient to determine the time the monkeys spent looking at the screen but not for any more fine-grained eye-tracking analysis.
This percentage of time was >92% for the dynamic facial expressions task (per condition: baseline, 95.3%; neutral faces, 92.3%; negative faces, 92.4%; scrambled neutral faces, 92%; scrambled negative faces, 92.4%), 88% for the static task (per condition: baseline, 89.3%; neutral faces, 91.4%; negative faces, 93.3%; scrambled neutral faces, 91.4%; scrambled negative faces, 88.4%), and 80% for the localizer task (per condition: baseline, 82.7%; body parts, 79.8%; faces, 83.2%; objects, 80.1%). There were no significant differences between the conditions for the three tasks [ANOVA; dynamic facial expressions task: F(1.48,7.39) = 0.33, p = 0.67; static facial expressions task: F(1.97,9.85) = 1.84, p = 0.21; face-localizer task: F(1.25,7.49) = 5.59, p = 0.05 (but not resistant to the p value adjustment by Bonferroni correction)], ruling out the possibility that any differences in fMRI activation between the conditions were because of a different exposure to the visual stimuli. To verify that the marmosets were watching the stimuli as they appeared in the tasks, we additionally quantified eye position densities (Fig. 1D–F, plots for each condition in each experiment). These results demonstrate good compliance of the marmosets in the different tasks.
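As an illustration of the looking-time measure described above, the following minimal Python sketch centers the raw eye signals on the baseline fixation position, applies a calibration scaling, and counts the samples that fall inside a centered stimulus window. The function name, the toy samples, and the scaling values are hypothetical; the original analysis used an in-house R script that is not reproduced here.

```python
def percent_time_on_stimulus(x_raw, y_raw, center, scale, width_deg, height_deg):
    """Percentage of eye samples inside a centered stimulus window.

    x_raw, y_raw : raw eye-tracker samples (arbitrary units)
    center       : (cx, cy) raw signal at central fixation (from baseline)
    scale        : (sx, sy) degrees per raw unit (from the calibration)
    """
    cx, cy = center
    sx, sy = scale
    inside = 0
    for xr, yr in zip(x_raw, y_raw):
        # Convert each raw sample to degrees relative to screen center.
        x_deg = (xr - cx) * sx
        y_deg = (yr - cy) * sy
        if abs(x_deg) <= width_deg / 2 and abs(y_deg) <= height_deg / 2:
            inside += 1
    return 100.0 * inside / len(x_raw)


# Toy data (hypothetical): three of four samples fall in a 14 x 10 degree window.
x = [100, 110, 95, 300]
y = [200, 205, 190, 200]
pct = percent_time_on_stimulus(x, y, center=(100, 200), scale=(0.1, 0.1),
                               width_deg=14, height_deg=10)
print(pct)
```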

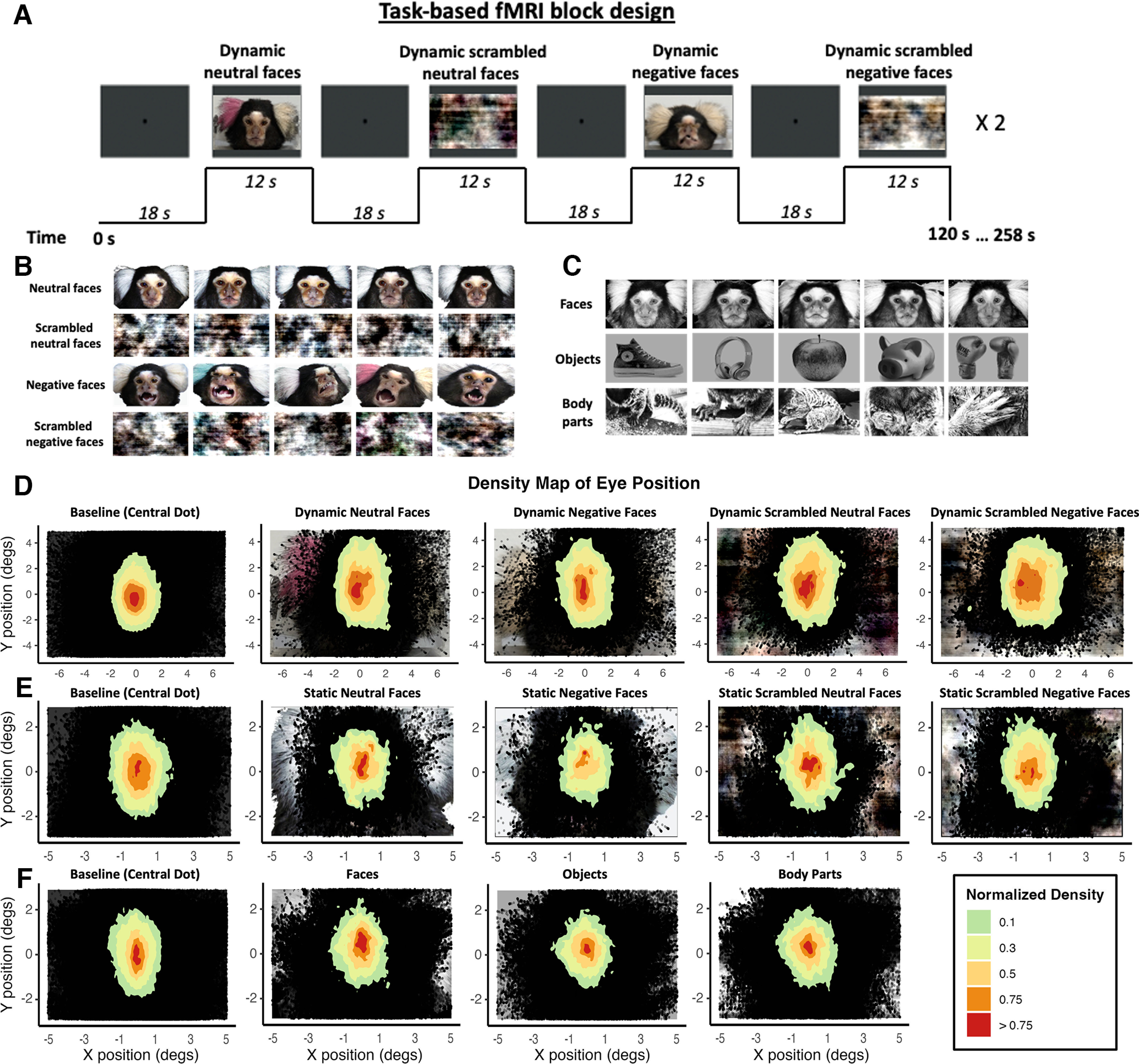

Figure 1.

Experimental setup and stimuli. A, fMRI task block design. In each run of the passive-viewing dynamic facial expressions task, four different types of videos lasting 12 s each were presented twice in a randomized order. The videos consisted of faces of marmosets depicting different facial expressions (neutral faces and negative faces conditions) and the corresponding scrambled version (scrambled neutral faces and scrambled negative faces conditions). Each video was separated by baseline blocks of 18 s, where a central dot was displayed in the center of the screen. This fMRI block design was also used for the static facial expressions and the face-localizer tasks. B, Stimuli of the face-localizer task: three different categories were used in the experiment (faces, objects, and body parts), resulting in blocks of 12 s with stimuli from the same category randomly selected and displayed for 500 ms. Only responses to face and object stimuli were used in this study. Thirty exemplars of each category were used. C, Stimuli used in the passive-viewing static facial expressions task. The following four types of images were used: neutral faces, scrambled neutral faces, negative faces, and scrambled negative faces. Thirty-five exemplars of each category were used. D–F, Density histogram of eye positions for each task (D, dynamic facial expressions task; E, static facial expressions task; F, face-localizer task). Plots showing density histograms of eye positions for all animals during the baseline (central dot) and for each condition of the tasks. In each graph, an example of stimuli for each condition is represented below, in its correct location.

Inside the scanner, monkeys faced a plastic screen placed 119 cm from the head of the animal where visual stimuli were projected with an LCSD projector (model VLP-FE40, Sony) via a back-reflection on a first surface mirror. Visual stimuli were presented with Keynote software (version 12.0; Apple) and were synchronized with MRI TTL (transistor–transistor logic) pulses triggered by a Raspberry Pi (model 3B+, Raspberry Pi Foundation) running a custom-written Python program. Reward was provided to the monkeys at the beginning and end of the sessions but not during the scanning.

Passive viewing dynamic facial expressions task

Visual stimuli consisted of video clips of a marmoset face depicting either neutral or negative facial expressions as well as a scrambled version of each. We recorded these videos from six marmosets while they sat non-head fixed in a marmoset chair (Johnston et al., 2018). To evoke the negative facial expression, a plastic snake was presented to the monkeys while they were filmed. Twelve-second video clips were then created using custom video-editing software (iMovie, Apple). Scrambled versions of these videos were created by random rotation of the phase information using a custom program (Shepherd and Freiwald, 2018; MATLAB R2022a, MathWorks). In this program, the video was separated into its magnitude and phase components using a Fourier transform. The phase components were randomly rotated, and then the magnitude and rotated phase components were recombined using an inverse Fourier transform. The same random rotation matrix was used for each frame in the scrambled conditions to preserve motion and luminance components.
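The phase-scrambling steps described above can be sketched in a few lines of NumPy. This is an illustrative reimplementation under stated assumptions, not the MATLAB program used in the study: the function name is hypothetical, the frames are assumed to be grayscale arrays, and the random phase offsets are taken from the Fourier phase of real-valued noise so that the scrambled frames remain real. The offset at the zero-frequency term is set to zero here so that the mean luminance of each frame is unchanged.

```python
import numpy as np


def phase_scramble_video(frames, seed=0):
    """Phase-scramble a grayscale video of shape (n_frames, height, width).

    A single random phase-offset matrix is drawn once and added to the
    Fourier phase of every frame; the magnitude spectrum is left untouched.
    """
    rng = np.random.default_rng(seed)
    # The phase of the FFT of real noise is antisymmetric, which keeps the
    # rotated spectrum conjugate-symmetric and the output real-valued.
    noise = rng.standard_normal(frames.shape[1:])
    offset = np.angle(np.fft.fft2(noise))
    offset[0, 0] = 0.0  # leave the mean (DC) term alone

    scrambled = np.empty(frames.shape, dtype=float)
    for i, frame in enumerate(frames):
        spectrum = np.fft.fft2(frame)
        magnitude = np.abs(spectrum)
        phase = np.angle(spectrum)
        rotated = magnitude * np.exp(1j * (phase + offset))
        scrambled[i] = np.real(np.fft.ifft2(rotated))
    return scrambled


# Toy video (hypothetical): 5 frames of 16 x 16 random pixels.
video = np.random.default_rng(1).random((5, 16, 16))
out = phase_scramble_video(video)
```

Because the same offsets are applied to all frames, the magnitude spectrum and mean intensity of each frame are preserved while the spatial structure is destroyed.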

To ensure that these four categories of videos shared the same low-level and mid-level visual properties, we quantified the amount of motion and calculated the average pixel intensity across frames in each video. We first extracted the individual frames from the videos. Then, to quantify the amount of motion, we applied a motion estimation algorithm to calculate the motion vectors between consecutive frames (vision.BlockMatcher function in MATLAB), and we then averaged across all frames to obtain an overall measure of motion. To calculate the average pixel intensity, we read the pixel values of each frame and we then calculated the average value across all frames. Finally, we looked for any differences between the categories of videos by conducting unpaired two-tailed t tests (neutral face videos vs negative face videos and scrambled neutral face videos vs scrambled negative face videos). We observed no significant differences in the amount of motion or in average pixel intensity extracted between neutral and negative videos (unpaired two-tailed t test; motion: t(34) = −0.92, p = 0.36; pixel intensity: t(34) = −0.07, p = 0.94) as well as between scrambled neutral and scrambled negative videos (unpaired two-tailed t test; motion: t(34) = −1.01, p = 0.32; pixel intensity: t(34) = −0.12, p = 0.94).
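The stimulus checks above can be approximated with the following Python sketch. It is a simplified illustration, not the original analysis: instead of the block-matching motion estimation performed in MATLAB, it uses the mean absolute difference between consecutive frames as a crude motion proxy, and the unpaired t statistic is computed with a pooled variance (an assumption, since the variant of the test is not specified). All function names and toy data are hypothetical.

```python
import numpy as np


def mean_pixel_intensity(frames):
    """Average pixel value across all frames of a (n_frames, h, w) video."""
    return float(np.mean(frames))


def mean_frame_difference(frames):
    """Crude motion proxy: mean absolute change between consecutive frames.
    (Swapped in for block matching, which is what the study used.)"""
    diffs = np.abs(np.diff(np.asarray(frames, dtype=float), axis=0))
    return float(np.mean(diffs))


def unpaired_t(a, b):
    """Student's unpaired t statistic with pooled variance; returns (t, df)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    n1, n2 = a.size, b.size
    pooled = ((n1 - 1) * a.var(ddof=1) + (n2 - 1) * b.var(ddof=1)) / (n1 + n2 - 2)
    t = (a.mean() - b.mean()) / np.sqrt(pooled * (1.0 / n1 + 1.0 / n2))
    return float(t), n1 + n2 - 2


# Toy data (hypothetical): one random video and two small groups of values.
video = np.random.default_rng(0).random((10, 8, 8))
print(mean_pixel_intensity(video), mean_frame_difference(video))
t_stat, df = unpaired_t([1.0, 2.0, 3.0], [2.0, 3.0, 4.0])
```

In practice, one value per video would be computed for each measure and the two groups of videos would then be passed to the t test.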

A block design was used in which each run consisted of eight blocks of stimuli (12 s each) interleaved by nine baseline blocks (18 s each; Fig. 1A). In these blocks, the following four different conditions were repeated twice: (1) neutral faces, (2) negative faces, (3) scrambled neutral faces, and (4) scrambled negative faces. The order of these conditions was randomized for each run, leading to 20 different stimulus sets, counterbalanced within the same animal and between animals. During baseline blocks, a 0.36° circular black cue was displayed in the center of the screen against a gray background to reduce the nystagmus induced by strong magnetic fields (Ward et al., 2019). To compare the physiological state of each animal during the different conditions, we also measured RR during the runs via a sensor strap (customized respiration belt) around the chest of the animals and recorded the signals with the LabChart software (ADInstruments).
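The timing of one run follows directly from the block design: eight 12 s stimulus blocks and nine 18 s baseline blocks give 8 × 12 + 9 × 18 = 258 s, or 172 volumes at the TR of 1.5 s used for the functional images. The sketch below builds such a schedule in Python. The function name and the simple seeded shuffle are assumptions for illustration; the actual stimulus sets were predefined and counterbalanced.

```python
import random


def block_schedule(order, stim_s=12.0, base_s=18.0, tr_s=1.5):
    """Return ([(label, onset_s, duration_s), ...], total_s, n_volumes).

    A baseline block precedes every stimulus block and one closes the run,
    so n stimulus blocks are interleaved with n + 1 baseline blocks.
    """
    schedule = []
    t = 0.0
    for condition in order:
        schedule.append(("baseline", t, base_s))
        t += base_s
        schedule.append((condition, t, stim_s))
        t += stim_s
    schedule.append(("baseline", t, base_s))
    t += base_s
    return schedule, t, int(round(t / tr_s))


conditions = ["neutral", "negative", "scrambled_neutral", "scrambled_negative"]
order = conditions * 2              # each condition shown twice per run
random.Random(0).shuffle(order)     # hypothetical randomization
schedule, total_s, n_vols = block_schedule(order)
print(total_s, n_vols)
```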

Passive viewing static facial expressions task

In this control task, the visual stimuli consisted of pictures of faces of marmosets with a neutral or negative facial expression and phase-scrambled versions of these pictures, resulting in four different conditions (Fig. 1B). Neutral face pictures were selected from the study by Hung et al. (2015). Negative face pictures were screenshots of the video clips used in the main task by selecting frames in which the faces were clearly visible. All images presented were luminance matched. To accomplish this, we first removed the background of each image and replaced it with a white background. We then measured and computed the mean luminance for each image and normalized to match the total intensity to one of the face images selected arbitrarily. The phase-scrambled images were created by permuting the phase information while preserving the amplitude information of the spectrum of negative and neutral face images using a custom program (MATLAB R2022a, MathWorks).
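A minimal sketch of the luminance-matching step, assuming images are stored as numeric arrays and that matching is done by rescaling each image so that its mean equals that of the reference image. The function name is hypothetical, and the sketch does not clip values to the display range, which a real pipeline would need to handle.

```python
import numpy as np


def match_mean_luminance(images, reference):
    """Scale each image so its mean intensity equals that of `reference`."""
    target = float(np.mean(reference))
    matched = []
    for img in images:
        img = np.asarray(img, dtype=float)
        matched.append(img * (target / img.mean()))
    return matched


# Toy data (hypothetical): one reference image and three images to match.
rng = np.random.default_rng(0)
reference = rng.uniform(50, 200, size=(8, 8))
images = [rng.uniform(0, 255, size=(8, 8)) for _ in range(3)]
matched = match_mean_luminance(images, reference)
```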

As in the previous task, in each run eight blocks of stimuli were interleaved by nine baseline blocks. Within each block of stimuli, individual images were displayed for 500 ms, with no gap between stimuli, for a total block time of 12 s (i.e., 24 pictures/block). Each condition was again repeated twice.

Face localizer task

We used the following three different stimulus categories: conspecific faces, conspecific body parts, and man-made objects (Fig. 1C). Conspecific face images were taken from the study of Hung et al. (2015) but were transformed into gray scale. Marmoset body parts and images of objects were selected from the Internet. Images were equated for luminance, such that the mean luminance did not differ across categories. For that, we first transformed all the images into gray scale, and we calculated the mean luminance for each image. We then normalized the images to match the total intensity to one of the face images selected arbitrarily. A fixation dot was present in all images to facilitate central fixation. For the purpose of the study, we only considered responses to conspecific faces and objects.

Images from the same category were presented in 12 s blocks, with blocks of different categories randomly interleaved and separated by baseline blocks of 18 s during which a 0.36° circular black cue was displayed in the center of the screen against a gray background. Within each block, individual stimuli from the same category were randomly selected and displayed for 500 ms, resulting in 24 images in total in each block, with no gap between stimuli. In each run, each condition was repeated three times leading to a total of 9 blocks of stimuli interleaved by 10 baseline blocks.

fMRI data acquisition

Data were acquired on a 9.4 T 31 cm horizontal bore magnet and BioSpec Avance III console with software package Paravision-6 (Bruker). We used a custom-made receive surface coil paired with a custom-built high-performance 15-cm-diameter gradient coil with 400 mT/m maximum gradient strength (xMR). For the main task, we used a five-channel (subjects MGR, MHE, MMI, MMA, and MGU with head restraint/recording chamber) or eight-channel (subject MKO with machined PEEK head post) receive surface coil whereas for the two additional tasks, we only used the eight-channel receive surface coil for all animals (machined PEEK head post). For the radio frequency transmit coil, we used a quadrature birdcage coil (inner diameter, 12 cm) built and customized in-house.

Functional gradient echo-based single-shot echoplanar images (EPIs) covering the whole brain were acquired over multiple sessions, using the following sequence parameters: TR = 1.5 s; TE = 15 ms; flip angle = 40°; field of view = 64 × 48 mm; matrix size = 96 × 128; resolution, 0.5 mm isotropic; number of slices (axial) = 42; bandwidth = 400 kHz; generalized autocalibrating partial parallel acquisition (GRAPPA) acceleration factor, 2 (left–right). Another set of EPIs with the opposite phase-encoding direction (right–left) was collected for the EPI distortion correction. For each animal, a T2-weighted structural image was acquired during one of the sessions [TR = 7 s; TE = 52 ms; field of view = 51.2 × 51.2 mm; resolution, 0.133 × 0.133 × 0.5 mm; number of slices (axial) = 45; bandwidth = 50 kHz; GRAPPA acceleration factor, 2]. A total of 79 runs (9–19 runs/animal depending on the compliance of the animal) were acquired for the main experiment. For the two additional experiments, a total of 24 runs (3–7 runs/animal) and 72 runs (8–12 runs/animal) were acquired for the static facial expressions task and the face-localizer task, respectively.

fMRI data preprocessing

fMRI data were preprocessed with AFNI (Cox, 1996) and FMRIB/FSL (Smith et al., 2004) software packages. After converting the raw functional images into NIfTI format using the dcm2niix function of AFNI, the images were reoriented from the sphinx position using FSL (with the FSL fslswapdim and fslorient functions). Functional images were then despiked (with the AFNI 3dDespike function) and volumes were registered to the middle volume of each time series (with the AFNI 3dvolreg function). The motion parameters from volume registration were stored for later use with nuisance regression. Images were smoothed with a Gaussian kernel of 2 mm full-width at half-maximum (for the two facial expressions tasks) or 1.5 mm (for the face-localizer task; with the AFNI 3dmerge function) and bandpass filtered (0.01–0.1 Hz) to prepare the data for the regression analysis (AFNI 3dBandpass function). An average functional image was calculated for each run and linearly registered to the respective T2-weighted anatomic image of each animal (with the FSL FLIRT function). The transformation matrix obtained after the registration was then used to transform the 4D time series data. T2-weighted anatomic images were manually skull stripped, and the mask of each animal was applied to the corresponding functional images.
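To make the temporal filtering step concrete, the following NumPy sketch keeps only the Fourier components of a single time series that lie between 0.01 and 0.1 Hz, given the TR of 1.5 s. It illustrates the general idea of a frequency-domain bandpass and is not AFNI's implementation; the function name and the synthetic signal are hypothetical.

```python
import numpy as np


def fft_bandpass(ts, tr_s, low_hz=0.01, high_hz=0.1):
    """Zero all Fourier components of `ts` outside [low_hz, high_hz]."""
    ts = np.asarray(ts, dtype=float)
    freqs = np.fft.rfftfreq(ts.size, d=tr_s)   # frequency of each FFT bin
    spectrum = np.fft.rfft(ts)
    spectrum[(freqs < low_hz) | (freqs > high_hz)] = 0.0
    return np.fft.irfft(spectrum, n=ts.size)


# Synthetic voxel time series (hypothetical): a constant offset, a 0.05 Hz
# component inside the passband, and a 0.2 Hz component outside it.
tr = 1.5
t = np.arange(200) * tr
in_band = np.sin(2 * np.pi * 0.05 * t)
signal = 100.0 + in_band + np.sin(2 * np.pi * 0.2 * t)
filtered = fft_bandpass(signal, tr)
```

In this toy case, the filtered series retains only the 0.05 Hz component; the mean and the 0.2 Hz oscillation are removed.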

Finally, T2-weighted anatomic images were registered to the National Institutes of Health (NIH) marmoset brain atlas (Liu et al., 2018) via nonlinear registration using the Advanced Normalization Tools ApplyTransforms function.

Statistical analysis of fMRI data

For each run, we used the AFNI 3dDeconvolve function to estimate the hemodynamic response function (HRF). The task timing was thus first convolved with the time series data to estimate the HRF for each condition (AFNI BLOCK convolution; four conditions for the dynamic and static facial expressions tasks and three conditions for the face-localizer task). Then, the amplitude and timing of the HRF for each condition was estimated, leading to the creation of a regressor for each condition to be used in the subsequent regression analysis. Finally, all the conditions were entered into the same model, along with polynomial detrending regressors and the motion parameters, allowing evaluation of the statistical significance of the estimated HRF. This regression generated four or three t-value maps, depending on the experiment, corresponding to our experimental conditions, per animal per run. These maps were then registered to the NIH marmoset brain atlas (Liu et al., 2018) using the transformation matrices obtained with the registration of anatomic images on the template (see above).
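The regression described above can be illustrated with a small general linear model fitted by ordinary least squares. The sketch below is a simplified stand-in for the AFNI analysis, under several assumptions: the response shape is a generic gamma function rather than AFNI's BLOCK basis, the block order is fixed rather than randomized, only constant and linear drift terms are included, and motion regressors are omitted. All names and the simulated voxel are hypothetical.

```python
import numpy as np


def gamma_hrf(tr_s, duration_s=24.0, shape=6.0):
    """Generic gamma-shaped response sampled every TR (an assumption)."""
    t = np.arange(0.0, duration_s, tr_s)
    h = t ** (shape - 1.0) * np.exp(-t)
    return h / h.sum()


def block_regressor(onsets_s, block_s, n_vols, tr_s):
    """Boxcar for one condition convolved with the response shape."""
    box = np.zeros(n_vols)
    n_block = int(round(block_s / tr_s))
    for onset in onsets_s:
        start = int(round(onset / tr_s))
        box[start:start + n_block] = 1.0
    return np.convolve(box, gamma_hrf(tr_s))[:n_vols]


tr, n_vols = 1.5, 172   # one 258 s run sampled every 1.5 s
onsets = {"neutral": [18.0, 138.0], "negative": [48.0, 168.0],
          "scr_neutral": [78.0, 198.0], "scr_negative": [108.0, 228.0]}
names = list(onsets)
task = np.column_stack([block_regressor(onsets[n], 12.0, n_vols, tr)
                        for n in names])
drift = np.column_stack([np.ones(n_vols), np.linspace(-1.0, 1.0, n_vols)])
X = np.hstack([task, drift])

# Simulated voxel with known condition amplitudes plus noise.
rng = np.random.default_rng(0)
true_beta = np.array([1.0, 2.0, 0.2, 0.3, 100.0, 0.5])
y = X @ true_beta + rng.normal(0.0, 0.05, n_vols)

# Least-squares fit and t value for each regressor.
beta, *_ = np.linalg.lstsq(X, y, rcond=None)
resid = y - X @ beta
dof = n_vols - X.shape[1]
se = np.sqrt(resid @ resid / dof * np.diag(np.linalg.inv(X.T @ X)))
t_values = beta / se
```

Each entry of `t_values` plays the role of one voxel's value in the per-condition t-value maps.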

These maps were then compared at the group level via paired t tests using the AFNI 3dttest++ function, resulting in z-value maps. These z-value maps were displayed on fiducial maps obtained from the Connectome Workbench (version 1.5.0; Marcus et al., 2011) using the NIH marmoset brain template (Liu et al., 2018) and on coronal sections. We used the Paxinos parcellation of the NIH marmoset brain atlas (Liu et al., 2018) to define anatomic locations of cortical and subcortical regions.

In the dynamic and static facial expressions tasks, we first examined the voxels that were significantly more strongly activated by the combination of all facial expression conditions compared with the combination of all scrambled facial expression conditions (i.e., neutral + negative faces > scrambled neutral + scrambled negative faces contrast). Then, to investigate voxels dedicated to negative and neutral facial expressions, we compared the activations obtained for each facial expression with their scrambled version (i.e., neutral faces > scrambled neutral faces and negative faces > scrambled negative faces contrasts). Finally, we identified voxels more activated by negative faces compared with neutral faces (i.e., negative faces > neutral faces). The resultant z-test maps were thresholded using either a cluster-level method derived from 10,000 Monte Carlo simulations (ClustSim option, α = 0.05) or a voxel-level method (α = 0.01).

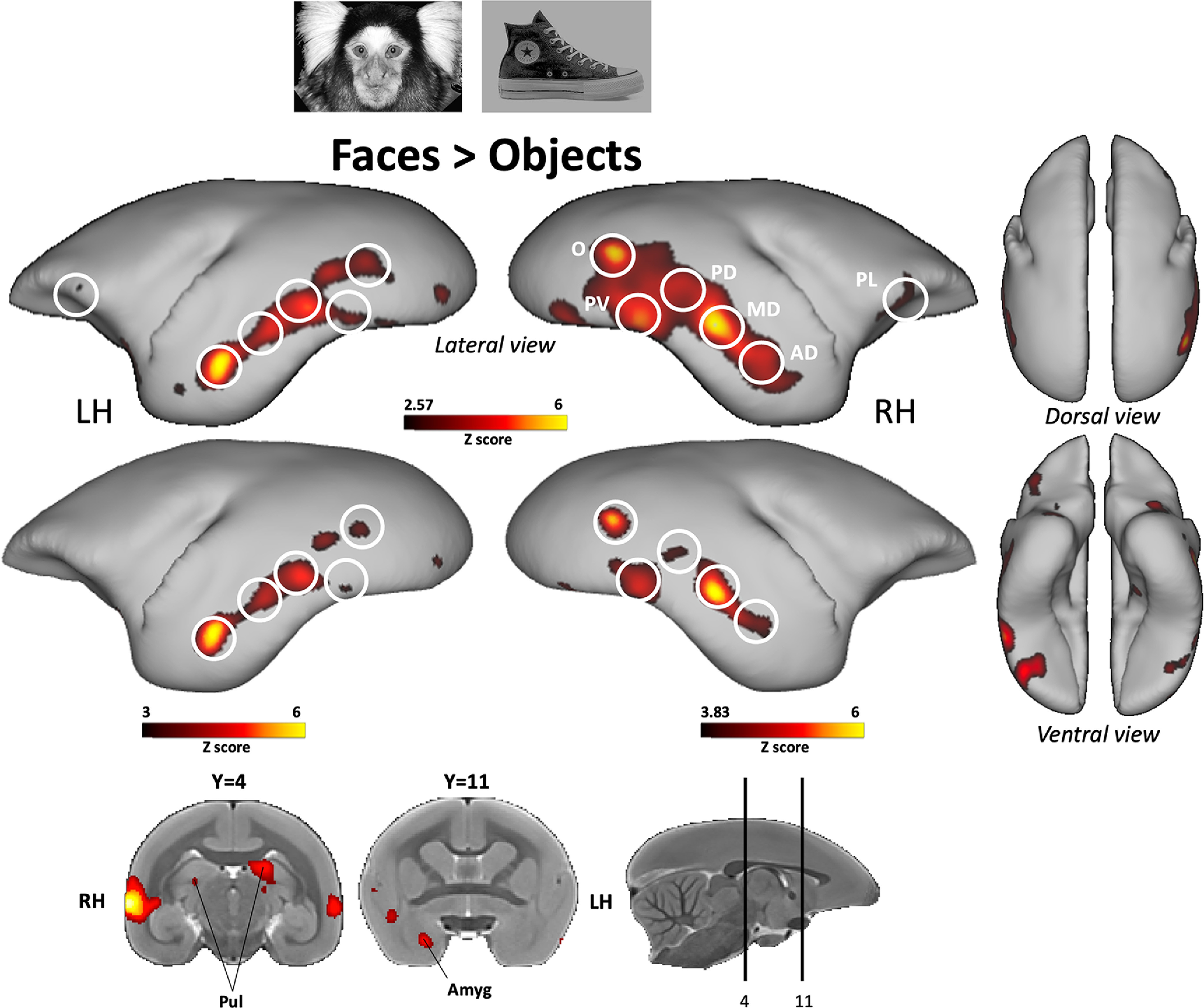

To localize face patches, we identified brain regions more activated by faces compared with objects (faces > objects contrast) using the face-localizer task. We used a voxel-level method for the resultant z-test maps (α = 0.01).
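The voxel-level threshold can be related to a z score with the inverse normal distribution. Assuming a two-sided test, α = 0.01 corresponds to |z| > 2.576, which agrees with the z > 2.57 threshold reported in the legend of Figure 2. The short Python sketch below performs this conversion; the function name is hypothetical.

```python
from statistics import NormalDist


def z_threshold(alpha, two_sided=True):
    """Critical z value for a given alpha under the standard normal."""
    tail = alpha / 2 if two_sided else alpha
    return NormalDist().inv_cdf(1 - tail)


z_crit = z_threshold(0.01)
print(round(z_crit, 3))
```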

Based on the results obtained with this last contrast, six regions of interest (ROIs) were drawn on each hemisphere corresponding to cortical face patches. Five patches were identified along the occipitotemporal axis that correspond to the face patches identified by Hung et al. (2015) using the same contrast, as follows: patches O (occipital), PV (posterior ventral), PD (posterior dorsal), MD (middle dorsal), AD (anterior dorsal) and a sixth face patch in the lateral prefrontal cortex (areas 45/47) that was also previously described by Schaeffer et al. (2020) when comparing faces to scrambled faces (faces > scrambled faces), which we called the PL patch. First, we created ROIs of 1.5 mm around the right and left activation peaks obtained for each face patch activated by our face-localizer task using the AFNI 3dUndump function. The voxel location chosen for each ROI, reported below (Table 1), corresponded to the highest z value (i.e., activation peak) obtained in each face patch. For that, we increased the statistical threshold to the resultant face-localizer map to obtain isolated occipitotemporal subregions and determine the precise peak of activation of each face patch (Fig. 2, visualization). Second, time series for each condition and each run were extracted from the resultant regression coefficient maps using the AFNI 3dmaskave function. Finally, the difference in response magnitude (i.e., the percentage of signal change) between each condition and the baseline was computed, and the comparison between conditions was performed using one-sided paired t tests with false discovery rate (FDR) post hoc correction (p < 0.05) in custom-written MATLAB scripts (R2022a; MathWorks).
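The final ROI comparison can be sketched as a paired t statistic followed by an FDR correction across tests. The Python example below is illustrative only: the specific FDR procedure is not stated in the text, so the Benjamini-Hochberg step-up procedure is assumed here, and the function names and toy values are hypothetical rather than taken from the MATLAB scripts.

```python
import math


def paired_t(a, b):
    """Paired t statistic for matched samples a and b; returns (t, df)."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n), n - 1


def benjamini_hochberg(p_values, q=0.05):
    """Return a list of booleans marking tests that survive FDR level q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    max_rank = 0
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= q * rank / m:
            max_rank = rank          # largest rank meeting the criterion
    rejected = [False] * m
    for rank, idx in enumerate(order, start=1):
        if rank <= max_rank:
            rejected[idx] = True
    return rejected


# Toy values (hypothetical): signal change per run for two conditions,
# and uncorrected p values from four ROI comparisons.
t_stat, df = paired_t([2.0, 4.0, 6.0], [1.0, 2.0, 3.0])
survives = benjamini_hochberg([0.001, 0.04, 0.03, 0.5])
print(t_stat, df, survives)
```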

Table 1.

Voxel location of each ROI with z value associated obtained from an activation map resulting from the face-localizer task (faces > objects contrast)

| ROI name | Hemisphere | Voxel x | Voxel y | Voxel z | z value |
|---|---|---|---|---|---|
| O face patch | Right | 10 | −3 | 14 | 5.60 |
| O face patch | Left | −10 | −3 | 13 | 3.48 |
| PV face patch | Right | 11 | −1 | 9 | 4.98 |
| PV face patch | Left | −11 | −2 | 9 | 4.5 |
| PD face patch | Right | 11 | 1 | 11 | 4.36 |
| PD face patch | Left | −11 | −2 | 9 | 4.40 |
| MD face patch | Right | 11 | 7 | 6 | 4.64 |
| MD face patch | Left | −11 | 7 | 6 | 5.42 |
| AD face patch | Right | 10 | 9 | 4 | 3.90 |
| AD face patch | Left | −10 | 10 | 4 | 3.64 |
| PL face patch | Right | 7 | 16 | 10 | 3.93 |
| PL face patch | Left | −8 | 16 | 11 | 2.53 |

Figure 2.

Face patches identified by comparing faces with objects. Group functional maps depicting significantly higher activations for faces compared with objects obtained from 7 awake marmosets. The maps reveal six functional patches, displayed on lateral, dorsal, and ventral views of left and right fiducial marmoset cortical surfaces. No activations were found on medial view. The white circles delineate the peak of activation of the following face patches using the labeling described in the study by Hung et al. (2015): occipitotemporal face patches O (V2/V3), PV (V4/TEO), PD (V4t/FST), MD (posterior TE), and AD (anterior TE). The frontal face patch that we called PL patch (areas 45/47) has only previously been identified when faces were compared with scrambled faces (Schaeffer et al., 2020). Subcortical activations are illustrated on coronal slices. In the top map, brain areas reported have an activation threshold corresponding to z scores > 2.57 (p < 0.01, AFNI 3dttest++). In the bottom map, we increased the activation threshold to isolate face patch subregions and to delineate the highest z value (i.e., peak of activation) of each face patch, which allowed us to determine the ROIs for the face patches (z scores > 3 for the left hemisphere; z scores > 3.83 for the right hemisphere).

Respiratory rate analyses

A customized respiratory belt located around both the ventral and dorsal thorax of the marmoset was used to record respiratory signals. The strength of these signals could vary between the different sessions as the positioning of the belt could change with the movement of the animal. Therefore, we preliminarily inspected the quality of the data and excluded all runs with unstable respiratory signals.

In humans, it has been shown that repeated exposures to fear-provoking stimuli lead to a decrease in physiological responses (Watson et al., 1972; Olatunji et al., 2009; Zaback et al., 2019), which is because of a habituation-induced diminution of the physiological response (van Hout and Emmelkamp, 2002). To circumvent this phenomenon, which may cause a decreased response of respiratory rate induced by the repeated viewing of the negative facial expression across the same session, we selected the first run of each session per animal corresponding to a minimum of two runs and a maximum of five runs per monkey, depending on the number of sessions and the quality of the data. Therefore, a total of 17 runs was selected throughout all the sessions for the six animals.

To control for a potential decline in respiratory responses that could be caused by the repeated viewing of negative faces, we also conducted an additional analysis by selecting the last run of each session for each animal, corresponding to the maximum number of stimulus repetitions during each session (i.e., a total of 17 runs across all sessions for the six animals).

The respiration signals recorded during the experiments were analyzed with the LabChart data analysis software (ADInstruments) to obtain the mean respiratory rate (RR), in breaths per minute (bpm), for each animal in each block of the runs. To do so, we first detected all respiratory peaks automatically. The number of peaks per minute corresponds to the number of respirations, namely the number of times the chest rises in 1 min (i.e., bpm; RR frequency). We then computed the average number of breaths per minute (i.e., mean RR) during the blocks of stimulus presentation (12 s of video clips) and during the baseline periods (central dot block of 18 s).
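The rate computation described above can be sketched in a few lines. This is only an illustration, not the LabChart procedure: the function name `breaths_per_minute` and the example peak times are hypothetical, and peak detection itself is assumed to have been done already.

```python
def breaths_per_minute(peak_times_s, block_start_s, block_end_s):
    """Mean respiratory rate (bpm) in one block, from detected peak times in seconds."""
    if block_end_s <= block_start_s:
        raise ValueError("block_end_s must be greater than block_start_s")
    # Count the respiratory peaks that fall inside the block window.
    n_peaks = sum(block_start_s <= t < block_end_s for t in peak_times_s)
    duration_min = (block_end_s - block_start_s) / 60.0
    return n_peaks / duration_min


# Hypothetical recording: one breath every 1.5 s for one minute.
peaks = [i * 1.5 for i in range(40)]

baseline_rr = breaths_per_minute(peaks, 0.0, 18.0)   # 18 s central-dot block
video_rr = breaths_per_minute(peaks, 18.0, 30.0)     # 12 s video-clip block
print(baseline_rr, video_rr)
```

Dividing by the block duration in minutes makes the 12 s and 18 s blocks directly comparable.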

External triggers were recorded for every TR (i.e., every 1.5 s). The first trigger started as soon as the central dot appeared, and we extracted RR data for the baseline period counting 12 triggers (corresponding to 18 s). Then, we extracted RR data for the video clip periods during the eight following triggers (corresponding to 12 s), and we repeated the same steps until the end of the run. In this way, we obtained RR values for nine blocks of baseline and eight blocks of video clips for each run. We then computed the mean RR for each condition in each run (i.e., neutral faces, negative faces, scrambled neutral faces, and scrambled negative faces) as well as the mean RR for the entire period of baseline for each run. Finally, we computed the mean δ RR by subtracting the mean RR obtained during the baseline period from the mean RR obtained for each condition. Because the respiratory rate range varied between animals, δ RR served to normalize these ranges across animals. We were then able to determine the effect of dynamic facial expressions on the RR of animals and to compare these fluctuations between conditions for the group.
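The trigger-based segmentation and the δ RR computation can be sketched as follows. The trigger counts and TR come from the text; the helper names, the condition order within a run, and the RR values are hypothetical, and ending the run on a baseline block is inferred from the nine baseline and eight video blocks.

```python
from statistics import mean

TR_S = 1.5               # one trigger per TR
BASELINE_TRIGGERS = 12   # 12 x 1.5 s = 18 s
VIDEO_TRIGGERS = 8       # 8 x 1.5 s = 12 s
N_VIDEO_BLOCKS = 8


def block_windows():
    """Return (kind, start_s, end_s) for alternating baseline/video blocks."""
    windows, trigger = [], 0
    for _ in range(N_VIDEO_BLOCKS):
        windows.append(("baseline", trigger * TR_S, (trigger + BASELINE_TRIGGERS) * TR_S))
        trigger += BASELINE_TRIGGERS
        windows.append(("video", trigger * TR_S, (trigger + VIDEO_TRIGGERS) * TR_S))
        trigger += VIDEO_TRIGGERS
    # Assumed final baseline block, giving nine baseline blocks in total.
    windows.append(("baseline", trigger * TR_S, (trigger + BASELINE_TRIGGERS) * TR_S))
    return windows


def delta_rr(windows, rr_values, video_conditions):
    """Mean RR per condition minus the mean RR over all baseline blocks."""
    baseline = [rr for (kind, _, _), rr in zip(windows, rr_values) if kind == "baseline"]
    video = [rr for (kind, _, _), rr in zip(windows, rr_values) if kind == "video"]
    baseline_mean = mean(baseline)
    by_condition = {}
    for label, rr in zip(video_conditions, video):
        by_condition.setdefault(label, []).append(rr)
    return {label: mean(vals) - baseline_mean for label, vals in by_condition.items()}


# Hypothetical run: condition order and RR values are made up for illustration.
windows = block_windows()
order = ["neutral", "negative", "scrambled_neutral", "scrambled_negative"] * 2
example_rr = {"neutral": 41.0, "negative": 44.0,
              "scrambled_neutral": 40.0, "scrambled_negative": 40.0}
rr_values, video_index = [], 0
for kind, _, _ in windows:
    if kind == "baseline":
        rr_values.append(40.0)
    else:
        rr_values.append(example_rr[order[video_index]])
        video_index += 1

deltas = delta_rr(windows, rr_values, order)
print(deltas)
```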

To identify the effect of facial expressions on respiratory signals, three paired t tests were performed on the δ RR of the selected first runs (p < 0.05), as follows: neutral versus scrambled neutral faces, negative versus scrambled negative faces, and negative versus neutral faces. To investigate a potential habituation effect on physiological signals caused by repeated exposure to negative faces, we performed the same analysis on the selected last runs. We also performed four paired t tests on δ RR between first and last runs, as follows: neutral faces of first runs versus neutral faces of last runs; negative faces of first runs versus negative faces of last runs; scrambled neutral faces of first runs versus scrambled neutral faces of last runs; and scrambled negative faces of first runs versus scrambled negative faces of last runs. Data were analyzed with the open source software R (R Foundation for Statistical Computing).
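For illustration, the paired t statistic used in these comparisons can be written out directly. The analysis itself was run in R; the Python sketch below, with its hypothetical function name and made-up δ RR values, only shows the arithmetic. It returns the statistic and its degrees of freedom and leaves the p value to a statistics package.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(x, y):
    """Paired t statistic and degrees of freedom for two matched samples."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    diffs = [a - b for a, b in zip(x, y)]
    sd = stdev(diffs)  # sample standard deviation of the paired differences
    if sd == 0:
        raise ValueError("paired differences have zero variance")
    t = mean(diffs) / (sd / sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical delta RR values for 17 runs (negative vs neutral faces).
negative = [2.1, 0.5, 3.0, 1.2, -0.4, 2.8, 1.9, 0.7, 1.1,
            2.4, 0.2, 1.6, 3.3, -0.1, 0.9, 2.0, 1.4]
neutral = [1.0, 0.8, 1.5, 0.3, 0.1, 1.2, 2.2, 0.4, 0.6,
           1.1, 0.5, 0.9, 1.8, 0.2, 1.0, 0.7, 1.3]

t_stat, df = paired_t(negative, neutral)
print(f"t({df}) = {t_stat:.2f}")
```

With 17 runs per condition the test has 16 degrees of freedom, which matches the t(16) values reported for the respiration analyses.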

Results

Our aim was to characterize the brain network involved in dynamic processing of emotional faces and more specifically in negative facial expressions. For that, we presented awake marmosets with video clips of marmosets displaying a neutral or negative facial expression and their scrambled versions in a block-design fMRI task. We also measured the respiratory rates of monkeys to identify the effect of negative facial expressions on their physiological responses during the fMRI scans. To determine the effect of facial motion during the perception of facial expression changes, we also conducted a similar task using images of neutral and negative marmoset faces. Finally, to test how emotional faces affect activation in the face patches, we ran a face-localizer task in awake marmosets contrasting images of marmoset faces with nonface objects.

fMRI results

Face patches determined by the face-localizer task

To be able to relate the functional activations obtained during facial expression processing and therefore to establish a direct link with the primate face processing system existing in the literature, we ran a face-localizer experiment contrasting marmoset faces with nonface objects, commonly used in humans (Kanwisher et al., 1997), macaques (Tsao et al., 2003), and recently in marmosets (Hung et al., 2015). In the resulting map (Fig. 2), we found the same five patches identified by Hung et al. (2015) that they labeled according to their positions along the occipitotemporal axis, as follows: AD and MD patches along STS, PD patch in area V4t/FST, PV patch at the V4/TEO border, and O patch at the V2/V3 border. In addition to these face-selective patches in temporal lobe, we also found preferential activations in lateral prefrontal cortex (corresponding to area 45/47) that we will call PL patch. This patch was also identified in the study by Schaeffer et al. (2020) when comparing video of neutral marmoset faces with their scrambled versions. Finally, at the subcortical level, we found higher activations in pulvinar and amygdala.

Functional brain activations while watching dynamic emotional faces

We first investigated the processing for each dynamic face video (i.e., neutral and negative videos) and their corresponding scrambled versions, compared with baseline. Figure 3 shows group activation maps for each condition.

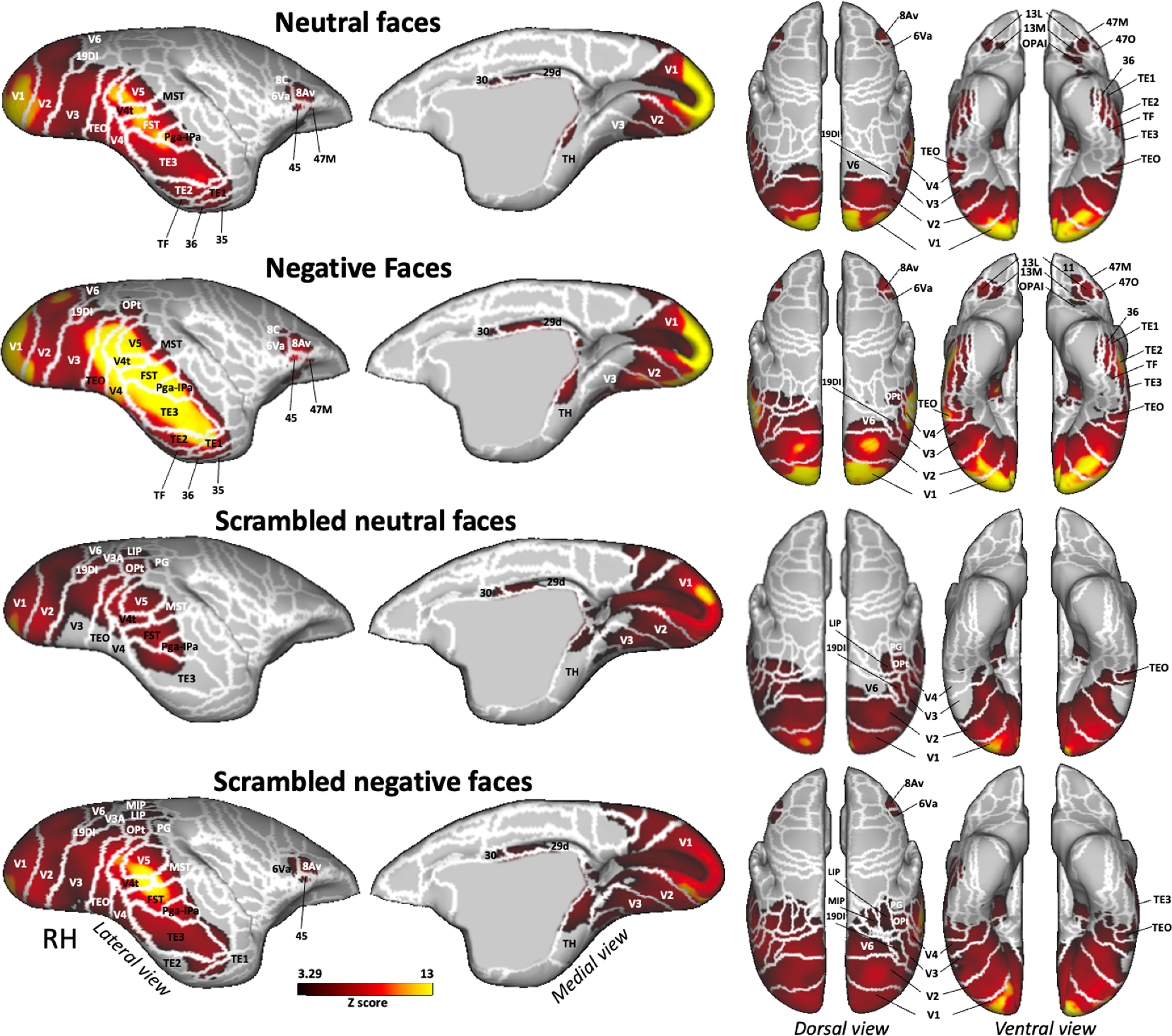

Figure 3.

Brain networks activated by each condition versus baseline. Group functional maps showing significantly greater activations for dynamic neutral, negative, scrambled neutral, and scrambled negative faces, compared with baseline. Group maps obtained from 6 awake marmosets displayed on lateral and medial views of the right fiducial marmoset cortical surfaces as well as dorsal and ventral views of left and right fiducial marmoset cortical surfaces. The white line delineates the regions based on the Paxinos parcellation of the NIH marmoset brain atlas (Liu et al., 2018). The brain areas reported have activation threshold corresponding to z scores > 3.29 (p < 0.001, AFNI 3dttest++; cluster-size correction, α = 0.05 from 10,000 Monte-Carlo simulations).
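The z thresholds quoted in the figure captions (1.96, 2.57, and 3.29) pair with p values of 0.05, 0.01, and 0.001. A minimal check, assuming these are two-sided p values under a standard normal distribution (the captions do not state this explicitly):

```python
from statistics import NormalDist


def two_sided_p(z):
    """Two-sided p value for a z score under the standard normal distribution."""
    return 2.0 * (1.0 - NormalDist().cdf(abs(z)))


for z in (1.96, 2.57, 3.29):
    print(f"z = {z:.2f} -> two-sided p = {two_sided_p(z):.4f}")
```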

All four conditions activated a bilateral network with higher activations in occipital and temporal cortices, including visual areas V1, V2, V3, V4, V4T, V5, and V6; the medial superior temporal (MST) area; area 19, dorsointermediate part (19DI); and temporal areas TEO, PGa-IPa, FST, and TH; as well as areas 29d and 30 in posterior cingulate cortex. The two scrambled conditions also activated visual area V3A and posterior parietal areas LIP and PG, and the occipitoparietal transitional (OPt) area surrounding the intraparietal sulcus (IPS). The scrambled negative face condition showed bilateral activations in the medial intraparietal (MIP) area, in temporal areas TE2 and TE1, and in premotor and frontal areas 6Va, 8Av, and 45. Neutral and negative faces recruited a larger and stronger network with bilateral activations in lateral and inferior temporal areas TE2 and TE1; in ventral temporal areas TF, 35, and 36; in area 6, ventral part (6Va), and area 8, caudal part (8C), of the premotor cortex; in frontal areas 8Av, 45, and 47, medial part (47M) and orbital part (47O); and more anteriorly in orbitofrontal cortex in area 13 lateral (13L), area 13 medial (13M), and orbital periallocortex (OPAI).

Next, we identified the brain regions that were more active during the observation of dynamic faces compared with their scrambled versions (i.e., all faces > all scrambled faces, neutral faces > scrambled neutral faces, and negative faces > scrambled negative faces contrasts). Group activation maps are represented in Figure 4.

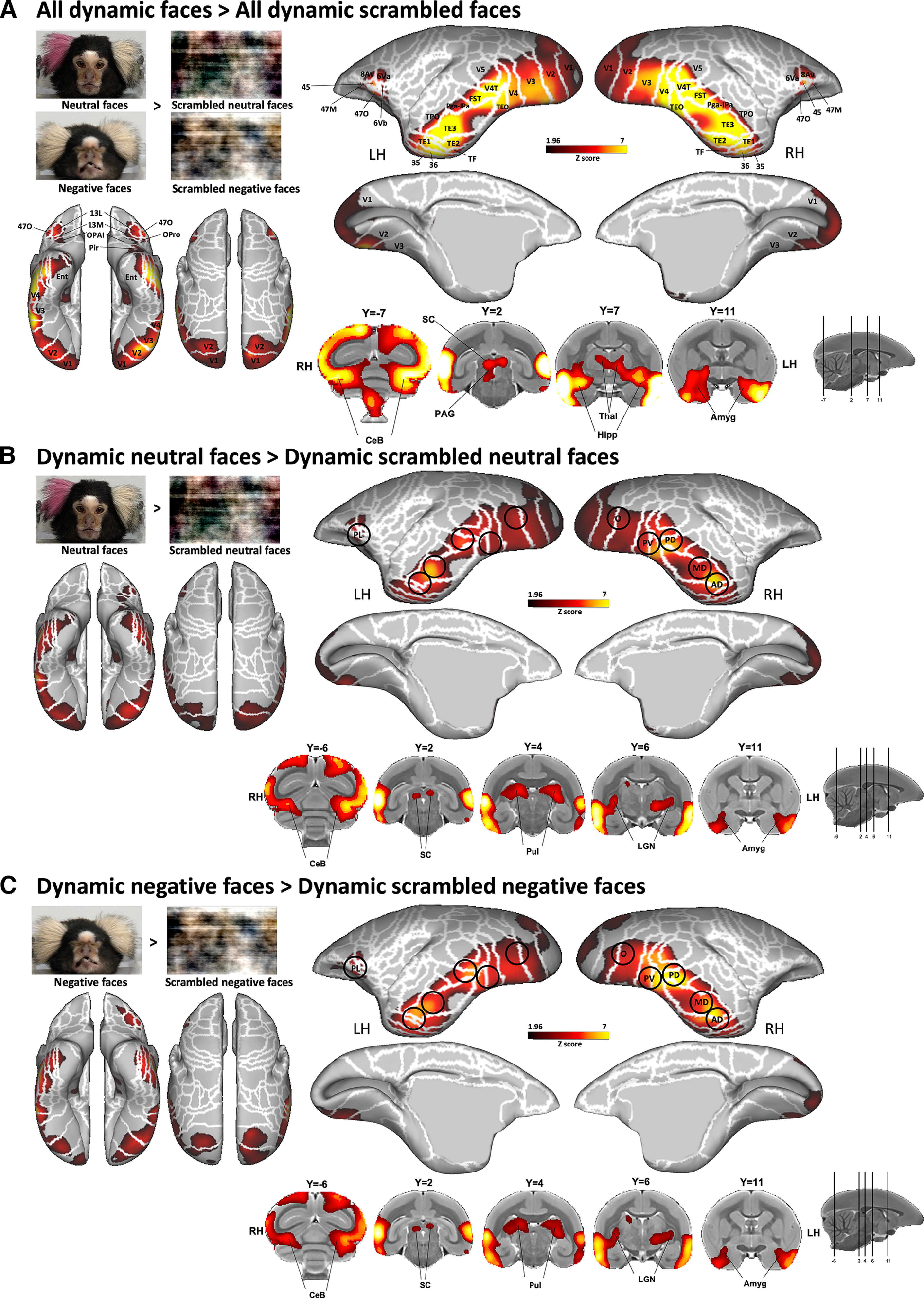

Figure 4.

Brain networks involved in dynamic facial expression processing. A–C, Group functional maps showing significantly greater activations for the comparison between the following: A, All emotional faces (i.e., neutral and negative faces) and all scrambled emotional faces (i.e., scrambled versions of neutral and negative faces); B, neutral facial expression and its scrambled version; and C, negative facial expression and its scrambled version. Group functional topology comparisons are displayed on the left and right fiducial marmoset cortical surfaces (lateral, medial, dorsal, and ventral views) as well as on coronal slices, to illustrate the activations in subcortical areas. The white line delineates the regions based on the Paxinos parcellation of the NIH marmoset brain atlas (Liu et al., 2018). The black circles delineate the position of face patches identified by the face-localizer task depicted in Figure 2. The brain areas reported have activation threshold corresponding to z scores > 1.96 (p < 0.05, AFNI's 3dttest++; cluster-size correction, α = 0.05 from 10,000 Monte-Carlo simulations).

We observed significant activations for all dynamic faces compared with all dynamic scrambled faces in a large network comprising a set of bilateral cortical and subcortical regions (Fig. 4A). The major significant clusters are located along the occipitotemporal axis in visual areas V1, V2, V3, V4, V5, V4T, and MST; in lateral and inferior temporal areas TE1, TE2, TE3, TEO, TPO, FST, and PGa-IPa; as well as in ventral temporal areas 35, 36, TF, entorhinal cortex, and superior temporal rostral (STR) area. In addition, significantly greater bilateral activations are found in premotor areas 6Va and 6Vb and in motor area ProM of the left hemisphere, as well as in lateral frontal areas 8Av, 45, 47M, and 47O, and more anteriorly in orbitofrontal areas 11, 13M, 13L, OPAI, and orbital proisocortex (OPro). Finally, intact face videos elicited activations in a set of bilateral subcortical areas, including the superior colliculus (SC), periaqueductal gray (PAG), thalamus, lateral geniculate nucleus (LGN), hippocampus, caudate, amygdala, and substantia nigra (SNR), as well as different parts of the cerebellum (the posterior lobe and both the left and right sides of the cerebellum; Fig. 4A).

Each dynamic neutral and negative facial expression, compared with its scrambled version, activated a network similar to the one described above, with stronger activations in occipital (V1, V2, V3, V4, V5, and V4T), temporal (TE1, TE2, TE3, TEO, TF, FST, TPO, PGa-IPa, 35, 36, and entorhinal cortex), premotor (6Va and 6Vb), and frontal and orbitofrontal (8Av, 45, 47, 47M, 47O, 13M, 13L, OPAI, and OPro) cortices, as well as in some subcortical areas (SC, pulvinar, LGN, hippocampus, caudate, amygdala, and cerebellum; Fig. 4B,C). For neutral faces, activations are also present in frontal area 13M and OPAI and in the piriform cortex (Pir; Fig. 4B), whereas for negative faces, stronger activations are also observed in temporal area STR, in motor area ProM, in orbitofrontal area 11, and in the SNR (Fig. 4C). As indicated by black circles in Figure 4, peaks of activations were located in the five bilateral face patches along the occipitotemporal axis (i.e., patches O, PV, PD, MD, and AD) and in the left PL patch identified by our localizer task (Fig. 2).

To identify brain areas that were more active during the observation of negative dynamic faces compared with neutral dynamic faces, we directly compared the two conditions (i.e., dynamic negative faces > dynamic neutral faces contrast; Fig. 5). As observed in the previous section, the overall broad topologies of the circuitries for each facial expression are similar (Fig. 4), but we found critical differences when contrasting the two conditions (Fig. 5A). This analysis reveals significantly greater bilateral activations for negative faces along the occipitotemporal axis located mainly in the five face patches O, PV, PD, MD, and AD identified previously (Fig. 2). Specifically, we found activations in occipital areas (visual areas V1, V2, V3, V4, and V5), in inferior temporal areas (TE1, TE2, TE3, TEO, TF, and TH), in superior temporal areas (V4T, MST, TPO, and PGa-IPa), and in the fundus of the superior temporal sulcus (FST). We also observed stronger subcortical activations in the right hippocampus, right LGN, right SC, bilateral hypothalamus, and bilateral amygdala.

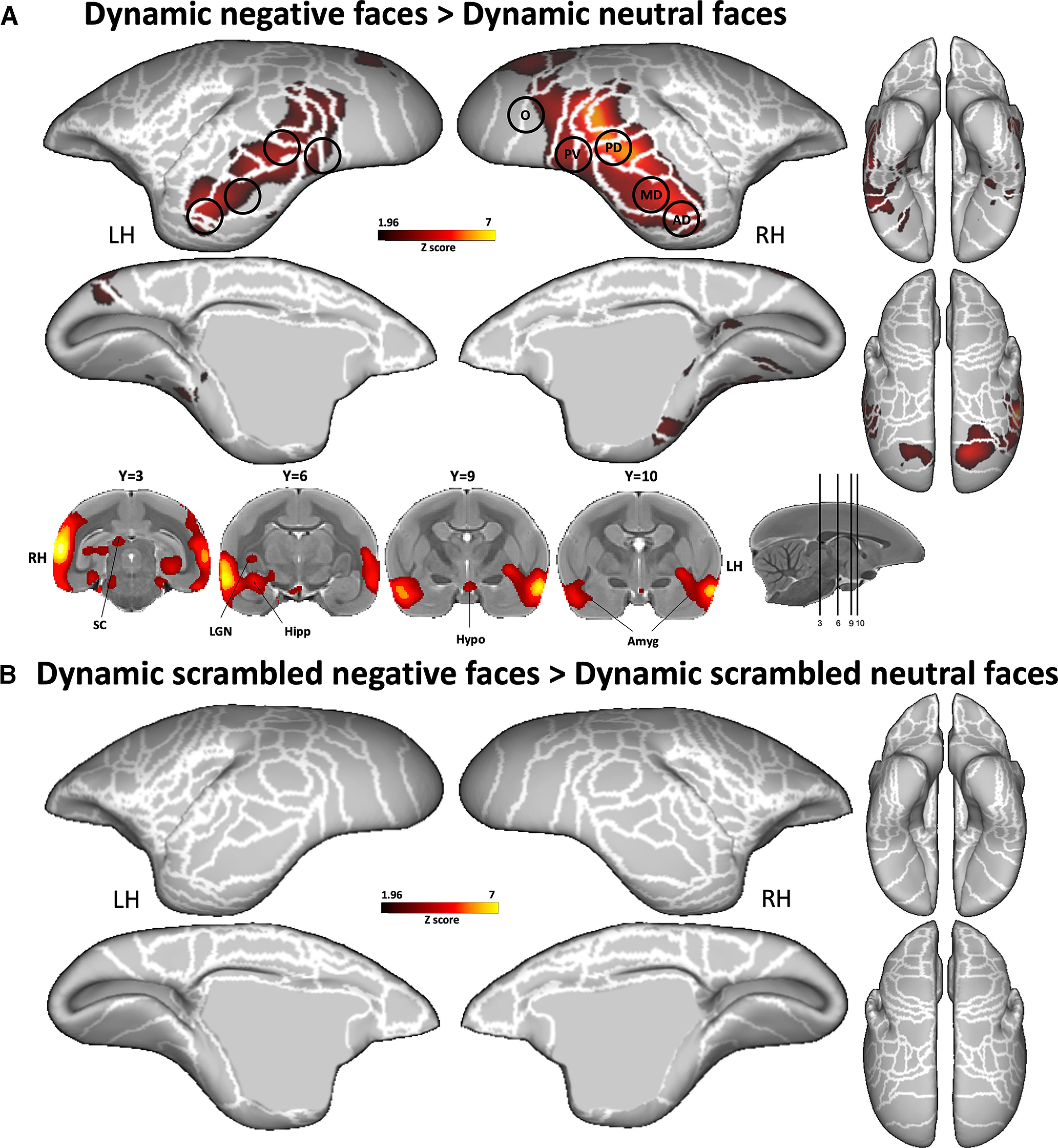

Figure 5.

Comparison between the two dynamic facial expressions. A, B, Group functional maps showing significantly greater activations for negative compared with neutral face videos (A) and for scrambled negative compared with scrambled neutral face videos (B) displayed on the left and right fiducial marmoset cortical surfaces (lateral, medial, dorsal, and ventral views). Coronal slices represent the activations in subcortical areas. The white line delineates the regions based on the Paxinos parcellation of the NIH marmoset brain atlas (Liu et al., 2018). The black circles delineate the position of face patches identified by our face-localizer task depicted in Figure 2. The brain areas reported have activation threshold corresponding to z scores > 1.96 (p < 0.05, AFNI 3dttest++; cluster-size correction, α = 0.05 from 10,000 Monte-Carlo simulations).

Importantly, the comparison between the scrambled negative and scrambled neutral dynamic conditions did not reveal any greater activations for scrambled negative faces (Fig. 5B). Because low-level properties are preserved in the scrambled videos, this indicates that the activations obtained when comparing negative with neutral faces were not caused by differences in low-level properties of the videos. Furthermore, we did not find any differences in motion or average pixel intensity between neutral and negative face videos (see Materials and Methods).

Functional brain activations while watching static emotional faces

To compare the pattern of activations between dynamic and static facial expression processing, we conducted a similar experiment using static images of marmoset faces instead of videos. Figure 6 displays the results obtained by comparing all static faces with their scrambled versions and each static facial expression with its corresponding scrambled images (i.e., all static faces > all static scrambled faces, static neutral faces > static scrambled neutral faces, and static negative faces > static scrambled negative faces contrasts). Overall, the results are very similar to those obtained with the videos but with slightly weaker and less extensive activations. We found greater activations for intact neutral faces than for scrambled images in right O, PV, PD, MD, and AD face patches, and in left O, PV, and PD face patches (Fig. 6B), and stronger activations for negative versus scrambled faces located in bilateral O, PV, PD, MD, AD, and PL face patches (Fig. 6C). Subcortically, pulvinar, LGN, and amygdala were recruited.

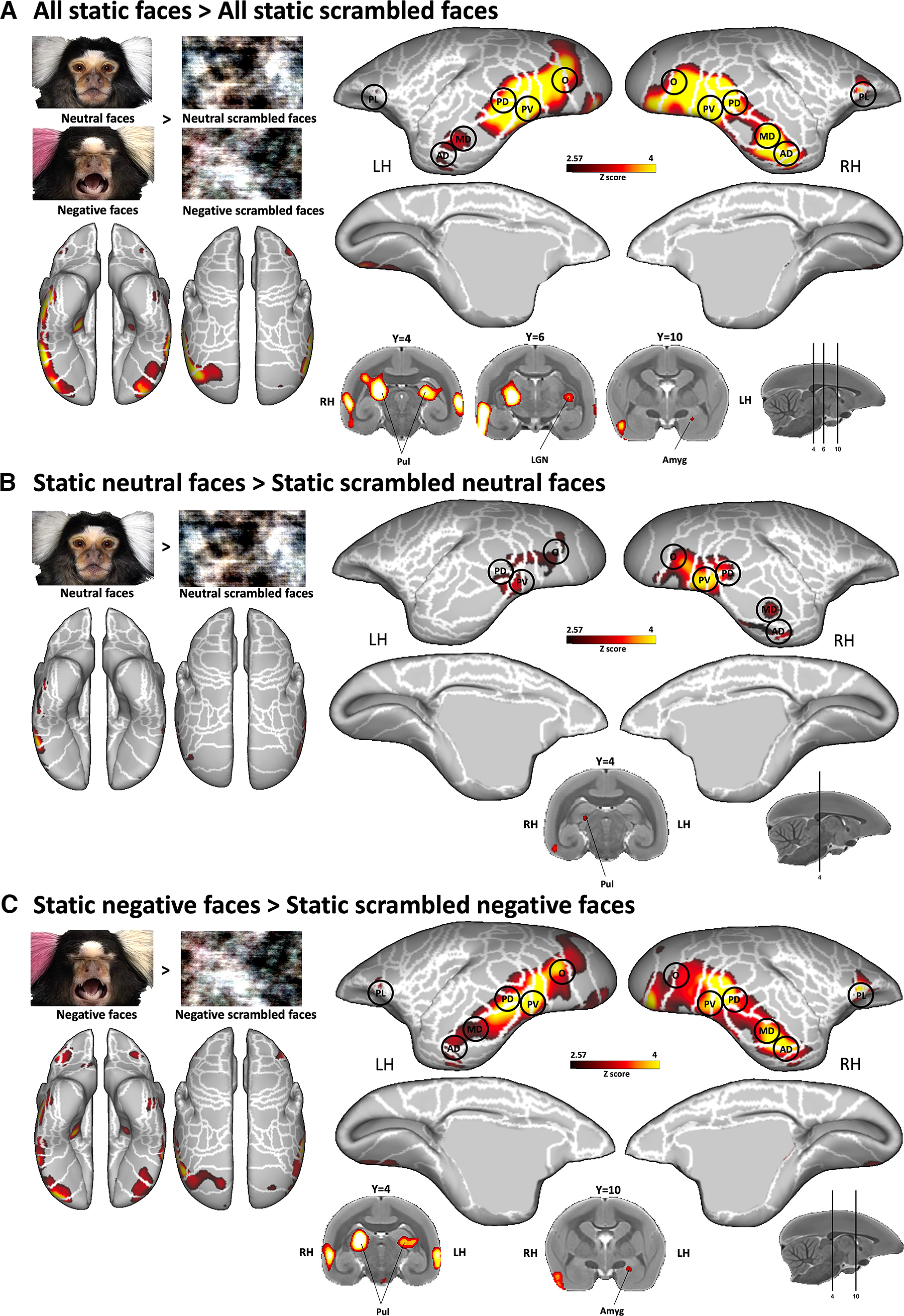

Figure 6.

Brain networks involved in static facial expression processing. A–C, Group functional maps showing significantly greater activations for the comparison between the following: A, all emotional faces and all scrambled emotional faces; B, neutral facial expression and its scrambled version; and C, negative facial expression and its scrambled version. Group functional topology comparisons are displayed on the left and right fiducial marmoset cortical surfaces (lateral, medial, dorsal, and ventral views) as well as on coronal slices to illustrate the activations in subcortical areas. The white line delineates the regions based on the Paxinos parcellation of the NIH marmoset brain atlas (Liu et al., 2018). The black circles delineate the position of face patches identified by our face-localizer task depicted in Figure 2. The brain areas reported have activation threshold corresponding to z scores > 2.57 (p < 0.01, AFNI 3dttest++).

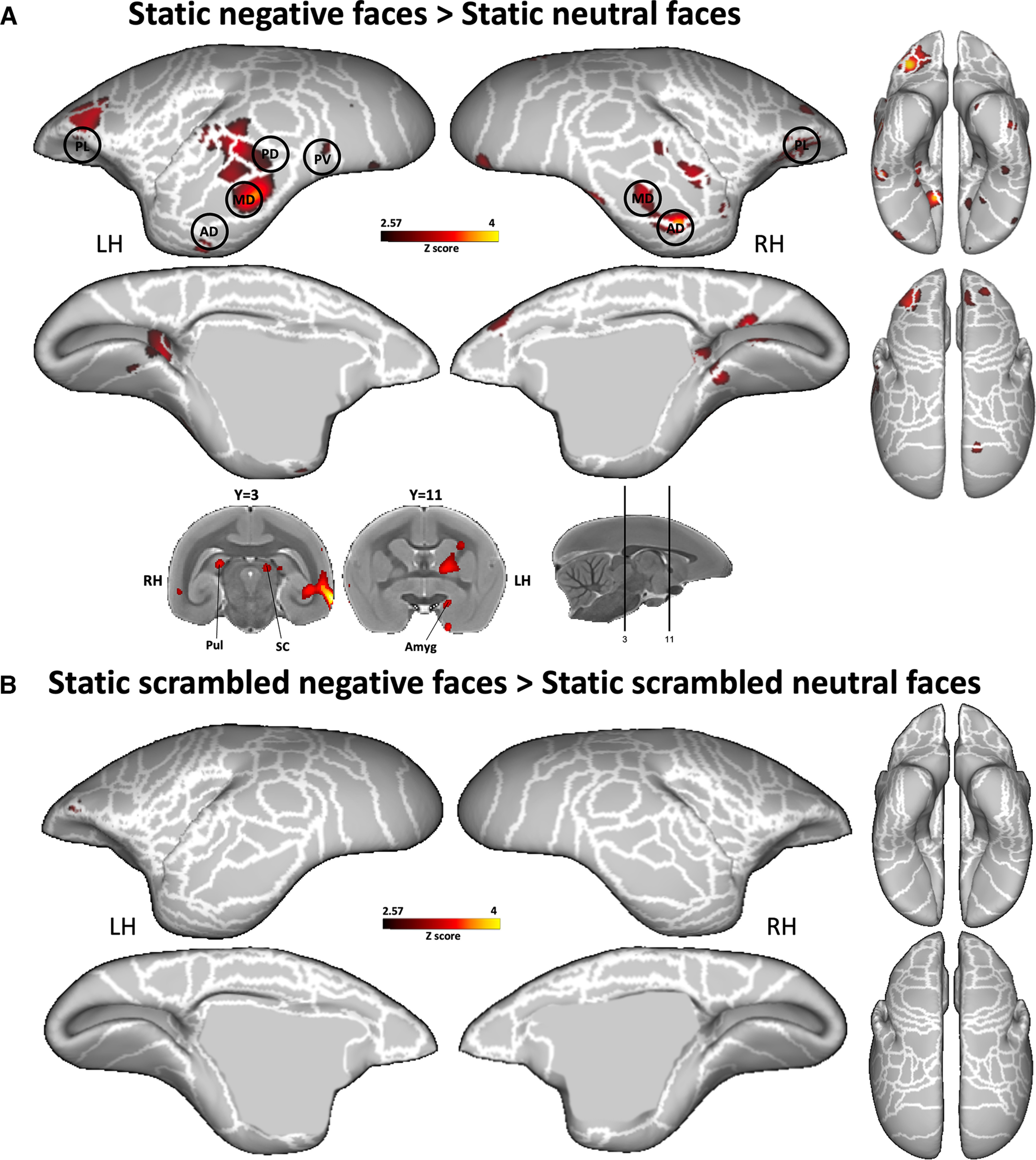

Finally, by comparing negative with neutral static faces (Fig. 7A), we found higher activations mostly in temporal cortex (in MST, TPO, FST, PGa-IPa, TE1, TE2, TE3, 36, and STR areas) and in frontal cortex (in 8Av, 8aD, 6DR, 45, 47L, 47M, and 47O areas, and in orbitofrontal areas 11, 13M, and 13L). These activations comprised face patches MD, AD, and PL in the right hemisphere and PV, PD, MD, AD, and PL in the left hemisphere. Subcortical activations were also observed in right pulvinar, left SC, and left amygdala. These activations in temporal PV, PD, MD, and AD face patches as well as in the SC and amygdala were similar to those identified by dynamic negative faces (Fig. 5). Furthermore, as in the dynamic task, we did not find any difference when we compared static scrambled negative faces with static scrambled neutral faces (Fig. 7B).

Figure 7.

Comparison between the two static facial expressions. A, B, Group functional maps showing significantly greater activations for negative compared with neutral face pictures (A) and for scrambled negative face pictures compared with scrambled neutral face pictures (B) displayed on the left and right fiducial marmoset cortical surfaces (lateral, medial, dorsal, and ventral views). Coronal slices represent the activations in subcortical areas. The white line delineates the regions based on the Paxinos parcellation of the NIH marmoset brain atlas (Liu et al., 2018). The black circles delineate the position of the face patches identified by our face-localizer task and depicted in Figure 2. The brain areas reported have activation threshold corresponding to z scores > 2.57 (p < 0.01, AFNI 3dttest++).

Temporal and frontal face patches are directly involved in dynamic facial expression processing: ROI analysis

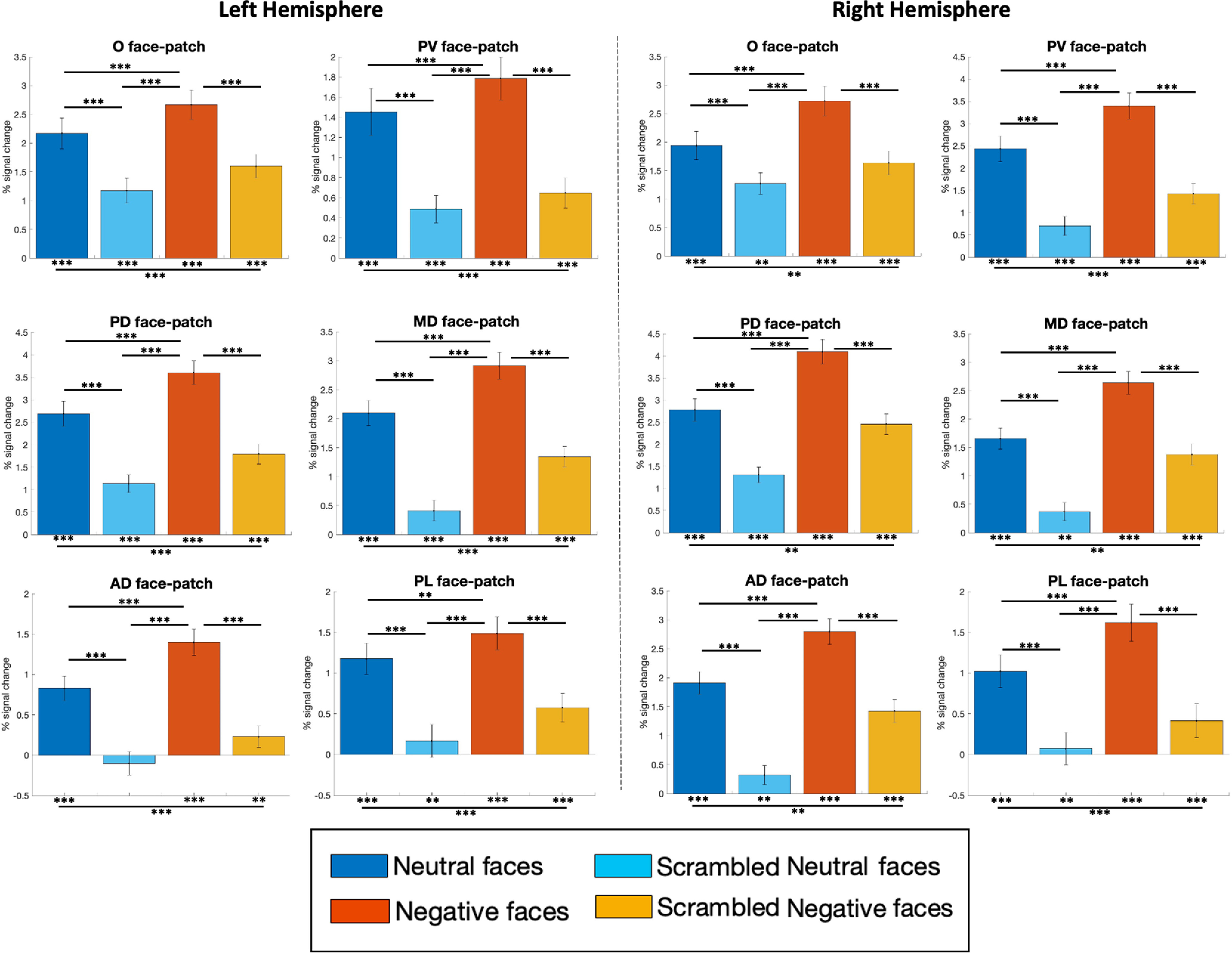

From the group activation maps obtained from our face-localizer task (Fig. 2), we created 10 temporal ROIs (i.e., left and right face patches O, PV, PD, MD, and AD) as well as two frontal ROIs (i.e., left and right PL face patch; see Materials and Methods section). Following the extraction of the time series from these ROIs, we compared the response magnitudes between the conditions (Fig. 8).

Figure 8.

ROI analysis: differences in the percentages of signal change responses among the four conditions (i.e., neutral and negative facial expressions and their scrambled versions) in face-selective patches. The magnitude of the percentage of signal change for each condition has been extracted from time series of 12 (6 right, 6 left) functional regions of interest extracted from the activation map obtained by faces > objects contrast in the face-localizer task (Fig. 2, Table 1). The differences from baseline (represented by asterisks below each bar graph) and between conditions (represented by asterisk on horizontal bar) were tested using one-sided paired t tests corrected for multiple comparisons (FDR): *p < 0.05, **p < 0.01, and ***p < 0.001. The error bars correspond to the SEM.
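As a rough illustration of the quantities plotted in the ROI figure, the sketch below computes a percentage of signal change and its SEM. The exact formula used in the study is not given in this section, so the definition here (condition mean relative to baseline mean) is an assumption, as are the function names and the example values.

```python
from math import sqrt
from statistics import mean, stdev


def percent_signal_change(condition_signal, baseline_signal):
    """Assumed definition: 100 * (condition mean - baseline mean) / baseline mean."""
    base = mean(baseline_signal)
    if base == 0:
        raise ValueError("baseline mean must be non-zero")
    return 100.0 * (mean(condition_signal) - base) / base


def sem(values):
    """Standard error of the mean (sample SD divided by sqrt(n))."""
    if len(values) < 2:
        raise ValueError("at least two values are required")
    return stdev(values) / sqrt(len(values))


# Hypothetical ROI time-series samples (arbitrary units).
baseline = [100.0, 101.0, 99.0, 100.0]
negative_faces = [102.0, 103.0, 101.0, 102.0]

print(percent_signal_change(negative_faces, baseline))
print(sem([1.8, 2.1, 2.4, 1.9]))  # SEM across hypothetical per-run values
```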

All right and left face patches showed significantly stronger activations for neutral faces compared with scrambled ones (paired t tests with FDR correction; left hemisphere: O, t(78) = 3.97; PV, t(78) = 4.19; PD, t(78) = 5.23; MD, t(78) = 6.90; AD, t(78) = 4.67; PL, t(78) = 3.90; all p < 0.001; right hemisphere: O, t(78) = 3.19; PV, t(78) = 6.78; PD, t(78) = 6.25; MD, t(78) = 6.79; AD, t(78) = 7.07; PL, t(78) = 3.64; all p < 0.001), but also for negative faces compared with scrambled ones (paired t tests with FDR correction; left hemisphere: O, t(78) = 3.69; PV, t(78) = 4.95; PD, t(78) = 6.90; MD, t(78) = 7.13; AD, t(78) = 6.41; PL, t(78) = 3.98; all p < 0.001; right hemisphere: O, t(78) = 4.39; PV, t(78) = 7.36; PD, t(78) = 7.00; MD, t(78) = 7.08; AD, t(78) = 6.82; PL, t(78) = 4.66; all p < 0.001).

All of these ROIs were also significantly more activated by negative faces compared with neutral faces (paired t tests with FDR correction; left hemisphere: O: t(78) = 2.35; p = 0.001; PV: t(78) = 2.06; p < 0.001; PD: t(78) = 3.85; p < 0.001; MD: t(78) = 4.40; p < 0.001; AD: t(78) = 3.34; p < 0.001; PL: t(78) = 1.58; p = 0.004; right hemisphere: O, t(78) = 3.83; PV, t(78) = 4.34; PD, t(78) = 6.24; MD, t(78) = 5.57; AD, t(78) = 4.60; PL, t(78) = 2.55; all p < 0.001).
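The ROI tests above are reported with FDR correction. The text does not say which FDR procedure was applied, so the sketch below assumes the common Benjamini-Hochberg step-up adjustment; the function name and the example p values are hypothetical.

```python
def benjamini_hochberg(p_values):
    """Benjamini-Hochberg adjusted p values, returned in the original order."""
    m = len(p_values)
    # Indices of the p values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted


# Hypothetical uncorrected p values for four ROIs.
raw = [0.01, 0.04, 0.03, 0.005]
print(benjamini_hochberg(raw))
```

An ROI would be declared significant when its adjusted p value falls below the chosen level (e.g., 0.05).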

Respiration rate

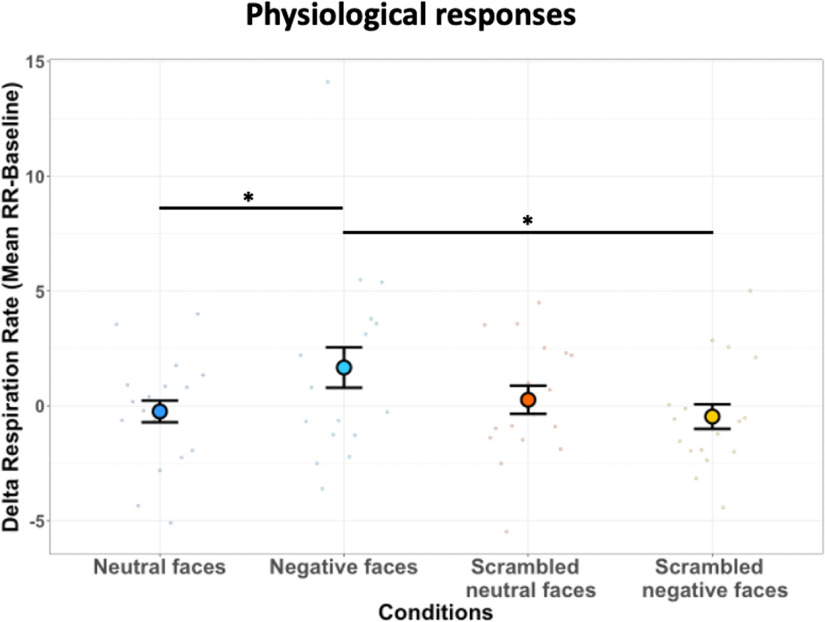

We recorded the respiration rates of monkeys to determine whether observation of negative facial expressions influenced their arousal state. We computed the difference between the mean RR frequency obtained during the presentation of videos and the mean RR frequency during the baseline period (i.e., δ RR; see Materials and Methods). Figure 9 illustrates the δ RR frequency of animals as a function of the viewing condition: neutral and negative facial expressions as well as the scrambled version of each facial expression. The statistical analysis reveals a significant increase of δ RR for negative faces compared with scrambled negative faces (t(16) = 1.93, p = 0.04) and with neutral faces (t(16) = 1.85, p = 0.04).

Figure 9.

Respiration rate as a function of the viewing condition. Dot plot depicting δ RR of all six marmosets in bpm (i.e., mean RR during block of video clips – mean RR during baseline) according to each condition: neutral, negative, scrambled neutral, and scrambled negative faces. In the plot, the mean δ RR for each condition is represented by a different colored dot. For each condition, the vertical bars represent the SEM and the small dots indicate individual values obtained for each run. The following three paired t tests were performed: neutral faces versus scrambled neutral faces, negative faces versus scrambled negative faces, and negative faces versus neutral faces (*p < 0.05, **p < 0.01, and ***p < 0.001).

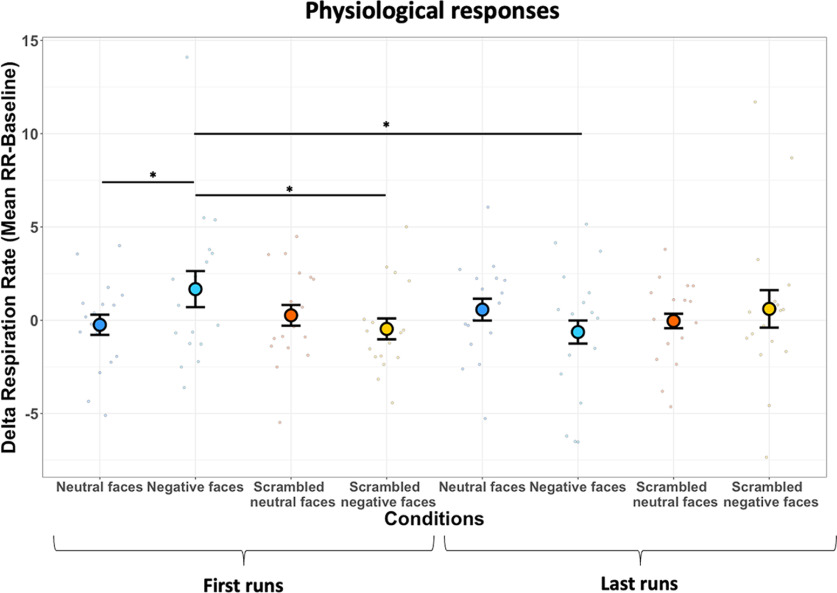

As described in the Materials and Methods section, we included only the first run of each session for all animals to avoid a habituation effect on physiological signals caused by the repeated viewing of the negative faces within the same session, as described previously in humans for repeated exposures to fear stimuli (Watson et al., 1972; van Hout and Emmelkamp, 2002; Olatunji et al., 2009; Zaback et al., 2019). To test for this effect, we performed the same analysis with the last run of each session, and we compared each condition between first and last runs with paired t tests (Fig. 10). The analysis reveals a significant decrease of δ RR for the negative faces condition when we compared the first with the last runs (t(16) = 1.84, p = 0.04), confirming a habituation effect in these animals.

Figure 10.

Respiration rate as a function of the viewing condition for first versus last runs. Dot plot depicting δ RR of all six marmosets in bpm (i.e., mean RR during blocks of video clips – mean RR during baseline) according to each condition for each first run and last run. In the plot, the mean δ RR for each condition is represented by a different colored dot. The vertical bars represent the SEM, and the small dots indicate individual values obtained for each run. Four paired t tests were performed to identify the difference between the first and last run conditions (*p < 0.05, **p < 0.01, and ***p < 0.001).

Discussion

The correct identification of facial expressions is critical for understanding the intentions of others during social communication in the daily life of all primates. Previous studies have shown that humans and Old World macaque monkeys exhibit higher activations in face-selective regions and surrounding cortices during the processing of emotional faces. Here we examined whether New World common marmoset monkeys show a similar effect. We presented videos of marmoset faces depicting either a neutral or negative facial expression and their corresponding scrambled versions during ultra-high-field fMRI acquisitions. We also measured the respiration rate of the animals during the scans to determine whether marmosets showed physiological reactions to the videos. As it is known that dynamic faces facilitate the recognition of facial expressions in humans (Ceccarini and Caudek, 2013), we also performed a similar task where we presented pictures of static marmoset faces instead of videos. In this way, we were able to determine the effect of facial motion in the processing of facial expression recognition in marmosets.

Faces provide a rich source of social information. In primates, facial behavior is of particular importance during social interactions, with changing attributes such as facial expressions. The mechanisms allowing the processing of facial expressions in humans and macaques have been identified in several fMRI studies. In humans, the face-selective occipitotemporal areas responding more strongly to faces than nonface objects—including the fusiform gyrus (i.e., fusiform face area), the anterior and posterior regions of the STS, and the inferotemporal cortex—as well as areas in the dorsolateral prefrontal cortex, the orbitofrontal cortex, and the amygdala are among the multiple structures that participate in processing and recognizing facial emotions (Kanwisher et al., 1997; Puce et al., 1998; Haxby et al., 2000; Posamentier and Abdi, 2003; Hennenlotter and Schroeder, 2006; Fusar-Poli et al., 2009; Arsalidou et al., 2011; Zinchenko et al., 2018).

In Old World primates, these structures are situated along the occipitotemporal axis, mainly along the STS, in ventral and medial temporal lobes, and in some subregions of the inferior temporal cortex, as well as in frontal cortex and some subcortical regions such as amygdala and hippocampus—regions also known to be face selective (Tsao et al., 2003; Pinsk et al., 2005; Hadj-Bouziane et al., 2008; Bell et al., 2009; Weiner and Grill-Spector, 2015).

We demonstrated that, as in humans and macaques, areas involved in face processing in marmosets are also involved in the processing of facial expressions. In our maps, peaks of activations obtained when contrasting each facial expression with its scrambled version were located in the temporal face-selective patches (O, PV, PD, MD, and AD) identified by our face-localizer task and previously identified in marmosets when contrasting neutral face pictures with neutral objects (Hung et al., 2015). We also found activations in frontal cortex, located in areas 45/47, that we termed PL. This area had also been identified in another study when contrasting videos of marmoset faces with videos of scrambled ones (Schaeffer et al., 2020). These regions along the occipitotemporal axis are comparable to those identified in macaques and humans (Tsao et al., 2008; Weiner and Grill-Spector, 2015). Our activations in posterior and anterior TE (i.e., TE3, TE2, and TE1) could correspond to the location of the STS in macaques and humans (Yovel and Freiwald, 2013). This suggests that, as in humans and macaque monkeys (Calder and Young, 2005), a division between dorsal and ventral axes appears for the processing of facial expressions in marmosets. Activations in V4T, FST, TE1, TE2, and TE3 may constitute a dorsal pathway that is distinct from the more ventral V4 and TEO areas. This suggests that the progression of areas involved in facial expression processing along this dorsoventral axis may be preserved across Old World and New World primates. However, the right-hemisphere lateralization for face processing described in humans (Haxby et al., 2000) was not observed in our results, similar to what has been reported previously in macaques (Tsao et al., 2003, 2008). This suggests that the processing of facial expressions in marmosets may be distributed across both hemispheres, as in macaques.

This network was not restricted to these face patches but also included other inferior temporal areas (e.g., areas 35, 36, TF, and entorhinal cortex), frontal regions, and subcortical areas. Our activations in orbitofrontal cortex (areas 11, 13M, 13L, OPAI, and OPro) are in line with previous fMRI studies in humans (Vuilleumier et al., 2001; Adolphs, 2002; Beyer et al., 2015) and lesion studies in humans and macaques (Hornak et al., 1996; Willis et al., 2014) demonstrating a direct role of orbitofrontal cortex in the recognition of facial expressions. This suggests that the frontal cortex in marmosets may also participate in the perception and recognition of facial expressions. At a subcortical level, the activations in the amygdala are also in line with human and macaque studies demonstrating modulation of this area during viewing of facial expressions and emotional valence (Brothers et al., 1990; Adolphs et al., 1994; Breiter et al., 1996; Morris et al., 1996; Posamentier and Abdi, 2003; Hennenlotter and Schroeder, 2006; Kuraoka and Nakamura, 2006; Gothard et al., 2007; Hoffman et al., 2007; Vuilleumier and Pourtois, 2007; Hadj-Bouziane et al., 2008, 2012; Arsalidou et al., 2011; Mattavelli et al., 2014). The activation of the SC and pulvinar supports a model in which a subcortical pathway through these structures provides the amygdala with visual information about facial expressions, allowing for a rapid and subconscious detection of emotions (Johnson, 2005; Tamietto and De Gelder, 2010).

When we used static facial expressions, we found similar activations. However, some differences emerged, mainly in premotor area 6V and in the posterior part of the cerebellum, where we only found activations for dynamic faces. Electrical microstimulation of area 6V has been shown to evoke shoulder, back, neck, and facial movements in marmosets (Selvanayagam et al., 2019). In addition, this area has been shown to be activated by social interaction observation in marmosets (Cléry et al., 2021). In human fMRI studies on facial mimicry, described as the process where facial expressions induce identical expressions in an observer (Nieuwburg et al., 2021), it has been shown that the perception of dynamic facial expressions induced activations in brain regions associated with facial movements (parts of primary motor and premotor cortex) and regions associated with emotional processing (Carr et al., 2003), which were also correlated with facial muscle responses (Rymarczyk et al., 2018). Furthermore, meta-analyses of neuroimaging studies have reported cerebellar activation in tasks involving dynamic facial expressions, in particular in the posterior lobe (vermal lobule VII), supporting an anterior sensorimotor versus posterior emotional dichotomy in the human cerebellum (Fusar-Poli et al., 2009; Stoodley and Schmahmann, 2009; Baumann and Mattingley, 2012; Zinchenko et al., 2018; Sato et al., 2019). In clinical populations, lesion studies have shown that cerebellar impairment was associated with deficits in emotional processing (Bolceková et al., 2017; Gold and Toomey, 2018). The more widespread network observed during the processing of dynamic facial expressions suggests that motion recruits additional regions that could provide additional information for the processing of facial expressions.

With our activation maps and ROI analysis, we further demonstrated that face-selective patches and some subcortical areas like the amygdala and the hypothalamus were more activated by negative faces than by neutral faces. Importantly, the activations obtained for dynamic negative faces were not caused by low-level properties of the videos, as the contrast between scrambled versions of dynamic neutral and negative faces did not show significant activation differences. The higher responses induced by dynamic negative faces were also accompanied by an increase of RR, supporting a direct link between the observation of negative faces and a modulation of the physiological state. Interestingly, the hypothalamus is well known to have a central role in the integration of autonomic responses required for homeostasis and adaptation to internal or external stimuli, allowing it to monitor and regulate many variables in the body (Benarroch, 2009). Recently, an fMRI study in humans has demonstrated that exposure to a stress versus a no-stress neutral experiment increased neural activation in brain circuits underlying the stress response (i.e., amygdala, hypothalamus, and midbrain), which were accompanied by increased average heart rate and plasma cortisol response (Sinha et al., 2016). Therefore, physiological threats (e.g., emotional responses to negative faces) may be relayed to occipitotemporal areas and some subcortical areas, like the amygdala, via the stress-integrative brain center located in the hypothalamus, influencing autonomic nervous system (ANS) activities in marmosets (McCorry, 2007; Bains et al., 2015). This activation of the sympathetic branch of the ANS would lead to an increased level of arousal, as observed in many previous physiological studies in humans (Lang et al., 1993; Bradley et al., 2001; Bernat et al., 2006; Fujimura et al., 2013).

In summary, we report evidence from task-based fMRI that face-selective brain regions in New World marmosets are involved not only in basic face detection, but also in the processing of facial expressions. This effect was stronger for dynamic facial stimuli than for static images. We further demonstrate that negative facial expressions increased arousal levels, as indicated by higher respiration rates. The ability to interpret facial expressions and to activate the ANS for negative facial stimuli is crucial for effective social interactions (Russell, 1997).

Our findings of functional brain mapping and physiological state in awake marmosets highlight the potential of this species as a powerful model for some neuropsychiatric and neurodevelopmental disorders known to induce deficits in interpreting and understanding facial expressions (Harms et al., 2010; Monk et al., 2010; Dickstein and Xavier Castellanos, 2012; Uljarevic and Hamilton, 2013; Contreras-Rodríguez et al., 2014; Azuma et al., 2015; Safar et al., 2021). For example, transgenic marmoset models of autism spectrum disorder (Watanabe et al., 2021) or obsessive-compulsive disorder, conditions in which patients are known to have difficulties in navigating social interactions and understanding the emotions of others (Gaigg, 2012; Eack et al., 2015; Thorsen et al., 2018), could be used to gain insights into the neurobiology, molecular characterization, and underlying social-cognitive deficits of these disorders. In vivo calcium imaging (Ebina et al., 2018) of neural activity in the temporal lobe of socially behaving marmosets could provide information about the spatial organization of the cortical dynamics underlying face detection and recognition mechanisms. Finally, lesion studies in the same areas could help to determine various levels of impairment in the processing and understanding of facial expressions.

Footnotes

Support was provided for this research by the Canadian Institutes of Health Research (Grant FRN 148365), the Canada First Research Excellence Fund to BrainsCAN, and a Discovery grant by the Natural Sciences and Engineering Research Council of Canada to S.E. We thank Cheryl Vander Tuin, Whitney Froese, Hannah Pettypiece, and Miranda Bellyou for animal preparation and care; and Dr. Alex Li for scanning assistance.

The authors declare no competing financial interests.

References

- Adolphs R (2002) Recognizing emotion from facial expressions: psychological and neurological mechanisms. Behav Cogn Neurosci Rev 1:21–62. 10.1177/1534582302001001003

- Adolphs R, Tranel D, Damasio H, Damasio A (1994) Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature 372:669–672. 10.1038/372669a0

- Aggleton JP, Passingham RE (1981) Syndrome produced by lesions of the amygdala in monkeys (Macaca mulatta). J Comp Physiol Psychol 95:961–977. 10.1037/h0077848

- Albuquerque N, Guo K, Wilkinson A, Savalli C, Otta E, Mills D (2016) Dogs recognize dog and human emotions. Biol Lett 12:20150883. 10.1098/rsbl.2015.0883

- Arsalidou M, Morris D, Taylor MJ (2011) Converging evidence for the advantage of dynamic facial expressions. Brain Topogr 24:149–163. 10.1007/s10548-011-0171-4

- Azuma R, Deeley Q, Campbell LE, Daly EM, Giampietro V, Brammer MJ, Murphy KC, Murphy DG (2015) An fMRI study of facial emotion processing in children and adolescents with 22q11.2 deletion syndrome. J Neurodev Disord 7:1.

- Bains JS, Cusulin JIW, Inoue W (2015) Stress-related synaptic plasticity in the hypothalamus. Nat Rev Neurosci 16:377–388. 10.1038/nrn3881

- Baumann O, Mattingley JB (2012) Functional topography of primary emotion processing in the human cerebellum. Neuroimage 61:805–811. 10.1016/j.neuroimage.2012.03.044

- Bell AH, Hadj-Bouziane F, Frihauf JB, Tootell RBH, Ungerleider LG (2009) Object representations in the temporal cortex of monkeys and humans as revealed by functional magnetic resonance imaging. J Neurophysiol 101:688–700. 10.1152/jn.90657.2008

- Benarroch EE (2009) Central regulation of autonomic function. In: Encyclopedia of neuroscience (Binder MD, Hirokawa N, Windhorst U, eds), pp 654–657. Berlin: Springer.

- Bernat E, Patrick CJ, Benning SD, Tellegen A (2006) Effects of picture content and intensity on affective physiological response. Psychophysiology 43:93–103. 10.1111/j.1469-8986.2006.00380.x

- Beyer F, Münte TF, Göttlich M, Krämer UM (2015) Orbitofrontal cortex reactivity to angry facial expression in a social interaction correlates with aggressive behavior. Cereb Cortex 25:3057–3063. 10.1093/cercor/bhu101

- Blair RJR (2003) Facial expressions, their communicatory functions and neuro-cognitive substrates. Philos Trans R Soc Lond B Biol Sci 358:561–572. 10.1098/rstb.2002.1220

- Bolceková E, Mojzeš M, Van Tran Q, Kukal J, Ostrý S, Kulišták P, Rusina R (2017) Cognitive impairment in cerebellar lesions: a logit model based on neuropsychological testing. Cerebellum Ataxias 4:13. 10.1186/s40673-017-0071-9

- Bradley MM, Codispoti M, Cuthbert BN, Lang PJ (2001) Emotion and motivation I: defensive and appetitive reactions in picture processing. Emotion 1:276–298. 10.1037/1528-3542.1.3.276

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR (1996) Response and habituation of the human amygdala during visual processing of facial expression. Neuron 17:875–887. 10.1016/s0896-6273(00)80219-6

- Brothers L, Ring B, Kling A (1990) Response of neurons in the macaque amygdala to complex social stimuli. Behav Brain Res 41:199–213. 10.1016/0166-4328(90)90108-q

- Calder AJ, Young AW (2005) Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci 6:641–651.

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL (2003) Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci U S A 100:5497–5502. 10.1073/pnas.0935845100

- Ceccarini F, Caudek C (2013) Anger superiority effect: the importance of dynamic emotional facial expressions. Vis Cogn 21:498–540. 10.1080/13506285.2013.807901

- Cléry JC, Hori Y, Schaeffer DJ, Menon RS, Everling S (2021) Neural network of social interaction observation in marmosets. Elife 10:e65012. 10.7554/eLife.65012

- Contreras-Rodríguez O, Pujol J, Batalla I, Harrison BJ, Bosque J, Ibern-Regàs I, Hernández-Ribas R, Soriano-Mas C, Deus J, López-Solà M, Pifarré J, Menchón JM, Cardoner N (2014) Disrupted neural processing of emotional faces in psychopathy. Soc Cogn Affect Neurosci 9:505–512. 10.1093/scan/nst014

- Cox RW (1996) AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173. 10.1006/cbmr.1996.0014

- Darwin C (2004) The expression of the emotions in man and animals. Cambridge, UK: Cambridge UP.

- Dickstein DP, Xavier Castellanos F (2012) Face processing in attention deficit/hyperactivity disorder. Curr Top Behav Neurosci 9:219–237.

- Eack SM, Mazefsky CA, Minshew NJ (2015) Misinterpretation of facial expressions of emotion in verbal adults with autism spectrum disorder. Autism 19:308–315. 10.1177/1362361314520755

- Ebina T, Masamizu Y, Tanaka YR, Watakabe A, Hirakawa R, Hirayama Y, Hira R, Terada SI, Koketsu D, Hikosaka K, Mizukami H, Nambu A, Sasaki E, Yamamori T, Matsuzaki M (2018) Two-photon imaging of neuronal activity in motor cortex of marmosets during upper-limb movement tasks. Nat Commun 9:1879. 10.1038/s41467-018-04286-6

- Ferretti V, Papaleo F (2019) Understanding others: emotion recognition in humans and other animals. Genes Brain Behav 18:e12544. 10.1111/gbb.12544

- Fujimura T, Katahira K, Okanoya K (2013) Contextual modulation of physiological and psychological responses triggered by emotional stimuli. Front Psychol 4:212. 10.3389/fpsyg.2013.00212

- Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Benedetti F, Abbamonte M, Gasparotti R, Barale F, Perez J, McGuire P, Politi P (2009) Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci 34:418–432.

- Gaigg SB (2012) The interplay between emotion and cognition in autism spectrum disorder: implications for developmental theory. Front Integr Neurosci 6:113. 10.3389/fnint.2012.00113

- Gilbert KM, Cléry JC, Gati JS, Hori Y, Johnston KD, Mashkovtsev A, Selvanayagam J, Zeman P, Menon RS, Schaeffer DJ, Everling S (2021) Simultaneous functional MRI of two awake marmosets. Nat Commun 12:6608. 10.1038/s41467-021-26976-4

- Gilbert KM, Dureux A, Jafari A, Zanini A, Zeman P, Menon RS, Everling S (2023) A radiofrequency coil to facilitate task-based fMRI of awake marmosets. J Neurosci Methods 383:109737. 10.1016/j.jneumeth.2022.109737

- Gold AK, Toomey R (2018) The role of cerebellar impairment in emotion processing: a case study. Cerebellum Ataxias 5:11. 10.1186/s40673-018-0090-1

- Gothard KM, Battaglia FP, Erickson CA, Spitler KM, Amaral DG (2007) Neural responses to facial expression and face identity in the monkey amygdala. J Neurophysiol 97:1671–1683. 10.1152/jn.00714.2006

- Hadj-Bouziane F, Bell AH, Knusten TA, Ungerleider LG, Tootell RBH (2008) Perception of emotional expressions is independent of face selectivity in monkey inferior temporal cortex. Proc Natl Acad Sci U S A 105:5591–5596. 10.1073/pnas.0800489105

- Hadj-Bouziane F, Liu N, Bell AH, Gothard KM, Luh WM, Tootell RBH, Murray EA, Ungerleider LG (2012) Amygdala lesions disrupt modulation of functional MRI activity evoked by facial expression in the monkey inferior temporal cortex. Proc Natl Acad Sci U S A 109:E3640–E3648. 10.1073/pnas.1218406109

- Harms MB, Martin A, Wallace GL (2010) Facial emotion recognition in autism spectrum disorders: a review of behavioral and neuroimaging studies. Neuropsychol Rev 20:290–322. 10.1007/s11065-010-9138-6

- Haxby JV, Hoffman EA, Gobbini MI (2000) The distributed human neural system for face perception. Trends Cogn Sci 4:223–233. 10.1016/s1364-6613(00)01482-0

- Hennenlotter A, Schroeder U (2006) Partly dissociable neural substrates for recognizing basic emotions: a critical review. Prog Brain Res 156:443–456.

- Hoffman KL, Gothard KM, Schmid MCC, Logothetis NK (2007) Facial-expression and gaze-selective responses in the monkey amygdala. Curr Biol 17:766–772. 10.1016/j.cub.2007.03.040

- Hornak J, Rolls ET, Wade D (1996) Face and voice expression identification in patients with emotional and behavioural changes following ventral frontal lobe damage. Neuropsychologia 34:247–261. 10.1016/0028-3932(95)00106-9

- Hung CC, Yen CC, Ciuchta JL, Papoti D, Bock NA, Leopold DA, Silva AC (2015) Functional mapping of face-selective regions in the extrastriate visual cortex of the marmoset. J Neurosci 35:1160–1172. 10.1523/JNEUROSCI.2659-14.2015

- Johnson MH (2005) Subcortical face processing. Nat Rev Neurosci 6:766–774. 10.1038/nrn1766

- Johnston KD, Barker K, Schaeffer L, Schaeffer D, Everling S (2018) Methods for chair restraint and training of the common marmoset on oculomotor tasks. J Neurophysiol 119:1636–1646. 10.1152/jn.00866.2017

- Kanwisher N, McDermott J, Chun MM (1997) The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci 17:4302–4311. 10.1523/JNEUROSCI.17-11-04302.1997

- Kuraoka K, Nakamura K (2006) Impacts of facial identity and type of emotion on responses of amygdala neurons. Neuroreport 17:9–12. 10.1097/01.wnr.0000194383.02999.c5

- Lang PJ, Greenwald MK, Bradley MM, Hamm AO (1993) Looking at pictures: affective, facial, visceral, and behavioral reactions. Psychophysiology 30:261–273. 10.1111/j.1469-8986.1993.tb03352.x

- Liu C, Ye FQ, Yen CCC, Newman JD, Glen D, Leopold DA, Silva AC (2018) A digital 3D atlas of the marmoset brain based on multi-modal MRI. Neuroimage 169:106–116. 10.1016/j.neuroimage.2017.12.004

- Liu N, Hadj-Bouziane F, Moran R, Ungerleider LG, Ishai A (2017) Facial expressions evoke differential neural coupling in macaques. Cereb Cortex 27:1524–1531. 10.1093/cercor/bhv345