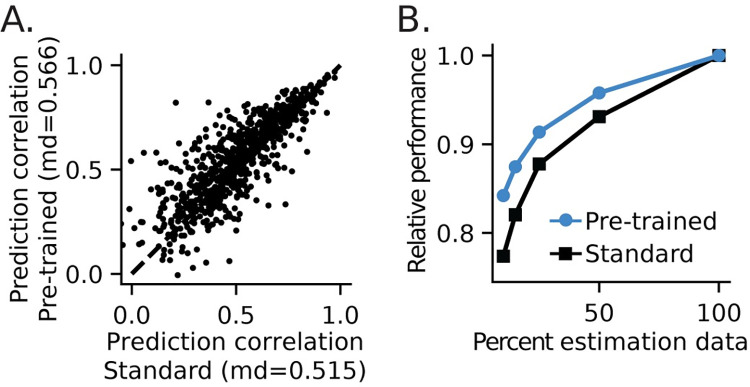

Fig 9. Generalization of a pre-trained 1Dx2-CNN model to smaller datasets.

A. A pre-trained model was fit to every stimulus with neurons from one recording site excluded. The output layer was then re-fit for the excluded neurons, using a fraction of the available stimuli. The standard model was fit to all neurons, but only a subset of stimuli was used for the entire fit. Scatter plot compares prediction correlation between the pre-trained and standard models using 10% of available data. On average, the pre-trained model more accurately predicted the subsampled data (signed-rank test, p = 2.91 × 10⁻²¹). B. Median prediction correlation for pre-trained and standard models fit to subsampled data, normalized to performance of the model fit to the full dataset. Improved accuracy of the pre-trained model was consistent across all subsample sizes (signed-rank test, p < 10⁻⁹). Performance converges when 100% of data are used to fit both models.