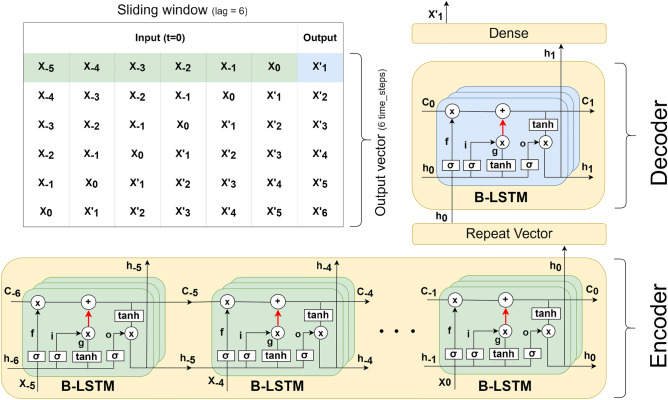

Figure 2.

The encoder-decoder architecture of the Bayesian Long Short-Term Memory (B-LSTM) network. $x_i$ stands for the input at time step $i$. $h_i$ stands for the hidden state, which stores information from recent time steps (short-term memory). $c_i$ stands for the cell state, which stores all processed information from the past (long-term memory). The number of input time steps in the encoder is tuned as a hyper-parameter, while the decoder outputs a single time step. The depth and number of layers are further hyper-parameters tuned during model optimisation. The red arrows indicate recurrent dropout, which remains active during testing and prediction. The figure shows an example with a time lag of 6 input steps and a single layer. The final hidden state produced by the encoder is passed to the Repeat Vector layer, which converts it from a two-dimensional output into the three-dimensional input expected by the decoder. The decoder processes this input and produces its own final hidden state, which is then passed to a dense layer to produce the output. The table illustrates the sliding-window method used to forecast multiple time steps during testing and prediction: the output at one time step is used as an input to forecast the next. In this way, as many time steps as needed can be predicted. In the table, an output vector of 6 time steps is predicted.
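The sliding-window forecasting described in the caption can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the names `recursive_forecast`, `predict_one_step`, `time_lag` and `horizon` are hypothetical, and the stand-in predictor `last_plus_one` merely takes the place of the trained B-LSTM, which would map a window of past values to a single next value.

```python
from typing import Callable, List, Sequence


def recursive_forecast(
    history: Sequence[float],
    predict_one_step: Callable[[List[float]], float],
    time_lag: int = 6,
    horizon: int = 6,
) -> List[float]:
    """Forecast `horizon` steps by feeding each prediction back as input.

    `predict_one_step` is a placeholder for the trained single-step model
    (assumed here to accept a list of `time_lag` values).
    """
    if len(history) < time_lag:
        raise ValueError("history must contain at least time_lag values")

    # Start from the most recent `time_lag` observations.
    window = list(history[-time_lag:])
    forecasts: List[float] = []

    for _ in range(horizon):
        y_hat = predict_one_step(window)
        forecasts.append(y_hat)
        # Slide the window: drop the oldest value, append the new prediction.
        window = window[1:] + [y_hat]

    return forecasts


def last_plus_one(window: List[float]) -> float:
    """Toy stand-in predictor (an assumption): the last value plus one."""
    return window[-1] + 1.0


if __name__ == "__main__":
    observed = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
    print(recursive_forecast(observed, last_plus_one))
```

With a time lag of 6 and a horizon of 6, as in the figure's example, the final window consists almost entirely of the model's own predictions, so forecast errors can compound as the horizon grows.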