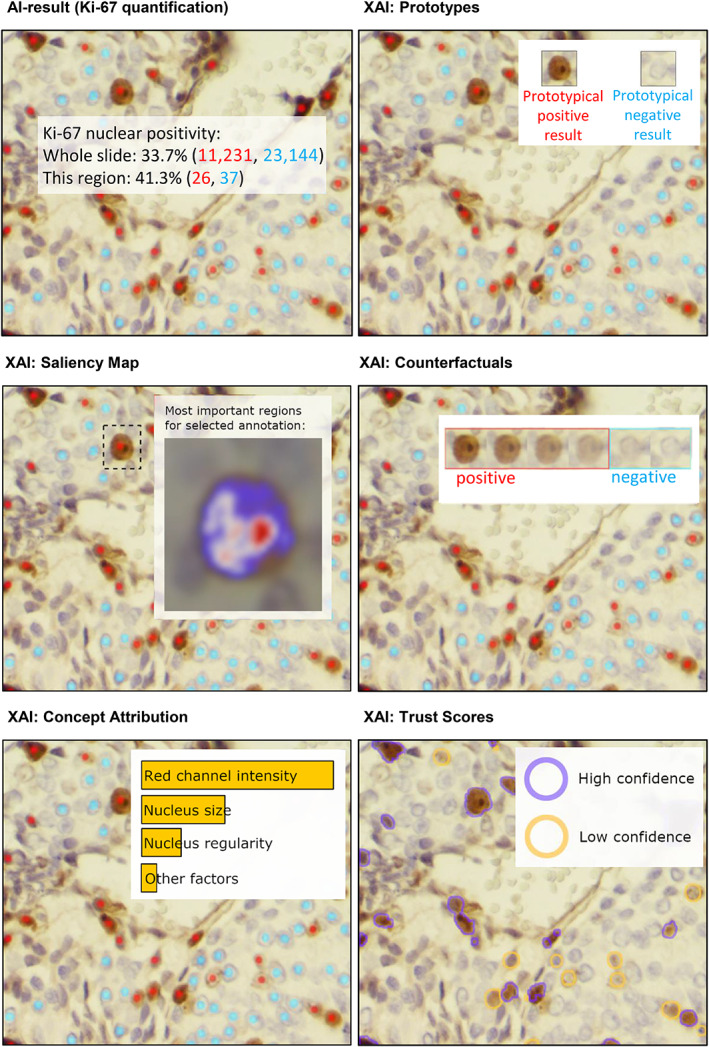

Figure 1.

Popular presentation modalities of XAI methods are prototypes (show representative input examples for the predicted classes), counterfactuals (show how minimal changes in the input would lead to a contrastive output), trust scores (highlight areas where the ML model's uncertainty is high), saliency maps (visualise the estimated relevance of input pixels to the ML model's output) and concept attributions (depict the estimated relevance of human‐defined concepts to the ML model's prediction). Here, these presentation modalities are exemplified using AI results for Ki‐67 quantification (graphic adapted from [29]).