Abstract

Introduction:

As the population ages, the prevalence of cognitive impairment is increasing. Given the recent pandemic, there is a need for remote testing modalities to assess cognitive deficits in individuals with neurological disorders. Self-administered, remote, tablet-based cognitive assessments would be clinically valuable if they can detect and classify cognitive deficits as effectively as traditional in-person neuropsychological testing.

Methods:

We tested whether the Miro application, a tablet-based neurocognitive platform, measured the same cognitive domains as traditional pencil-and-paper neuropsychological tests. Seventy-nine patients were recruited and then randomized to either undergo pencil-and-paper or tablet testing first. Twenty-nine age-matched healthy controls completed the tablet-based assessments. We identified Pearson correlations between Miro tablet-based modules and corresponding neuropsychological tests in patients and compared scores of patients with neurological disorders with those of healthy controls using t-tests.

Results:

Statistically significant Pearson correlations between the neuropsychological tests and their tablet equivalents were found for all domains, with moderate (r > 0.3) or strong (r > 0.7) correlations in 16 of 17 tests (p < 0.05). All tablet-based subtests differentiated healthy controls from neurologically impaired patients by t-tests except for the Spatial Span Forward and Finger Tapping modules. Participants reported enjoyment of the tablet-based testing, denied that it provoked anxiety, and noted no preference between modalities.

Conclusions:

This tablet-based application was found to be widely acceptable to participants. This study supports the validity of these tablet-based assessments in the differentiation of healthy controls from patients with neurocognitive deficits in a variety of cognitive domains and across multiple neurological disease etiologies.

Introduction

Clinical management of neurological disorders affecting cognition depends on reliable diagnostic tools for identifying impairments and aiding in early, accurate disease detection. Early detection of certain neurological conditions such as mild cognitive impairment (MCI) and dementia is difficult because of the insidious nature of cognitive impairment [1]. Early detection can improve patient outcomes by increasing patient medication adherence and cognitive functioning [2,3]. Traditional neuropsychological tests, such as the Wechsler Adult Intelligence Scale-Fourth Edition (WAIS-IV) [4], the Boston Naming Test [5], Verbal Learning Tests [6], and the Trail Making Test [7], remain the gold standard for assessment of cognitive functioning, supported by biomarker, neuroimaging, and genetic diagnostics [8]. While comprehensive neuropsychological batteries provide detailed cognitive profiles, they can be time-consuming, difficult for patients of some backgrounds to access [9–11], and subject to effects of patient fatigue or anxiety [12,13]. Brief cognitive screenings such as the Montreal Cognitive Assessment (MoCA) [14] and Mini-Mental State Examination (MMSE) [15] lack the sensitivity to capture subtle cognitive deficits and clinically relevant information [16]. Tablet-based or computer-based programs, including MicroCog [17], CogState [18,19], the Cognitive Assessment for Dementia, iPad version (CADi2) [20], Computerized Cognitive Screening (CCS) [21], and the NIH Toolbox-Cognition Battery [22], have been promising assessment tools, but they have been used largely in neurodegenerative populations and have not been validated in other neurological conditions. Furthermore, these measures are not self-administered; they require the participant to perform testing under the supervision of a care provider in a healthcare setting [23].

While instruments like the NIH Toolbox align with the aesthetic of traditional testing, other computerized assessments are gamified, leveraging behavioral strategies inherent in gamification, like goal setting, reinforcement, feedback, connectivity, and playfulness [24]. Many gamified assessments, including Wii Tests [25], Shapebuilder [26], The Great Brain Experiment [27], BAM-COG [28], and Tap the Hedgehog [29], have been successfully validated with moderate correlation to traditional testing. However, these gamified tasks vary in how closely they align with the tasks in traditional testing. Additionally, many of the gamified tools focus on one specific cognitive domain of interest (e.g., executive function, working memory, attention) and neither cover multiple cognitive domains nor provide a comprehensive assessment of global cognition.

The current global pandemic highlights the need for remote behavioral testing options. Self-administered, remote, tablet-based cognitive assessments would be efficient, inexpensive, and clinically valuable if they are shown to detect, classify, and track cognitive deficits as effectively as traditional in-person neuropsychological testing while maintaining social distancing and permitting individuals to access services even in remote areas. Technology-based assessments have the potential to capture behaviors that were previously observed but not quantified, such as voice analysis, eye movement trajectories, kinematics of movement, and to use machine learning strategies to process larger quantities of data.

We aimed to evaluate the feasibility and effectiveness of Miro, a novel tablet-based neurocognitive mobile application with gamified activities, developed by The Cognitive Healthcare Company, featuring tablet modules designed to assess multiple cognitive domains typically assessed in traditional neuropsychological testing (Table 1). We compared performance on Miro’s tablet-based cognitive games to performance on traditional pencil-and-paper test equivalents. We hypothesized that tablet-based games would: (1) provide reliable, objective scoring that differentiates people with neurodegenerative disease and stroke from healthy controls; (2) offer ease of use and enjoyment for patients using game-like activities; and (3) correlate with corresponding traditional neuropsychological pencil-and-paper tests.

Table 1.

Cognitive domains tested in traditional testing and tablet testing.

| TRADITIONAL TESTING | TABLET MODULE | COGNITIVE DOMAINS ASSESSED |

|---|---|---|

| Trails A and B | Bolt Bot | Visuospatial attention and executive function |

| Design Fluency | Chart a Course | Design generativity, flexibility, working memory and fine motor function |

| Picture Description | Speak the Scene | Language, speech and voice |

| Spatial Span | Follow the Glow | Visuospatial memory |

| Finger Tapping | Take Flight | Self-directed motor speed and consistency |

| Digits Backward and Forward | Hungry Bees | Basic auditory attention, auditory memory span and working memory |

| Verbal Adaptive Learning Test | Spy Games | Verbal learning and memory |

| Verbal Fluency | Lucky Letters | Verbal generativity, flexibility and working memory |

| Category Fluency | Categories | Generativity; flexibility and working memory |

| Simple Reaction Time | Monster Mash | Simple reaction time |

| Picture Naming | Wordy Goat | Word retrieval |

| Symbol Digit Coding | Treasure Tomb | Processing Speed |

| Extra-ocular Eye Movement Exam | Bosco | Psychomotor speed, saccades, anti-saccades |

Methods

Study Design

This study was approved by the Johns Hopkins University Institutional Review Board. A convenience sample was taken from patients attending a neurology clinical visit at the Stroke and Cognitive Disorders Clinic. Seventy-nine patients were recruited. Participants were evaluated and diagnosed with cognitive impairment based on clinical assessment, neuroimaging evaluation, and neuropsychological testing prior to study enrollment. Diagnosis was obtained from clinical chart review and based on accepted diagnostic criteria for Alzheimer’s disease [30], MCI [31], Primary Progressive Aphasia (PPA) [32], Corticobasal Degeneration (CBD) [33], and Progressive Supranuclear Palsy (PSP) [34]. Healthy controls were recruited through advertisement.

Screening measures included demographics, medical history (self-reported), the Telephone Interview for Cognitive Status (TICS), the Geriatric Depression Scale, and the Mini-Mental State Examination (MMSE). Written informed consent was obtained from each participant. Inclusion and exclusion criteria are listed in Table 2. Participants were randomized to undergo either pencil-and-paper testing first and tablet testing second or the reverse order; all participants underwent both testing modalities during the study visit. A trained neuropsychometrician performed the pencil-and-paper testing with the participant. The neuropsychometrician also supervised, but did not guide, the participant in completion of the tablet-based testing.

Table 2.

Inclusion and exclusion criteria of this study.

| Inclusion Criteria | • Referral to Neurology for assessment of cognitive function (for suspicion of Mild Cognitive Impairment, Alzheimer’s Disease, frontotemporal lobar degeneration spectrum, Lewy Body Dementia, or stroke) • Able to give informed consent. • Premorbid proficiency in English (by self-report). • Age 21 or older. |

| Exclusion Criteria | • Prior history of neurological disease affecting the brain other than Alzheimer’s disease, Frontotemporal Lobar Degeneration or Dementia with Lewy bodies, or stroke (e.g., brain tumor, multiple sclerosis, traumatic brain injury) • Known uncorrected hearing loss • Known uncorrected vision loss • Prior history of severe psychiatric illness, developmental disorders, or intellectual disability (e.g., schizophrenia, autism spectrum disorders). |

This study examines a limited set of Miro tablet assessments that are based on versions of 13 common tasks from the domains of neuropsychology and cognitive psychology, summarized in Table 1. These tablet modules were designed as games to reduce the anxiety, fatigue, and boredom commonly associated with traditional neuropsychological testing and to increase participant motivation to complete the tasks, while still assessing performance across the domains of learning and memory, visual attention, cognitive flexibility, speed of processing, auditory attention and working memory, speech and language, and inhibition. Brief descriptions of the activities performed during the tablet modules are reported in Table 3.

Table 3.

Brief descriptions of Miro tablet modules.

| TABLET MODULE | DESCRIPTION |

|---|---|

| Bolt Bot | A: The participant taps randomly arranged bolts, labeled 1-16 in ascending order, as fast as possible. B: The participant alternates tapping between the numbers 1-16 and the letters A-P, in ascending order, as fast as possible. |

| Chart a Course | A: Using the touch screen, the participant draws as many unique routes as possible between five targets in 90 seconds. If participants repeat a route or do not finish a route, they are prompted. Penalties (not shown) are incurred with each repeat, incomplete or incorrect route. B: Using the touch screen, the participant draws as many unique routes as possible alternating between two different icons. If participants repeat a route or do not finish a route, they are prompted. Penalties (not shown) are incurred with each repeat, incomplete or incorrect route. |

| Speak the Scene | The participant is prompted to describe the illustrated scene in complete sentences, using as much detail as possible. |

| Follow the Glow | The participant watches the screen as individual lily pads light up one-by-one out of a cluster of lily pads. The number of lily pads that illuminate in succession increases on each subsequent trial. Once the lily pads dim for each trial, the participant must touch the lily pads that illuminated in the same order that they illuminated. |

| Take Flight | The participant secures their middle finger of each hand to the touch screen to isolate index finger movement. A visual description of finger position is shown. The participant then taps their index finger as fast as possible over three trials per hand for up to 15 seconds. |

| Hungry Bees | A (forward): The participant is prompted to watch a sequence of random numbers, then taps the sequence on a dial pad. If correct, the participant advances to the next level. Trial length increases linearly. The activity concludes after two incorrect answers per level. Numbers are presented in a controlled random order. B (backward): The participant is prompted to watch a sequence of random numbers, then taps the sequence on a dial pad in reverse order. If correct, the participant advances to the next level. Trial length increases linearly. The activity concludes after two incorrect answers per level. Numbers are presented in a controlled random order. |

| Spy Games | The participant hears a list of words, then repeats the words in any order. The assessment includes multiple levels that increase in complexity. Each successive level includes the previous level’s words, plus additional words. In level 1, the activity concludes after 4 incorrect trials. In all other levels, the activity concludes after 3 incorrect trials. |

| Lucky Letters | The participant views a letter that appears on the screen. The participant is prompted to say aloud as many words as possible that begin with that letter within a one-minute timeframe. As explained in the instructions, proper nouns are not accepted. |

| Categories | The participants are prompted to verbalize as many words as possible that belong to a visually presented and described category. Time is limited to 60 seconds. |

| Monster Mash | Targets appear on the touch screen at sequenced intervals. The participant taps each target as quickly as possible. The participant reaction time and correct score are displayed. |

| Wordy Goat | Images of miscellaneous objects appear on the screen one-by-one. The participant names each object aloud. Unnamed or incorrectly named objects are counted as errors (not shown). |

| Treasure Tomb | A number is displayed (1-9). The participant finds the symbol that corresponds to a number in a separate number-symbol key. The participant then locates the symbol in a group of symbols and taps the corresponding symbol. The symbols and number-symbol pairings change after each trial. The trial length increases linearly. |

| Bosco | A: The participant is shown a target image. The participant taps the corresponding Target Button. When a distractor image appears, the participant taps the corresponding Non-target Button. B: The Target Button and Non-target Buttons switch locations on the screen. The instructions to the participant remain the same: The participant is shown a target image. The participant taps the corresponding Target Button. When a distractor image appears, the participant taps the corresponding Non-target Button. |
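The stopping rule described for Hungry Bees in Table 3 (advance after a correct answer, lengthen the sequence at each level, stop after two incorrect answers at a level) can be sketched as follows. This is a hypothetical illustration and not Miro's implementation: the function name `run_span_task`, the starting length of 2, the maximum length of 9, the one-item increase per level, the 0-9 digit range, the use of the longest correct length as the score, and plain uniform sampling in place of the "controlled random order" are all assumptions made for the example.

```python
import random


def run_span_task(respond, start_len=2, max_len=9, max_errors=2,
                  reverse=False, rng=None):
    """Simulate a digit-span style task and return the longest length passed.

    respond(sequence) is a callable standing in for the participant; it
    receives the presented digits and returns the digits that were tapped.
    All default parameters are assumptions, not values from the study.
    """
    rng = rng or random.Random()
    best = 0
    length = start_len
    while length <= max_len:
        errors = 0
        passed = False
        # Allow up to max_errors incorrect answers at this level.
        while errors < max_errors:
            sequence = [rng.randint(0, 9) for _ in range(length)]
            target = sequence[::-1] if reverse else sequence
            if respond(sequence) == target:
                passed = True
                break
            errors += 1
        if not passed:
            break  # the activity concludes at this level
        best = length
        length += 1  # the next level presents a longer sequence
    return best


# Hypothetical participants: one never errs, one fails beyond five items.
perfect = lambda seq: list(seq)
limited = lambda seq: list(seq) if len(seq) <= 5 else []

span_perfect = run_span_task(perfect)
span_limited = run_span_task(limited)
```

With these hypothetical responders, the first reaches the maximum length and the second stops at five items, which mirrors how a span score separates participants with different capacities.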

The study also included 29 age-matched healthy controls with the same inclusion and exclusion criteria as patients but without a neurological diagnosis.

Data analysis

We used t-tests to evaluate the effectiveness of each tablet game in distinguishing cognitively impaired subjects from cognitively intact, age-matched subjects. We determined Pearson correlations between the tablet and pencil-and-paper scores for all cognitively impaired subjects and for each disease group. STATA version 14 was used for statistical analyses, and a p-value of <0.05 was considered significant.
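As an illustration of the two analyses described above, the following minimal Python sketch computes a Pearson correlation coefficient and a two-sample t statistic using only the standard library. It is not the study's analysis code (the analyses were run in STATA). The function names and the toy scores are hypothetical, and the use of Welch's unequal-variance form is an assumption, since the t-test variant is not specified here.

```python
import math
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation between paired scores x and y."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def welch_t(a, b):
    """Welch's t statistic and degrees of freedom for two independent groups."""
    va = stdev(a) ** 2 / len(a)  # squared standard error of group a
    vb = stdev(b) ** 2 / len(b)  # squared standard error of group b
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df


# Toy data only: paired pencil-and-paper and tablet scores for five
# hypothetical patients, plus tablet scores for five hypothetical controls.
paper_scores = [4, 5, 6, 7, 8]
tablet_scores = [3, 5, 5, 8, 9]
control_tablet_scores = [7, 8, 9, 9, 10]

r = pearson_r(paper_scores, tablet_scores)
t, df = welch_t(tablet_scores, control_tablet_scores)
print(f"r = {r:.2f}, t = {t:.2f}, df = {df:.1f}")
```

Converting the t statistic to a p-value requires the t distribution, which a statistics package such as STATA provides; the sketch stops at the statistic and degrees of freedom to stay dependency-free.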

Data Availability

Anonymized data will be shared with any qualified investigator upon request.

Results

Seventy-nine subjects with cognitive impairments were assessed during their first study encounter. Mean age was 62.9 years old (SD 11.8); 62% of participants were 60 to 79 years old. Men accounted for 61% of the subjects; 70% of subjects had at least college education (Table 4). All participants completed both pencil-and-paper and tablet assessments. Our cohort included cognitively impaired subjects with right and left hemispheric strokes, MCI, various neurodegenerative disorders such as Alzheimer’s dementia, Parkinsonism related cognitive impairment, or Primary Progressive Aphasia (PPA).

Table 4.

Baseline demographics of enrolled patients.

| Characteristic | Patient Participants (n=79), % | n |

|---|---|---|

| Age (years) | Mean Age (SD): 62.9 (11.8) | |

| 20-39 | 3.8% | 3 |

| 40-59 | 29.1% | 23 |

| 60-79 | 62.0% | 49 |

| Older than 80 | 5.1% | 4 |

| Education level | ||

| Some high school | 3.8% | 3 |

| High school diploma | 17.7% | 14 |

| College degree | 40.5% | 32 |

| Advanced degree | 31.6% | 25 |

| Not reported | 6.3% | 5 |

| Sex | ||

| Female | 39% | 31 |

| Male | 61% | 48 |

| Handedness | ||

| Right | 88.6% | 70 |

| Left | 10.1% | 8 |

| Not Reported | 1.2% | 1 |

| Diagnosis | ||

| Left hemisphere stroke | 20.3% | 16 |

| Right hemisphere stroke | 3.8% | 3 |

| Primary progressive aphasia | 12.7% | 10 |

| Mild cognitive impairment | 35.4% | 28 |

| Alzheimer’s Dementia | 8.9% | 7 |

| Parkinsonian Disorder* | 16.5% | 13 |

| Unclassified cognitive impairment | 2.5% | 2 |

*Includes Parkinson’s Disease, Progressive Supranuclear Palsy, and Corticobasal Syndrome.

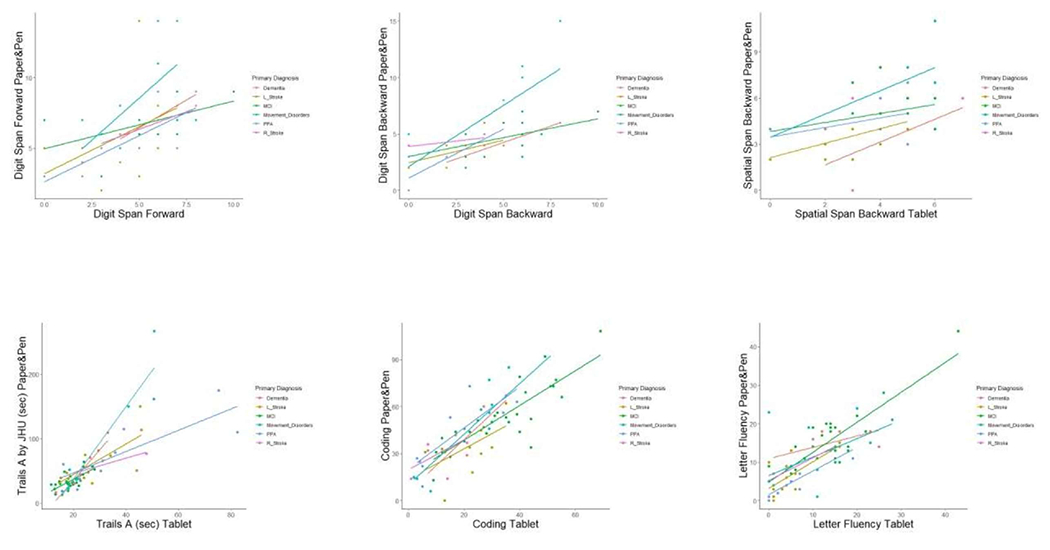

Pearson correlation coefficients between neuropsychological tests and tablet modules for neurologically impaired individuals, with corresponding p-values, are shown in Table 5. Statistically significant Pearson correlations were noted for all tests except Finger Tapping of the Right Hand (Table 5). Subgroups per diagnosis showed similar correlations, but they were underpowered to show statistical significance (p > 0.05; shown in Fig. 1). All subtests of the tablet-based application differentiated healthy controls from patients by t-tests except for the Spatial Span Forward and Finger Tapping modules (Table 6). Average tablet module scores and pencil-and-paper subtest scores are shown in S1 and S2 Tables, respectively.

Table 5.

Pearson correlation coefficients between neuropsychological tests and tablet modules in neurologically impaired participants.

| Neuropsychological Test | Tablet Module | Pearson r | p-value |

|---|---|---|---|

| Digit Span Forward | Hungry Bees | 0.52 | <0.001 |

| Digit Span Backward | Hungry Bees | 0.61 | <0.001 |

| Spatial Span Forward | Follow the Glow | 0.32 | 0.018 |

| Spatial Span Backward | Follow the Glow | 0.56 | <0.001 |

| Letter Fluency | Lucky Letters | 0.81 | <0.001 |

| Category Fluency | Categories | 0.75 | <0.001 |

| Coding | Treasure Tomb | 0.82 | <0.001 |

| Design Fluency | Chart a Course | 0.44 | <0.001 |

| Trails A | Bolt Bot | 0.82 | <0.001 |

| Trails B | Bolt Bot | 0.55 | <0.001 |

| Picture Naming | Wordy Goat | 0.52 | 0.015 |

| Finger Tapping: Right Hand | Take Flight | 0.25 | 0.172 |

| Finger Tapping: Left Hand | Take Flight | 0.35 | 0.044 |

| Rey Auditory Verbal Learning Test: Immediate Recall | Spy Games | 0.40 | 0.047 |

| Hopkins Verbal Learning Test: Immediate Recall | Spy Games | 0.51 | 0.004 |

| Rey Auditory Verbal Learning Test: Delayed Recall | Spy Report | 0.87 | 0.001 |

| Hopkins Verbal Learning Test: Delayed Recall | Spy Report | 0.64 | 0.008 |
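The summary counts reported for these correlations can be checked directly from the rows of Table 5. The short Python sketch below applies the thresholds stated in the abstract (moderate: r > 0.3; strong: r > 0.7) and the significance level of p < 0.05. The variable and function names are hypothetical, and entries reported as "<0.001" are stored as their upper bound of 0.001 for the purpose of the comparison.

```python
from collections import Counter

# (neuropsychological test, Pearson r, p-value) transcribed from Table 5.
# p-values reported as "<0.001" are entered as the upper bound 0.001.
TABLE5_ROWS = [
    ("Digit Span Forward", 0.52, 0.001),
    ("Digit Span Backward", 0.61, 0.001),
    ("Spatial Span Forward", 0.32, 0.018),
    ("Spatial Span Backward", 0.56, 0.001),
    ("Letter Fluency", 0.81, 0.001),
    ("Category Fluency", 0.75, 0.001),
    ("Coding", 0.82, 0.001),
    ("Design Fluency", 0.44, 0.001),
    ("Trails A", 0.82, 0.001),
    ("Trails B", 0.55, 0.001),
    ("Picture Naming", 0.52, 0.015),
    ("Finger Tapping: Right Hand", 0.25, 0.172),
    ("Finger Tapping: Left Hand", 0.35, 0.044),
    ("RAVLT: Immediate Recall", 0.40, 0.047),
    ("HVLT: Immediate Recall", 0.51, 0.004),
    ("RAVLT: Delayed Recall", 0.87, 0.001),
    ("HVLT: Delayed Recall", 0.64, 0.008),
]


def strength(r):
    """Label a correlation using the thresholds stated in the abstract."""
    if r > 0.7:
        return "strong"
    if r > 0.3:
        return "moderate"
    return "below moderate"


counts = Counter(strength(r) for _, r, _ in TABLE5_ROWS)
n_moderate_or_strong = counts["moderate"] + counts["strong"]
n_significant = sum(p < 0.05 for _, _, p in TABLE5_ROWS)

print(dict(counts))
print(n_moderate_or_strong, "of", len(TABLE5_ROWS), "moderate or strong")
print(n_significant, "of", len(TABLE5_ROWS), "significant at p < 0.05")
```

Under these thresholds, the only row that is neither moderate nor strong, and the only one that is not significant, is Finger Tapping: Right Hand, consistent with the 16 of 17 figure reported in the text.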

Fig. 1. Pencil-and-Paper Subtest Scores by Corresponding Tablet Module Scores by Diagnosis.

Paper&Pen = Pencil-and-Paper based subtest. L_Stroke = Left hemispheric stroke; MCI = Mild Cognitive Impairment; PPA = Primary Progressive Aphasia; R_Stroke = Right hemispheric stroke.

Table 6.

Mean (SD) for each tablet module and t-test comparing Patient Group with Healthy Control Group.

| Tablet Module | Description | Patient Group Mean(SD) | Healthy Control Group Mean(SD) | p-value |

|---|---|---|---|---|

| Hungry Bees | Digit Span Forward | 5.23(2.02) | 6.32(1.25) | 0.009 |

| Hungry Bees | Digit Span Backward | 3.69(2.25) | 4.89(1.50) | 0.011 |

| Follow the Glow | Spatial Span Forward | 4.83(1.52) | 5.56(0.88) | 0.083 |

| Follow the Glow | Spatial Span Backward | 4.11(1.53) | 5.67(1.80) | 0.004 |

| Lucky Letters | Letter Fluency | 12.31(8.72) | 16.79(4.31) | 0.006 |

| Categories | Category Fluency | 9.70(7.76) | 14.89(5.49) | 0.001 |

| Bolt Bot | Trails A | 28.17(18.91) | 7.60(2.18) | <0.001 |

| Bolt Bot | Trails B | 100.25(23.81) | 35.33(8.73) | <0.001 |

| Treasure Tomb | Coding | 23.60(15.11) | 36.52(11.94) | <0.001 |

| Chart a Course | Design Fluency | 10.49(4.58) | 13.38(4.07) | 0.004 |

| Take Flight | Tapping: Right Hand | 61.44(24.38) | 67.44(13.29) | 0.213 |

| Take Flight | Tapping: Left Hand | 60.36(23.28) | 63.55(12.64) | 0.486 |

| Spy Games | Verbal Learning: Immediate Recall | 5.82(4.67) | 14.52(12.50) | <0.001 |

| Spy Games | Verbal Learning: Delayed Recall | 9.93(10.14) | 21.25(8.42) | <0.001 |

Forty-eight patients participated in a post-assessment survey (Table 7). Patients reported ease of use with the tablet-based assessment. On a Likert scale (1 = strongly agree, 4 = neither agree nor disagree, 7 = strongly disagree), most patients reported agreement with the statement “I enjoyed the tablet games” (mean 2.5, SD 1.79) and disagreement with the statement “Tablet games made me anxious” (mean 5.5, SD 1.78). Regarding modality preference, 48% of participants preferred the tablet, 18.8% preferred the pencil-and-paper tests, and 33% had no preference. There were no statistical differences across diagnostic groups (Fisher’s exact test: p = 0.763). Age correlated positively with response scores (higher scores = lower agreement) for the statements “I enjoyed the tablet games” (r = 0.32; p = 0.02) and “I prefer tablet games compared to pencil-and-paper testing” (r = 0.33; p = 0.02); that is, the older the patient, the less they enjoyed or preferred the tablet games. Most diagnostic groups reported enjoyment of the tablet modules and did not feel that they induced anxiety. The PPA and right hemispheric stroke groups demonstrated a preference for pencil-and-paper testing (5.0 and 4.0, respectively), Parkinsonian Disorder participants noted a preference for tablet-based testing (1.9), and the other diagnostic groups were neutral (3.0, 3.2, 3.8). There were no differences between sexes on any of the scales.

Table 7.

Survey Response Mean (SD) Per Diagnosis.

| Statement | Left Stroke N=9 | Right Stroke N=3 | PPA N=5 | MCI N=19 | Alzheimer’s Dementia N=5 | Parkinsonian Disorder N=9 | Overall Mean (SD) N=48 |

|---|---|---|---|---|---|---|---|

| I enjoyed the tablet games. | 3.7(2.1) | 2.0(1.7) | 2.4(1.5) | 2.15(1.6) | 2.5(1.7) | 2.3(1.8) | 2.5(1.8) |

| The tablet games made me anxious. | 4.6(2.0) | 7.0(0) | 5.4(2.3) | 5.5(1.7) | 6(1.4) | 5.9(1.6) | 5.5(1.78) |

| I prefer tablet games compared to pencil-and-paper testing. | 3.8(2.2) | 4.0(3.0) | 5.0(1.6) | 3.2(1.8) | 3.0(1.4) | 1.9(1.4) | 3.3(1.96) |

Participants responded to the above statements on a scale from 1 to 7 reflecting their agreement/disagreement with the statement (e.g., 1 = strongly agree, 4 = neither agree nor disagree, 7 = strongly disagree).

PPA = Primary Progressive Aphasia; MCI = Mild Cognitive Impairment

Discussion

The growing population of individuals with cognitive impairment of vascular and non-vascular etiologies, the rising health care costs associated with in-person neuropsychological evaluations, and the need for social distancing in light of the recent global pandemic underscore the need for accurate, reliable, and remote neurocognitive assessment options. Results of neurocognitive testing are instrumental in developing appropriate and timely referrals for services (e.g., rehabilitative therapies, community or home health services) [35], avoiding preventable hospitalizations [36], and assessing an individual’s level of independence (e.g., medication management and cooking) [37]. In mild cognitive impairment, accurate data on cognitive performance over time can assist in determining the pathology, progression, and prognosis of the underlying neurological disease [38]. In stroke, capturing accurate data on cognitive-linguistic skills can assist in identifying, tracking, and managing new-onset behavioral changes [39].

Currently, there are few psychometrically-sound tablet-based instruments for neurocognitive evaluation that can be widely used across neurological conditions. In this study, we compare the performance profile of Miro’s mobile assessments with traditional in-person neuropsychological assessments. The present study supports the validity of these tablet-based assessments in the differentiation of healthy controls from patients with neurocognitive deficits in a variety of cognitive domains and across multiple neurological disease etiologies.

Analysis of correlation of tablet-based testing modules with their neuropsychological testing counterparts revealed moderate to strong correlation for 16 out of 17 modules. Finger tapping of the Left Hand and Spatial Span Forward had Pearson correlations that were relatively low, but statistically significant.

All subtests of the tablet-based application differentiated healthy controls from neurologically impaired individuals by t-tests except for the Spatial Span Forward and Left and Right Finger Tapping modules (Table 6). This may be because spatial span and other assessments of working memory can miss the early stages of neurodegenerative disease [40,41]. Furthermore, in this study, the non-tablet-based finger tapping test was measured by total number of finger taps and did not quantify rhythm or pauses, whereas tablet-based assessments like ‘Take Flight’ quantify both the number of taps and the characteristics of finger tapping. Differences in the physical requirements of the testing modalities may also account for differences in performance. Take Flight requires additional coordination between the thumb, middle, and index fingers and use of both hands during the module, unlike traditional finger tapping, which requires only the index finger of one hand. The tablet-based module also has a motivating task (allowing the participant to “catch” a bird and prevent it from falling if fast enough) that may be more engaging than traditional finger tapping.

Despite the concern that older adults would find tablet-based testing inaccessible or challenging, participants found the assessments to be widely acceptable for self-administration across a broad age range, from their 20s to their 80s. Participants reported no preference between testing modalities and endorsed enjoyment of the tablet games, which they did not find anxiety-provoking. To further accommodate older participants who may also have vision, hearing, or language impairments, future iterations of these modules should consider adaptations such as multimedia options for task instructions or additional practice trials.

Gamification is a rapidly expanding area with important opportunities for clinical research in cognition: lack of participant motivation has a negative impact on data quality [42], and, conversely, gamification can improve motivation while still maintaining a scientifically valid task [38]. Though gamified tasks promote participant engagement and motivation, gamification inherently involves additional stimuli for the participant and an increased burden of visuospatial processing [39]. Several gamified tablet-based or computer-based assessments exist for neuropsychological evaluation, but no tool has yet emerged that provides a comprehensive assessment battery correlated with neuropsychological evaluation by individual test.

Although the findings provide some indication of the potential of this type of tablet-based assessment in clinical practice, our study is subject to several limitations that can be explored with further research. First, our sample size was relatively small, especially when attempting to evaluate trends by diagnostic group. Because prior validation studies of other computerized cognitive assessments have been limited by the specific patient population tested, we wished to recruit participants with cognitive impairment agnostic of etiology to demonstrate tolerability and validity across multiple neuropathologies. Second, because participants were grouped into broad diagnostic groups, there was a degree of heterogeneity, such that patients within each diagnostic group had varying degrees of cognitive deficits (e.g., patients within the left hemispheric stroke group had different degrees of cognitive-linguistic impairment). Third, our study reflects a cross-sectional view of participant performance. In patients with neurodegenerative conditions and evolving cognitive status, it will be important to include longitudinal analyses as well, which is a planned next stage of this study.

Self-administered, tablet-based assessments could standardize the evaluation and scoring processes, increase accessibility, and support remote, longitudinal tracking of patient status over time. Remote evaluation tools have become essential in both clinical care and research environments. As such, tablet-based tools could be valuable for clinicians and researchers aiming to characterize evolving cognitive deficits in neurological conditions.

Supplementary Material

Funding Sources

Shenly Glenn receives financial support from Miro. The authors are supported by NIH: NIDCD through grants R01 DC05375 (AEH, AEW, CS), R01 DC015466 (AEH, SS, KK), R01 DC011317 (AEH), and P50 DC014664 (AEH, RF), and NINDS through R25 NS065729 (AEH). Kelly Sloane and Zafer Keser report no disclosures.

Footnotes

Statement of Ethics

This study protocol was reviewed and approved for the use of human subjects by the Johns Hopkins University Institutional Review Board (approval number IRB00088299). Written informed consent was obtained from participants (or their legally appointed representative) to participate in the study.

Conflict of Interest Statement

Shenly Glenn is the CEO of Miro.

Data Availability Statement

Data will be made available upon reasonable request to the corresponding author.

References

- 1.Lyons BE, Austin D, Seelye A, Petersen J, Yeargers J, Riley T, et al. Pervasive computing technologies to continuously assess Alzheimer’s disease progression and intervention efficacy. Front Aging Neurosci. 2015;7(JUN):1–14. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Laske C, Sohrabi HR, Frost SM, López-de-Ipiña K, Garrard P, Buscema M, et al. Innovative diagnostic tools for early detection of Alzheimer’s disease. Alzheimers Dement. 2015. May;11(5):561–78. [DOI] [PubMed] [Google Scholar]

- 3.Rodakowski J, Saghafi ES, Butters MA, Skidmore ER. Nonpharmacological Interventions in Adults with MCI and Early dementia. Mol Asp Med. 2015;(412):38–53. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Wechsler D Wechsler Adult Intelligence Scale-Fourth Edition: Administration and scoring manual. 2008. [Google Scholar]

- 5.Goodglass H, Kaplan E. The assessment of aphasia and related disorders. Lea & Febiger; 1972. [Google Scholar]

- 6.Benedict RHB, Schretlen D, Groninger L, Brandt J. Hopkins Verbal Learning Test – Revised: Normative Data and Analysis of Inter-Form and Test-Retest Reliability. Clin Neuropsychol [Internet], 1998. Feb 1;12(1):43–55. Available from: 10.1076/clin.12.1.43.1726 [DOI] [Google Scholar]

- 7.Tombaugh TN. Trail Making Test A and B: Normative data stratified by age and education. Arch Clin Neuropsychol. 2004;19(2):203–14. [DOI] [PubMed] [Google Scholar]

- 8.Sperling RA, Aisen PS, Beckett LA, Bennet DA, Craft S. Toward defining the preclinical stages of alzheimer’s disease: Recommendations from the national institute on aging. Alzheimer’s Dement. 2011;7(3):280–92. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Chin A, Negash S, Hamilton RH. Diversity and Disparity in Dementia: The Impact of Ethnoracial Differences in Alzheimer’s Disease. Alzheimer Dis Assoc Disord [Internet]. 2011;25(3):187–95. Available from: doi: 10.1097/WAD.0b013e318211c6c9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10. Teel CS. Rural practitioners’ experiences in dementia diagnosis and treatment. Aging Ment Health. 2004 Sep 1;8(5):422–9. doi: 10.1080/13607860410001725018

- 11. Barth J, Nickel F, Kolominsky-Rabas PL. Diagnosis of cognitive decline and dementia in rural areas: A scoping review. Int J Geriatr Psychiatry. 2018;33(3):459–74.

- 12. McGuire C, Crawford S, Evans JJ. Effort Testing in Dementia Assessment: A Systematic Review. Arch Clin Neuropsychol. 2019;34(1):114–31.

- 13. Steele CM. A Threat in the Air: How Stereotypes Shape Intellectual Identity and Performance. Am Psychol. 1997;52(6):613–29.

- 14. Nasreddine ZS, Phillips NA, Bédirian V, Charbonneau S, Whitehead V, Collin I, et al. The Montreal Cognitive Assessment, MoCA: A brief screening tool for mild cognitive impairment. J Am Geriatr Soc. 2005;53(4):695–9.

- 15. Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”. A practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12(3):189–98.

- 16. Tombaugh TN, McIntyre NJ. The Mini-Mental State Examination: A Comprehensive Review. J Am Geriatr Soc. 1992 Sep 1;40(9):922–35. doi: 10.1111/j.1532-5415.1992.tb01992.x

- 17. Elwood RW. MicroCog: Assessment of cognitive functioning. Neuropsychol Rev. 2001;11(2):89–100.

- 18. Mielke MM, Machulda MM, Hagen CE, Edwards KK, Roberts RO, Pankratz VS, et al. Performance of the CogState computerized battery in the Mayo Clinic Study on Aging. Alzheimers Dement. 2015;11(11):1367–76. doi: 10.1016/j.jalz.2015.01.008

- 19. de Jager CA, Schrijnemaekers ACMC, Honey TEM, Budge MM. Detection of MCI in the clinic: Evaluation of the sensitivity and specificity of a computerised test battery, the Hopkins Verbal Learning Test and the MMSE. Age Ageing. 2009;38(4):455–60.

- 20. Onoda K, Yamaguchi S. Revision of the cognitive assessment for dementia, iPad version (CADi2). PLoS One. 2014;9(10).

- 21. Scanlon L, O’Shea E, O’Caoimh R, Timmons S. Usability and Validity of a Battery of Computerised Cognitive Screening Tests for Detecting Cognitive Impairment. Gerontology. 2016;62(2):247–52.

- 22. Heaton RK, Akshoomoff N, Tulsky D, Mungas D, Weintraub S, Dikmen S, et al. Reliability and validity of composite scores from the NIH toolbox cognition battery in adults. J Int Neuropsychol Soc. 2014;20(6):588–98.

- 23. Casaletto KB, Umlauf A, Beaumont J, Gershon R, Slotkin J, Akshoomoff N, et al. Demographically Corrected Normative Standards for the English Version of the NIH Toolbox Cognition Battery. J Int Neuropsychol Soc. 2015;21(5):378–91.

- 24. Cugelman B. Gamification: What it is and why it matters to digital health behavior change developers. JMIR Serious Games. 2013;1(1):1–6.

- 25. Gamberini L, Cardullo S, Seraglia B, Bordin A. Neuropsychological testing through a Nintendo Wii console. Stud Health Technol Inform. 2010;154:29–33.

- 26. Atkins SM, Sprenger AM, Colflesh GJH, Briner TL, Buchanan JB, Chavis SE, et al. Measuring working memory is all fun and games. Exp Psychol. 2014;61(6):417–38.

- 27. McNab F, Dolan RJ. Dissociating distractor-filtering at encoding and during maintenance. J Exp Psychol Hum Percept Perform. 2014;40(3):960–7.

- 28. Aalbers T, Baars MAE, Rikkert MGMO, Kessels RPC. Puzzling with online games (BAM-COG): Reliability, validity, and feasibility of an online self-monitor for cognitive performance in aging adults. J Med Internet Res. 2013;15(12):1–12.

- 29. Verhaegh J, Fontijn WFJ, Aarts EHL, Resing WCM. In-game assessment and training of nonverbal cognitive skills using TagTiles. Pers Ubiquitous Comput. 2013;17(8):1637–46.

- 30. McKhann GM, Knopman DS, Chertkow H, Hyman BT, Jack CRJ, Kawas CH, et al. The diagnosis of dementia due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement. 2011 May;7(3):263–9.

- 31. Albert MS, DeKosky ST, Dickson D, Dubois B, Feldman HH, Fox NC, et al. The diagnosis of mild cognitive impairment due to Alzheimer’s disease: recommendations from the National Institute on Aging-Alzheimer’s Association workgroups on diagnostic guidelines for Alzheimer’s disease. Alzheimers Dement. 2011 May;7(3):270–9.

- 32. Gorno-Tempini ML, Hillis AE, Weintraub S, Kertesz A, Mendez M, Cappa SF, et al. Classification of primary progressive aphasia and its variants. Neurology. 2011 Mar;76(11):1006–14.

- 33. Armstrong MJ, Litvan I, Lang AE, Bak TH, Bhatia KP, Borroni B, et al. Criteria for the diagnosis of corticobasal degeneration. Neurology. 2013;80:496–503.

- 34. Litvan I, Agid Y, Caine D, Campbell G, Dubois B, Duvoisin RC, et al. Clinical research criteria for the diagnosis of progressive supranuclear palsy (Steele-Richardson-Olszewski syndrome): report of the NINDS-SPSP international workshop. Neurology. 1996 Jul;47(1):1–9.

- 35. Ashford JW, Soo B, O’Hara R, Dashd P, Franke L, Philippe R, et al. Should older adults be screened for dementia? It is important to screen for evidence of dementia! Alzheimers Dement. 2007;3(2):75–80.

- 36. Anderson TS, Marcantonio ER, McCarthy EP, Herzig SJ. National Trends in Potentially Preventable Hospitalizations of Older Adults with Dementia. J Am Geriatr Soc. 2020 Jul.

- 37. Tang JY-M, Wong GH-Y, Ng CK-M, Kwok DT-S, Lee MN-Y, Dai DL-K, et al. Neuropsychological Profile and Dementia Symptom Recognition in Help-Seekers in a Community Early-Detection Program in Hong Kong. J Am Geriatr Soc. 2016 Mar;64(3):584–9.

- 38. Levy JA, Chelune GJ. Cognitive-behavioral profiles of neurodegenerative dementias: Beyond Alzheimer’s disease. J Geriatr Psychiatry Neurol. 2007;20(4):227–38.

- 39. Mijajlović MD, Pavlović A, Brainin M, Heiss WD, Quinn TJ, Ihle-Hansen HB, et al. Post-stroke dementia: a comprehensive review. BMC Med. 2017;15(1):1–12.

- 40. Kessels RPC, Overbeek A, Bouman Z. Assessment of verbal and visuospatial working memory in mild cognitive impairment and Alzheimer’s dementia. Dement Neuropsychol. 2015;9(3):301–5.

- 41. Wiechmann A, Hall JR, O’Bryant SE. The utility of the spatial span in a clinical geriatric population. Neuropsychol Dev Cogn Sect B, Aging, Neuropsychol Cogn. 2011 Jan;18(1):56–63.

- 42. DeRight J, Jorgensen RS. I just want my research credit: frequency of suboptimal effort in a non-clinical healthy undergraduate sample. Clin Neuropsychol. 2015;29(1):101–17.

- 43. Dörrenbächer S, Müller PM, Tröger J, Kray J. Dissociable effects of game elements on motivation and cognition in a task-switching training in middle childhood. Front Psychol. 2014;5.

- 44. Cowley B, Charles D, Black M, Hickey R. Toward an Understanding of Flow in Video Games. Comput Entertain. 2008 Jul;6(2). doi: 10.1145/1371216.1371223
