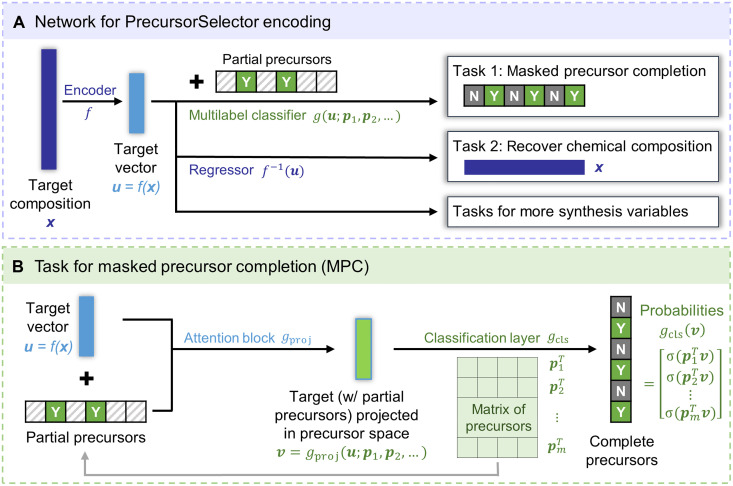

Fig. 3. Representation learning to encode precursor information for target materials.

(A) Multitask network structure that encodes the target material upstream and predicts the complete precursor set, the chemical composition, and additional synthesis variables downstream. x and u represent the composition and the encoded vector of the target material, respectively. p_i represents the i-th precursor in a predefined ordered precursor list. Each layer is a dense layer unless otherwise specified. (B) Submodel of multilabel classification for the MPC task. Some of the precursors are randomly masked; the remaining precursors (marked as “Y”) are used as a condition to predict the probabilities of the other precursors for the target material. The probabilities corresponding to the precursors in the complete set (marked as “Y”) are expected to be higher than those of the unused precursors (marked as “N”). The attention block g_proj (61) aggregates the target vector and the conditional precursors. The final classification layer g_cls and the embedding matrix for the conditional precursors share the same weights. σ represents the sigmoid function.
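
The forward pass described for panel (B) can be sketched in a few lines of NumPy. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the function names (`mpc_forward`, `bce_loss`), the dimensions, the 50% masking rate, and the random data are all hypothetical, and a single-head dot-product attention stands in for the attention block, whose actual form is defined in ref. 61 and not reproduced here. The one detail taken directly from the caption is that the classification layer reuses the precursor embedding matrix.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def mpc_forward(u, cond_idx, E, bias):
    """Predict a probability for every precursor in the ordered list.

    u        : (d,) encoded target vector
    cond_idx : integer indices of the unmasked (conditional) precursors
    E        : (n_prec, d) precursor embedding matrix, reused as classifier weights
    bias     : (n_prec,) classifier bias (hypothetical; not mentioned in the caption)
    """
    # Stack the target vector with the embeddings of the conditional precursors.
    tokens = np.vstack([u[None, :], E[cond_idx]])      # (1 + k, d)
    # Assumed stand-in for the attention block: the target vector is the query.
    scores = tokens @ u / np.sqrt(u.shape[0])          # (1 + k,)
    weights = np.exp(scores - scores.max())
    weights /= weights.sum()
    h = weights @ tokens                               # (d,) aggregated vector
    # Weight sharing: the classifier reuses the embedding matrix E.
    return sigmoid(E @ h + bias)

def bce_loss(probs, labels, eps=1e-12):
    """Binary cross-entropy over all precursors (multilabel classification)."""
    p = np.clip(probs, eps, 1.0 - eps)
    return -np.mean(labels * np.log(p) + (1 - labels) * np.log(1 - p))

rng = np.random.default_rng(0)
n_prec, d = 6, 8                                       # hypothetical sizes
E = rng.normal(scale=0.1, size=(n_prec, d))
bias = np.zeros(n_prec)
u = rng.normal(size=d)

full_set = np.array([1, 0, 1, 1, 0, 0])                # 1 = "Y", 0 = "N"
used = np.flatnonzero(full_set)
keep = rng.random(used.size) < 0.5                     # randomly mask part of the set
cond_idx = used[keep]                                  # remaining precursors = condition

probs = mpc_forward(u, cond_idx, E, bias)
loss = bce_loss(probs, full_set)
```

Minimizing `loss` over many targets and random masks would push the probabilities of the "Y" precursors above those of the "N" precursors, which is the behavior the caption describes. Because `E` serves as both the input embedding and the output weights, a precursor's embedding and its classifier row are trained as one set of parameters.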