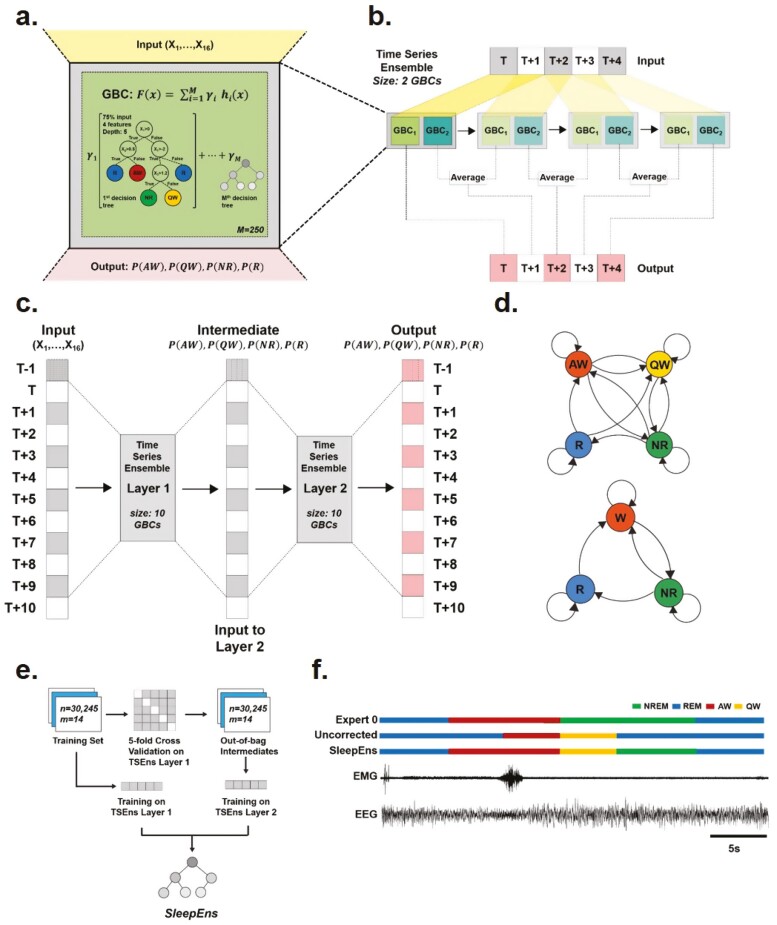

Figure 2.

Model architecture of SleepEns, physiological state transition graph, and training protocol. (a) Representation of a gradient boosting classifier (GBC) architecture, receiving 16 input features derived from EEG and EMG signals (X1, …, X16). A GBC is an ensemble of successive decision trees and can be expressed as the mathematical function $F(x) = \sum_{m=1}^{M} \gamma_m h_m(x)$, where F(x) is the overall GBC, a weighted sum of M base estimators (i.e., M is the number of decision trees), h_m(x) is the m-th decision tree, x is the input, and γ_m is the weight of the m-th estimator. For SleepEns, we found through cross-validation that the optimal parameters were 250 base estimators (i.e., M = 250), each receiving a random subset of input features limited to the square root of the total number of features (i.e., four features randomly selected from the 16 input features for each estimator) and trained on a random 75% of the training data. (b) Demonstration of how a small-scale Time Series Ensemble, composed of only two GBCs, operates on a five-epoch time series as it moves its window along the sequence. The first epoch, T, is determined by only one gradient boosting classifier (GBC 1), as there is no prior epoch for GBC 2 to operate on. The second epoch, T + 1, is determined by combining the output of GBC 2 using T to T + 1 with the output of GBC 1 (of the following time step) using T + 1 to T + 2. This continues until the final epoch, T + 4, which is determined solely by GBC 2. (c) Architectural details of the SleepEns model, consisting of two layers of Time Series Ensembles, each with a window size of 10, for a total of 20 GBCs, each with 250 constituent estimators (M). The model operates in a greedy fashion, using the first layer to produce intermediate probabilities of active wake (PAW), quiet wake (PQW), NREM sleep (PNR), and REM sleep (PR) across the sequence and then feeding these to the second layer to produce the final output.
(d) State transition graph illustrating the feasible transitions in the 4-state (top) and 3-state (bottom) schemes. In particular, wake states can transition between each other, and REM must always be preceded by NREM and can only transition into wake states. (e) SleepEns is trained layer by layer. To train a subsequent Time Series Ensemble (TSEns) layer, cross-validation is used to produce out-of-bag values to avoid overfitting. (f) Nine epochs covering 45 s were taken from one of the test recordings to demonstrate an example of a postprocessing correction, in this case the utility of Valid Transitions to improve the predictions by implementing the most probable physiological state transition sequence. The two bottom traces represent the EEG and EMG input signals. The top three traces depict hypnograms by Expert 0, SleepEns without postprocessing, and the final postprocessed SleepEns. Note that without postprocessing, SleepEns can predict a direct wake-to-REM sleep transition.
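
The transition constraints in (d) and the correction in (f) can be illustrated with a minimal sketch. The caption does not say how Valid Transitions is implemented, so the code below is only one plausible reading: a Viterbi-style dynamic program that picks the most probable state sequence containing only allowed transitions. The names `ALLOWED` and `most_probable_valid_sequence`, the example probabilities, the assumption that wake and NREM can transition in both directions, that any state may persist, and that the first epoch is unconstrained are all illustrative and not taken from the source.

```python
import math

STATES = ["AW", "QW", "NR", "R"]  # active wake, quiet wake, NREM, REM

# Assumed allowed transitions (from-state -> set of to-states).
# From the caption: wake states inter-transition, REM only follows NREM,
# and REM only leads to wake. Everything else here is an assumption.
ALLOWED = {
    "AW": {"AW", "QW", "NR"},
    "QW": {"AW", "QW", "NR"},
    "NR": {"AW", "QW", "NR", "R"},
    "R": {"AW", "QW", "R"},
}


def most_probable_valid_sequence(probs):
    """Return the highest-probability state path using only allowed transitions.

    probs: list of dicts mapping each state to its predicted probability,
           one dict per epoch.
    """
    if not probs:
        return []
    eps = 1e-12  # avoids log(0)
    # Best log-score of any valid path ending in each state (first epoch is free).
    score = {s: math.log(probs[0][s] + eps) for s in STATES}
    back = []  # back[t][s] = best predecessor of state s at epoch t + 1
    for p in probs[1:]:
        new_score, pointers = {}, {}
        for s in STATES:
            # Only predecessors that may legally transition into s.
            candidates = [q for q in STATES if s in ALLOWED[q]]
            prev = max(candidates, key=lambda q: score[q])
            new_score[s] = score[prev] + math.log(p[s] + eps)
            pointers[s] = prev
        score = new_score
        back.append(pointers)
    # Trace back from the best final state.
    state = max(STATES, key=lambda s: score[s])
    path = [state]
    for pointers in reversed(back):
        state = pointers[state]
        path.append(state)
    path.reverse()
    return path


# Hypothetical probabilities whose per-epoch argmax is QW, QW, R, R,
# i.e. a direct wake-to-REM transition.
example = [
    {"AW": 0.10, "QW": 0.80, "NR": 0.05, "R": 0.05},
    {"AW": 0.05, "QW": 0.70, "NR": 0.20, "R": 0.05},
    {"AW": 0.05, "QW": 0.05, "NR": 0.30, "R": 0.60},
    {"AW": 0.05, "QW": 0.05, "NR": 0.30 - 0.20, "R": 0.80},
]
corrected = most_probable_valid_sequence(example)
print(corrected)
```

In this made-up example the per-epoch argmax would jump from quiet wake straight to REM, whereas the constrained path inserts an NREM epoch before REM, which mirrors the kind of correction shown in (f).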