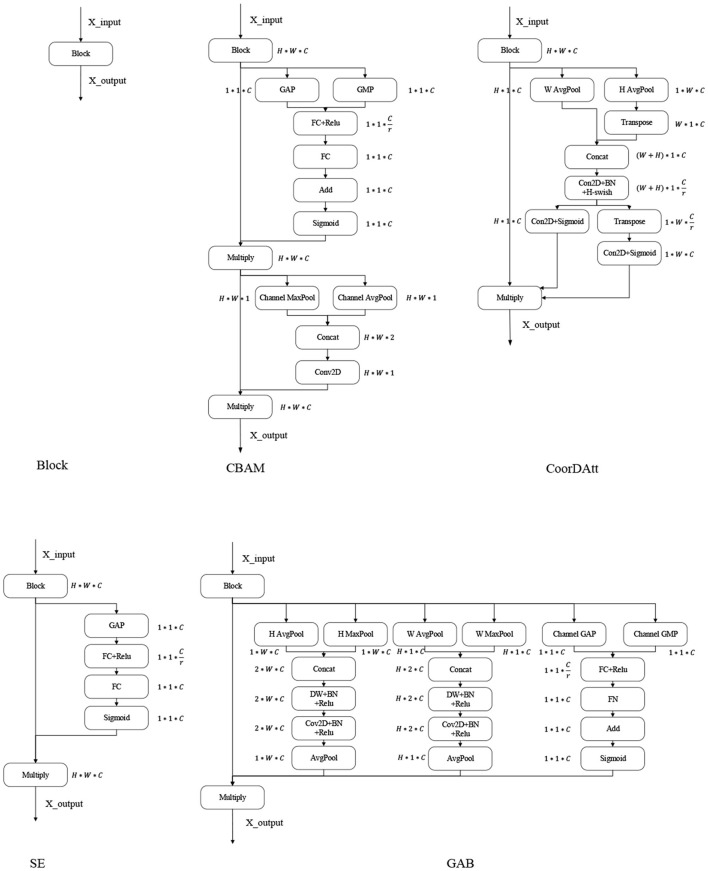

Figure 1.

Attention mechanism. GAP, global average pooling layer; GMP, global max pooling layer; Channel AvgPool and Channel MaxPool, average and maximum pooling over the channels, respectively; W AvgPool and W MaxPool, average and maximum pooling over the width, respectively; H AvgPool and H MaxPool, average and maximum pooling over the height, respectively; FC, fully connected layer; ReLU, sigmoid, and H-swish, activation functions; BN, batch normalization; Conv2D, 2D convolutional layer; Add, element-wise addition; Multiply, vector multiplication; Transpose, transposition of high-dimensional vectors; Concat, concatenation along a given dimension.
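As a reading aid for the pooling terms in the legend, the following minimal NumPy sketch shows which axes each operation would reduce. It assumes a single feature map stored as `(C, H, W)` and assumes that "W" and "H" pooling reduce the width and height axes, respectively; the figure does not specify the tensor layout, and the function name `pooled_descriptors` is hypothetical, not taken from the paper.

```python
import numpy as np

def pooled_descriptors(x):
    """Return the pooled tensors named in the legend for one feature map.

    x is assumed to have shape (C, H, W); this layout is an assumption,
    not something stated in the figure.
    """
    return {
        # Global pooling: reduce both spatial axes, one value per channel.
        "GAP": x.mean(axis=(1, 2)),          # shape (C,)
        "GMP": x.max(axis=(1, 2)),           # shape (C,)
        # Channel pooling: reduce the channel axis, one value per pixel.
        "ChannelAvgPool": x.mean(axis=0),    # shape (H, W)
        "ChannelMaxPool": x.max(axis=0),     # shape (H, W)
        # Width pooling: reduce the width axis.
        "WAvgPool": x.mean(axis=2),          # shape (C, H)
        "WMaxPool": x.max(axis=2),           # shape (C, H)
        # Height pooling: reduce the height axis.
        "HAvgPool": x.mean(axis=1),          # shape (C, W)
        "HMaxPool": x.max(axis=1),           # shape (C, W)
    }

# Toy input: 2 channels, height 3, width 4.
x = np.arange(24, dtype=np.float64).reshape(2, 3, 4)
d = pooled_descriptors(x)
for name, value in d.items():
    print(name, value.shape)
```

Under these assumptions, each average/max pair produces tensors of the same shape, which is what would allow the two branches to be merged by the Add or Concat blocks.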