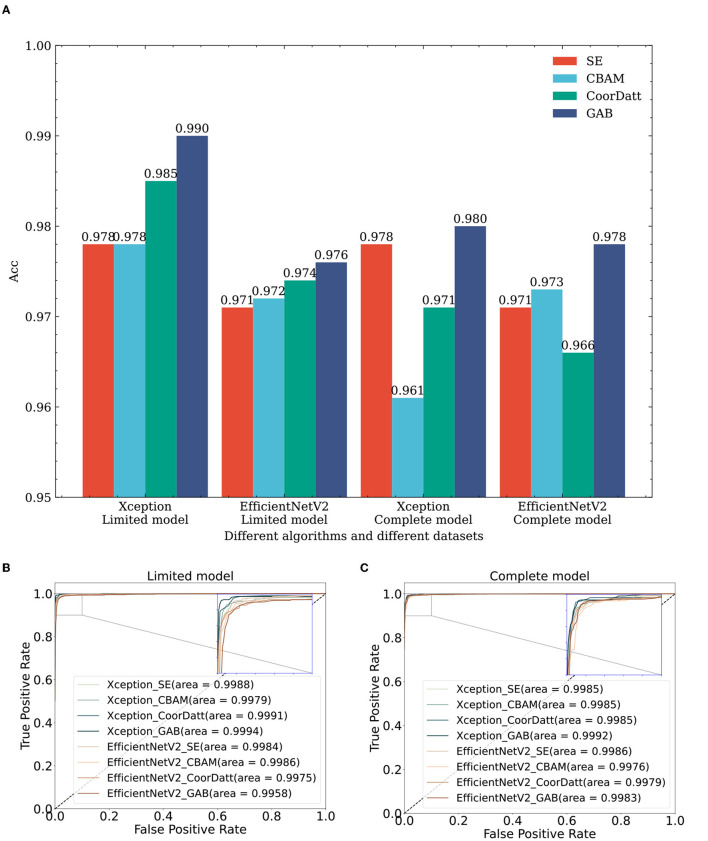

Figure 7.

Impact of attention mechanisms on classification performance: a comparative study across multiple algorithms and datasets. (A) Effect of attention mechanisms on classification accuracy across datasets and algorithms. (B) Effect of attention mechanisms on the ROC curves of different algorithms on the limit model dataset. (C) Effect of attention mechanisms on the ROC curves of different algorithms on the complete model dataset. Xception_SE, Xception_CBAM, Xception_CoorDatt, and Xception_GAB denote Xception combined with the SE, CBAM, CoorDatt, and GAB attention mechanisms, respectively; EfficientNetV2_SE, EfficientNetV2_CBAM, EfficientNetV2_CoorDatt, and EfficientNetV2_GAB denote EfficientNetV2 combined with the same four mechanisms.