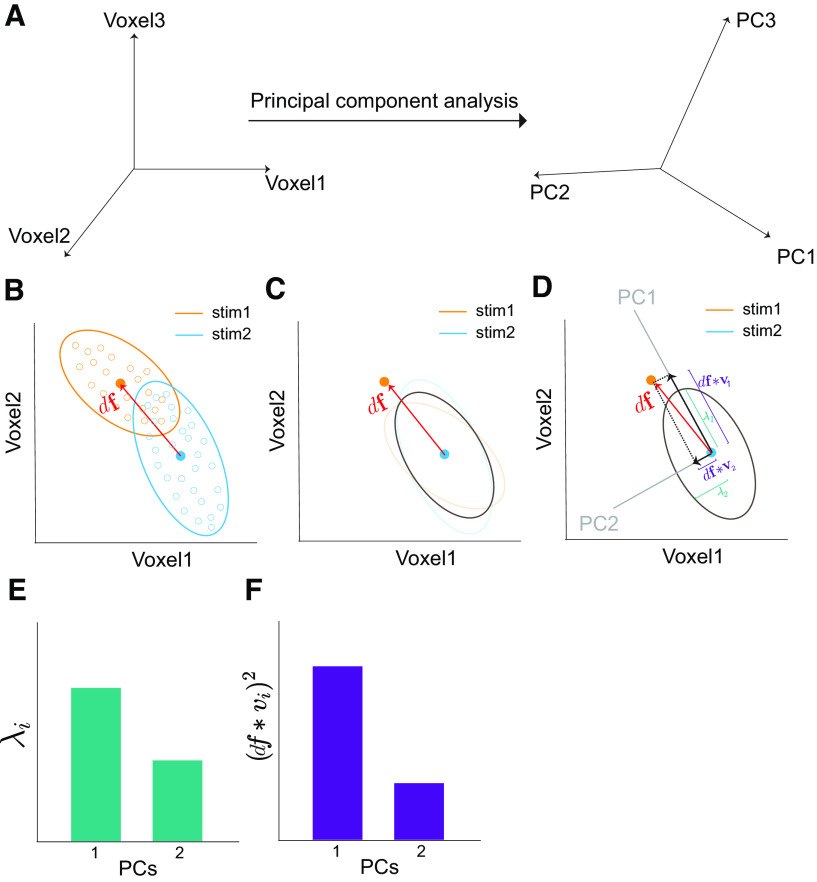

Figure 7.

Schematics of the eigendecomposition of information. A, The information contained in trial-by-trial high-dimensional population responses can be calculated in the eigenspace (obtained by principal component analysis) instead of the original voxel space (Eq. 13). Because the eigendimensions are mutually orthogonal and the responses are uncorrelated along them, the information of the whole population can be reformulated simply as the sum of the information along each eigendimension (Eq. 13). B, Two 2D response distributions of two voxels to two stimuli. The yellow and blue ellipses also show the directions of the two covariances. The red vector (the difference between the two mean responses) can be viewed as the Euclidean distance between the two distributions (Eq. 2, assuming an identity covariance). C, The gray ellipse depicts the averaged covariance (the average of the two covariances in B; Eq. 3). D, The averaged covariance can be decomposed into two principal components (PC1 and PC2). E, The variance along each PC is illustrated. Intervoxel RCs result in a larger variance along PC1 than along PC2. F, The squared projected signals on each PC are illustrated. Note that the sum of the squared projected signals (i.e., the sum of the bars in F) is a constant, equal to the squared norm of the signal vector.
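The two-voxel case in panels B to F can be checked numerically. The sketch below is illustrative only. It assumes that the information in Eq. 2 takes the standard linear Fisher information (squared Mahalanobis) form, signal-vector transpose times inverse covariance times signal vector. It also assumes that Eq. 3 is the plain average of the two covariances. The covariances, means, and helper names (`average_cov`, `eig_sym_2x2`, `info_direct`, `info_eigen`) are hypothetical. The sketch computes the information directly in voxel space and then as a sum over eigendimensions, where each eigendimension contributes its squared projected signal divided by its variance. It also confirms that the squared projections sum to the squared norm of the signal vector.

```python
import math

def average_cov(cov1, cov2):
    """Average two 2x2 covariances, each given as (var_x, cov_xy, var_y).
    Assumption: Eq. 3 is a plain average of the two covariances."""
    return tuple((p + q) / 2 for p, q in zip(cov1, cov2))

def eig_sym_2x2(a, b, c):
    """Eigendecompose the symmetric matrix [[a, b], [b, c]].
    Returns eigenvalues in descending order (PC1, PC2) and unit eigenvectors."""
    mean = (a + c) / 2
    radius = math.hypot((a - c) / 2, b)
    lam1, lam2 = mean + radius, mean - radius
    if abs(b) > 1e-12:
        v1 = (b, lam1 - a)  # solves (A - lam1 * I) v = 0
    else:
        v1 = (1.0, 0.0) if a >= c else (0.0, 1.0)  # already diagonal
    n = math.hypot(*v1)
    u1 = (v1[0] / n, v1[1] / n)
    u2 = (-u1[1], u1[0])  # in 2D, the other eigenvector is orthogonal to u1
    return (lam1, lam2), (u1, u2)

def info_direct(delta, a, b, c):
    """delta^T Sigma^{-1} delta in voxel space, via the closed-form 2x2 inverse."""
    det = a * c - b * b
    d0, d1 = delta
    return (c * d0 * d0 - 2 * b * d0 * d1 + a * d1 * d1) / det

def info_eigen(delta, a, b, c):
    """Same quantity summed over eigendimensions: sum_i (u_i . delta)^2 / lam_i.
    Also returns the squared projected signals (the bars in panel F)."""
    lams, us = eig_sym_2x2(a, b, c)
    proj_sq = [(u[0] * delta[0] + u[1] * delta[1]) ** 2 for u in us]
    total = sum(p / lam for p, lam in zip(proj_sq, lams))
    return total, proj_sq, lams

# Hypothetical two-voxel example with positively correlated noise.
cov1 = (1.0, 0.6, 1.0)
cov2 = (1.2, 0.5, 0.8)
mu1, mu2 = (0.0, 0.0), (1.0, 0.5)
delta = (mu2[0] - mu1[0], mu2[1] - mu1[1])  # signal vector (red vector in B)

a, b, c = average_cov(cov1, cov2)
total_eig, proj_sq, lams = info_eigen(delta, a, b, c)
total_direct = info_direct(delta, a, b, c)

print("eigenvalues (PC1, PC2):", lams)
print("squared projected signals:", proj_sq)
print("information, voxel space vs eigenspace:", total_direct, total_eig)
print("sum of squared projections vs |delta|^2:",
      sum(proj_sq), delta[0] ** 2 + delta[1] ** 2)
```

The two information values agree because the averaged covariance is diagonal in its own eigenbasis. Rotating the signal vector into that basis leaves its squared length unchanged.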