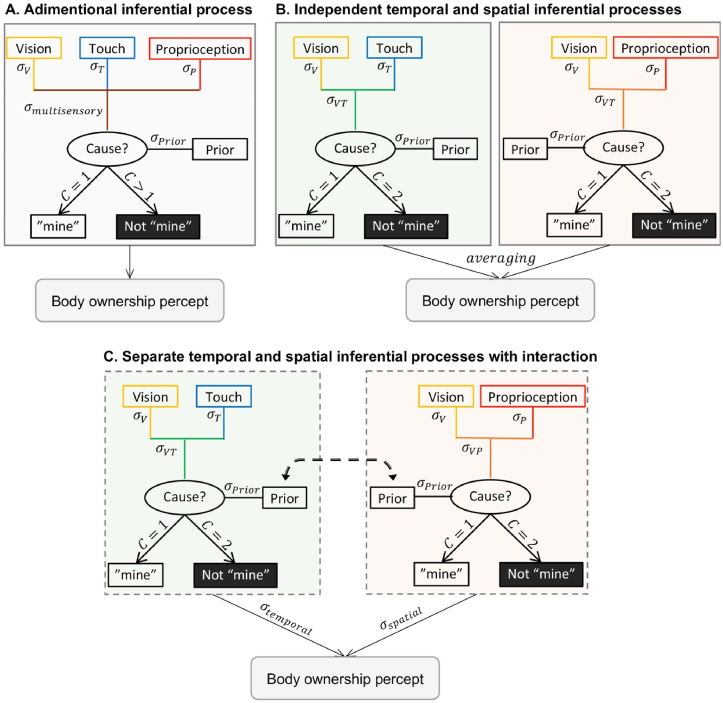

Fig. 4.

Theoretical models for the integration of visual, tactile, and proprioceptive signals into a body ownership percept. In the first model (A), the most likely causal structure (a common cause, C = 1, or different causes, C > 1) for the visual, tactile, and proprioceptive signals is inferred from the combination of the three sensory cues and the corresponding sensory uncertainty (resulting from the combination of unisensory noise), without distinction between temporal and spatial dimensions. From this Bayesian causal inference process, the participant perceives the hand in front of them as their own or not, depending on whether a common cause is inferred. The lack of significant change in the sensory uncertainty parameter estimates between our no-noise and noise conditions tends to argue against this model. Instead, our results suggest that bimodal congruencies along the temporal (visuotactile) and spatial (visuoproprioceptive) dimensions lead to separate estimates, which are subsequently merged into a single multisensory estimate that corresponds to the subjective feeling of body ownership. The last two models share this hierarchical structure but diverge in their assumptions about how the spatial and temporal estimates interact. In the model of Samad and colleagues (2015), the two estimates are assumed to be fully independent, and the final percept is computed as an average of these two estimates (B). Alternatively, we propose a model in which the estimates influence each other via a modulation of their respective priors; additionally, when the estimates are combined, each one contributes to the final sense of body ownership according to its relative reliability (C).
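
The difference between the final combination steps of models B and C can be illustrated with a minimal sketch. It is not the fitted model from this study: the function names, the treatment of each estimate as a probability of a common cause, and the use of inverse variance as the measure of reliability are all assumptions made for illustration. The mutual modulation of priors proposed in model C is not represented here.

```python
def average_combination(p_temporal, p_spatial):
    """Model B-style step: unweighted mean of the temporal and spatial estimates."""
    return 0.5 * (p_temporal + p_spatial)


def reliability_weighted_combination(p_temporal, p_spatial,
                                     sigma_temporal, sigma_spatial):
    """Model C-style step: weight each estimate by its relative reliability.

    Reliability is assumed here to be the inverse variance (1 / sigma^2),
    a common convention in cue-combination models; this is an illustrative
    assumption, not a detail taken from the caption.
    """
    r_temporal = 1.0 / sigma_temporal ** 2
    r_spatial = 1.0 / sigma_spatial ** 2
    w_temporal = r_temporal / (r_temporal + r_spatial)  # weights sum to 1
    return w_temporal * p_temporal + (1.0 - w_temporal) * p_spatial


# Hypothetical values: strong temporal evidence, weak spatial evidence.
p_t, p_s = 0.9, 0.3
ownership_b = average_combination(p_t, p_s)
# The spatial estimate is assumed twice as noisy, so it receives less weight.
ownership_c = reliability_weighted_combination(p_t, p_s, 1.0, 2.0)
```

With equal uncertainties the two rules coincide; when one estimate is noisier, the weighted rule pulls the final percept toward the more reliable estimate.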