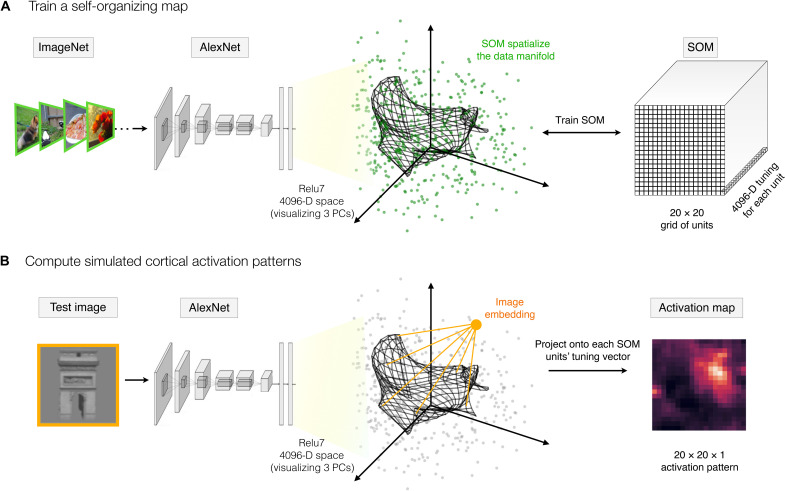

Fig. 1. Self-organizing the feature space of a deep neural network.

(A) A self-organizing map (SOM) is appended to a pretrained AlexNet, following the relu7 stage. The relu7 layer is a 4096-D feature space, visualized here along the first three principal components (PCs), where the green dots reflect the embedding of a sample of ImageNet validation images. The final SOM layer consists of a 20 × 20 2D map of units, each with 4096-D tuning (depicted as a black grid). During training, the tuning vectors of these map units are updated to capture the data manifold of the input images (i.e., the set of green dots). (B) To compute the spatial activation map for any test image, the image is run through the model and its relu7 embedding is computed. Then, for each map unit, the projection of the image embedding onto that unit's tuning vector is computed (conceiving of the tuning vectors as carrying out a filter operation), and this value is taken as the unit's activation for the image.
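The computation in (B) can be sketched in a few lines of NumPy. This is a minimal illustration rather than the authors' code: it assumes that "projection" means an unnormalized dot product, that the tuning vectors are stored row-major as a 400 × 4096 array, and it uses random placeholders in place of trained SOM weights and a real relu7 embedding. The function name `som_activation_map` is hypothetical.

```python
import numpy as np


def som_activation_map(embedding, tuning, map_shape=(20, 20)):
    """Project one image embedding onto every map unit's tuning vector.

    embedding : (n_features,) array, e.g. a 4096-D relu7 embedding.
    tuning    : (n_units, n_features) array, one tuning vector per unit,
                ordered row-major over the 2D map (an assumption).
    Returns an array of shape `map_shape` with one activation per unit.
    """
    embedding = np.asarray(embedding, dtype=float)
    tuning = np.asarray(tuning, dtype=float)
    n_units = map_shape[0] * map_shape[1]
    if tuning.shape != (n_units, embedding.shape[0]):
        raise ValueError("tuning must have shape (n_units, n_features)")
    # Assumption: "projection" is taken as a plain dot product, so each
    # tuning vector acts as a linear filter on the embedding.
    activations = tuning @ embedding
    return activations.reshape(map_shape)


# Placeholder data only: random values stand in for trained SOM weights
# and for the relu7 embedding of a test image.
rng = np.random.default_rng(0)
tuning = rng.standard_normal((20 * 20, 4096))
embedding = np.maximum(rng.standard_normal(4096), 0.0)  # relu output is non-negative

activation_map = som_activation_map(embedding, tuning)
print(activation_map.shape)
```

If the projection were instead normalized by the length of each tuning vector, the only change would be dividing `activations` by `np.linalg.norm(tuning, axis=1)` before reshaping.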