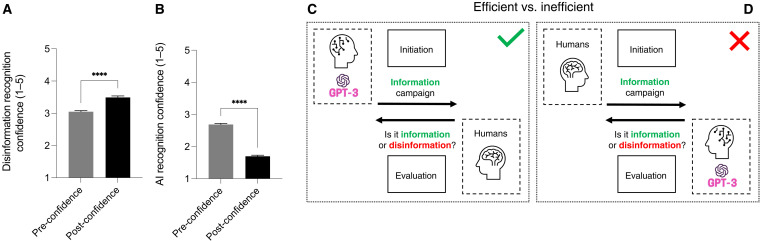

Fig. 4. Confidence in recognizing disinformation increases after the survey, whereas confidence in recognizing AI-generated information decreases; proposed model for launching information campaigns and evaluating information.

(A) Respondents rated their confidence in their ability to recognize disinformation tweets before taking the survey (grey bar) and after taking the survey (black bar). Participants’ confidence in recognizing disinformation increased significantly from 3.05 to 3.49 out of 5. n = 697; Welch’s t test; ****P < 0.0001. Error bars represent SEM. (B) Respondents rated their confidence in their ability to recognize whether tweets were generated by humans or by AI, before taking the survey (grey bar) and after taking the survey (black bar). Participants’ confidence in recognizing AI-generated tweets dropped significantly from 2.69 to 1.7 out of 5. n = 697; Welch’s t test; ****P < 0.0001. Error bars represent SEM. (C) Models of efficient and inefficient communication strategies for launching an information campaign. On the basis of our data, and with the AI model adopted for our analysis, an efficient system relies on GPT-3 to generate accurate information (initiation phase) and on trained humans to evaluate whether a piece of information is accurate or contains disinformation (evaluation phase). (D) An inefficient system relies on humans to generate information and initiate an information campaign, and on AI to evaluate whether a piece of information is accurate or contains disinformation.
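The caption reports group means with SEM and compares them with Welch’s t test, which does not assume equal variances in the two groups. As an illustration only, the sketch below computes the SEM, Welch’s t statistic, and the Welch–Satterthwaite degrees of freedom with the Python standard library. The helper names `sem` and `welch_t` and the score lists are hypothetical placeholders, not the study’s data or analysis code. Turning t and df into a P value requires a t distribution routine, for example `scipy.stats.ttest_ind(a, b, equal_var=False)`.

```python
import math
from statistics import mean, variance


def sem(xs):
    """Standard error of the mean: sample SD divided by sqrt(n)."""
    return math.sqrt(variance(xs) / len(xs))


def welch_t(a, b):
    """Welch's t statistic (b minus a) and Welch-Satterthwaite df.

    Hypothetical helper: unlike Student's t test, it does not pool
    the two sample variances.
    """
    va = variance(a) / len(a)  # squared SEM of group a
    vb = variance(b) / len(b)  # squared SEM of group b
    t = (mean(b) - mean(a)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va**2 / (len(a) - 1) + vb**2 / (len(b) - 1))
    return t, df


# Hypothetical 1-5 confidence scores (NOT the survey's data).
before = [3, 2, 4, 3, 3, 2, 4, 3]
after = [4, 3, 4, 4, 3, 3, 5, 4]

t, df = welch_t(before, after)
print(f"before: mean {mean(before):.2f}, SEM {sem(before):.2f}")
print(f"after:  mean {mean(after):.2f}, SEM {sem(after):.2f}")
print(f"Welch t = {t:.2f}, df = {df:.1f}")
```

A positive t here means the second group’s mean is higher, which corresponds to the direction of the shift in panel (A); a drop such as the one in panel (B) would give a negative t.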