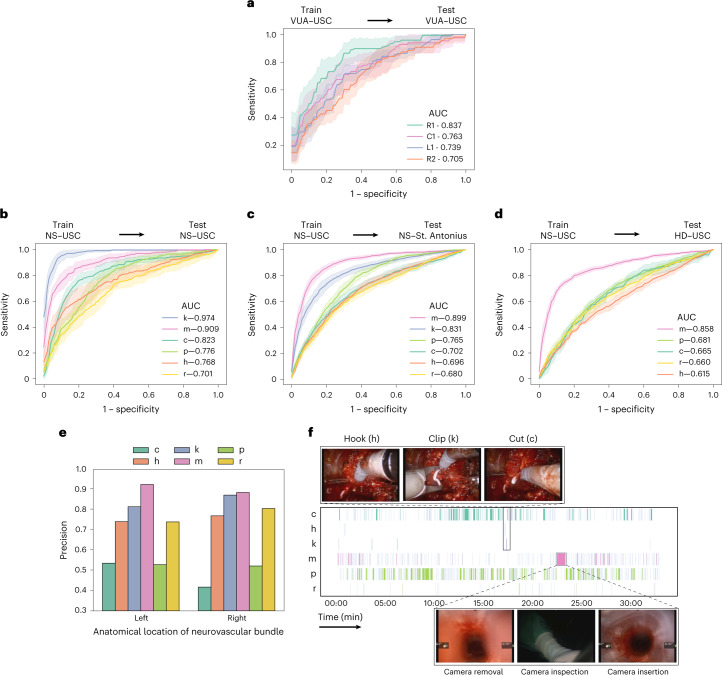

Fig. 3. Decoding surgical gestures from videos.

a, SAIS is trained and evaluated on the VUA data exclusively from USC. The suturing gestures are right forehand under (R1), right forehand over (R2), left forehand under (L1) and combined forehand over (C1). b–d, SAIS is trained on the NS data exclusively from USC and evaluated on the NS data from USC (b), NS data from SAH (c) and HD data from USC (d). The dissection gestures are cold cut (c), hook (h), clip (k), camera move (m), peel (p) and retraction (r). Note that clips (k) are not used during the HD step. Results are shown as an average (±1 standard deviation) of ten Monte Carlo cross-validation steps. e, Proportion of predicted gestures identified as correct (precision) stratified on the basis of the anatomical location of the neurovascular bundle in which the gesture is performed. f, Gesture profile where each row represents a distinct gesture and each vertical line represents the occurrence of that gesture at a particular time. SAIS identified a sequence of gestures (hook, clip and cold cut) that is expected in the NS step of RARP procedures, and discovered outlier behaviour of a longer-than-normal camera move gesture corresponding to the removal, inspection and re-insertion of the camera into the patient’s body.
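The summary statistics named in this caption (precision, its stratification by anatomical location, and the average ±1 standard deviation over ten Monte Carlo cross-validation steps) can be illustrated with a minimal sketch. It is not the authors' evaluation code: the function names, the 20% held-out fraction, the sample-level splitting, and the use of the sample standard deviation are all assumptions made for illustration.

```python
import random
import statistics


def precision(y_true, y_pred, label):
    """Fraction of predictions of `label` that are correct.

    Returns None when `label` is never predicted (precision undefined).
    """
    truths_for_predicted = [t for t, p in zip(y_true, y_pred) if p == label]
    if not truths_for_predicted:
        return None
    return sum(t == label for t in truths_for_predicted) / len(truths_for_predicted)


def precision_by_group(y_true, y_pred, groups, label):
    """Precision for `label`, computed separately within each group
    (for example, a hypothetical 'left' / 'right' anatomical location)."""
    result = {}
    for group in sorted(set(groups)):
        idx = [i for i, g in enumerate(groups) if g == group]
        result[group] = precision(
            [y_true[i] for i in idx], [y_pred[i] for i in idx], label
        )
    return result


def monte_carlo_splits(n_samples, n_splits=10, test_fraction=0.2, seed=0):
    """Yield (train_idx, test_idx) pairs from repeated random shuffles.

    Assumption: splits are drawn over individual samples with a 20% test
    fraction; the original study may split at a different level.
    """
    rng = random.Random(seed)
    n_test = max(1, int(round(n_samples * test_fraction)))
    indices = list(range(n_samples))
    for _ in range(n_splits):
        shuffled = indices[:]
        rng.shuffle(shuffled)
        yield shuffled[n_test:], shuffled[:n_test]


def summarize(scores):
    """Mean and sample standard deviation of per-split scores."""
    mean = statistics.mean(scores)
    sd = statistics.stdev(scores) if len(scores) > 1 else 0.0
    return mean, sd
```

In use, a model would be fitted on each `train_idx`, scored with `precision` on the matching `test_idx`, and the ten per-split scores passed to `summarize` to obtain the mean and the ±1 standard deviation band.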