Abstract

Brain-wide association studies (BWAS) are a fundamental tool in discovering brain-behavior associations. Several recent studies showed that thousands of study participants are required for good replicability of BWAS because the standardized effect sizes (ESs) are much smaller than the reported standardized ESs in smaller studies. Here, we perform analyses and meta-analyses of a robust effect size index using 63 longitudinal and cross-sectional magnetic resonance imaging studies from the Lifespan Brain Chart Consortium (77,695 total scans) to demonstrate that optimizing study design is critical for increasing standardized ESs and replicability in BWAS. A meta-analysis of brain volume associations with age indicates that BWAS with larger variability in covariate have larger reported standardized ES. In addition, the longitudinal studies we examined reported systematically larger standardized ES than cross-sectional studies. Analyzing age effects on global and regional brain measures from the United Kingdom Biobank and the Alzheimer’s Disease Neuroimaging Initiative, we show that modifying longitudinal study design through sampling schemes improves the standardized ESs and replicability. Sampling schemes that improve standardized ESs and replicability include increasing between-subject age variability in the sample and adding a single additional longitudinal measurement per subject. To ensure that our results are generalizable, we further evaluate these longitudinal sampling schemes on cognitive, psychopathology, and demographic associations with structural and functional brain outcome measures in the Adolescent Brain and Cognitive Development dataset. We demonstrate that commonly used longitudinal models can, counterintuitively, reduce standardized ESs and replicability. The benefit of conducting longitudinal studies depends on the strengths of the between- versus within-subject associations of the brain and non-brain measures. Explicitly modeling between- versus within-subject effects avoids averaging the effects and allows optimizing the standardized ESs for each separately. Together, these results provide guidance for study designs that improve the replicability of BWAS.

Brain-wide association studies (BWAS) use noninvasive magnetic resonance imaging (MRI) to identify associations between inter-individual differences in behavior, cognition, biological or clinical measurements and brain structure or function1,2. A fundamental goal of BWAS is to identify true underlying biological associations that improve our understanding of how brain organization and function are linked to brain health across the lifespan.

Recent studies have raised concerns about the replicability of BWAS1–3. Statistical replicability is typically defined as the probability of obtaining consistent results from hypothesis tests across different studies. Like statistical power, replicability is a function of both the standardized effect sizes (ESs) and the sample size4–6. Low replicability in BWAS has been attributed to a combination of small sample sizes, small standardized ESs, and bad research practices (such as p-hacking and publication bias)1,2,7–11. The most obvious solution to increasing the replicability in BWAS is to increase study sample sizes. Several recent studies showed that thousands of study participants are required to obtain replicable findings in BWAS1,2. However, massive sample sizes are often infeasible in practice.

Standardized ESs (such as Pearson’s correlation and Cohen’s d) are statistical values that not only depend on the underlying biological association in the population, but also on the study design. Two studies of the same biological effect with different study designs will have different standardized ESs. For example, contrasting brain function of depressed versus non-depressed groups will have a different Cohen’s d ES if the study design measures more extreme depressed states contemporaneously with measures of brain function, as opposed to less extreme depressed states, even if the underlying biological effect is the same. While researchers cannot increase the magnitude of the underlying biological association, its standardized ES – and thus its replicability – can be increased by critical features of study design.

In this paper, we focus on identifying modifiable study design features that can be used to improve the replicability of BWAS by increasing standardized ESs. Increasing standardized ESs through study design prior to data collection stands in sharp contrast to bad research practices that can artificially inflate reported ESs, such as p-hacking and publication bias. Surprisingly, there has been very little research regarding how modifications to the study design might improve BWAS replicability. Specifically, we focus on two major design features that directly influence standardized ESs: variation in sampling scheme and longitudinal designs1,12–14. Notably, these design features can be implemented without inflating the sample estimate of the underlying biological effect when using correctly specified models15. By increasing the replicability of BWAS through study design, we can more efficiently utilize the National Institutes of Health’s $1.8 billion average annual investment in neuroimaging research in the past decade16.

Here, we conduct a comprehensive investigation of cross-sectional and longitudinal BWAS designs by capitalizing on multiple large-scale data resources. Specifically, we begin by analyzing and meta-analyzing 63 neuroimaging datasets including 77,695 scans from 60,900 cognitively normal (CN) participants from the Lifespan Brain Chart Consortium17 (LBCC). We leverage longitudinal data from the UK Biobank (UKB; up to 29,031 scans), the Alzheimer’s Disease Neuroimaging Initiative (ADNI; 2,232 scans), and the Adolescent Brain Cognitive Development study (ABCD; up to 17,210 scans). Within these datasets, we consider many of the most commonly measured brain phenotypes, including multiple measures of brain structure and function. To ensure that our results are broadly generalizable, we evaluate associations with diverse covariates of interest, including age, sex, cognition, and psychopathology. To facilitate comparison between BWAS designs, we introduce a new version of the robust effect size index (RESI) that allows us to demonstrate how longitudinal study design directly impacts standardized ESs. We show that covariate variability has a marked impact on the standardized ES and the replicability of BWAS. Additionally, we find that longitudinal designs are not always beneficial and can in fact reduce standardized ESs in specific cases where careful modeling is required. Critically, the impact of longitudinal data depends directly on the difference in between- and within-subjects effects. Together, our results emphasize that careful study design can markedly improve the replicability of BWAS. To this end, we provide recommendations that allow investigators to optimally design their studies prospectively.

Meta-analyses show standardized ESs depend on study population and design

To fit each study-level analysis, we regress each of the global brain measures (total gray matter volume (GMV), total subcortical gray matter volume (sGMV), and total white matter volume (WMV), and, mean cortical thickness (CT)) and regional brain measures (regional GMV and CT, based on Desikan-Killiany parcellation18) on sex and age in each of the 63 neuroimaging datasets from the LBCC. Age is modeled using nonlinear spline function in linear regression models for the cross-sectional datasets and generalized estimating equations (GEEs) for the longitudinal datasets (Methods). Site effects are removed before the regressions using ComBat19,20 (Methods). Analyses for total GMV, total sGMV and total WMV use all 63 neuroimaging datasets (16 longitudinal; Tab. S1). Analyses of regional brain volumes and CT used 43 neuroimaging datasets (13 longitudinal; Methods; Tab. S2).

Throughout the present study, we use the robust effect size index (RESI)21–23 as a measure of standardized ES. The RESI is a recently developed index encompassing many types of test statistics and is equal to ½ Cohen’s d under some assumptions (Methods; Supplementary Information)21. To investigate the effects of study design features on the RESI, we perform meta-analyses for the four global brain measures and two regional brain measures in the LBCC to model the association of study-level design features with standardized ESs. Study design features are quantified as the sample mean, standard deviation (SD), and skewness of the age covariate as nonlinear terms, and a binary variable indicating the design type (cross-sectional or longitudinal). After obtaining the estimates of the standardized ESs of age and sex in each analysis of the global and regional brain measures, we conduct meta-analyses of the estimated standardized ESs using weighted linear regression models with study design features as covariates (Methods).

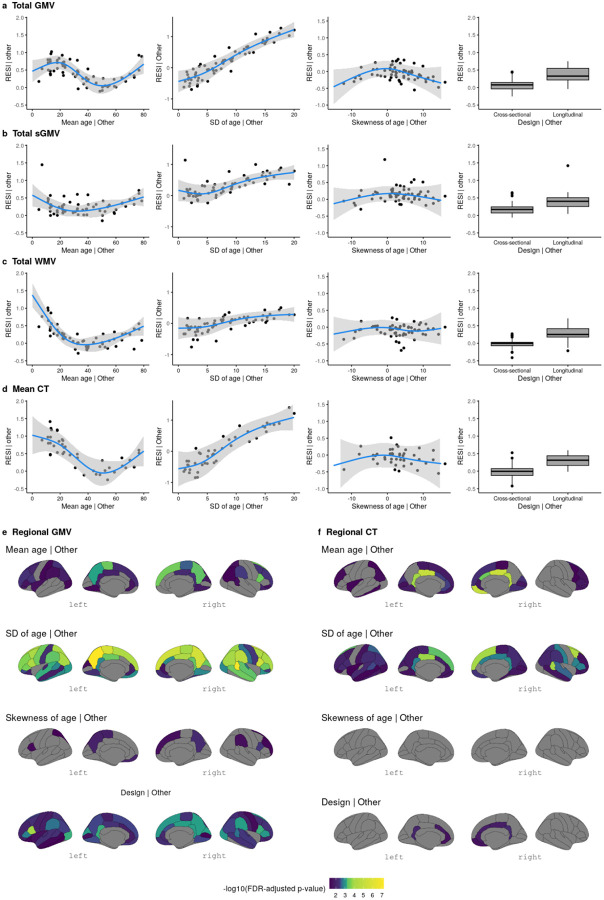

For total GMV, the partial regression plots of the effect of each study design feature demonstrate a strong cubic-shape relationship between the standardized ES for total GMV-age association and study population mean age. This cubic shape indicates that the strength of the age effect varies with respect to the age of the population being studied. The largest age effect on total GMV in the human lifespan occurs during early and late adulthood (Tab. S3; Fig. 1a). There is also a strong positive linear effect of the study population SD of age and the standardized ES for total GMV-age association. For each unit increase in the SD of age (in years), expected standardized ES increases by about 0.1 (Fig. 1a). This aligns with the well-known relationship between correlation strength and covariate SD indicated by statistical principles24. Plots for total sGMV, total WMV, and mean CT show U-shaped changes of the age effect with respect to study population mean age (Fig. 1b–d). A similar but sometimes weaker relationship is shown between expected standardized ES and study population SD and skewness of the age covariate (Fig. 1b–d; Tab. S4–S6). Finally, the meta-analyses also show a moderate effect of study design on the standardized ES of age on each of the global brain measures (Fig. 1a–d; Tab. S3–S6). The average standardized ES for total GMV-age associations in longitudinal studies (RESI=0.39) is substantially larger than in cross-sectional studies (RESI=0.08) after controlling for the study design variables, corresponding to a >380% increase in the standardized ES for longitudinal studies. This value quantifies the systematic differences in the standardized ESs between the cross-sectional and longitudinal studies among the 63 neuroimaging studies. Notably, longitudinal study design does not improve the standardized ES for biological sex, because sex does not vary within subjects in these studies (Tab. S7–S10; Fig. S2).

Fig. 1. Meta-analyses reveal study design features that are associated with larger standardized effect sizes (ESs) of age on different brain measures.

(a-d) Partial regression plots of the meta-analyses of standardized ESs (RESI) for the association between age and global brain measures (a) total gray matter volume (GMV), (b) total subcortical gray matter volume (sGMV), (c) total white matter volume (WMV), and (d) mean cortical thickness (CT) (“| Other” means after fixing the other features at constant levels: design = cross-sectional, mean age = 45 years, sample age SD = 7 and/or skewness of age = 0 (symmetric)) show that standardized ESs vary with respect to the mean and SD of age in each study. The blue curves are the expected standardized ESs for age from the locally estimated scatterplot smoothing (LOESS) curves. The gray areas are the 95% confidence bands from the LOESS curves. (e-f) The effects of study design features on the standardized ESs for the associations between age and regional brain measures (regional GMV and CT). Regions with Benjamini-Hochberg adjusted p-values<0.05 are shown in color.

For regional GMV and CT, similar effects of study design features also occur across regions (Fig. 1e–f; 34 regions per hemisphere). In most of the regions, the standardized ESs of age on regional GMV and CT are strongly associated with the study population SD of age. Longitudinal study designs generally tend to have a positive effect on the standardized ESs for regional GMV-age associations and a positive, but weaker effect on the standardized ESs for regional CT-age associations (see Extended Display Item). To improve the comparability of standardized ESs between cross-sectional and longitudinal studies, we propose a new ES index: the cross-sectional RESI for longitudinal datasets (Supplementary Information: Section 1). The cross-sectional RESI for longitudinal datasets represents the RESI in the same study population, if the longitudinal study had been conducted cross-sectionally. This newly developed ES index allows us to quantify the benefit of using a longitudinal study design in a single dataset (Supplementary Information: Section 1.3).

The meta-analysis results demonstrate that standardized ESs are dependent on study design features, such as mean age, SD of the age of the sample population, and crosssectional/longitudinal design. Moreover, the results suggest that modifying study design features, such as increasing variability and conducting longitudinal studies, can increase the standardized ESs in BWAS.

Improved sampling schemes can increase standardized ESs and replicability

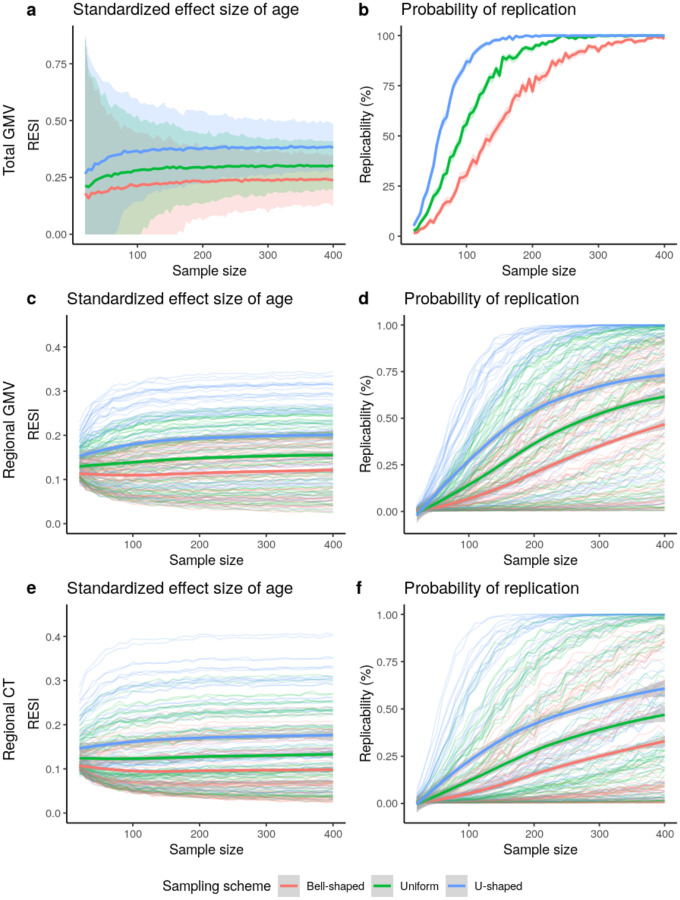

To investigate the impact of modifying the variability of the age covariate on increasing standardized ESs and replicability, we implement three sampling schemes that produce different sample SD of the age covariate. We treat the large-scale cross-sectional United Kingdom Biobank (UKB) data as the population and draw samples whose age distributions follow a pre-specified shape (bell-shaped, uniform, and U-shaped; Fig. S3; Methods). In UKB, the U-shaped sampling scheme on age increases the standardized ES for the total GMV-age association by 60% compared to bell-shaped and by 27% compared to uniform (Fig. 2a), with an associated increase in replicability (Fig. 2b). To achieve 80% replicability for detecting the total GMV-age association (Methods), <100 subjects are sufficient if using the U-shaped sampling scheme, whereas about 200 subjects are needed if the bell-shaped sampling scheme is used (Fig. 2b). A similar pattern can be seen for the regional outcomes of GMV and CT (Fig. 2c–f). The U-shaped sampling scheme typically provides the largest standardized ESs of age and the highest replicability, followed by the uniform and bell-shaped schemes. The U-shaped sampling scheme shows greater region-specific improvement in the standardized ESs for regional GMV-age and regional CT-age associations and replicability compared to the bell-shaped scheme (Fig. S4).

Fig. 2. Increased standardized effect sizes (ESs) and replicability for age associations with different brain measures: (a-b) total gray matter volume (GMV), (c-d) regional GMV, and (e-f) regional cortical thickness (CT), under three sampling schemes in the UKB study.

(N = 29,031 for total GMV and N = 29,030 for regional GMV and CT). The sampling schemes target different age distributions to increase the variability of age: Bell-shaped<Uniform<U-shaped (Fig. S3). Using the resampling schemes that increase age variability increases (a) the standardized ESs and (b) replicability (at significance level of 0.05) for total GMV-age association. The same result holds for (c-d) regional GMV and (e-f) regional CT. The curves represent the average standardized ES or estimated replicability at a given sample size and sampling scheme. The shaded areas represent the corresponding 95% confidence bands. (c-f) The bold curves are the average standardized ES or replicability across all regions with significant uncorrected effects using the full UKB data.

To investigate the effect of increasing the variability of the age covariate longitudinally, we implement sampling schemes to adjust the between- and within-subject variability of age in the bootstrap samples from the longitudinal ADNI dataset. In the bootstrap samples, each subject has two measurements (baseline and a follow-up). To imitate the true operation of a study, we select each subject’s two measurements based on baseline age and the follow-up age by targeting specific distributions for the baseline age and the age change at the follow-up time point (Fig. S5; Methods). Increasing between-subject and within-subject variability of age increases the average observed standardized ESs, with corresponding increases in replicability (Fig. 3). U-shaped between-subject sampling scheme on age increases the standardized ES for total GMV-age association by 23.6% compared to bell-shaped and by 12.1% compared to uniform, when using uniform within-subject sampling scheme (Fig. 3a).

Fig. 3. Increased standardized effect sizes (ESs) and replicability for age associations with structural brain measures under different longitudinal sampling schemes in the ADNI data.

Three different sampling schemes (Fig. S5) are implemented in bootstrap analyses to modify the between- and within-subject variability of age, respectively. (a-b) Implementing the sampling schemes results in higher (between- and/or within-subject) variability and increases the (a) standardized ES and (b) replicability (at significance level of 0.05) for the total gray matter volume (GMV)-age association. The curves represent the average standardized ES or estimated replicability and the gray areas are the 95% confidence bands across the 1,000 bootstraps. (c-d) Increasing the number of measurements from one to two per subject provides the most benefit on (c) standardized ES and (d) replicability for the total GMV-age association when using uniform between- and within-subject sampling schemes and N = 30. The points represent the mean standardized ESs or estimated replicability and the whiskers are the 95% confidence intervals. (e-h) Increased standardized ESs and replicability for the associations of age with regional (e-f) GMV and (g-h) cortical thickness (CT), across all brain regions under different sampling schemes. The bold curves are the average standardized ES or estimated replicability across all regions with significant uncorrected effects using the full ADNI data. The gray areas are the corresponding 95% confidence bands. Increasing the between- and within-subject variability of age by implementing different sampling schemes can increase the standardized ESs of age, and the associated replicability, on regional GMV and regional CT.

Additionally, we investigate the effect of the number of measurements per subject on the standardized ES and replicability in longitudinal data using the ADNI dataset. Adding a single additional measurement after the baseline increases the standardized ES for total GMV-age association by 156% and replicability by 350%. The benefit of additional measurements is minimal (Fig. 3c–d). Finally, we also evaluate the effects of the longitudinal sampling schemes on regional GMV and CT in ADNI (Fig. 3e–h). When sampling two measurements per subject, the between- and within-subject sampling schemes producing larger age variability increase the standardized ES and replicability across all regions.

Together, these results suggest having larger spacing in between- and within-subject age measurements increases standardized ES and replicability. Most of the benefit of the number of within-subject measurements is due to the first additional measurement after baseline.

Preferred sampling schemes and longitudinal designs depend on the between- and within-subject associations of the brain and non-brain measures

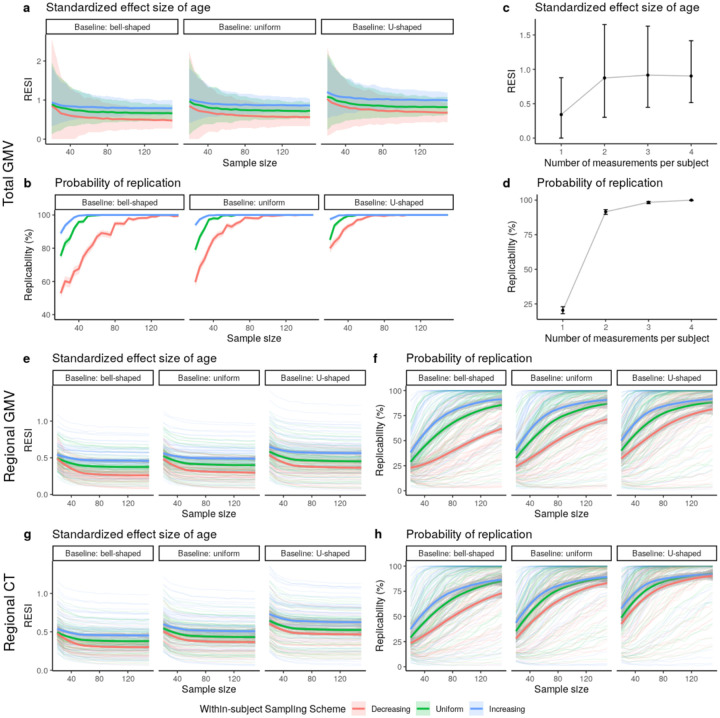

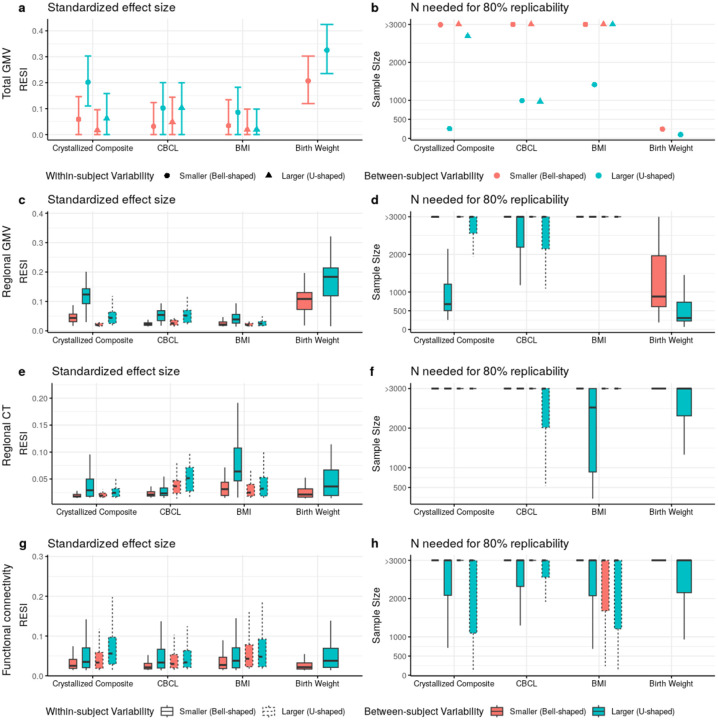

To explore if the proposed sampling schemes are effective on a variety of non-brain covariates and their associations with structural and functional brain measures, we implement the sampling schemes on all subjects (with and without neuropsychiatric symptoms) with cross-sectional and longitudinal measurements from the ABCD dataset. The non-brain covariates include the NIH toolbox25, Child Behavior Checklist (CBCL), body mass index (BMI), birth weight, and handedness (Tab. S11–S12; Methods). Functional connectivity (FC) is used as a functional brain measure and is computed for all pairs of regions in the Gordon atlas26 (Methods). We use the bell- and U-shaped target sampling distributions to control the between- and within-subject variability of each non-brain covariate (Methods). For each non-brain covariate, we show the results for the four combinations of between- and within-subject sampling schemes. Overall, there is a consistent benefit to increasing between-subject variability of the covariate (Fig. S6), which leads to >1.8 factor reduction in sample size needed to scan for 80% replicability and >1.4 factor increase in the standardized ES for over 50% of associations. Moreover, 72% of covariate-outcome associations had increased standardized ESs by increasing the between-subject variability of the covariates (Fig. S7).

Importantly, increasing within-subject variability decreases the standardized ESs for many structural associations (Fig. 4a–f; Fig. S6a–f), suggesting that conducting longitudinal analyses can result in decreased replicability compared to cross-sectional analyses. For the FC outcomes, there is a slight positive effect of increasing within-subject variability (Fig. 4g; Fig. S6g). To evaluate the lower replicability of the structural associations with increasing within-subject variability, we compare cross-sectional standardized ESs of the non-brain covariates on each brain measure using the baseline measurements to the standardized ESs estimated using the full longitudinal data (Fig. 5a–d). Consistent with the reduction in standardized ES by increasing within-subject variability, for most structural associations (GMV and CT), conducting cross-sectional analyses using the baseline measurements results in larger standardized ESs (and higher replicability) than conducting analyses using the full longitudinal data. This finding is consistent when fitting a cross-sectional model using the 2-year follow-up measurement (Fig. S8). Identical results are found using linear mixed models (LMMs) with individual-specific random intercepts, which are commonly used in BWAS (Fig. S9). Together, these results suggest that the benefit of conducting longitudinal studies and increasing within-subject variability is highly dependent on the brain-behavior association, and counterintuitively, longitudinal designs can reduce the standardized ESs and replicability (unless the models are properly specified - see below).

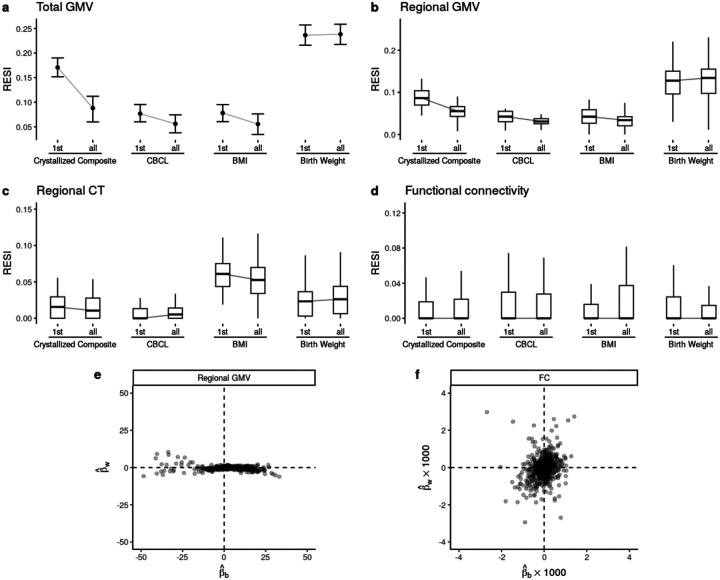

Fig. 4. Heterogeneous improvement of standardized effect sizes (ESs) for select cognitive, mental health, and demographic associations with structural and functional brain measures in the ABCD study with bootstrapped samples of N = 500.

(a) U-shaped between-subject sampling scheme (blue) that increases between-subject variability of the non-brain covariate produces larger standardized ESs and (b) reduces the number of participants scanned to obtain 80% replicability in total gray matter volume (GMV). The points and triangles are the average standardized ESs across bootstraps and the whiskers are the 95% confidence intervals. Increasing within-subject sampling (triangles) can reduce standardized ESs. A similar pattern holds in (c-d) regional GMV and (e-f) regional cortical thickness (CT); boxplots show the distributions of the standardized ESs across regions (or region pairs for functional connectivity (FC)). In contrast, (g) regional pairwise FC standardized ESs improve by increasing between- (blue) and within-subject variability (dashed borders) with a corresponding reduction in the (h) number of participants scanned for 80% replicability. See Fig. S6 for the results for all non-brain covariates examined.

Fig. 5. Longitudinal study designs can reduce standardized effect sizes (ESs) and replicability due to differences in between- versus within-subject associations of brain and behavior measures.

Boxplots show the distributions of the standardized ESs across regions. (a-c) Cross-sectional analyses (using only the baseline measures; indicated by “1st”s on the x-axes) can have larger standardized ESs than the same longitudinal analyses (using the full longitudinal data; indicated by “all”s on the x-axes) for structural brain measures in ABCD. (d) The functional connectivity (FC) measures do not show such a reduction of standardized ESs in longitudinal modeling. See Fig. S8 for the results for all non-brain covariates examined. (e) Most regional gray matter volume (GMV) associations (Fig. 5c) have larger between-subject parameter estimates (βb, x-axis) than within-subject parameter estimates (βw, y-axis; see Supplementary Information: Eqn (13)), whereas (f) FC associations (Fig. 5g) show less heterogeneous relationships between the two parameters.

To investigate why increasing within-subject variability or using longitudinal designs is not beneficial for some associations, we examined an assumption common to widely used GEEs and LMMs in BWAS. Widely used models assume that there is consistent association strength between the brain measure and non-brain covariate across between- and within-subject changes in the non-brain covariate. However, the between- and within-subject association strengths can differ because non-brain measures can be more variable than structural brain measures for a variety of reasons. For example, crystallized composite scores may vary within a subject longitudinally because of time-of-day effects, lack of sleep, or natural noise in the measurement. In contrast, GMV is more precise and it is not vulnerable to other sources of variability that might accompany the crystallized composite score. This combination leads to low within-subject association between these variables (Tab. S13). FC measures are more similar to crystallized composite scores in that they are subject to higher within-subject variability and natural noise, so they have a higher potential for stronger within-subject associations with crystallized composite scores (i.e., they are more likely to vary together based on time of day, lack of sleep, etc). To demonstrate this, we fit models that estimate distinct effects for between- and within-subject associations in the ABCD (Methods) and find that there are large between-subject parameter estimates and small within-subject parameter estimates in total and regional GMV (Supplementary Information: Section 3.2; Tab. S13; Fig. 5e), whereas the FC associations are distributed more evenly across between- and within-subject parameters (Fig. 5f). If the between- and within-subject associations are different, these widely used longitudinal models average the two associations (Supplementary Information: Section 3 Eqn. (14)). Fitting these associations separately avoids averaging the larger effect with the smaller effect and can inform our understanding of brain-behavior associations (Supplementary Information: Section 3.2). This approach ameliorates the reduction in standardized ESs caused by longitudinal designs for structural brain measures in the ABCD (Fig. S10–S11; Supplementary Information: Section 3). This longitudinal model has a similar between-subject standardized ES to the cross-sectional model (Methods: Estimation of the between- and within-subject effects; Fig. S11). In short, longitudinal designs can be detrimental to replicability when the between- and within-subject effects differ and the model is incorrectly specified.

Discussion

BWAS are a fundamental tool to discover brain-behavior associations that may inform our understanding of brain health across the lifespan. With increasing evidence of small standardized ESs and low replicability in BWAS, optimizing study design to increase standardized ESs and replicability is a critical prerequisite for progress1,27. We show the influence of study design features on standardized ESs and replicability using diverse datasets, neuroimaging features, and covariates of interest. Our results indicate that increasing between-subject variability of the covariate systematically increases standardized ESs and replicability. Furthermore, we demonstrate that the benefit of performing a longitudinal study strongly depends on the nature of the association and the model used to fit the data. We use statistical principles to show that typical longitudinal models used in BWAS average between- and within-subject effects. When we model between- and within-subject effects separately, we can increase the standardized ESs for the between- and within-subject effects separately using the modified sampling schemes and retain similar or slightly higher replicability compared to the cross-sectional model (Fig. S10–S11). While striving for large sample sizes remains important when designing a study, our findings emphasize the importance of considering other design features to improve standardized ESs and replicability of BWAS.

Sampling strategies can increase replicability

Our results demonstrate that standardized ES and replicability can be increased by enriched sampling of subjects with small and large values of the covariate of interest. This is well-known in linear models where the standardized ES is explicitly a function of the SD of the covariate24. We show that designing a study to have larger covariate SD increases standardized ESs by a median factor of 1.4, even when there is nonlinearity in the association, such as with age and GMV (Fig. S1). When the association is very non-monotonic – as in the case of a U-shape relationship between covariate and outcome – sampling the tails more heavily could decrease replicability, and diminish our ability to detect nonlinearities in the center of the study population. In such a case, sampling to obtain a uniform distribution of the covariate balances power across the range of the covariate and can increase replicability relative to random sampling when the covariate has a normal distribution in the population. Increasing between-subject variability is beneficial in more than 72% of the association pairs we studied, despite the presence of such nonlinearities (Fig. S7).

Because standardized ESs are dependent on study design, careful design choices can simultaneously increase standardized ESs and study replicability. Two-phase, extreme group, and outcome-dependent sampling designs can inform which subjects should be selected for imaging from a larger sample in order to increase the efficiency and standardized ESs of brain-behavior associations28–34. For example, given the high degree of accessibility of cognitive and behavioral testing (e.g., to be performed virtually or electronically), individuals scoring at the extremes on a testing scale/battery (“phase I”) could be prioritized for subsequent brain scanning (“phase II”). When there are multiple covariates of interest, multivariate two-phase designs can be used to increase standardized ESs and replicability35. Multivariate designs are also needed to stratify sampling to avoid confounding by other socio-demographic variables. Together, the use of optimal designs can increase both standardized ESs and replicability relative to a design that uses random sampling32. If desired, weighted regression (such as inverse probability weighting) can be combined with optimized designs to estimate a standardized ES that is consistent with the standardized ES if the study had been conducted in the full population35–37. Choosing highly reliable psychometric measurements or interventions (e.g., medications or neuromodulation within a clinical trial)38–40 may also be effective for increasing replicability. The decision to pursue an optimized design will also depend on other practical factors, such as the cost and complexity of acquiring other (non-imaging) measures of interest and the specific translational goals of the research.

Longitudinal design considerations

In the meta-analysis, longitudinal studies of the total GMV-age associations have, on average, >380% larger standardized ESs than cross-sectional studies. However, we see in subsequent analyses that the benefit of conducting a longitudinal design is highly dependent on both the between- and the within-subject effects. When the between- and the within-subject effect parameters are equal and the within-subject brain measure error is low, longitudinal studies offer larger standardized ESs than cross-sectional studies (Supplementary Information: Section 3.1)41. This combination of equal between- and within-subject effects and low within-subject measurement error is the reason there is a benefit of longitudinal design in ADNI for the total GMV-age association (Fig. S12). While the standardized ES in these analyses did not account for cost per measurement, comparing efficiency per measurement supports the approach of collecting two measurements per subject in this scenario (Supplementary Information: Section 3.1).

Longitudinal models offer the unique ability to separately estimate between- and within-subject effects. When the between- and within-subject effects differ but we still fit them with a single effect, we mistakenly assume they are equal and the interpretation of that coefficient becomes complicated. The effect becomes a weighted average of the between- and within-subject effects whose weights are determined by the study design features (Supplementary Information: Section 3). The apparent lack of benefit of longitudinal designs in the ABCD on the study of GMV associations is because within-subject changes in the non-brain measures are not associated with within-subject changes in GMV (Fig. 5e; Tab. S13). The smaller standardized ESs we find in longitudinal analyses are due to the contribution from the smaller within-subject effect to the weighted average of the between- and within-subject effects (Supplementary Information: Section 3 Eqn. (14)).

We provide recommendations for longitudinal design and analysis strategies (Fig. S13–S14). Briefly, fitting the between- and within-subject effects separately prevents averaging the two effects (Supplementary Information: Section 3.2). These two effects are often not directly comparable to the effect obtained from a cross-sectional model because they have different interpretations (Supplementary Information: Section 3.2)42–44. Using sampling strategies to increase between- and within-subject variability of the covariate will respectively increase the standardized ESs for between- and within-subject associations. Thus, longitudinal designs can be helpful and optimal even when the between- and within-subject effects differ, if modeled correctly.

Conclusions

While it is difficult to provide universal recommendations for study design and analysis, the present study provides general guidelines for designing and analyzing BWAS for optimal standardized ESs and replicability based on both empirical and theoretical results (Fig. S13–S14). Although the decision for a particular design or analysis strategy may depend on unknown features of the brain and non-brain measures and their association, these characteristics can be evaluated in pilot data or the analysis dataset (Fig. S12; Supplementary Information: Section 3.2). One general principle that increases standardized ESs for most associations is to increase the covariate SD (through, e.g., two-phase, extreme group, and outcome-dependent sampling), which is practically applicable to a wide range of BWAS contexts. Moreover, longitudinal designs can provide larger standardized ES and higher replicability, but the benefit is dependent on the form of the association between the covariate and outcome. Longitudinal BWAS enable us to study between- and within-subject effects, and they should be used when the two effects are hypothesized to be different. Together, our findings highlight the importance of rigorous evaluation of study designs and statistical analysis methods that can improve the replicability of BWAS.

Methods

LBCC dataset and processing

The original LBCC dataset includes 123,984 MRI scans from 101,457 human participants across more than 100 studies (which include multiple publicly available datasets45–55) and is described in our prior work17 (see Supplemental Information and Tab. S1 from prior study). We filtered to the subset of cognitively normal (CN) participants whose data were processed using FreeSurfer version 6.1. Studies were curated for the analysis by excluding duplicated observations and studies with fewer than 4 unique age points, sample size less than 20, and/or only participants of one sex. If there were fewer than three participants having longitudinal observations, only the baseline observations were included and the study was considered cross-sectional. If a subject had changing demographic information during the longitudinal follow-up (e.g., changing biological sex), only the most recent observation was included. We updated the LBCC dataset with the ABCD release 5 resulting in a final dataset that includes 77,695 MRI scans from 60,900 CN participants with available total GMV, sGMV, and GMV measures across 63 studies (Tab. S1). In this dataset, 74,148 MRI scans from 57,538 participants across 43 studies have complete-case regional brain measures (regional GMV, regional surface area, and regional cortical thickness (CT), based on Desikan-Killiany parcellation18) (Tab. S2). The global brain measure mean CT was derived using the regional brain measures (see below).

Structural brain measures

Details of data processing are described in our prior work17. Briefly, total GMV, sGMV, and WMV were estimated from T1-weighted and T2-weighted (when available) MRIs using the “aseg” output from Freesurfer 6.0.1. All three cerebrum tissue volumes were extracted from the aseg.stats files output by the recon-all process: ‘Total cortical gray matter volume’ for GMV; ‘Total cerebral white matter volume’ for WMV; and ‘Subcortical gray matter volume’ for sGMV (inclusive of thalamus, caudate nucleus, putamen, pallidum, hippocampus, amygdala, and nucleus accumbens area; https://freesurfer.net/fswiki/SubcorticalSegmentation). Regional GMV and CT across 68 regions (34 per hemisphere, based on Desikan-Killiany parcellation18) were obtained from the aparc.stats files output by the recon-all process. Mean CT across the whole brain is the weighted average of the regional CT weighted by the corresponding regional surface areas.

Preprocessing specific to ABCD

Functional connectivity measures

Longitudinal functional connectivity (FC) measures were obtained from the ABCD-BIDS community collection, which houses a community-shared and continually updated ABCD neuroimaging dataset available under Brain Imaging Data Structure (BIDS) standards. The data used in these analyses were processed using the abcd-hcp-pipeline version 0.1.3, an updated version of The Human Connectome Project MRI pipeline56. Briefly, resting state fMRI time series were demeaned and detrended, and a GLM was used to regress out mean WM, CSF, and global signal as well as motion variables and then band-pass filtered. High motion frames (filtered-FD > 0.2mm) were censored during the demeaning and detrending. After preprocessing the time series were parcellated using the 352 regions of the Gordon atlas (including 19 subcortical structures) pairwise Pearson correlations were computed among the regions. FC measures were estimated from resting state fMRI time series using a minimum of 5 minutes of data. After Fisher’s z-transformation, the connectivities were averaged across the 24 canonical functional networks26, forming 276 inter-network connectivities and 24 intra-network connectivities.

Cognitive and other covariates

The ABCD dataset is a large-scale repository aiming to track the brain and psychological development of over 10,000 children ages 9 to 16 by measuring hundreds of variables including demographic, physical, cognitive, and mental health variables57. We used release 5 of ABCD study to examine the effect of the sampling schemes on other types of covariates including cognition (fully corrected T-scores of the individual subscales and total composite scores of NIH Toolbox25), mental health (total problem CBCL syndrome scale), and other common demographic variables (body mass index (BMI), birth weight, and handedness). For each of the covariates, we evaluated the effect of the sampling schemes on their associations with the global and regional structural brain measures and FC after controlling for nonlinear age and sex (and, for FC outcomes only, mean frame displacement (FD)).

For the analyses of structural brain measures, there were 3 non-brain covariates with fewer than 5% non-missing follow-ups at both 2-year and 4-year follow-ups (i.e., Dimensional Change Card Sort Test, Cognition Fluid Composite, and Cognition Total Composite Score; Tab. S11), and only their baseline cognitive measurements were included in the analyses. For the remaining 11 variables (i.e., Picture Vocabulary Test, Flanker Inhibitory Control and Attention Test, List Sorting Working Memory Test, Pattern Comparison Processing Speed Test, Picture Sequence Memory Test, Oral Reading Recognition Test, Crystallized Composite, CBCL, birth weight, BMI and handedness), all of the available baseline, 2-year and 4-year follow-up observations were used. For the analyses of the FC, only the baseline observations for List Sorting Working Memory Test were used due to missingness (Tab. S12).

The records with BMI lying outside the lower and upper 1% quantiles (i.e., BMI < 13.5 or BMI > 36.9) were considered misinput and replaced with missing values. The variable handedness was imputed using the last observation carried forward.

Statistical analysis

Removal of site effects

For multi-site or multi-study neuroimaging studies, it is necessary to control for potential heterogeneity between sites to obtain unconfounded and generalizable results. Before estimating the main effects of age and sex on the global and regional brain measures (total GMV, total WMV, total sGMV, mean CT, regional GMV and regional CT), we applied ComBat19 and LongComBat20 in cross-sectional datasets and longitudinal datasets, respectively, to remove the potential site effects. The ComBat algorithm involves several steps including data standardization, site effect estimation, empirical Bayesian adjustment, removing estimated site effects, and data rescaling.

In the analysis of cross-sectional datasets, the models for ComBat were specified as a linear regression model illustrated below using total GMV:

where ns denotes natural cubic splines on two degrees of freedom, which means that there were two boundary knots and one interval knot placed at the median of the covariate age. Splines were used to accommodate nonlinearity in the age effect. For the longitudinal datasets, the model for LongComBat used a linear mixed effects model with subject-specific random intercepts:

When estimating the effects of other non-brain covariates in the ABCD dataset, ComBat was used to control the site effects respectively for each of the cross-sectional covariates. The ComBat models were specified as illustrated below using GMV:

where x denotes the non-brain covariate. LongComBat was used for each of the longitudinal covariates with a linear mixed effects model with subject-specific random intercepts only:

When estimating the effects of other covariates on the FC in the ABCD data, we additionally controlled for the mean frame displacement (FD) of the frames remaining after scrubbing. The longComBat models were specified as:

The Combat and LongComBat were implemented using the neuroCombat58 and longCombat59 R packages. Site effects were removed before all subsequent analyses including the bootstrap analyses described below.

Robust effect size index (RESI) for association strength

The RESI is a recently developed standardized effect size (ES) index that has consistent interpretation across many model types, encompassing all types of test statistics in most regression models21,22. Briefly, the RESI is a standardized ES parameter describing the deviation of the true parameter value(s) β from the reference value(s) β0 from the statistical null hypothesis H0: β = β0,

where S denotes the parameter RESI, β and β0 can be vectors, ∑β is the covariance matrix for (where is the estimator for β) (Supplementary Information: Section 1).

In previous work, we defined a consistent estimator for RESI21,

Where T2 is the chi-square test statistics for testing the null hypothesis H0: β = β0, m is the number of parameters being tested (i.e., the length of β) and N is the number of subjects.

As RESI is generally applicable across different models and data types, it is also applicable to the situation where Cohen’s d was defined. In this scenario, the RESI is equal to ½ Cohen’s d21, so Cohen’s suggested thresholds for ES can be adopted for RESI: small (RESI=0.1); medium (RESI=0.25); and large (RESI=0.4). Because RESI is robust, when the assumptions of Cohen’s d are not satisfied, such as when the variances between the groups are not equal, RESI is still a consistent estimator, but Cohen’s d is not. The confidence intervals (CIs) for RESI in our analyses were constructed using 1,000 non-parametric bootstraps22.

The systematic difference in the standardized ESs between cross-sectional and longitudinal studies puts extra challenges on the comparison and aggregation of standardized ES estimates across studies with different designs. To improve the comparability of standardized ESs between cross-sectional and longitudinal studies, we proposed a new ES index: the cross-sectional RESI (i.e., CS-RESI) for longitudinal datasets. The CS-RESI for longitudinal datasets represents the RESI in the same study population if the longitudinal study had been conducted cross-sectionally. Detailed definition, point estimator, and CI construction procedure for CS-RESI can be found in Supplementary Information: Section 1. Comprehensive statistical simulation studies were also performed to demonstrate the valid performance of the proposed estimator and CI for CS-RESI (Supplementary Information: Section 1.2). With CS-RESI, we can quantify the benefit of using a longitudinal study design in a single dataset (Supplementary Information: Section 1.3).

Study-level models

After removing the site effects using ComBat or LongComBat in the multi-site data, we estimated the effects of age and sex on each of the global/regional brain measures using generalized estimating equations (GEEs) and linear regression models in the longitudinal datasets and cross-sectional datasets, respectively. The mean model was specified as below after ComBat/LongComBat:

where yij was taken to be a global brain measure (i.e., total GMV, WMV, sGMV or mean CT) or regional brain measure (i.e., regional GMV or CT) at the j-th visit from the subject i, and j = 1 for cross-sectional datasets. The age effect was estimated with natural cubic splines with two degrees of freedom, which means that there were two boundary knots and one interval knot placed at the median of the covariate age. For the GEEs, we used an exchangeable correlation structure as the working structure and identity linkage function. The model assumes the mean was correctly specified, but made no assumption about the error distribution. The GEEs were fitted with the “geepack” package60 in R. We used the RESI as a standardized ES measure. RESIs and confidence intervals (CIs) were computed using the “RESI” R package (version 1.2.0)23.

Meta-analysis of the age and sex effects

The weighted linear regression model for the meta-analysis of age effects across the studies was specified as:

where denotes the estimated RESI for study k, and the weights were the inverse of the standard error of each RESI estimate. The sample mean, standard deviation (SD), and skewness of the age were included as nonlinear terms estimated using natural splines with 3 degrees of freedom (i.e., two boundary knots plus two interval knots at the 33rd and 66th percentiles of the covariates), and a binary variable indicating the design type (cross-sectional/longitudinal) was also included.

The weighted linear regression model for the meta-analysis of sex effects across the studies was specified as

where denotes the estimated RESI of sex for study k, and the weights were the inverse of the standard error of each RESI estimate. The sample mean, SD of the age covariate, and the proportion of males in each study were included as nonlinear terms estimated using natural splines with 3 degrees of freedom, and a binary variable indicating the design type (cross-sectional or longitudinal) was also included.

These meta-analyses were performed for each of the global and regional brain measures. Inferences were performed using robust standard errors61. In the partial regression plots, the expected standardized ESs for the age effects were estimated from the meta-analysis model after fixing mean age at 45, SD of age at 7 and/or skewness at 0; the expected standardized ESs for the sex effects were estimated from the meta-analysis model after fixing mean age at 45, SD of age at 7 and/or proportion of males at 0.5.

Sampling schemes for age in the UKB and ADNI

We used bootstrapping to evaluate the effect of different sampling schemes with different target sample covariate distributions on the standardized ESs and replicability in the cross-sectional UKB and longitudinal ADNI datasets. For a given sample size and sampling schemes, 1,000 bootstrap replicates were conducted. The standardized ES was estimated as the mean standardized ES (i.e., RESI) across the bootstrap replicates. The 95% confidence interval (CI) for the standardized ES was estimated using the lower and upper 2.5% quantiles across the 1,000 estimates of the standardized ES in the bootstrap replicates. Power was calculated as the proportion of bootstrap replicates producing p-values less than or equal to 5% for those associations that were significant at 0.05 in the full sample. In the UKB, only one region was not significant for age in each of GMV and CT, and in ADNI, only 1 and 4 regions were not significant for age in GMV and CT, respectively. Replicability in prior work has been defined as having a significant p-value and the same sign for the regression coefficient. Because we were fitting nonlinear effects, we defined replicability as the probability that two independent studies have significant p-values; this is equivalent to the definition of power squared. The 95% CIs for replicability were derived using Wilson’s method62.

In the UKB dataset, to modify the (between-subject) variability of the age variable we used the following three target sampling distributions (Fig. S3): bell-shaped, where the target distribution had most of the subjects distributed in the middle age range; uniform, where the target distribution had subjects equally distributed across the age range; and U-shaped, where the target distribution had most of the subjects distributed closer to the range limits of the age in the study. The samples with U-shaped age distribution had the largest sample variance of age, followed by the ones with uniform age distribution and the ones with bell-shaped age distribution. The bell-shaped and U-shaped functions were proportional to a quadratic function. To sample according to these distributions, each record was first inversely weighted by the frequency of the records with age falling in the range of +/− 0.5 years of the age for that record to achieve the uniform sampling distribution. Each record was then rescaled to derive the weights for bell- and U-shaped sampling distributions. The records with age <50 or >78 years were winsorized at 50 or 78 years when assigning weights, respectively, to limit the effects of outliers on the weight assignment, but the actual age values were used when analyzing each bootstrapped data.

In each bootstrap from the ADNI dataset, each subject was sampled to have two records. We modified the between- and within-subject variability of age, respectively, by making the “baseline age” follow one of the three target sampling distributions used for the UKB dataset and the “age change” independently follow one of three new distributions: decreasing; uniform; and increasing (Fig. S5). The increasing and decreasing functions were proportional to an exponential function. The samples with “increasing” distribution of “age change” had the largest within-subject variability of age, followed by the ones with the uniform distribution of “age change” and the ones with “decreasing” distribution of age change.

To modify the “baseline age” and the “age change” from baseline independently, we first created all combinations of the baseline record and one follow-up from each participant, and derived the “baseline age” and “age change” for each combination. The “bivariate” frequency of each combination was obtained as the number of combinations with values of “baseline age” and “age change” falling in the range of +/− 0.5 years of the values of “baseline age” and “age change” for this combination. Then each combination was inversely weighted by its “bivariate” frequency to target a uniform bivariate distribution of baseline age and age change. The weight for each combination was then rescaled to make the baseline age and age change follow different sampling distributions independently. The combinations with baseline age <65 or >85 years were winsorized at 65 or 85 years, and the combinations with age change greater than 5 years were winsorized at 5 years when assigning weights to limit the effects of outliers on the weight assignment, but the actual ages were used when analyzing each bootstrapped data.

The sampling methods could be easily extended to the scenario where each subject had three records (and more than three) in the bootstrap data by making combinations of the baseline and two follow-ups. Each combination was inversely weighted to achieve uniform baseline age and age change distributions, respectively, by the “trivariate” frequency of the combinations with baseline age and the two age changes from baseline for the two follow-ups falling into the range of +/− 0.5 years of the corresponding values for this combination. Since we only investigated the effect of modifying the number of measurements per subject under uniform between- and within-subject sampling schemes (Fig. 3c–d), we did not need to consider rescaling the weights here to achieve other sampling distributions but they could be done similarly. For the scenario where each subject only had one measurement (Fig. 3c–d), the standardized ESs and replicability were estimated only using the baseline measurements.

All site effects were removed using ComBat or LongComBat prior to performing the bootstrap analysis.

Sampling schemes for other non-brain covariates in ABCD

We used bootstrapping to study how different sampling strategies affect the RESI in the ABCD dataset. Each subject in the bootstrap data had two measurements. We applied the same weight assignment method described above for the ADNI dataset to modify the between- and within-subject variability of a covariate. We made the baseline covariate and the change in covariate follow bell-shaped and/or U-shaped distributions, to let the sample have larger or smaller between- and/or within-subject variability of the covariate, respectively. The baseline covariate and change of covariate were winsorized at the upper and lower 5% quantiles to limit the effect of outliers on sampling. For each cognitive variable, only the subjects with non-missing baseline measurements and at least one non-missing follow-up were included.

Generalized linear models (GLMs) and GEEs were fitted to estimate the effect of each non-brain covariate on the structural brain measures after controlling for age and sex,

where x denotes one of the non-brain covariates. For the GEEs, we used an exchangeable correlation structure as the working structure and identity linkage function.

Only the between-subject sampling schemes were applied for the non-brain covariates that were stable over time (e.g., birth weight and handedness). In other words, the subjects were sampled based on their baseline covariate values, and then a follow-up was selected randomly for each subject. The sampling schemes to increase the between-subject variability in the covariate “handedness”, which was a binary variable (right-handed or not), was specified differently. The expected proportion of right-handed subjects in the bootstrap samples was 50% under the sampling scheme with “larger” between-subject variability and 10% under the sampling scheme with “smaller” between-subject variability.

For given between- and/or within-subject sampling schemes, We obtained 1,000 bootstrap replicates. The standardized ES was estimated as the mean standardized ES across the bootstrap replicates. The 95% CIs for standardized ES were estimated using the lower and upper 2.5% quantiles across the 1,000 estimates of the standardized ES in the bootstrap replicates. The sample sizes needed for 80% replicability were estimated based on the (mean) estimated standardized ES and F-distribution (see below).

Analysis of FC in ABCD

In a subset of the ABCD where we have preprocessed longitudinal FC data at two timepoints (baseline and 2-year follow-up), we only restricted to the subjects with non-missing measurements at both of the two times. In the GEEs used to estimate the effects of non-brain covariates on FC, the mean model was specified as below after LongComBat:

where yij was taken to be an FC outcome and x denotes a non-brain covariate. Mean framewise displacement (FD) (mean_FD) was also included as a covariate with natural cubic splines with 3 degrees of freedom. We used an exchangeable correlation structure as the working structure and identity linkage function in the GEEs. The frame count of each scan was used as the weights.

When evaluating the effect of different sampling schemes on the standardized ESs, we obtained 1,000 bootstrap replicates for given between- and/or within-subject sampling schemes. The standardized ES was estimated as the mean standardized ES across the bootstrap replicates. CIs were computed as described above. The sample sizes needed for 80% replicability were estimated based on the (mean) estimated standardized ESs and F-distribution (see below).

Sample size calculation for a target power or replicability with a given standardized effect size

After estimating the standardized ES for an association, the calculation of the corresponding sample size N needed for detecting this association with γ × 100% power at significance level of α was based on an F-distribution. Let df denote the total degree of freedom of the analysis model, F(x; γ) denotes the cumulative density function for the random variable X, which follows the (non-central) F-distribution with degrees of freedom being 1 and N - df and non-centrality parameter γ. The corresponding sample size N is:

where is the estimated RESI for the standardized ES. Power curves for the RESI are given in Figure 3 of Vandekar et al. (2002)21. Replicability was defined as the probability that two independent studies have significant p-values, which is equivalent to power squared.

Estimation of the between- and within-subject effects

For the non-brain covariates that were analyzed longitudinally in ABCD dataset, GEEs with exchangeable correlation structures were fitted to estimate their cross-sectional and longitudinal effects on structural and functional brain measures after controlling for age and sex, respectively. The mean model was specified as illustrated with GMV:

where X_bl denotes the subject-specific baseline covariate values, and the X_change denotes the difference of the covariate value at each visit to the subject-specific baseline covariate value (see Supplementary Information: Section 3.2). The subjects without baseline measures were not included in the modeling. The model coefficients for the terms “X_bl” and “X_change” represent the between- and within-subject effects of this non-brain covariate on total GMV, respectively. For the FC data, the same covariates and weighting were used as described above. Using the first time point as the between-subject term was a special case that ensured comparing the parameter using the baseline cross-sectional model was equal to the parameter for the between-subject effect in the longitudinal model. In this model, the between-subject variance was defined as the variance of the baseline measurement and the within-subject variance was the mean square of X_change. This model specification ensured that the sampling schemes independently affected the between- and within-subject variances separately (Supplementary Information: Section 3.2 Eqn (17)).

Supplementary Material

Acknowledgments

S.N.V was supported by R01MH123563. A.A.B. and J.S. were partially supported by R01MH132934 and R01MH133843. Data processing done at the University of Cambridge is supported by the NIHR Cambridge Biomedical Research Centre (BRC-1215-20014) and NIHR Applied Research Collaboration East of England. Any views expressed are those of the author(s) and not necessarily those of the funders, IHU-JU2, the NIHR or the Department of Health and Social Care.

Footnotes

Data used in the preparation of this article includes data obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). As such, the investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at: http://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf

Code availability

All code used to produce the analyses presented in this paper is available at https://github.com/KaidiK/RESI_BWAS.

Disclosures

J.S., and R.B. are directors and hold equity in Centile Bioscience. A.A.B. holds equity in Centile Bioscience and received consulting income from Octave Bioscience in 2023.

Data availability

Participant-level data from many datasets are available according to study-level data access rules. Study-level model parameters are available at https://github.com/KaidiK/RESI_BWAS. We acknowledge the usage of several openly shared MRI datasets, which are available at the respective consortia websites and are subject to the sharing policies of each consortium: OpenNeuro (https://openneuro.org/), UK BioBank (https://www.ukbiobank.ac.uk/), ABCD (https://abcdstudy.org/), the Laboratory of NeuroImaging (https://loni.usc.edu/), data made available through the Open Science Framework (https://osf.io/), the Human Connectome Project (http://www.humanconnectomeproject.org/), and the OpenPain project (https://www.openpain.org). The ABCD data repository grows and changes over time. The ABCD data used in this report came from DOI 10.15154/1503209. Data used in this article were provided by the brain consortium for reliability, reproducibility and replicability (3R-BRAIN) (https://github.com/zuoxinian/3R-BRAIN). Data used in the preparation of this article was obtained from the Australian Imaging Biomarkers and Lifestyle flagship study of aging (AIBL) funded by the Commonwealth Scientific and Industrial Research Organisation (CSIRO) which was made available at the ADNI database (https://adni.loni.usc.edu/aibl-australian-imaging-biomarkers-and-lifestyle-study-of-ageing-18-month-data-now-released/). The AIBL researchers contributed data but did not participate in analysis or writing of this report. AIBL researchers are listed at https://www.aibl.csiro.au. Data used in preparation of this article were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (https://adni.loni.usc.edu/). The investigators within the ADNI contributed to the design and implementation of ADNI and/or provided data but did not participate in analysis or writing of this report. A complete listing of ADNI investigators can be found at https://adni.loni.usc.edu/wp-content/uploads/how_to_apply/ADNI_Acknowledgement_List.pdf. More information on the ARWIBO consortium can be found at https://www.arwibo.it/. More information on CALM team members can be found at https://calm.mrc-cbu.cam.ac.uk/team/ and in the Supplementary Information. Data used in this article were obtained from the developmental component ‘Growing Up in China’ of the Chinese Color Nest Project (http://deepneuro.bnu.edu.cn/?p=163). Data used in the preparation of this article were obtained from the IConsortium on Vulnerability to Externalizing Disorders and Addictions (c-VEDA), India (https://cveda-project.org/). Data used in the preparation of this article were obtained from the Harvard Aging Brain Study (HABS P01AG036694) (https://habs.mgh.harvard.edu). Data used in the preparation of this article were obtained from the IMAGEN consortium (https://imagen-europe.com/). The POND network (https://pond-network.ca/) is a Canadian translational network in neurodevelopmental disorders, primarily funded by the Ontario Brain Institute.

The LBCC dataset used in the preparation of this article includes data obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database (adni.loni.usc.edu). The ADNI was launched in 2003 as a public-private partnership, led by Principal Investigator Michael W. Weiner, MD. The primary goal of ADNI has been to test whether serial magnetic resonance imaging (MRI), positron emission tomography (PET), other biological markers, and clinical and neuropsychological assessment can be combined to measure the progression of mild cognitive impairment (MCI) and early Alzheimer’s disease (AD). Its data collection and sharing for this project was funded by the Alzheimer’s Disease Neuroimaging Initiative (ADNI) (National Institutes of Health Grant U01 AG024904) and DOD ADNI (Department of Defense award number W81XWH-12–2-0012). ADNI is funded by the National Institute on Aging, the National Institute of Biomedical Imaging and Bioengineering, and through generous contributions from the following: AbbVie, Alzheimer’s Association; Alzheimer’s Drug Discovery Foundation; Araclon Biotech; BioClinica, Inc.; Biogen; Bristol-Myers Squibb Company; CereSpir, Inc.; Cogstate; Eisai Inc.; Elan Pharmaceuticals, Inc.; Eli Lilly and Company; EuroImmun; F. Hoffmann-La Roche Ltd and its affiliated company Genentech, Inc.; Fujirebio; GE Healthcare; IXICO Ltd.; Janssen Alzheimer Immunotherapy Research & Development, LLC.; Johnson & Johnson Pharmaceutical Research & Development LLC.; Lumosity; Lundbeck; Merck & Co., Inc.; Meso Scale Diagnostics, LLC.; NeuroRx Research; Neurotrack Technologies; Novartis Pharmaceuticals Corporation; Pfizer Inc.; Piramal Imaging; Servier; Takeda Pharmaceutical Company; and Transition Therapeutics. The Canadian Institutes of Health Research is providing funds to support ADNI clinical sites in Canada. Private sector contributions are facilitated by the Foundation for the National Institutes of Health (www.fnih.org). The grantee organization is the Northern California Institute for Research and Education, and the study is coordinated by the Alzheimer’s Therapeutic Research Institute at the University of Southern California. ADNI data are disseminated by the Laboratory for Neuro Imaging at the University of Southern California.

Reference

- 1.Marek S. et al. Reproducible brain-wide association studies require thousands of individuals. Nature 603, 654–660 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Owens M. M. et al. Recalibrating expectations about effect size: A multi-method survey of effect sizes in the ABCD study. PLOS ONE 16, e0257535 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Spisak T., Bingel U. & Wager T. D. Multivariate BWAS can be replicable with moderate sample sizes. Nature 615, E4–E7 (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Nosek B. A. et al. Replicability, Robustness, and Reproducibility in Psychological Science. Annu. Rev. Psychol. 73, 719–748 (2022). [DOI] [PubMed] [Google Scholar]

- 5.Patil P., Peng R. D. & Leek J. T. What should we expect when we replicate? A statistical view of replicability in psychological science. Perspect. Psychol. Sci. J. Assoc. Psychol. Sci. 11, 539–544 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Liu S., Abdellaoui A., Verweij K. J. H. & van Wingen G. A. Replicable brain–phenotype associations require large-scale neuroimaging data. Nat. Hum. Behav. 7, 1344–1356 (2023). [DOI] [PubMed] [Google Scholar]

- 7.Button K. S. et al. Power failure: why small sample size undermines the reliability of neuroscience. Nat. Rev. Neurosci. 14, 365–376 (2013). [DOI] [PubMed] [Google Scholar]

- 8.Szucs D. & Ioannidis J. P. Sample size evolution in neuroimaging research: An evaluation of highly-cited studies (1990–2012) and of latest practices (2017–2018) in high-impact journals. NeuroImage 221, 117164 (2020). [DOI] [PubMed] [Google Scholar]

- 9.Reddan M. C., Lindquist M. A. & Wager T. D. Effect Size Estimation in Neuroimaging. JAMA Psychiatry 74, 207–208 (2017). [DOI] [PubMed] [Google Scholar]

- 10.Vul E. & Pashler H. Voodoo and circularity errors. NeuroImage 62, 945–948 (2012). [DOI] [PubMed] [Google Scholar]

- 11.Vul E., Harris C., Winkielman P. & Pashler H. Puzzlingly High Correlations in fMRI Studies of Emotion, Personality, and Social Cognition. Perspect. Psychol. Sci. J. Assoc. Psychol. Sci. 4, 274–290 (2009). [DOI] [PubMed] [Google Scholar]

- 12.Nee D. E. fMRI replicability depends upon sufficient individual-level data. Commun. Biol. 2, 1–4 (2019). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Smith P. L. & Little D. R. Small is beautiful: In defense of the small-N design. Psychon. Bull. Rev. 25, 2083–2101 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Klapwijk E. T., van den Bos W., Tamnes C. K., Raschle N. M. & Mills K. L. Opportunities for increased reproducibility and replicability of developmental neuroimaging. Dev. Cogn. Neurosci. 47, 100902 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Lawless J. F., Kalbfleisch J. D. & Wild C. J. Semiparametric Methods for Response-Selective and Missing Data Problems in Regression. J. R. Stat. Soc. Ser. B Stat. Methodol. 61, 413–438 (1999). [Google Scholar]

- 16.RePORT 〉 RePORTER. https://reporter.nih.gov/search/_dNnH1VaiEKU_vZLZ7L2xw/projects/charts.

- 17.Bethlehem R. a. I. et al. Brain charts for the human lifespan. Nature 604, 525–533 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Desikan R. S. et al. An automated labeling system for subdividing the human cerebral cortex on MRI scans into gyral based regions of interest. NeuroImage 31, 968–980 (2006). [DOI] [PubMed] [Google Scholar]

- 19.Johnson W. E., Li C. & Rabinovic A. Adjusting batch effects in microarray expression data using empirical Bayes methods. Biostatistics 8, 118–127 (2007). [DOI] [PubMed] [Google Scholar]

- 20.Beer J. C. et al. Longitudinal ComBat: A method for harmonizing longitudinal multiscanner imaging data. NeuroImage 220, 117129 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Vandekar S., Tao R. & Blume J. A Robust Effect Size Index. Psychometrika 85, 232–246 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Kang K. et al. Accurate Confidence and Bayesian Interval Estimation for Non-centrality Parameters and Effect Size Indices. Psychometrika (2023) doi: 10.1007/s11336-022-09899-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Jones M., Kang K. & Vandekar S. RESI: An R Package for Robust Effect Sizes. Preprint at 10.48550/arXiv.2302.12345 (2023). [DOI]

- 24.Boos D. D. & Stefanski L. A. Essential Statistical Inference: Theory and Methods. (Springer-Verlag, New York, 2013). [Google Scholar]

- 25.Carlozzi N. E. et al. Construct Validity of the NIH Toolbox Cognition Battery in Individuals With Stroke. Rehabil. Psychol. 62, 443–454 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26.Gordon E. M. et al. Generation and Evaluation of a Cortical Area Parcellation from Resting-State Correlations. Cereb. Cortex N. Y. NY 26, 288–303 (2016). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Noble S., Mejia A. F., Zalesky A. & Scheinost D. Improving power in functional magnetic resonance imaging by moving beyond cluster-level inference. Proc. Natl. Acad. Sci. 119, e2203020119 (2022). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Tao R., Zeng D. & Lin D.-Y. Optimal Designs of Two-Phase Studies. J. Am. Stat. Assoc. 115, 1946–1959 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Schildcrout J. S., Garbett S. P. & Heagerty P. J. Outcome Vector Dependent Sampling with Longitudinal Continuous Response Data: Stratified Sampling Based on Summary Statistics. Biometrics 69, 405–416 (2013). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 30.Tao R., Zeng D. & Lin D.-Y. Efficient Semiparametric Inference Under Two-Phase Sampling, With Applications to Genetic Association Studies. J. Am. Stat. Assoc. 112, 1468–1476 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Fisher J. E., Guha A., Heller W. & Miller G. A. Extreme-groups designs in studies of dimensional phenomena: Advantages, caveats, and recommendations. J. Abnorm. Psychol. 129, 14–20 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Preacher K. J., Rucker D. D., MacCallum R. C. & Nicewander W. A. Use of the extreme groups approach: a critical reexamination and new recommendations. Psychol. Methods 10, 178–192 (2005). [DOI] [PubMed] [Google Scholar]

- 33.Amanat S., Requena T. & Lopez-Escamez J. A. A Systematic Review of Extreme Phenotype Strategies to Search for Rare Variants in Genetic Studies of Complex Disorders. Genes 11, 987 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Lotspeich S. C., Amorim G. G. C., Shaw P. A., Tao R. & Shepherd B. E. Optimal multiwave validation of secondary use data with outcome and exposure misclassification. Can. J. Stat. n/a, (2023). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Tao R. et al. Analysis of Sequence Data Under Multivariate Trait-Dependent Sampling. J. Am. Stat. Assoc. 110, 560–572 (2015). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Lin H. et al. Strategies to Design and Analyze Targeted Sequencing Data. Circ. Cardiovasc. Genet. 7, 335–343 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Tao R., Lotspeich S. C., Amorim G., Shaw P. A. & Shepherd B. E. Efficient semiparametric inference for two-phase studies with outcome and covariate measurement errors. Stat. Med. 40, 725–738 (2021). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Nikolaidis A. et al. Suboptimal phenotypic reliability impedes reproducible human neuroscience. 2022.07.22.501193 Preprint at 10.1101/2022.07.22.501193 (2022). [DOI]

- 39.Xu T. et al. ReX: an integrative tool for quantifying and optimizing measurement reliability for the study of individual differences. Nat. Methods 20, 1025–1028 (2023). [DOI] [PubMed] [Google Scholar]

- 40.Gell M. et al. The Burden of Reliability: How Measurement Noise Limits Brain-Behaviour Predictions. 2023.02.09.527898 Preprint at 10.1101/2023.02.09.527898 (2024). [DOI]

- 41.Diggle Peter, Heagerty Patrick, Liang Kung-Yee, & Zeger Scott. Analysis of Longitudinal Data. vol. Second edition (OUP Oxford, Oxford, 2013). [Google Scholar]

- 42.Pepe M. S. & Anderson G. L. A cautionary note on inference for marginal regression models with longitudinal data and general correlated response data. Commun. Stat. - Simul. Comput. (1994) doi: 10.1080/03610919408813210. [DOI] [Google Scholar]

- 43.Begg M. D. & Parides M. K. Separation of individual-level and cluster-level covariate effects in regression analysis of correlated data. Stat. Med. 22, 2591–2602 (2003). [DOI] [PubMed] [Google Scholar]

- 44.Curran P. J. & Bauer D. J. The Disaggregation of Within-Person and Between-Person Effects in Longitudinal Models of Change. Annu. Rev. Psychol. 62, 583–619 (2011). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.The ADHD-200 Consortium. The ADHD-200 Consortium: A Model to Advance the Translational Potential of Neuroimaging in Clinical Neuroscience. Front. Syst. Neurosci. 6, 62 (2012). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Di Martino A. et al. The autism brain imaging data exchange: towards a large-scale evaluation of the intrinsic brain architecture in autism. Mol. Psychiatry 19, 659–667 (2014). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Lukas Snoek et al. AOMIC-PIOP1. OpenNeuro (2020) doi:doi: 10.18112/openneuro.ds002785.v2.0.0. [DOI] [Google Scholar]

- 48.Lukas Snoek; et al. AOMIC-PIOP2. OpenNeuro (2020) doi:doi: 10.18112/openneuro.ds002790.v2.0.0. [DOI] [Google Scholar]

- 49.Lukas Snoek; et al. AOMIC-ID1000. OpenNeuro (2021) doi:doi: 10.18112/openneuro.ds003097.v1.2.1. [DOI] [Google Scholar]

- 50.Bilder R et al. UCLA Consortium for Neuropsychiatric Phenomics LA5c Study. OpenNeuro (2020) doi:doi: 10.18112/openneuro.ds000030.v1.0.0. [DOI] [Google Scholar]

- 51.Nastase Samuel A.; et al. Narratives. OpenNeuro (2020) doi:doi: 10.18112/openneuro.ds002345.v1.1.4. [DOI] [Google Scholar]

- 52.Alexander L. M. et al. An open resource for transdiagnostic research in pediatric mental health and learning disorders. Sci. Data 4, 170181 (2017). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Richardson H., Lisandrelli G., Riobueno-Naylor A. & Saxe R. Development of the social brain from age three to twelve years. Nat. Commun. 9, 1027 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Kuklisova-Murgasova M. et al. A dynamic 4D probabilistic atlas of the developing brain. NeuroImage 54, 2750–2763 (2011). [DOI] [PubMed] [Google Scholar]

- 55.Reynolds J. E., Long X., Paniukov D., Bagshawe M. & Lebel C. Calgary Preschool magnetic resonance imaging (MRI) dataset. Data Brief 29, 105224 (2020). [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56.Feczko E. et al. Adolescent Brain Cognitive Development (ABCD) Community MRI Collection and Utilities. 2021.07.09.451638 Preprint at 10.1101/2021.07.09.451638 (2021). [DOI]

- 57.Casey B. J. et al. The Adolescent Brain Cognitive Development (ABCD) study: Imaging acquisition across 21 sites. Dev. Cogn. Neurosci. 32, 43–54 (2018). [DOI] [PMC free article] [PubMed] [Google Scholar]