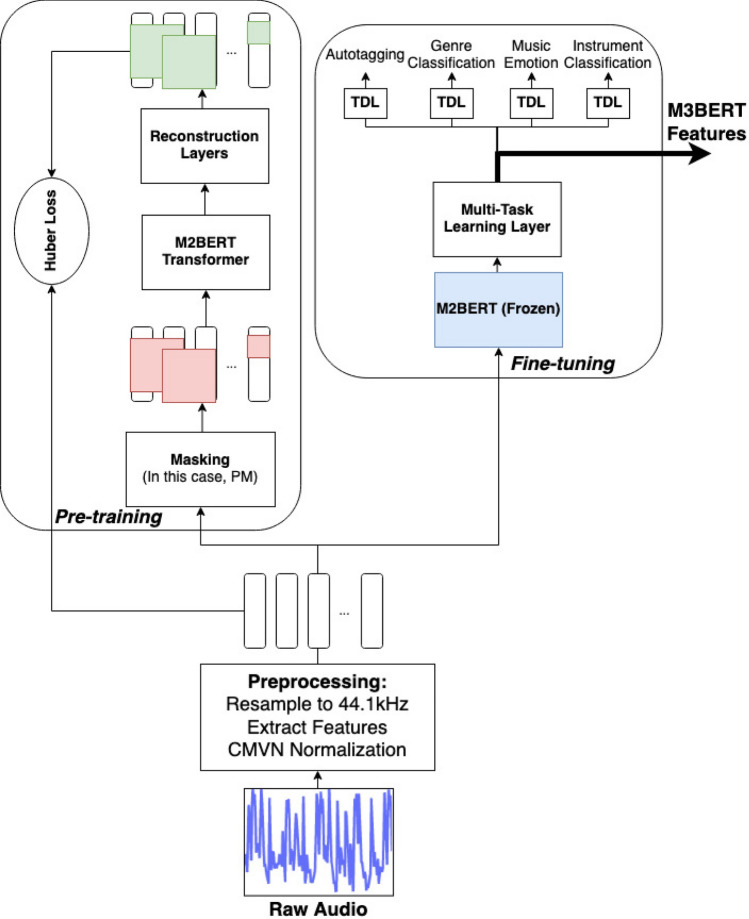

Figure 1.

M3BERT pre-training and fine-tuning. During pre-training, the M3BERT transformer layers are updated by minimizing a Huber loss between the reconstructed signal and the original signal. During fine-tuning, the M3BERT layers are frozen, and a dense multi-task learning neural network layer is used to enrich the output representations. TDL stands for time-distributed layer. Without loss of generality, the diagram shows the patch-masking (PM) policy.
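The pre-training objective in the caption can be illustrated with a minimal sketch. It is not the authors' implementation. The function names `huber_loss` and `patch_mask`, the threshold `delta=1.0`, the patch length, the number of patches, and masking by zeroing are all assumptions made for illustration. The signal is reduced to one dimension, and the masked input stands in for the output of the transformer, which is omitted here.

```python
import random


def huber_loss(pred, target, delta=1.0):
    """Mean Huber loss: quadratic for |error| <= delta, linear beyond it."""
    total = 0.0
    for p, t in zip(pred, target):
        err = abs(p - t)
        if err <= delta:
            total += 0.5 * err * err
        else:
            total += delta * (err - 0.5 * delta)
    return total / len(pred)


def patch_mask(signal, patch_len, num_patches, rng):
    """Zero out `num_patches` contiguous runs of `patch_len` time steps.

    Patches may overlap. Zeroing is an assumed masking value; the source
    does not state how masked patches are filled.
    """
    masked = list(signal)
    is_masked = [False] * len(signal)
    for _ in range(num_patches):
        start = rng.randrange(0, len(signal) - patch_len + 1)
        for i in range(start, start + patch_len):
            masked[i] = 0.0
            is_masked[i] = True
    return masked, is_masked


rng = random.Random(0)
original = [rng.gauss(0.0, 1.0) for _ in range(100)]
masked, is_masked = patch_mask(original, patch_len=10, num_patches=2, rng=rng)

# Stand-in for the model: a real encoder would map `masked` to a reconstruction.
reconstructed = masked
loss = huber_loss(reconstructed, original)
print(f"masked steps: {sum(is_masked)}, Huber loss: {loss:.4f}")
```

With this placeholder reconstruction, only the masked time steps contribute to the loss, since the unmasked steps are copied through unchanged.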