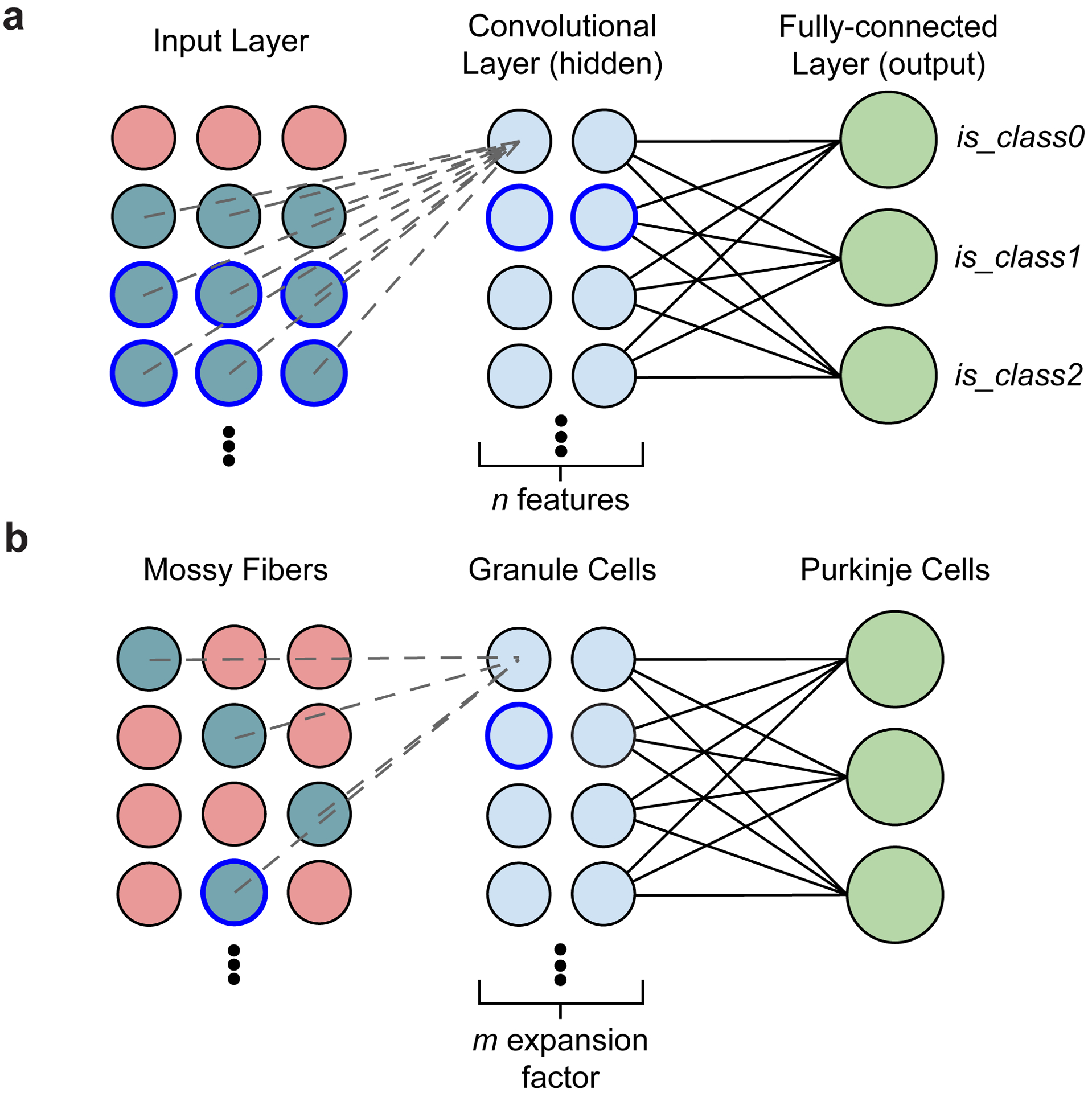

Extended Data Figure 1. Similarity between a convolutional neural network and the cerebellar feedforward network.

a, Diagram of a simple convolutional neural network with one convolutional layer (input→hidden) and one fully connected layer (hidden→output). The input (left) is a single-channel 2D grid of neurons. The convolutional layer (middle) consists of neurons that each sample a small local patch of the input (e.g., nine inputs when a 3×3 filter is used; cyan circles). This differs notably from a multi-layer perceptron, in which the input and hidden layers are fully connected: convolution allows the number of features to increase while reducing computational cost. Because each convolutional neuron has a small field of view, adjacent neurons share a substantial fraction of their inputs. To increase the capacity of the hidden layer, the convolutional neurons can be replicated n times (typically parameterized as n features). Finally, the output neurons (right) are fully connected to the neurons in the preceding convolutional layer. For a classification network, each label (class) is associated with a single binary output neuron for both training and inference. b, Diagram of the cerebellar feedforward network. Mossy fibers (MFs; left) can be considered a 2D grid of sensory and afferent command inputs, typically of mixed modalities (refs. 40,41). Granule cells (GrCs; middle) each sample only ~4 MF inputs. GrCs are estimated to outnumber MFs by a factor of hundreds (Fig. 1b), represented by an expansion factor m. Finally, Purkinje cells (PCs; right), the output neurons of the cerebellar cortex, receive input from tens to hundreds of thousands of GrC axons that pass by PC dendrites.
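
The connectivity counts behind this analogy can be illustrated with a minimal sketch. It is not taken from the paper: the function names, the 8×8 grid size, the use of a "valid" (unpadded) convolution, and the uniform random choice of MF inputs per GrC are all assumptions made for illustration only. Real GrCs sample nearby MFs, and their wiring need not be random.

```python
import random


def conv_hidden_connections(height, width, k=3, n_features=1):
    """Input->hidden connections for a 'valid' k x k convolution (assumed setup)."""
    out_h, out_w = height - k + 1, width - k + 1
    return out_h * out_w * k * k * n_features


def dense_hidden_connections(height, width, n_hidden):
    """Input->hidden connections if every hidden neuron saw every input (MLP)."""
    return height * width * n_hidden


def receptive_field(row, col, k=3):
    """Set of input grid positions sampled by the hidden neuron at (row, col)."""
    return {(row + dr, col + dc) for dr in range(k) for dc in range(k)}


def sample_grc_inputs(n_mf, expansion, inputs_per_grc=4, seed=0):
    """Hypothetical GrC layer: n_mf * expansion cells, each wired to a few
    distinct MFs. Uniform random sampling is an illustrative assumption."""
    rng = random.Random(seed)
    n_grc = n_mf * expansion
    return [rng.sample(range(n_mf), inputs_per_grc) for _ in range(n_grc)]


# Panel a: an 8 x 8 input with a 3 x 3 filter gives 6 x 6 = 36 hidden neurons.
sparse = conv_hidden_connections(8, 8)        # 36 neurons * 9 inputs each
dense = dense_hidden_connections(8, 8, 36)    # 36 neurons * 64 inputs each
shared = len(receptive_field(0, 0) & receptive_field(0, 1))  # adjacent overlap

# Panel b: a toy GrC layer with expansion factor m = 3 over 100 MFs.
grcs = sample_grc_inputs(n_mf=100, expansion=3)
```

With these toy numbers the convolutional wiring uses 324 connections where the fully connected equivalent uses 2,304, and horizontally adjacent hidden neurons share 6 of their 9 inputs. The expansion factor of 3 is chosen only to keep the example small; the caption describes an expansion of hundreds.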