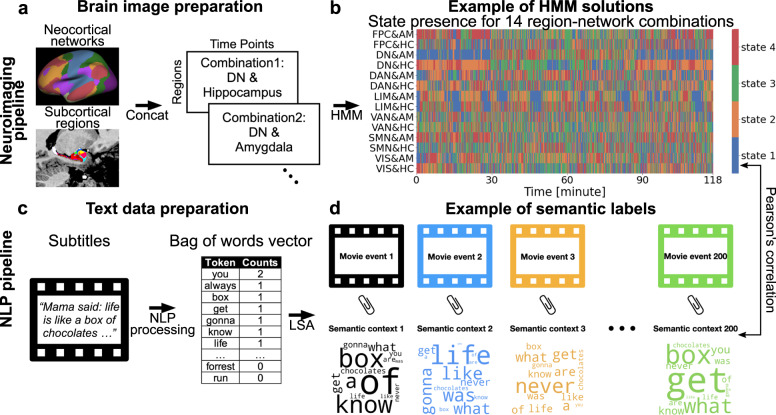

Fig. 1. Quantitative analysis workflow.

a As part of the brain-imaging data processing pipeline, the Schaefer-Yeo reference atlas served to extract neural responses during movie watching from 100 anatomical subregions belonging to seven established functional brain networks, spanning from lower sensory to highly associative circuits. We supplemented the neocortical functional networks with neural activity from hippocampal and amygdalar subregion sets (summarized here as 'limbic regions') using rigorous microanatomical segmentation. Subsequently, the time series of 14 limbic-neocortical combinations provided the basis for estimating 14 separate hidden Markov models (HMMs) for each subject. b The extracted state presence (color coding is specific to each region-network combination [row]) delineates the temporal dynamics of functional brain coupling cliques both vertically (across region-network combinations) and horizontally (across narrative shifts). c As part of the text data processing pipeline, movie subtitles and narrator descriptions served as raw text information. By means of natural language processing (NLP) techniques, the text was re-expressed as word occurrences (i.e., a vocabulary word occurrence matrix). d The per-timepoint expressions of 200 semantic contexts were obtained from latent semantic analysis (LSA; cf. Methods). Each color represents a unique semantic context, whose presence over time served as a proxy trajectory for underlying movie events. Short names for Schaefer-Yeo networks: VIS visual network, SMN somatomotor network, DAN dorsal attention network, DN default network, LIM limbic network, VAN salience and ventral attention network, FPC frontoparietal network. Source data are provided as a Source Data file.
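
The text-processing steps in panels c and d can be illustrated with a minimal sketch. It assumes that LSA is computed as a truncated singular value decomposition of the raw word occurrence matrix; any term weighting, stop-word handling, or alignment to imaging timepoints used in the actual analysis is not reproduced here. The function names `word_occurrence_matrix` and `lsa_context_expressions`, the toy text segments, and the choice of 2 contexts (rather than the 200 used in the study) are illustrative assumptions.

```python
import re
import numpy as np


def word_occurrence_matrix(segments):
    """Count word occurrences per text segment.

    Returns (counts, vocabulary): rows of `counts` are segments,
    columns follow the order of `vocabulary`.
    """
    # Hypothetical tokenizer: lowercase alphabetic tokens only.
    tokenized = [re.findall(r"[a-z']+", s.lower()) for s in segments]
    vocabulary = sorted({w for tokens in tokenized for w in tokens})
    column = {w: j for j, w in enumerate(vocabulary)}
    counts = np.zeros((len(segments), len(vocabulary)))
    for i, tokens in enumerate(tokenized):
        for w in tokens:
            counts[i, column[w]] += 1
    return counts, vocabulary


def lsa_context_expressions(counts, n_contexts):
    """Truncated SVD of the occurrence matrix (assumed form of LSA).

    Returns (expressions, contexts):
      expressions: per-segment expression of each semantic context
      contexts:    word loadings defining each semantic context
    """
    u, s, vt = np.linalg.svd(counts, full_matrices=False)
    expressions = u[:, :n_contexts] * s[:n_contexts]
    contexts = vt[:n_contexts]
    return expressions, contexts


# Toy stand-ins for subtitle / narrator text, one string per time segment.
segments = [
    "the man walks into the house",
    "the woman opens the door of the house",
    "a car drives down the road",
    "the car stops on the road",
]
counts, vocabulary = word_occurrence_matrix(segments)
expressions, contexts = lsa_context_expressions(counts, n_contexts=2)
```

Each row of `expressions` then plays the role of one timepoint in panel d, and each column traces one semantic context over time.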