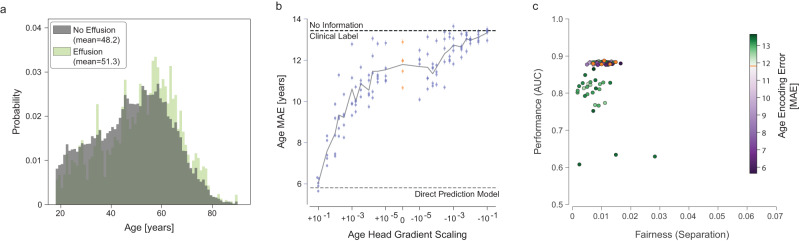

Fig. 2. Intervening on age encoding using multitask learning.

a The distribution of ages for positive (light green) and negative (gray) examples for Effusion in the training set. b The effect of altering the gradient scaling of the age prediction head on age encoding (as determined by subsequent transfer learning). For large positive values of gradient scaling (left), the models encoded age strongly, with a low mean absolute error (MAE) that approached the performance of a dedicated age prediction model (empirical LEB). For large negative values of gradient scaling (right), the age prediction performance of the multitask model approached the empirical UEB. Baseline models (with zero gradient scaling from the age head, equivalent to a single-task condition prediction model) are shown in orange. Each dot represents one trained model (25 values of gradient scaling × 5 replicates = 125 models), with error bars denoting 95% confidence intervals obtained by bootstrapping examples (n = 17,723) within a model. c Fairness and performance of all replicates (n = 125). The degree of age encoding in each replicate is color-coded: purple dots denote more age information, and green dots less, than the baseline model without gradient scaling (in orange, overlapping with the purple dots in this case). Source data are provided as a Source Data file.
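The error bars in panel b come from bootstrapping examples within a model. As a minimal sketch of what such a procedure can look like, the snippet below computes a percentile bootstrap 95% confidence interval for the MAE of an age predictor. The percentile method, the number of resamples, the function name `bootstrap_mae_ci`, and the synthetic ages are all assumptions made for illustration; the caption does not specify which bootstrap variant was used.

```python
import random
import statistics


def bootstrap_mae_ci(y_true, y_pred, n_resamples=1000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for the mean absolute error (hypothetical helper)."""
    rng = random.Random(seed)
    # Per-example absolute errors; resampling these is equivalent to
    # resampling (true, predicted) pairs with replacement.
    abs_errors = [abs(t - p) for t, p in zip(y_true, y_pred)]
    n = len(abs_errors)
    point = statistics.fmean(abs_errors)

    resampled = []
    for _ in range(n_resamples):
        sample = rng.choices(abs_errors, k=n)  # draw n examples with replacement
        resampled.append(statistics.fmean(sample))
    resampled.sort()

    # Empirical alpha/2 and 1 - alpha/2 quantiles of the resampled MAEs.
    lower = resampled[int((alpha / 2) * n_resamples)]
    upper = resampled[int((1 - alpha / 2) * n_resamples) - 1]
    return point, lower, upper


# Synthetic data for illustration only (not the study's data).
data_rng = random.Random(42)
true_ages = [data_rng.uniform(20, 90) for _ in range(500)]
pred_ages = [age + data_rng.gauss(0, 8) for age in true_ages]

mae, ci_low, ci_high = bootstrap_mae_ci(true_ages, pred_ages)
print(f"MAE = {mae:.2f} years, 95% CI [{ci_low:.2f}, {ci_high:.2f}]")
```

Resampling happens over examples for one fixed model, so an interval of this kind reflects uncertainty from the finite evaluation set and not variation between training replicates, which the figure shows as separate dots.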