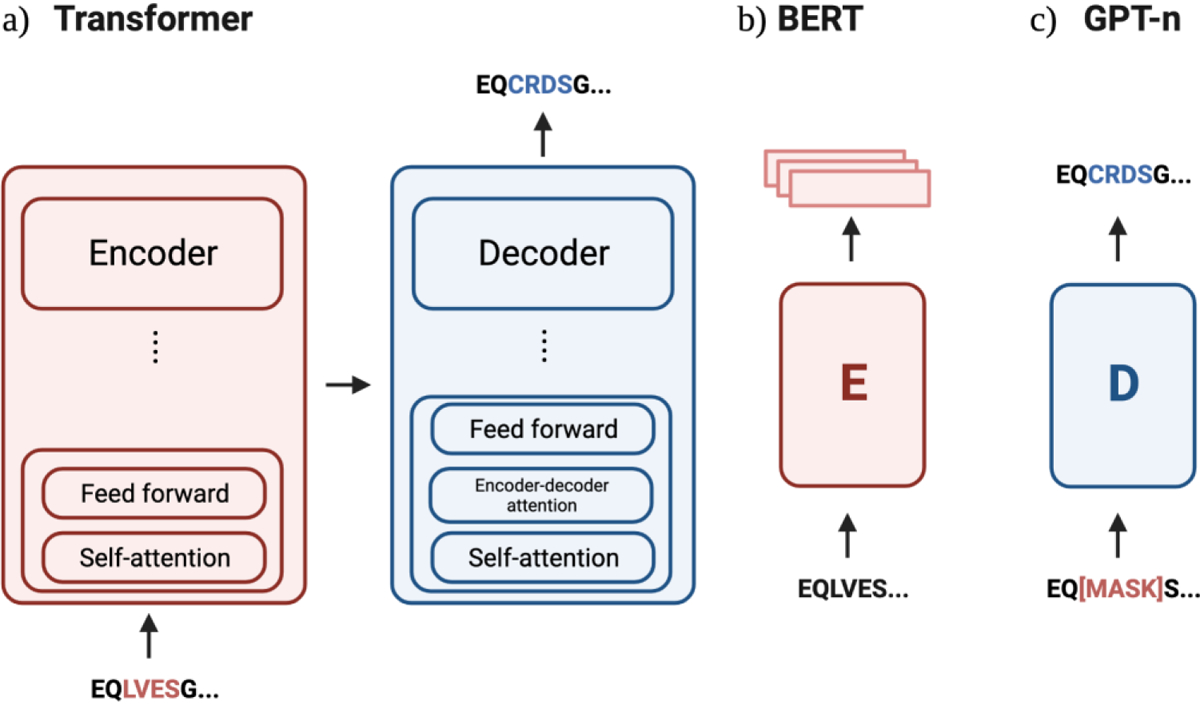

Fig. 2: Schematic overview of sequence generation models in antibody design.

a) The original Transformer architecture consisted of an encoder and a decoder, each a stack of six layers. The Manifold Samplers [33,35] are an example of models that use both an encoder and a decoder. b) BERT models, also known as autoencoding models, use only the encoder of the original Transformer. BERT-inspired protein models include AntiBERTy [15], AbLang [39], AntiBERTa [40], PARA [41], and the ESM suite [13,42]. c) GPT-n models use only the decoder; the most prominent examples in antibody design are IgLM [37] and the ProGen suite [30,34].
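The three panels differ mainly in which positions a token may attend to. The sketch below is a minimal, illustrative example in pure Python. It is not code from any of the cited models, and the function names and toy numbers are hypothetical. It contrasts the full (bidirectional) attention of an encoder-only, BERT-like model with the causal attention of a decoder-only, GPT-like model. An encoder-decoder model combines both, and its decoder positions also cross-attend to every encoder output.

```python
import math
from typing import List

Matrix = List[List[float]]
Mask = List[List[bool]]


def bidirectional_mask(n: int) -> Mask:
    """Encoder-only (BERT-like): every position may attend to every position."""
    return [[True] * n for _ in range(n)]


def causal_mask(n: int) -> Mask:
    """Decoder-only (GPT-like): position i may attend only to positions j <= i."""
    return [[j <= i for j in range(n)] for i in range(n)]


def cross_mask(n_tgt: int, n_src: int) -> Mask:
    """Encoder-decoder cross-attention: each decoder position sees all encoder outputs."""
    return [[True] * n_src for _ in range(n_tgt)]


def masked_attention(q: Matrix, k: Matrix, v: Matrix, mask: Mask) -> Matrix:
    """Single-head scaled dot-product attention; masked-out positions get zero weight."""
    d = len(q[0])
    out = []
    for i, qi in enumerate(q):
        # Score each key; disallowed positions are set to -inf before the softmax.
        scores = [
            sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d) if mask[i][j] else -math.inf
            for j, kj in enumerate(k)
        ]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]  # exp(-inf) evaluates to 0.0
        total = sum(weights)
        out.append([
            sum(w / total * vj[c] for w, vj in zip(weights, v)) for c in range(len(v[0]))
        ])
    return out


if __name__ == "__main__":
    # Toy 3-token sequence with 1-dimensional embeddings (made-up numbers).
    q = [[1.0], [0.5], [-1.0]]
    k = [[1.0], [0.2], [0.3]]
    v = [[1.0], [2.0], [3.0]]
    v_edited = [[1.0], [2.0], [9.0]]  # only the last token changes

    # Causal: token 0 cannot see token 2, so its output is unchanged by the edit.
    print(masked_attention(q, k, v, causal_mask(3))[0],
          masked_attention(q, k, v_edited, causal_mask(3))[0])
    # Bidirectional: token 0 does see token 2, so its output changes.
    print(masked_attention(q, k, v, bidirectional_mask(3))[0],
          masked_attention(q, k, v_edited, bidirectional_mask(3))[0])
```

Under the causal mask, each earlier position's output ignores later tokens. This is what lets decoder-only models generate sequences left to right. Under the bidirectional mask, every position draws on context from both sides, which suits encoder-only models that predict masked residues.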