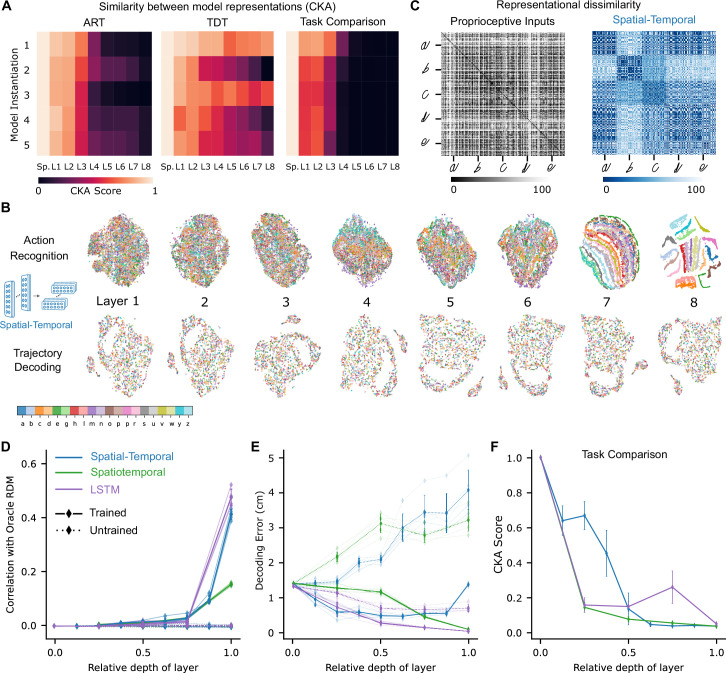

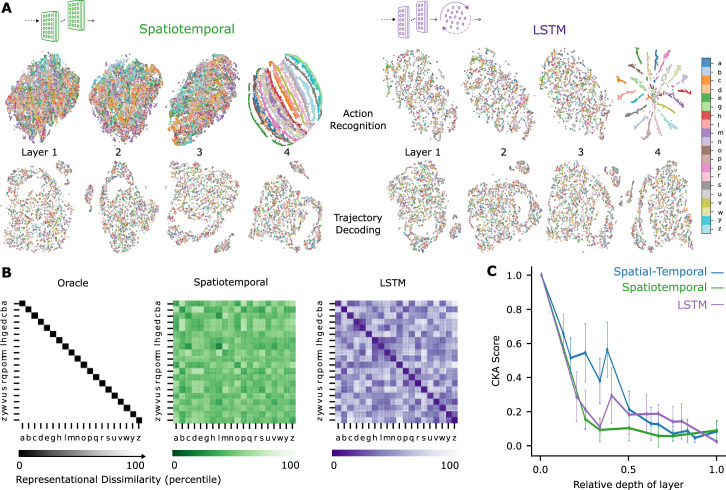

Figure 4. Low-dimensional embedding of network layers reveals structure.

(A) Similarity in representations (centered kernel alignment [CKA]) between the trained and the untrained models for each of the five instantiations of the best-performing spatial-temporal models (left and center). CKA between models trained on recognition vs. decoding (right). (B) t-Distributed stochastic neighbor embedding (t-SNE) for each layer of one instantiation of the best-performing spatial-temporal model trained on both tasks. Each data point is a random stimulus sample (N = 2000, 50 per stimulus). (C) Representational dissimilarity matrices (RDMs). Character-level representation is visualized using percentile RDMs for proprioceptive inputs (left) and final-layer features (right) of one instantiation of the best-performing spatio-temporal model trained on the recognition task. (D) Similarity in stimulus representations between RDMs of an Oracle (ideal observer) and each layer for the five instantiations of the action recognition task (ART)-trained models and their untrained counterparts. (E) Decoding error (in cm) along the hierarchy for each model type on the trajectory decoding task. (F) CKA between models trained on recognition vs. decoding for the five instantiations of all network types (right).
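
As a point of reference for the CKA comparisons in (A) and (F), the following is a minimal sketch of linear CKA between two activation matrices recorded on the same stimuli. It assumes the linear-kernel variant and activations flattened to shape (samples, features). The caption does not state which CKA variant or preprocessing was used, so the function name `linear_cka` and these choices are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def linear_cka(X, Y):
    """Linear CKA between two activation matrices (assumed variant).

    X: array of shape (n_samples, n_features_x)
    Y: array of shape (n_samples, n_features_y)
    Rows must correspond to the same stimuli in both matrices.
    """
    # Center each feature across samples.
    X = X - X.mean(axis=0, keepdims=True)
    Y = Y - Y.mean(axis=0, keepdims=True)

    # Squared Frobenius norm of the cross-covariance, normalized by the
    # Frobenius norms of the two self-covariances.
    cross = np.linalg.norm(Y.T @ X, ord="fro") ** 2
    norm_x = np.linalg.norm(X.T @ X, ord="fro")
    norm_y = np.linalg.norm(Y.T @ Y, ord="fro")
    return cross / (norm_x * norm_y)

# Hypothetical example data: 200 stimuli, two layers of different width.
rng = np.random.default_rng(0)
layer_a = rng.normal(size=(200, 32))
layer_b = rng.normal(size=(200, 48))

# An orthogonal rotation of layer_a, used to illustrate invariance.
Q, _ = np.linalg.qr(rng.normal(size=(32, 32)))
layer_a_rotated = layer_a @ Q

cka_self = linear_cka(layer_a, layer_a)
cka_rotated = linear_cka(layer_a, layer_a_rotated)
cka_unrelated = linear_cka(layer_a, layer_b)
```

Under these assumptions, a layer compared with itself or with an orthogonally rotated copy scores 1 up to floating-point error, while unrelated random activations score lower. The score is undefined if either matrix has zero variance across samples.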