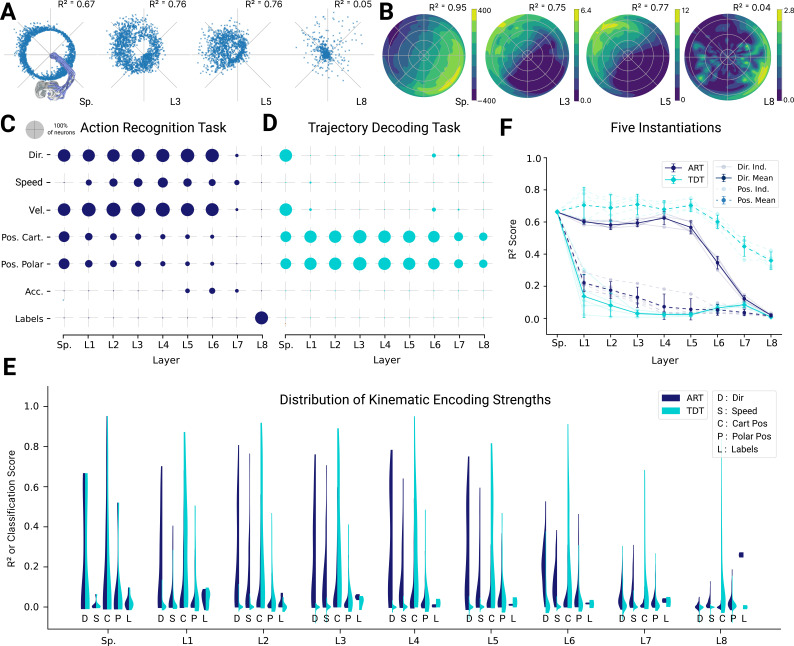

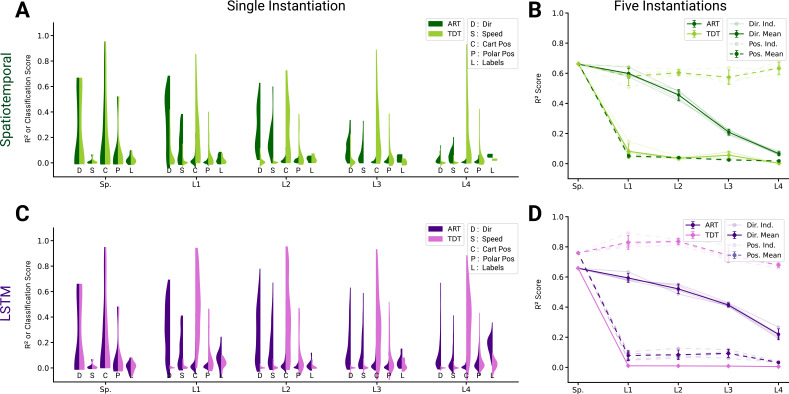

Figure 5. Analysis of single unit tuning properties for spatial-temporal models.

(A) Polar scatter plots showing the activation of units (radius) as a function of end-effector direction (angle θ) for directionally tuned units in different layers of the top-performing spatial-temporal model trained on the action recognition task. Direction is that of the end-effector while tracing characters in the model workspace. The activation strengths of one (velocity-dependent) muscle spindle and one unit each in layers 3, 5, and 8 are shown. (B) Similar to (A), except that radius now describes velocity and color represents activation strength. The contours are determined following linear interpolation, with gaps filled in by nearest-neighbor interpolation, and smoothed using a Gaussian filter. Examples of one muscle spindle and one unit each in layers 3, 5, and 8 are shown. (C) For each layer of one trained instantiation, the units are classified into types based on their tuning. A unit was classified as belonging to a particular type if the test score of its tuning fit passed the classification threshold (see Methods). Tested features were direction tuning, speed tuning, velocity tuning, Cartesian and polar position tuning, acceleration tuning, and label tuning (18/5446 scores excluded for the action recognition task [ART]-trained model, 430/5446 for the trajectory decoding task [TDT]-trained model; see Methods). (D) The same plot as in (C), but for the spatial-temporal model of the same architecture trained on the trajectory decoding task. (E) For an example instantiation, the distributions of test scores for the ART- and TDT-trained models are shown as vertical histograms (split violins) for five kinds of tuning in each layer: direction tuning, speed tuning, Cartesian position tuning, polar position tuning, and label specificity. The five kinds are indicated by different shades and arranged left to right for each layer, including the spindles. Tuning scores were excluded if they were equal to 1, indicating a constant neuron, or less than −0.1, indicating an improper fit (12/3890 scores excluded for ART, 285/3890 for TDT; see Methods). (F) The means of the 90% quantiles over all five model instantiations of models trained on ART and TDT are shown for direction tuning (dark) and position tuning (light). 95% confidence intervals are shown over the five instantiations.