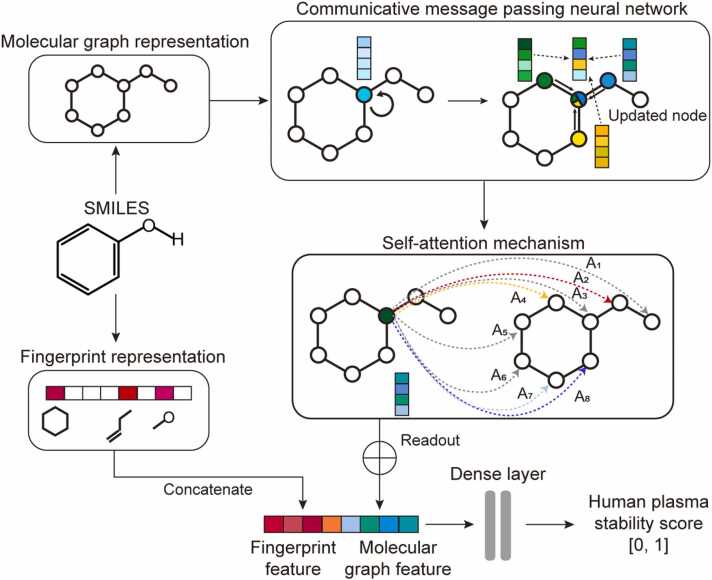

Fig. 2. Model architecture of PredPS. PredPS integrates a communicative message passing neural network (CMPNN) [28], a self-attention layer, and fully connected layers. Input compounds (SMILES) are converted into molecular fingerprints and graph representations, and all node and edge features are updated. The self-attention layer generates a molecular feature vector, which is concatenated with the graph features and fed to the fully connected layers.
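The data flow in the caption (attention readout, concatenation, fully connected layers) can be illustrated with a minimal sketch. This is not the PredPS implementation. The dimensions, the random weights, the mean-pooling readout, the single scalar output, and all function names are assumptions made for illustration, and random numbers stand in for the CMPNN-updated node features and the graph features.

```python
import math
import random

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention_readout(node_feats, w_q, w_k, w_v):
    """Scaled dot-product self-attention over atoms, then mean-pool to one vector.

    Mean pooling is an assumption; the caption does not state the readout.
    """
    q, k, v = (matmul(node_feats, w) for w in (w_q, w_k, w_v))
    scale = math.sqrt(len(k[0]))
    k_t = [list(col) for col in zip(*k)]
    attn = [softmax([s / scale for s in row]) for row in matmul(q, k_t)]
    attended = matmul(attn, v)
    return [sum(col) / len(attended) for col in zip(*attended)]

def dense(x, w, b, relu=True):
    """Fully connected layer; w has shape (inputs, outputs)."""
    out = [sum(xi * wi for xi, wi in zip(x, col)) + bj for col, bj in zip(zip(*w), b)]
    return [max(0.0, o) for o in out] if relu else out

def rand_matrix(rows, cols, rng):
    """Random weights in place of trained parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]

rng = random.Random(0)
n_atoms, d_node, d_attn, d_graph, d_hidden = 5, 8, 4, 6, 3  # hypothetical sizes

# Stand-ins: in the real model these would come from the CMPNN encoder.
node_feats = rand_matrix(n_atoms, d_node, rng)
graph_feats = [rng.uniform(-0.5, 0.5) for _ in range(d_graph)]

mol_vec = self_attention_readout(
    node_feats,
    rand_matrix(d_node, d_attn, rng),
    rand_matrix(d_node, d_attn, rng),
    rand_matrix(d_node, d_attn, rng),
)
combined = mol_vec + graph_feats  # list concatenation of the two feature vectors
hidden = dense(combined, rand_matrix(len(combined), d_hidden, rng), [0.0] * d_hidden)
score = dense(hidden, rand_matrix(d_hidden, 1, rng), [0.0], relu=False)[0]
```

Here `combined` has length `d_attn + d_graph`, which mirrors the concatenation step in the figure. In a trained model the weights would be learned rather than sampled.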