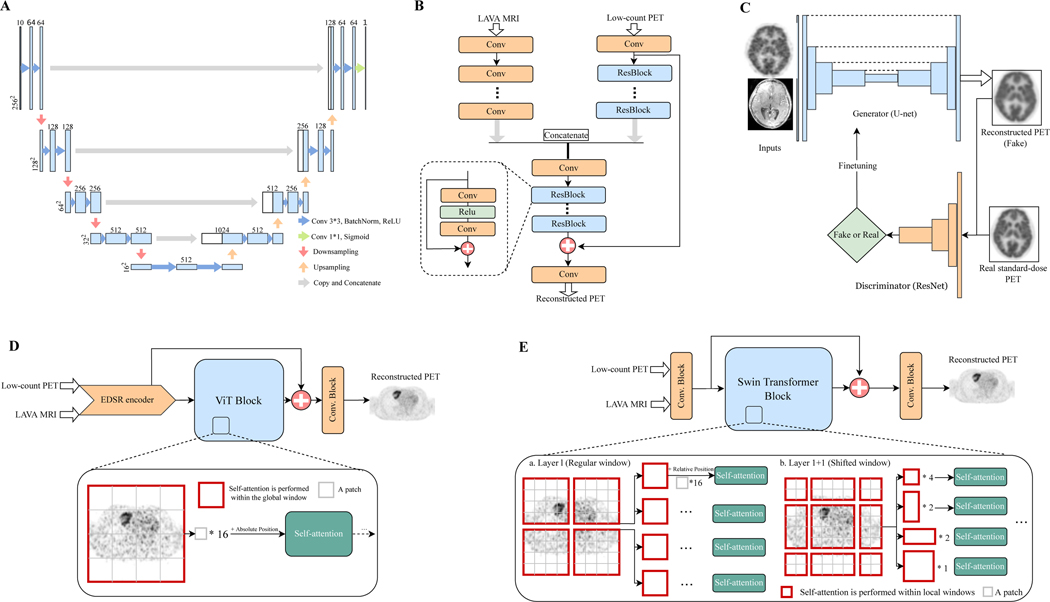

Figure 1: Schematic overviews of AI algorithm frameworks for low-count PET reconstruction.

(A) The classic U-Net model. (B) The adapted EDSR (enhanced deep super-resolution network) model. (C) The GAN (generative adversarial network) model. (D) The EDSR-ViT model. EDSR-ViT reuses the feature encoder of the adapted EDSR (B) directly and adds a ViT (Vision Transformer) block to apply global self-attention across the image. (E) The SwinIR model, built from Swin transformer blocks. The main difference between the Swin transformer and ViT is where self-attention is applied. In a Swin transformer block, self-attention is computed within each local window, using the regular window partition in layer l and a shifted window partition in the following layer (layer l+1, etc.). In ViT, self-attention is computed over the whole image, which is divided into equal, fixed-size patches.
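To make the distinction in panels (D) and (E) concrete, the following minimal Python sketch enumerates which token pairs are allowed to attend to each other under each scheme. It is an illustration under our own assumptions, not the ViT or SwinIR implementation: the 8 × 8 token grid, the 4 × 4 window size, and the helper names (`global_groups`, `window_groups`, `attention_pairs`) are hypothetical. The shifted partition moves the window boundaries by half a window, which is the effect Swin obtains with a cyclic shift plus an attention mask.

```python
from itertools import product


def token_grid(h, w):
    """All (row, col) positions in an h x w grid of patch tokens."""
    return list(product(range(h), range(w)))


def global_groups(h, w):
    """ViT-style: every token is in one group, so all tokens attend to all."""
    return {pos: 0 for pos in token_grid(h, w)}


def window_groups(h, w, m, shifted=False):
    """Swin-style: map each token to the id of its m x m attention window.

    shifted=False gives the regular partition (layer l), with boundaries at
    0, m, 2m, ... shifted=True moves the boundaries to m//2, m//2 + m, ...
    (layer l+1); tokens before the first boundary form smaller edge windows,
    matching the effect of Swin's cyclic shift combined with its mask.
    """
    shift = m // 2 if shifted else 0
    return {(r, c): ((r - shift) // m, (c - shift) // m)
            for r, c in token_grid(h, w)}


def attention_pairs(groups):
    """Set of (query, key) token pairs allowed to attend: same group only."""
    return {(q, k) for q in groups for k in groups if groups[q] == groups[k]}


if __name__ == "__main__":
    h, w, m = 8, 8, 4  # assumed grid and window sizes, for illustration only
    glob = attention_pairs(global_groups(h, w))
    regular = attention_pairs(window_groups(h, w, m))
    shifted = attention_pairs(window_groups(h, w, m, shifted=True))
    # Global attention: (h*w)^2 pairs; regular windows: h*w*m^2 pairs.
    print(len(glob), len(regular), len(shifted))  # 4096 1024 576
    # (3, 3) and (4, 4) lie in different regular windows but share a shifted one.
    print(((3, 3), (4, 4)) in regular, ((3, 3), (4, 4)) in shifted)  # False True
```

The pair counts show why window attention scales better: global attention grows quadratically with the number of tokens, while window attention grows linearly for a fixed window size. The shifted layer restores interaction across the regular window boundaries, as the (3, 3) and (4, 4) pair illustrates.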