Abstract

Background

Currently, surgical education utilizes a combination of the apprentice model, wet-lab training, and simulation, but due to reliance on subjective data, the quality of teaching and assessment can be variable. The “language of surgery,” an established concept in engineering literature whose incorporation into surgical education has been limited, is defined as the description of each surgical maneuver using quantifiable metrics. This concept is different from the traditional notion of surgical language, generally thought of as the qualitative definitions and terminology used by surgeons.

Methods

A literature search was conducted through April 2023 in MEDLINE/PubMed, using search terms covering wet-lab training, virtual simulators, and robotics in ophthalmology, along with the language of surgery and surgical education. Articles published before 2005 were mostly excluded, although a few were included on a case-by-case basis.

Results

Surgical maneuvers can be quantified by leveraging technological advances in virtual simulators, video recordings, and surgical robots to create a language of surgery. By measuring and describing maneuver metrics, the learning surgeon can adjust surgical movements in an appropriately graded fashion that is based on objective and standardized data. The main contribution is outlining a structured education framework that details how surgical education could be improved by incorporating the language of surgery, using ophthalmology surgical education as an example.

Conclusion

By describing each surgical maneuver in quantifiable, objective, and standardized terminology, a language of surgery can be created that can be used to learn, teach, and assess surgical technical skill with an approach that minimizes bias.

Key message

The “language of surgery,” defined as the quantification of each surgical movement's characteristics, is an established concept in the engineering literature. Using ophthalmology surgical education as an example, we describe a structured education framework based on the language of surgery to improve surgical education.

Classifications

Surgical education, robotic surgery, ophthalmology, education standardization, computerized assessment, simulations in teaching.

Competencies

Practice-Based Learning and Improvement.

Keywords: Surgical education, Robotic surgery, Language of surgery, Education standardization, Ophthalmology, Surgical instruments

Highlights

• Current state of ophthalmology surgical education

• “Language of surgery” is defined as quantifying each surgical maneuver.

• Technologies like video recordings and surgical robots can quantify maneuvers.

• Education framework based on the language of surgery can improve surgical education.

Introduction

Surgical education has traditionally relied on an apprenticeship teaching model that has recently integrated new modalities, including wet-lab practice and virtual simulation [[1], [2], [3]]. While this model is successful, the quality of teaching is highly dependent on the mentor's surgical and teaching abilities, which leads to variability in how trainees learn and acquire surgical skills. To improve surgical education, strategies that utilize standardized descriptions of surgical maneuvers have emerged. These approaches include graded simulator modules, analysis of video-recorded operations, and robotic surgery platforms. While various assessment tools have been created to standardize surgical skills evaluation, these tools remain subjective because the grading is performed by humans [4,5]. Moreover, use of these tools remains limited in clinical practice and surgical curricula, partially due to resource limitations, since grading requires an expert observer [6,7]. Quantifiable, objective, and standardized methods to teach and assess surgical skill, especially between mentors and across institutions, are lacking in current surgical education.

Surgical skill can be divided into two components: technical performance, defined as the appropriate execution of each step, and cognitive decision-making, which comes with surgical experience. In this review, we will focus on technical performance, which is characterized by instrument-tissue interactions of each individual surgical maneuver. We propose that technical performance in surgical education will be improved by describing each surgical maneuver of a procedure using quantified metrics, and offering specific feedback for improvement that is based on these quantified metrics. Competently completing each surgical maneuver will lead to successful technical performance of the entire procedure.

We describe an established platform developed by the Hager lab, the “language of surgery,” which is a communication framework for surgical skill assessment that characterizes each surgical maneuver with quantified metrics [8]. We note that this concept is distinctly separate from the traditional notion of surgical language, which is the qualitative definitions and terminology used by surgeons on a daily basis. Using Hager's language of surgery framework, a library of quantified descriptions for each surgical maneuver is created and these objective descriptions are used to teach how each surgical maneuver is performed, and how each maneuver is analyzed for competency [9]. We explain how technology such as virtual simulators, video recordings, and robotic surgery can be utilized to measure each surgical movement in order to define quantified metrics that will be used to create a language of surgery.

Available data indicate that the “language of surgery” framework has not been incorporated into surgical curricula, as shown by the lack of standardized assessment tools in surgical education today [7]. Its absence is also reflected in the lack of articles on the topic in the surgical literature, including ophthalmology. Despite the compelling concept, the language of surgery approach is relatively unknown to surgical educators, in part because the concept appears in the engineering rather than the surgical literature. To raise awareness, we provide a review of the current state of surgical education and define the language of surgery. With the current array of available technological resources, we emphasize how the language of surgery, by providing specific, quantified, and objective data for each surgical maneuver, should be revisited to teach learning surgeons each surgical movement. Importantly, we describe a structured education framework for how the language of surgery can be incorporated into current ophthalmology teaching and help improve ophthalmology surgical education, with broader implications for its use in surgical education overall.

Methods – literature search

A literature search was conducted through April 2023 using MEDLINE/PubMed. Search terms, used in various combinations, included the following: “ophthalmology,” “wetlab” or “wet-lab,” “virtual” and “simulator,” “satisfaction,” and “robot” to investigate wet-lab, virtual simulators, and robotics in ophthalmology. Various combinations of the terms “surgery,” “training,” “program,” “course,” “education,” “language,” “apprentice,” and “Education, Medical”[Mesh] were used to investigate the language of surgery and surgical education. Multiple forms of the words “robot,” “surgery,” and “ophthalmology” were searched for using the truncation function (“*”) in PubMed. Reference lists of relevant articles were also examined. Specific inclusion criteria varied depending on relevance to the topics of interest, which were the language of surgery, surgical education, video recording analysis, and robotic surgery. The search was not restricted by publication date, but the majority of included articles were published in 2005 or later; articles published before 2005 were mostly excluded, with a few included on a case-by-case basis.

Results

Overview of the language of surgery

Surgery reflects a set of technical skills integrated to complete a specific medical objective. A surgical procedure can therefore be considered as a complex task composed of a series of individual maneuvers, and each maneuver is composed of individual “gestures” [10]. While much of the literature on the “language of surgery” describes segmenting procedures into gestures, the terms “maneuver” and “movement” reflect the terminology accepted by clinicians. Herein, we will use “maneuver” or “movement” when discussing steps of a procedure with the hope that the use of these terms will encourage clinicians to accept the “language of surgery.” Each successful surgical maneuver must be completed within a competency framework, and a successful surgical procedure requires the skilled completion of each individual maneuver.

The “language of surgery” concept was first described in 2005 by the Hager lab as a communication framework that systematically describes each surgical maneuver using quantifiable metrics [8]. This concept analyzes and quantifies the motions of a surgical procedure using video recordings and surgical robots. The surgical movements can similarly be analyzed using virtual simulators, which have recently become a popular educational tool in ophthalmologic surgery. Examples of quantifiable descriptions of a surgical maneuver include, but are not limited to, the time it takes to complete a movement, number of contacts an instrument has with tissue, instrument force, velocity, rotation, and position in the surgical field. Each surgical maneuver is defined by multiple quantifiable metrics, which can be thought of as a “word.” A library of surgical maneuvers, similar to a “dictionary,” can then be created, forming the basis of a “language” in the “language of surgery.”
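The “word” and “dictionary” analogy can be made concrete with a small data structure. The following is a minimal sketch only: the class names `MetricRange` and `ManeuverDefinition`, the metric names, and all numeric ranges are hypothetical placeholders chosen for illustration, not values or structures taken from the Hager lab's work.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class MetricRange:
    """Acceptable range for one quantified metric (hypothetical structure)."""
    unit: str
    low: float
    high: float

    def contains(self, value: float) -> bool:
        # True when the measured value lies inside the range, bounds included.
        return self.low <= value <= self.high


@dataclass
class ManeuverDefinition:
    """A 'word': one maneuver described by several quantified metrics."""
    name: str
    metrics: dict[str, MetricRange] = field(default_factory=dict)


# The 'dictionary': a library of maneuver definitions keyed by name.
# All numeric ranges are placeholders, not validated clinical values.
library: dict[str, ManeuverDefinition] = {
    "capsulorhexis": ManeuverDefinition(
        name="capsulorhexis",
        metrics={
            "duration": MetricRange("s", 30.0, 120.0),
            "tip_velocity": MetricRange("mm/s", 0.5, 2.0),
            "tissue_contacts": MetricRange("count", 1, 10),
        },
    ),
}

word = library["capsulorhexis"]
print(sorted(word.metrics))
```

Keeping each metric as a named range, rather than a single target value, mirrors the idea that a range of competent performance is likely to emerge for each maneuver.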

Research over the past 20 years on objective computer-aided technical skill evaluation (OCASE-T) has been integral to the evolution of the “language of surgery” [6]. Computer models have been extensively employed in open, laparoscopic, and robotic simulations of surgical maneuvers (e.g., cholecystectomy, suturing, knot tying) to describe quantifiable metrics, segment maneuvers into gestures/movements, and differentiate between novices and expert surgeons [[10], [11], [12], [13], [14], [15], [16], [17], [18], [19]]. In addition to the da Vinci Surgical System, the Imperial College Surgical Assessment Device (ICSAD), which comprises an electromagnetic tracking system with a tracker on each hand and a computer software program, is one such technology that has been used for OCASE-T [12]. A study investigating the use of ICSAD to evaluate suturing of an artificial cornea found significant differences in dexterity measures (time taken, number of hand movements, and path length of hand movements) between novice, trainee, and expert surgeons [15].
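To illustrate how dexterity measures such as time taken and path length can be derived from tracked positions, the sketch below computes them from a list of timestamped 3D samples. It is an assumption-laden illustration, not the ICSAD software: the function name `dexterity_measures`, the sample format, and the units are hypothetical, and counting discrete hand movements is omitted because its definition depends on the tracking system.

```python
import math


def dexterity_measures(samples):
    """Compute elapsed time, path length, and mean speed.

    `samples` is a time-ordered list of (t_seconds, x_mm, y_mm, z_mm) tuples,
    a hypothetical format for one tracked hand or instrument tip.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")

    # Path length is the sum of straight-line distances between
    # consecutive positions.
    path_length = 0.0
    for previous, current in zip(samples, samples[1:]):
        path_length += math.dist(previous[1:], current[1:])

    elapsed = samples[-1][0] - samples[0][0]
    mean_speed = path_length / elapsed if elapsed > 0 else float("nan")
    return {
        "time_s": elapsed,
        "path_length_mm": path_length,
        "mean_speed_mm_s": mean_speed,
    }


# Made-up trajectory: a 5 mm move followed by a 12 mm move over 2 seconds.
trajectory = [(0.0, 0.0, 0.0, 0.0), (1.0, 3.0, 4.0, 0.0), (2.0, 3.0, 4.0, 12.0)]
measures = dexterity_measures(trajectory)
print(measures)
```

A shorter path length and elapsed time for the same task would be read as more economical movement, which is the kind of difference reported between novice and expert groups.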

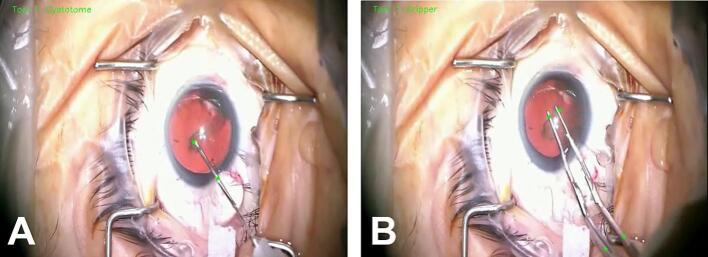

Furthermore, these computer models have been applied to operations on humans, mainly through video recordings, to identify instruments (Fig. 1, Supplemental Video 1), describe quantifiable metrics, segment procedures into maneuvers and gestures/movements, and assess technical skill by differentiating between novice and expert surgeons [[20], [21], [22], [23], [24], [25], [26], [27]]. This approach has been utilized to some extent in ophthalmologic surgery [22,[24], [25], [26], [27]]. Kim et al. used time to complete a maneuver, number of movements, and instrument trajectories to assess cataract surgical technique from video recordings of human operations [25]. Additional advancements in the “language of surgery” concept include the creation of a public dataset of video and kinematic data from robotic surgical tasks for consistent benchmarking, and the implementation of real-time teaching cues as a virtual coach during a needle-passing simulation using the da Vinci Skills Simulator [28,29]. Much of this work has been spearheaded by the Computational Interaction and Robotics Laboratory at Johns Hopkins University.

Fig. 1.

Surgical instrument annotation during video recording analysis following cataract surgery. Images of (A) cystotome and (B) forceps annotation, indicated by the bright green dots, using artificial intelligence algorithms on a video recording of cataract surgery. Time and tool velocities can be measured following instrument annotation. (For interpretation of the references to color in this figure legend, the reader is referred to the web version of this article.)

Using the language of surgery to define each surgical maneuver with quantifiable, precise, and accurate language can optimize how each surgical procedure is taught, which will clarify and simplify the learning objectives needed to gain competency by the learning surgeon. While the concept of the “language of surgery” is not new, especially to engineers, and the value of a universal language for surgical procedures has been emphasized, the language of surgery has not been integrated in surgical education, including in ophthalmology surgical education [30].

Current state of ophthalmology surgical education – the need for the language of surgery

Focusing on ophthalmology surgical education, training programs employ a variety of teaching strategies, but standardized methods to evaluate and teach surgical skill are lacking. This shortcoming highlights the need for the language of surgery. Current teaching strategies include the apprenticeship model, wet-lab practice, and virtual simulation, which each have strengths, but also limitations that can be mitigated by incorporating them into the language of surgery framework.

Apprenticeship model of education

The apprenticeship model remains a key element of ophthalmology surgical education for developing technical competence. In the spirit of “see one, do one,” the learning surgeon acquires individual surgical skills by observing an experienced surgeon and then repeatedly practicing the new skill or procedure [1]. The apprenticeship model is now supplemented with access to wet-labs and virtual simulators, especially before the learning surgeon begins operating on patients. While remarkably effective, the apprentice model relies on the commitment and expertise of the teacher. The learning surgeon's educational experience depends on which aspects the teacher chooses to emphasize, and performance assessment is based on the teacher's subjective observations. Using the language of surgery, trainees would learn a surgical maneuver using quantifiable, objective data that define competence. This approach would help to standardize teaching and evaluation among different teachers.

Wet-lab training

Preprocedural training on structures such as animal or artificial eyes in the wet lab has become standard among US-based ophthalmology training programs [31]. A large, retrospective, cross-sectional study found that residents who had wet-lab training had better cataract surgery outcomes, including better vision one day after the operation and a lower frequency of complications, compared to residents without wet-lab training [32]. A number of other studies have assessed the effectiveness of wet-lab models, but a systematic review by Lee et al. found that these studies did not provide sufficient evidence of improved translational outcomes and model validity [33]. These studies were assessed using Messick's concept of validity, which examines the interpretation, relevance, value implications, and functional worth of test scores for their given purpose [33,34]. Consequently, it is difficult to assess outcomes within each study or compare outcomes across studies, in part because a standardized, quantifiable definition of success for each surgical maneuver is lacking. This omission highlights the need for developing a language of surgery that is based on objective, quantified data for surgical maneuvers.

Virtual simulators

Virtual simulators are a relatively new teaching platform for learning cataract and vitreoretinal surgery. Currently, the Eyesi Surgical (Haag-Streit Simulation) machine is used in 75% of ophthalmology training programs in North America according to Haag-Streit Simulation, and is the most evaluated simulator in ophthalmology [33]. Eyesi includes both cataract and vitreoretinal modules, and incorporates a model head and model eye connected to a microscope (Fig. 2) [35]. A multi-center, retrospective study of 265 ophthalmology trainees in the United Kingdom showed that learning surgeons who received Eyesi training had a greater reduction in posterior capsule rupture rate for cataract surgery compared to those without Eyesi training [36]. However, cost has been a major barrier to virtual simulator adoption because an Eyesi simulator with both cataract and vitreoretinal modules can cost up to $270,000 USD [37]. Studies vary widely on how long it would take to recoup the cost of an Eyesi simulator [33,36,38,39]. Some data support improved surgical performance and lower complication rates, but concerns remain regarding how well virtual simulators mimic the live human eye and regarding their cost effectiveness.

Fig. 2.

Eyesi surgical virtual simulator. (A) Eyesi setup for cataract modules. Sample Eyesi simulator environment for (B) capsulorhexis and (C) phacoemulsification divide-and-conquer in the cataract module, and for (D) internal limiting membrane peeling in the vitreoretinal module.

Eyesi's quantitative scoring system is based on five domains: efficiency, target achievement, tissue treatment, instrument handling, and microscope handling [40]. While the machine's ability to track the movements and positions of virtual surgical instruments helps determine the final score, the algorithms used to evaluate performance remain proprietary information [33]. As a result, it is unclear what exact metrics are used in the simulator scoring system, and how well they assess performance. On some modules, but not all, Eyesi demonstrated construct validity, defined as the ability to distinguish between novice and experienced surgeons [33,[41], [42], [43], [44]]. In contrast, the language of surgery is intended to be open source and will be most useful with surgical systems and tools that can quantify maneuvers to provide learners with objective sources of feedback.

Other teaching modalities

Other teaching innovations offer different strategies to enhance surgical education in both the wet-lab and operating room. Virtual reality headsets can provide hands-on learning in a low-risk environment and are portable, cheap options compared to virtual simulator machines like Eyesi [45]. Standardized evaluation assessments have been created to decrease grading variability between mentors. Examples include the Objective Assessment of Skills in Intraocular Surgery (OASIS), which uses objective criteria to assess cataract surgery technical skill when operating on humans, and the Ophthalmology Surgical Competency Assessment Rubrics (ICO-OSCAR), which has been modified to assess wet-lab performance [[46], [47], [48]]. These assessments are a step towards more objective and standardized evaluation, but they remain subjective since humans grade the surgery. By using an expanded number of objective, quantified parameters to describe each surgical maneuver, the language of surgery framework could address this concern.

Discussion

Developing the language of surgery

Developing the language of surgery requires technologies that quantify essential metrics of each surgical maneuver during a procedure, including the analysis of video recordings and robotic surgery.

Video recordings in ophthalmology

As described earlier, surgical video recordings can be utilized for OCASE-T. Technical limitations of video recordings include the difficulty and cost of recording operations that do not require a surgical microscope (e.g., oculoplastic, orbital, and strabismus procedures in ophthalmology), and the fact that video-based assessments can only grade surgical maneuvers within the camera's field of view [49]. In addition to using artificial intelligence for OCASE-T, other efforts have been made to reduce bias when using video recordings for formal assessment, including anonymizing recordings before grading by a panel of expert surgeons and using crowdsourcing from lay people to rate performance [49,50].

Robotic surgery in ophthalmology

Robotic surgery is a novel technology in ophthalmology and encompasses four different modalities: robot-assisted tools, cooperative systems, teleoperated or telemanipulation systems, and automated systems [51]. In a cooperative system, as explained by Gerber et al., the surgeon and robot hold the surgical instruments together (Fig. 3). The surgeon initiates and performs surgical movements while the robot controls the movements. In ophthalmology, robotic surgery can be used for epiretinal membrane peeling, capsulorhexis and other cataract surgery maneuvers, retinal vein cannulation, and deep anterior lamellar keratoplasty [[51], [52], [53], [54], [55], [56], [57], [58], [59]]. Robot-assisted tools, cooperative systems, and telemanipulation systems have been tested in vitro, for example on membrane peeling phantoms, and in vivo in animal eyes [53,[60], [61], [62], [63], [64], [65]]. Recently, robotic surgery was used to operate on human eyes in two small-scale clinical trials for epiretinal membrane peeling and retinal vein cannulation with ocriplasmin infusion [55,59]. Drawbacks of robotic surgery include high upfront costs, the need for stable high-speed internet connections to support the seamless communication that some robotic systems require, and the learning curve for surgeons and training programs that have limited experience with these platforms. Robotic surgeries have longer operation times compared to manual surgeries, which is likely more pronounced with telemanipulation systems where the surgeon is not directly operating on the eye or eye phantom [40,55,66]. Some telemanipulation machines may require segmented movements due to the specific robotic motion controller, prolonging the time to complete the task [40]. Cooperative robotic systems, where the surgeon holds the surgical instrument, may be less likely to constrain movement. Overall, robotic surgery is a new development in ophthalmology with promise to improve patient outcomes, especially if longer procedure times and costs are reduced in the future.

Fig. 3.

Steady-hand eye robot from Johns Hopkins University. (A) Computer aided design model of the steady-hand eye robot with various components including a smart instrument (force-sensing surgical hook). (B) Image of the robotic system with a model eye and head. (C) Both the surgeon and the robot hold the smart instrument in a cooperative robotic surgical system. RCM = remote center-of-motion.

Importantly, the robot can be used to quantify movement metrics, including forces and the position of surgical instruments relative to ocular structures, and can be guided with real-time intraoperative optical coherence tomography [57,60,[67], [68], [69], [70], [71]]. The computers in robotic surgery systems can collect and analyze data on movement metrics to incorporate virtual fixtures, which are defined as mechanisms designed to limit high-risk movements that could lead to a surgical complication. The Steady-Hand Eye Robot (Fig. 3), a cooperative robotic surgical system from Johns Hopkins University, has a global force-limiting feature that prevents surgeons from exceeding a certain force or velocity threshold [60,65]. These thresholds are adjustable and enable virtual fixtures to serve as a safeguard for surgeons in real time, and for trainees as they learn and practice technical surgical skills.

“Smart” robotic instruments and auditory feedback are additional features of robotic surgical systems that can quantify movements and deliver quantitative feedback. Smart instruments, defined as tools that provide physiological feedback, can be used as robots themselves or as part of more complex robotic systems. They have been developed to measure tool position and applied forces, including those below tactile sensation, which is a characteristic of ophthalmology surgical movements [53,68]. Feedback from smart instruments can be delivered audibly or visually during the operation. For example, auditory force feedback uses beeps in real time when the measured force exceeds a certain threshold during the maneuver. A small study using an epiretinal membrane peeling phantom showed that compared to no auditory force feedback training, training with auditory force feedback improved peeling performance during the training period, which persisted after training without use of auditory feedback [66]. Overall, robotic surgery systems are equipped to provide quantifiable feedback that can be incorporated into the language of surgery approach. These data will guide the learning surgeon with objective data in real time during an operation.
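The threshold logic behind auditory force feedback can be sketched in a few lines. This is an illustrative assumption rather than the implementation of any cited system: the function name `force_alerts`, the millinewton units, and the 7.5 mN threshold are hypothetical, and the sketch emits one alert per excursion above the threshold rather than a continuous tone.

```python
def force_alerts(forces_mN, threshold_mN):
    """Return the sample indices at which a beep would start.

    A beep starts when the measured force rises above the threshold;
    no new beep is issued while the force stays above it.
    """
    alerts = []
    above = False
    for index, force in enumerate(forces_mN):
        is_above = force > threshold_mN
        if is_above and not above:
            alerts.append(index)
        above = is_above
    return alerts


# Made-up force trace from a smart instrument, in millinewtons.
trace = [2.0, 5.0, 8.0, 9.0, 6.0, 7.6, 3.0]
beeps = force_alerts(trace, threshold_mN=7.5)
print(beeps)
```

Because the threshold is a parameter, the same logic could be tightened or relaxed for trainees at different stages, in line with the adjustable thresholds described for virtual fixtures.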

Using video recordings and robotic surgery to develop the language of surgery

During a procedure, video recordings could be used to capture multiple measures of each surgical maneuver, including time, instrument velocity and angle, and the number of instrument-tissue contacts. The computer within a robotic surgery platform could further collect, analyze, and quantify these interactions, for example by measuring force vectors as well as aspects of the tissue's physiology, including oxygen saturation or duration of light exposure [9,60,72]. This information would be acquired in real time during an operation.

Capsulorhexis is a maneuver in cataract surgery where the surgeon removes the anterior capsule of the lens in order to gain access to the lens cortex and nucleus, which are removed by phacoemulsification. Capsulorhexis is performed by first incising the capsule and then using a specialized forceps to tear the anterior capsule in a continuous circular fashion [73]. This maneuver is technically challenging to the learning surgeon and will be used as an example of how the language of surgery can facilitate educating the novice surgeon. Analysis of surgical videos and data from the robot of initial incision of capsulorhexis could determine the type of instrument, the location of incision, the incision size, and the downward and tangential velocity needed to make the incision. Instrument location, velocity, and hand trajectory needed to complete this maneuver could also be quantified [70]. Additional information could be collected from “smart” instruments, such as force sensors incorporated at the instrument tip, which would measure downward and tangential forces throughout the capsulorhexis [60,67].

Data from the robotic system and video recording analysis would be combined to determine movement metrics that lead to successful endpoints. These would be compared to those metrics that cause complications, creating a comprehensive definition for the surgical maneuver. Since a range of performance based on objective, quantified data is likely to emerge, a competency “score” for each maneuver could be calculated and incorporated into the definition. The definition for each surgical maneuver could then be organized into a library. Surgeries performed by a range of surgeons, from expert to novice, could be assessed first to establish the initial definition and competency ranges for each surgical maneuver of a procedure.
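One simple way to turn a maneuver definition into a competency “score” is to count how many measured metrics fall inside their competent ranges. The sketch below is only one possible scoring rule, assumed here for illustration: the function name `competency_score`, the metric names, and the ranges are hypothetical, and a validated score would more likely weight metrics by their relationship to outcomes.

```python
def competency_score(measured, competent_ranges):
    """Return the fraction of metrics within the competent range (0.0 to 1.0).

    `competent_ranges` maps a metric name to a (low, high) tuple;
    `measured` maps a metric name to the observed value.
    A metric that was not measured counts as outside its range.
    """
    if not competent_ranges:
        raise ValueError("no metric ranges defined")
    within = 0
    for name, (low, high) in competent_ranges.items():
        value = measured.get(name)
        if value is not None and low <= value <= high:
            within += 1
    return within / len(competent_ranges)


# Placeholder ranges and measurements, not validated clinical values.
ranges = {
    "duration_s": (30.0, 120.0),
    "tip_velocity_mm_s": (0.5, 2.0),
    "peak_force_mN": (0.0, 7.5),
}
observed = {"duration_s": 95.0, "tip_velocity_mm_s": 2.5, "peak_force_mN": 6.0}
score = competency_score(observed, ranges)
print(round(score, 2))
```

In this example two of three metrics are in range, so the trainee would see both an overall score and the specific metric (tip velocity) that fell outside the competent range.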

How to implement the language of surgery

Implementing the language of surgery would provide trainees with clear, specific, quantifiable feedback on exactly how to improve performance. After establishing a library of surgical maneuver definitions, objective feedback using the language of surgery can be delivered before, during, and after a procedure using robotic surgery systems and video analysis. We advocate an integrated language of surgery approach spanning the pre-, intra-, and post-procedure phases (Fig. 4). While robotic systems may be expensive and complex, robots for surgical education do not require full functionality such as complete automation because they only need to quantify movements. At baseline, this simplified robot would be able to track position and velocity through sensors or computational methods that analyze image captures of the surgery, and measure force with a smart instrument [67]. The use of a simplified robot designed for surgical education would minimize cost and could be used throughout the training of surgical technical skills.

Fig. 4.

Schematic detailing how the language of surgery would be implemented in surgical education. Trainees can use the language of surgery pre-procedure, intra-procedure, and post-procedure. It can be used for both wet-lab and operating room procedures. Lessons learned in wet-lab training can be used to improve performance in the operating room, and vice versa.

Pre-procedure, trainees would be provided with quantifiable data on how each surgical maneuver is performed based on the description and competency ranges defined by the language of surgery. If applicable, trainees would review data collected from the robot and video recordings from a previous session, which can guide their learning in the upcoming session. The key advantage is that the learning surgeon can make adjustments for each maneuver in a quantitative manner to achieve the metrics that define competency. Taking capsulorhexis as an example, trainees would pay attention to metrics described using the language of surgery, such as hand arc/trajectory, velocity, force, and time to complete the maneuver.

During surgery, objective feedback would be provided by the surgical robot. Trainees would be informed in real time how the maneuver was performed relative to the established maneuver definition. For example, if the learning surgeon moved the instrument at a velocity of 2.5 mm/s instead of 1.5 mm/s during capsulorhexis, the surgeon would receive instant feedback informing them to reduce the instrument speed by 1.0 mm/s. This approach provides guidance based on validated data rather than qualitative feedback to “slow down.” Feedback on other maneuver metrics would be provided in a similar manner, and could be delivered in real time through auditory rather than visual cues so that the surgeon's view of the surgical field is not disrupted and immediate adjustments can be made.
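The capsulorhexis velocity example amounts to a small calculation: compare the measured value with the target and report the signed difference. The following sketch assumes a single target velocity and an optional tolerance; the function name `velocity_feedback` and the message wording are hypothetical, and a real system would draw the target from the established maneuver definition.

```python
def velocity_feedback(measured_mm_s, target_mm_s, tolerance_mm_s=0.0):
    """Return a quantitative correction message for instrument velocity."""
    delta = measured_mm_s - target_mm_s
    if abs(delta) <= tolerance_mm_s:
        return "Velocity within target."
    action = "Reduce" if delta > 0 else "Increase"
    return f"{action} instrument speed by {abs(delta):.1f} mm/s."


# The example from the text: 2.5 mm/s measured against a 1.5 mm/s target.
message = velocity_feedback(2.5, 1.5)
print(message)  # Reduce instrument speed by 1.0 mm/s.
```

The message string could be passed to a text-to-speech or tone generator so that the correction is heard rather than read.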

Post-procedure, OCASE-T algorithms would take the data collected from the robot and from video recording analysis of the procedure and compare these metrics with the established metrics that make up the maneuver definition, giving the trainee a comparison to analyze. For example, at a specific time point during capsulorhexis, the surgeon could learn that the instrument velocity should have been decreased, e.g., from 2.5 mm/s to 1.5 mm/s, to fall within the established range of competency. This information would be used to modify the instrument velocity in a measured fashion in a subsequent procedure. Feedback on other maneuver metrics would be provided in a similar manner. Lastly, OCASE-T could be used to generate a cumulative technical skill score for the procedure, which the trainee can compare to established competency ranges.
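Identifying the specific time points at which a metric left the competent range can be sketched as a scan over a recorded time series. This is an illustrative assumption, not an OCASE-T algorithm from the cited literature: the function name `out_of_range_intervals`, the sampling scheme, and the 1.0 to 2.0 mm/s range are hypothetical.

```python
def out_of_range_intervals(times_s, values, low, high):
    """Return (start, end) time pairs during which values left [low, high].

    An interval starts at the first out-of-range sample and ends at the
    next in-range sample, or at the last sample if the recording ends first.
    """
    if len(times_s) != len(values):
        raise ValueError("times and values must have the same length")
    intervals = []
    start = None
    for t, v in zip(times_s, values):
        outside = v < low or v > high
        if outside and start is None:
            start = t
        elif not outside and start is not None:
            intervals.append((start, t))
            start = None
    if start is not None:
        intervals.append((start, times_s[-1]))
    return intervals


# Made-up velocity recording (mm/s) sampled once per second.
times = [0, 1, 2, 3, 4, 5]
velocity = [1.2, 1.5, 2.5, 2.4, 1.6, 1.4]
flagged = out_of_range_intervals(times, velocity, low=1.0, high=2.0)
print(flagged)
```

Each flagged interval could then be linked to the matching video segment so the trainee can review exactly what the instrument was doing when the metric fell outside the range.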

New trainees would first apply the integrated language of surgery approach with robotic surgery platforms and video analysis during wet-lab sessions on phantoms, which are practice models used to replicate the sensation of performing a surgical maneuver, and then on animal eyes. During this time, the learning surgeon could use virtual simulators, like Eyesi, as additional practice. Ideally, the metrics used to evaluate virtual simulator performance would match the language of surgery metrics that make up the established maneuver definition. It is possible that a set of core competency scores could be used to determine when a learning surgeon can appropriately transition to surgery on patients. Additional practice in the wet-lab could follow, providing an opportunity for the learning surgeon to improve their technique on maneuvers that have low competency scores in a low-stakes environment. With this structured approach, trainees would be able to demonstrate quantifiable improvement of their surgical technical skills with practice. Ultimately, utilizing the integrated language of surgery approach would assist trainees to better understand what to improve on and help standardize training and assessment of surgical skills.

Additional applications for the language of surgery

By instituting quantifiable definitions of surgical movements, the language of surgery can be used to enhance surgical education in a variety of contexts. As discussed previously, trainees can better compare their surgical technical skills to those of an experienced surgeon and to an established range of competency. Quantifiable metrics from maneuver definitions can be used to establish virtual fixture settings, creating safeguards for trainees. Residency programs could incorporate maneuver descriptions and ranges of competency when assessing trainees on their technical performance, making evaluations more objective and less dependent on the individual grader. Experienced surgeons could also use the language of surgery to compare aspects of their technical performance with an established standard or with one another [67].
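One way to picture a virtual fixture setting derived from a maneuver metric is a speed limit on the commanded instrument motion. The Python sketch below is an assumption-laden simplification: the function name and the 2.5 mm/s limit are hypothetical, and real robotic platforms implement virtual fixtures inside their own control loops rather than with a standalone function like this one.

```python
import math


def limit_velocity(velocity_mm_s, max_speed_mm_s):
    """Scale a commanded 3D velocity so its speed never exceeds the limit.

    The direction of motion is preserved; only the magnitude is reduced.
    """
    if max_speed_mm_s < 0:
        raise ValueError("max_speed_mm_s must be non-negative")
    speed = math.hypot(*velocity_mm_s)  # Euclidean norm (Python 3.8+)
    if speed <= max_speed_mm_s:
        return tuple(velocity_mm_s)
    scale = max_speed_mm_s / speed
    return tuple(component * scale for component in velocity_mm_s)


# A 5 mm/s command is scaled back to a hypothetical 2.5 mm/s limit.
print(limit_velocity((3.0, 4.0, 0.0), 2.5))
```

Commands already within the limit pass through unchanged, so the safeguard only intervenes when the trainee leaves the permitted range.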

The language of surgery could also be employed in situations outside of surgical education. Metrics from maneuver definitions can help establish and assess surgical competence when delineating hospital privileges for newly hired surgeons and during medicolegal cases. During clinical trials and with the introduction of new surgical techniques or devices, the language of surgery could also be used to standardize surgical procedures by setting desired ranges of maneuver metrics for surgeons to follow.

Conclusion

Strategies to teach surgical technical skills are evolving, and we emphasize that the language of surgery has substantial potential to improve how trainees learn and master these skills. While the concept has been well described in the engineering literature, the language of surgery has not been widely implemented in surgical training. This points to a possible need for improved communication between engineers and clinicians. Video recording analysis and robotic surgery platforms can be utilized to create and leverage the language of surgery for training purposes, providing a standardized, quantifiable framework to evaluate individuals. Standardized metrics of surgical maneuvers could help to compare the technical skills of both trainees and expert surgeons with an established competency range and with one another. Here, we provide a structured framework for incorporating the language of surgery into ophthalmology surgical education, although this approach could be applied broadly to improve education across all disciplines of surgery.

The following is the supplementary data related to this article.

Video of surgical instrument annotation during video recording analysis following cataract surgery. Cystotome and forceps annotation, indicated by the bright green dots, using artificial intelligence algorithms on a video recording of cataract surgery. Time and tool velocities can be measured.

Funding sources

This work was supported by the Robert Bond Welch Professorship to James T. Handa and an unrestricted grant from Research to Prevent Blindness to the Wilmer Eye Institute, along with a grant from the National Institutes of Health to Shameema Sikder (1R01EY033065).

Ethics approval

Not applicable, as this is a literature-based review article.

CRediT authorship contribution statement

Nathan Pan-Doh: Conceptualization, Investigation, Writing – original draft, Writing – review & editing. Shameema Sikder: Writing – review & editing. Fasika A. Woreta: Writing – review & editing. James T. Handa: Conceptualization, Supervision, Writing – original draft, Writing – review & editing.

Declaration of competing interest

James T. Handa receives grant funding and royalties from Bayer Pharmaceuticals. He also receives grant funding and is a member of the Scientific Advisory Board for Clover Therapeutics, is a member of the Scientific Advisory Board for Seeing Medicines, and serves as a consultant for Nano Retina. Nathan Pan-Doh, Shameema Sikder, and Fasika A. Woreta report no proprietary or commercial interest in any product mentioned or concept discussed in this article.

Acknowledgements

None.

References

- 1. Lorch A., Kloek C. An evidence-based approach to surgical teaching in ophthalmology. Surv Ophthalmol. 2017;62:371–377. doi: 10.1016/J.SURVOPHTHAL.2017.01.003.

- 2. Sidwell R.A. Intraoperative teaching and evaluation in general surgery. Surg Clin North Am. 2021;101:587–595. doi: 10.1016/J.SUC.2021.05.006.

- 3. Walter A.J. Surgical education for the twenty-first century: beyond the apprentice model. Obstet Gynecol Clin North Am. 2006;33:233–236. doi: 10.1016/J.OGC.2006.01.003.

- 4. Puri S., Sikder S. Cataract surgical skill assessment tools. J Cataract Refract Surg. 2014;40:657–665. doi: 10.1016/J.JCRS.2014.01.027.

- 5. Alnafisee N., Zafar S., Vedula S., Sikder S. Current methods for assessing technical skill in cataract surgery. J Cataract Refract Surg. 2021;47:256–264. doi: 10.1097/J.JCRS.0000000000000322.

- 6. Vedula S.S., Ishii M., Hager G.D. Objective assessment of surgical technical skill and competency in the operating room. Annu Rev Biomed Eng. 2017;19:301–325. doi: 10.1146/ANNUREV-BIOENG-071516-044435.

- 7. Lam K., Chen J., Wang Z., Iqbal F.M., Darzi A., Lo B., et al. Machine learning for technical skill assessment in surgery: a systematic review. NPJ Digit Med. 2022;5. doi: 10.1038/S41746-022-00566-0.

- 8. Lin H.C., Shafran I., Murphy T.E., Okamura A.M., Yuh D.D., Hager G.D. Automatic detection and segmentation of robot-assisted surgical motions. Med Image Comput Comput Assist Interv. 2005;8:802–810. doi: 10.1007/11566465_99.

- 9. Channa R., Iordachita I., Handa J.T. Robotic eye surgery. Retina. 2017;37:1220. doi: 10.1097/IAE.0000000000001398.

- 10. Vedula S.S., Malpani A.O., Tao L., Chen G., Gao Y., Poddar P., et al. Analysis of the structure of surgical activity for a suturing and knot-tying task. PloS One. 2016;11. doi: 10.1371/JOURNAL.PONE.0149174.

- 11. Richards C., Rosen J., Hannaford B., Pellegrini C., Sinanan M. Skills evaluation in minimally invasive surgery using force/torque signatures. Surg Endosc. 2000;14:791–798. doi: 10.1007/S004640000230.

- 12. Datta V., Mackay S., Mandalia M., Darzi A. The use of electromagnetic motion tracking analysis to objectively measure open surgical skill in the laboratory-based model. J Am Coll Surg. 2001;193:479–485. doi: 10.1016/S1072-7515(01)01041-9.

- 13. Datta V., Chang A., Mackay S., Darzi A. The relationship between motion analysis and surgical technical assessments. Am J Surg. 2002;184:70–73. doi: 10.1016/S0002-9610(02)00891-7.

- 14. Rosen J., Hannaford B., Richards C.G., Sinanan M.N. Markov modeling of minimally invasive surgery based on tool/tissue interaction and force/torque signatures for evaluating surgical skills. IEEE Trans Biomed Eng. 2001;48:579–591. doi: 10.1109/10.918597.

- 15. Saleh G.M., Voyazis Y., Hance J., Ratnasothy J., Darzi A. Evaluating surgical dexterity during corneal suturing. Arch Ophthalmol. 2006;124:1263–1266. doi: 10.1001/ARCHOPHT.124.9.1263.

- 16. Reiley C.E., Hager G.D. Task versus subtask surgical skill evaluation of robotic minimally invasive surgery. Med Image Comput Comput Assist Interv. 2009;12:435–442. doi: 10.1007/978-3-642-04268-3_54.

- 17. Varadarajan B., Reiley C., Lin H., Khudanpur S., Hager G. Data-derived models for segmentation with application to surgical assessment and training. Med Image Comput Comput Assist Interv. 2009;12:426–434. doi: 10.1007/978-3-642-04268-3_53.

- 18. Kumar R., Jog A., Vagvolgyi B., Nguyen H., Hager G., Chen C., et al. Objective measures for longitudinal assessment of robotic surgery training. J Thorac Cardiovasc Surg. 2012;143:528–534. doi: 10.1016/J.JTCVS.2011.11.002.

- 19. Corvetto M.A., Fuentes C., Araneda A., Achurra P., Miranda P., Viviani P., et al. Validation of the imperial college surgical assessment device for spinal anesthesia. BMC Anesthesiol. 2017;17. doi: 10.1186/S12871-017-0422-3.

- 20. Hayter M.A., Friedman Z., Bould M.D., Hanlon J.G., Katznelson R., Borges B., et al. Validation of the Imperial College Surgical Assessment Device (ICSAD) for labour epidural placement. Can J Anaesth. 2009;56:419–426. doi: 10.1007/S12630-009-9090-1.

- 21. Chin K.J., Tse C., Chan V., Tan J.S., Lupu C.M., Hayter M. Hand motion analysis using the imperial college surgical assessment device: validation of a novel and objective performance measure in ultrasound-guided peripheral nerve blockade. Reg Anesth Pain Med. 2011;36:213–219. doi: 10.1097/AAP.0B013E31820D4305.

- 22. Smith P., Tang L., Balntas V., Young K., Athanasiadis Y., Sullivan P., et al. “PhacoTracking”: an evolving paradigm in ophthalmic surgical training. JAMA Ophthalmol. 2013;131:659–661. doi: 10.1001/JAMAOPHTHALMOL.2013.28.

- 23. Ahmidi N., Poddar P., Jones J.D., Vedula S.S., Ishii L., Hager G.D., et al. Automated objective surgical skill assessment in the operating room from unstructured tool motion in septoplasty. Int J Comput Assist Radiol Surg. 2015;10:981–991. doi: 10.1007/S11548-015-1194-1.

- 24. Kim T.S., Malpani A., Reiter A., Hager G.D., Sikder S., Vedula S.S. Crowdsourcing annotation of surgical instruments in videos of cataract surgery. Lect Notes Comput Sci. 2018;11043:121–130.

- 25. Kim T., O’Brien M., Zafar S., Hager G., Sikder S., Vedula S. Objective assessment of intraoperative technical skill in capsulorhexis using videos of cataract surgery. Int J Comput Assist Radiol Surg. 2019;14:1097–1105. doi: 10.1007/S11548-019-01956-8.

- 26. Balal S., Smith P., Bader T., Tang H., Sullivan P., Thomsen A., et al. Computer analysis of individual cataract surgery segments in the operating room. Eye (Lond). 2019;33:313–319. doi: 10.1038/S41433-018-0185-1.

- 27. Yu F., Silva Croso G., Kim T., Song Z., Parker F., Hager G., et al. Assessment of automated identification of phases in videos of cataract surgery using machine learning and deep learning techniques. JAMA Netw Open. 2019;2. doi: 10.1001/JAMANETWORKOPEN.2019.1860.

- 28. Ahmidi N., Tao L., Sefati S., Gao Y., Lea C., Haro B.B., et al. A dataset and benchmarks for segmentation and recognition of gestures in robotic surgery. IEEE Trans Biomed Eng. 2017;64:2025. doi: 10.1109/TBME.2016.2647680.

- 29. Malpani A., Vedula S.S., Lin H.C., Hager G.D., Taylor R.H. Effect of real-time virtual reality-based teaching cues on learning needle passing for robot-assisted minimally invasive surgery: a randomized controlled trial. Int J Comput Assist Radiol Surg. 2020;15:1187–1194. doi: 10.1007/S11548-020-02156-5.

- 30. Nazari T., Vlieger E.J., Dankbaar M.E.W., van Merriënboer J.J.G., Lange J.F., Wiggers T. Creation of a universal language for surgical procedures using the step-by-step framework. BJS Open. 2018;2:151–157. doi: 10.1002/BJS5.47.

- 31. Binenbaum G., Volpe N. Ophthalmology resident surgical competency: a national survey. Ophthalmology. 2006;113:1237–1244. doi: 10.1016/J.OPHTHA.2006.03.026.

- 32. Ramani S., Pradeep T.G., Sundaresh D.D. Effect of wet-laboratory training on resident performed manual small-incision cataract surgery. Indian J Ophthalmol. 2018;66:793. doi: 10.4103/IJO.IJO_1041_17.

- 33. Lee R., Raison N., Lau W., Aydin A., Dasgupta P., Ahmed K., et al. A systematic review of simulation-based training tools for technical and non-technical skills in ophthalmology. Eye (Lond). 2020;34:1737–1759. doi: 10.1038/S41433-020-0832-1.

- 34. Messick S. Meaning and values in test validation: the science and ethics of assessment. Educ Res. 1989;18:5–11. doi: 10.3102/0013189X018002005.

- 35. EYESI SURGICAL Training Simulator for Intraocular Surgery 2021.

- 36. Ferris J., Donachie P., Johnston R., Barnes B., Olaitan M., Sparrow J. Royal College of Ophthalmologists’ National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br J Ophthalmol. 2020;104:324–329. doi: 10.1136/BJOPHTHALMOL-2018-313817.

- 37. Ahmed Y., Scott I., Greenberg P. A survey of the role of virtual surgery simulators in ophthalmic graduate medical education. Graefes Arch Clin Exp Ophthalmol. 2011;249:1263–1265. doi: 10.1007/S00417-010-1537-0.

- 38. Lowry E., Porco T., Naseri A. Cost analysis of virtual-reality phacoemulsification simulation in ophthalmology training programs. J Cataract Refract Surg. 2013;39:1616–1617. doi: 10.1016/J.JCRS.2013.08.015.

- 39. Young B., Greenberg P. Is virtual reality training for resident cataract surgeons cost effective? Graefes Arch Clin Exp Ophthalmol. 2013;251:2295–2296. doi: 10.1007/S00417-013-2317-4.

- 40. Jacobsen M.F., Konge L., Alberti M., La Cour M., Park Y.S., Thomsen A.S.S. Robot-assisted vitreoretinal surgery improves surgical accuracy compared with manual surgery: a randomized trial in a simulated setting. Retina. 2020;40:2091. doi: 10.1097/IAE.0000000000002720.

- 41. Mahr M., Hodge D. Construct validity of anterior segment anti-tremor and forceps surgical simulator training modules: attending versus resident surgeon performance. J Cataract Refract Surg. 2008;34:980–985. doi: 10.1016/J.JCRS.2008.02.015.

- 42. Selvander M., Asman P. Ready for OR or not? Human reader supplements Eyesi scoring in cataract surgical skills assessment. Clin Ophthalmol. 2013;7:1973–1977. doi: 10.2147/OPTH.S48374.

- 43. Selvander M., Asman P. Cataract surgeons outperform medical students in Eyesi virtual reality cataract surgery: evidence for construct validity. Acta Ophthalmol. 2013;91:469–474. doi: 10.1111/J.1755-3768.2012.02440.X.

- 44. Spiteri A., Aggarwal R., Kersey T., Sira M., Benjamin L., Darzi A., et al. Development of a virtual reality training curriculum for phacoemulsification surgery. Eye (Lond). 2014;28:78–84. doi: 10.1038/EYE.2013.211.

- 45. Bakshi S., Lin S., Ting D., Chiang M., Chodosh J. The era of artificial intelligence and virtual reality: transforming surgical education in ophthalmology. Br J Ophthalmol. 2020. doi: 10.1136/BJOPHTHALMOL-2020-316845.

- 46. Cremers S., Ciolino J., Ferrufino-Ponce Z., Henderson B. Objective assessment of skills in intraocular surgery (OASIS). Ophthalmology. 2005;112:1236–1241. doi: 10.1016/J.OPHTHA.2005.01.045.

- 47. Golnik K.C., Beaver H., Gauba V., Lee A.G., Mayorga E., Palis G., et al. Cataract surgical skill assessment. Ophthalmology. 2011;118:427–427.e5. doi: 10.1016/J.OPHTHA.2010.09.023.

- 48. Farooqui J.H., Jaramillo A., Sharma M., Gomaa A. Use of modified international council of ophthalmology-ophthalmology surgical competency assessment rubric (ICO-OSCAR) for phacoemulsification-wet lab training in residency program. Indian J Ophthalmol. 2017;65:898. doi: 10.4103/IJO.IJO_73_17.

- 49. Thia B., Wong N., Sheth S. Video recording in ophthalmic surgery. Surv Ophthalmol. 2019;64:570–578. doi: 10.1016/J.SURVOPHTHAL.2019.01.005.

- 50. Paley G.L., Grove R., Sekhar T.C., Pruett J., Stock M.V., Pira T.N., et al. Crowdsourced assessment of surgical skill proficiency in cataract surgery. J Surg Educ. 2021;78:1077–1088. doi: 10.1016/J.JSURG.2021.02.004.

- 51. Gerber M., Pettenkofer M., Hubschman J. Advanced robotic surgical systems in ophthalmology. Eye (Lond). 2020;34:1554–1562. doi: 10.1038/S41433-020-0837-9.

- 52. Rahimy E., Wilson J., Tsao T., Schwartz S., Hubschman J. Robot-assisted intraocular surgery: development of the IRISS and feasibility studies in an animal model. Eye (Lond). 2013;27:972–978. doi: 10.1038/EYE.2013.105.

- 53. Sunshine S., Balicki M., He X., Olds K., Kang J., Gehlbach P., et al. A force-sensing microsurgical instrument that detects forces below human tactile sensation. Retina. 2013;33:200–206. doi: 10.1097/IAE.0B013E3182625D2B.

- 54. Bourcier T., Chammas J., Becmeur P., Sauer A., Gaucher D., Liverneaux P., et al. Robot-assisted simulated cataract surgery. J Cataract Refract Surg. 2017;43:552–557. doi: 10.1016/J.JCRS.2017.02.020.

- 55. Edwards T., Xue K., Meenink H., Beelen M., Naus G., Simunovic M., et al. First-in-human study of the safety and viability of intraocular robotic surgery. Nat Biomed Eng. 2018;2:649–656. doi: 10.1038/S41551-018-0248-4.

- 56. Gijbels A., Smits J., Schoevaerdts L., Willekens K., Vander Poorten E., Stalmans P., et al. In-human robot-assisted retinal vein cannulation, a world first. Ann Biomed Eng. 2018;46:1676–1685. doi: 10.1007/S10439-018-2053-3.

- 57. Draelos M., Tang G., Keller B., Kuo A., Hauser K., Izatt J.A. Optical coherence tomography guided robotic needle insertion for deep anterior lamellar Keratoplasty. IEEE Trans Biomed Eng. 2020;67:2073. doi: 10.1109/TBME.2019.2954505.

- 58. Keller B., Draelos M., Zhou K., Qian R., Kuo A.N., Konidaris G., et al. Optical coherence tomography-guided robotic ophthalmic microsurgery via reinforcement learning from demonstration. IEEE Trans Robot. 2020;36:1207–1218. doi: 10.1109/TRO.2020.2980158.

- 59. Willekens K., Gijbels A., Smits J., Schoevaerdts L., Blanckaert J., Feyen J., et al. Phase I trial on robot assisted retinal vein cannulation with ocriplasmin infusion for central retinal vein occlusion. Acta Ophthalmol. 2021;99:90–96. doi: 10.1111/AOS.14480.

- 60. Üneri A., Balicki M.A., Handa J., Gehlbach P., Taylor R.H., Iordachita I. New steady-hand eye robot with micro-force sensing for vitreoretinal surgery. Proc IEEE RAS EMBS Int Conf Biomed Robot Biomechatron. 2010;2010:814. doi: 10.1109/BIOROB.2010.5625991.

- 61. Yu H., Shen J.-H., Shah R.J., Simaan N., Joos K.M. Evaluation of microsurgical tasks with OCT-guided and/or robot-assisted ophthalmic forceps. Biomed Opt Express. 2015;6:457. doi: 10.1364/BOE.6.000457.

- 62. Nambi M., Bernstein P.S., Abbott J.J. A compact telemanipulated retinal-surgery system that uses commercially available instruments with a quick-change adapter. J Med Robot Res. 2016;1. doi: 10.1142/S2424905X16300016.

- 63. de Smet M.D., Meenink T.C.M., Janssens T., Vanheukelom V., Naus G.J.L., Beelen M.J., et al. Robotic assisted cannulation of occluded retinal veins. PloS One. 2016;11. doi: 10.1371/JOURNAL.PONE.0162037.

- 64. Willekens K., Gijbels A., Schoevaerdts L., Esteveny L., Janssens T., Jonckx B., et al. Robot-assisted retinal vein cannulation in an in vivo porcine retinal vein occlusion model. Acta Ophthalmol. 2017;95:270–275. doi: 10.1111/AOS.13358.

- 65. Urias M., Patel N., Ebrahimi A., Iordachita I., Gehlbach P. Robotic retinal surgery impacts on scleral forces: in vivo study. Transl Vis Sci Technol. 2020;9:1–9.

- 66. Cutler N., Balicki M., Finkelstein M., Wang J., Gehlbach P., McGready J., et al. Auditory force feedback substitution improves surgical precision during simulated ophthalmic surgery. Invest Ophthalmol Vis Sci. 2013;54:1316. doi: 10.1167/IOVS.12-11136.

- 67. Hubschman J., Son J., Allen B., Schwartz S., Bourges J. Evaluation of the motion of surgical instruments during intraocular surgery. Eye (Lond). 2011;25:947–953. doi: 10.1038/EYE.2011.80.

- 68. MacLachlan R.A., Becker B.C., Tabarés J.C., Podnar G.W., Lobes L.A., Jr., et al. Micron: an actively stabilized handheld tool for microsurgery. IEEE Trans Robot. 2012;28:195. doi: 10.1109/TRO.2011.2169634.

- 69. Mitsuishi M., Morita A., Sugita N., Sora S., Mochizuki R., Tanimoto K., et al. Master-slave robotic platform and its feasibility study for micro-neurosurgery. Int J Med Robot. 2013;9:180–189. doi: 10.1002/RCS.1434.

- 70. Wilson J., Gerber M., Prince S., Chen C., Schwartz D., Hubschman J., et al. Intraocular robotic interventional surgical system (IRISS): mechanical design, evaluation, and master-slave manipulation. Int J Med Robot. 2018;14. doi: 10.1002/RCS.1842.

- 71. Zhang D., Chen J., Li W., Bautista Salinas D., Yang G. A microsurgical robot research platform for robot-assisted microsurgery research and training. Int J Comput Assist Radiol Surg. 2020;15:15–25. doi: 10.1007/S11548-019-02074-1.

- 72. Ergeneman O., Dogangil G., Kummer M.P., Abbott J.J., Nazeeruddin M.K., Nelson B.J. A magnetically controlled wireless optical oxygen sensor for intraocular measurements. IEEE Sens J. 2008;8:29–37. doi: 10.1109/JSEN.2007.912552.

- 73. Gimbel H.V., Neuhann T. Development, advantages, and methods of the continuous circular capsulorhexis technique. J Cataract Refract Surg. 1990;16:31–37. doi: 10.1016/S0886-3350(13)80870-X.
