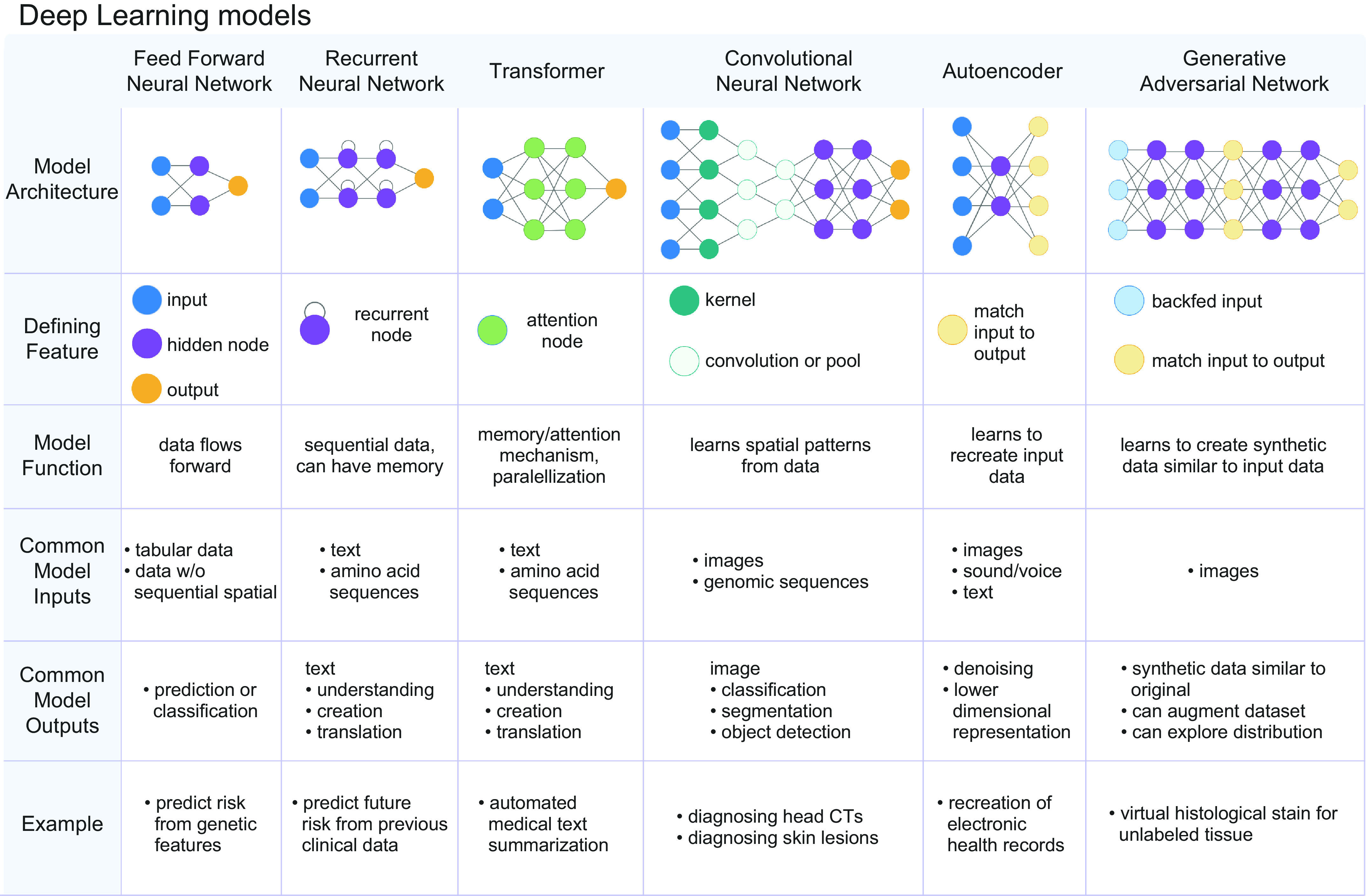

FIGURE 4.

Deep learning models fall into numerous classes, which can be distinguished by the type of data each was designed to handle. Feed-forward networks are the bread-and-butter neural networks and are best suited to tabular data. Recurrent neural networks contain a recurrent node that carries information from previously seen inputs forward, giving the model a form of memory and making it adept at handling sequential data. Transformers are built from attention mechanisms, which give them longer-range memory and allow greater efficiency and parallelization, so they can handle longer sequences and larger data sets. Convolutional neural networks are defined by repeated convolutional layers, which are adept at analyzing the spatially arranged patterns commonly seen in images. Autoencoders consist of an encoder and a decoder: the encoder featurizes the input and the decoder recreates it, so the model learns the underlying patterns and structure within the data. Generative adversarial networks consist of a pair of adversarial models, one of which endeavors to generate synthetic data while the other endeavors to detect it. Image created with BioRender.com, with permission.
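To make the contrast between recurrent and attention-based processing concrete, the following is a minimal sketch in plain Python, not an implementation from any particular library. The function names (`rnn_forward`, `self_attention`), the toy weights, and the input sequence are all hypothetical, and the attention function assumes identity projections (queries, keys, and values are the raw inputs), which real transformers replace with learned matrices.

```python
import math

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]

def rnn_forward(xs, W_x, W_h):
    """Recurrent pass: each hidden state depends on the previous one,
    so the time steps have to be processed in order."""
    h = [0.0] * len(W_h)  # initial hidden state (the model's "memory")
    states = []
    for x in xs:
        pre = [a + b for a, b in zip(matvec(W_x, x), matvec(W_h, h))]
        h = [math.tanh(p) for p in pre]
        states.append(h)
    return states

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(xs):
    """Scaled dot-product self-attention with identity projections
    (assumption: queries = keys = values = xs, for illustration only)."""
    d = len(xs[0])
    out = []
    # Each position attends to every position directly; no output depends
    # on another position's output, so this loop could run in parallel.
    for q in xs:
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in xs]
        weights = softmax(scores)
        out.append([sum(w * v[j] for w, v in zip(weights, xs)) for j in range(d)])
    return out

# Hypothetical toy sequence of three 2-dimensional inputs and toy weights.
xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
W_x = [[0.5, -0.5], [0.3, 0.8]]
W_h = [[0.1, 0.0], [0.0, 0.1]]

rnn_states = rnn_forward(xs, W_x, W_h)
attn_out = self_attention(xs)
```

In the recurrent pass, information from the first input reaches the last step only through the chain of hidden states, whereas in the attention pass every position reads every other position in one step. That direct access is one way to picture the longer-range memory and parallelization described above.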