Abstract

BACKGROUND:

Payers are faced with making coverage and reimbursement decisions based on the best available evidence. Often these decisions apply to patient populations, provider networks, and care settings not typically studied in clinical trials. Treatment effectiveness evidence is increasingly available from electronic health records, registries, and administrative claims. However, little is known about when and what types of real-world evidence (RWE) studies inform pharmacy and therapeutic (P&T) committee decisions.

OBJECTIVE:

To evaluate evidence sources cited in P&T committee monographs and therapeutic class reviews and assess the design features and quality of cited RWE studies.

METHODS:

A convenience sample of representatives from pharmacy benefit management, health system, and health plan organizations provided recent P&T monographs and therapeutic class reviews (or references from such documents). Two investigators examined and grouped references into major categories (published studies, unpublished studies, and other/unknown) and multiple subcategories (e.g., product label, clinical trials, RWE, systematic reviews). Cited comparative RWE was reviewed to assess design features (e.g., population, data source, comparators) and quality using the Good ReseArch for Comparative Effectiveness (GRACE) Checklist.

RESULTS:

Investigators evaluated 565 references cited in 27 monographs/therapeutic class reviews from 6 managed care organizations. Therapeutic class reviews most frequently cited published clinical trials (35.3%, 155/439), while single-product monographs relied most on manufacturer-supplied information (42.1%, 53/126). Published RWE made up 4.8% (21/439) of therapeutic class review references and none (0/126) of the single-product monograph references. Of the 21 RWE studies, 12 were comparative and assessed patient care settings and outcomes typically not included in clinical trials (community ambulatory settings [10], long-term safety [8]). The comparative RWE studies were most frequently based on registry data (6), conducted in the United States (6), and funded by the pharmaceutical industry (5). GRACE Checklist ratings suggested that the data and methods of these comparative RWE studies were generally of high quality.

CONCLUSIONS:

RWE was infrequently cited in P&T materials, even among therapeutic class reviews where RWE is more readily available. Although few P&T materials cited RWE, the comparative RWE studies were generally high quality. More research is needed to understand when and what types of real-world studies can more routinely inform coverage and reimbursement decisions.

What is already known about this subject

Formulary committee monographs and therapeutic class reviews include many sources of evidence but primarily rely on clinical studies.

Real-world evidence (RWE) is becoming more available as health plans and others evaluate existing encounter and utilization data to make coverage decisions.

Previous studies of managed care decision-maker perceptions found that RWE is used in decision making, and use is expected to increase in the future.

What this study adds

Clinical studies and manufacturer-generated evidence were most commonly used in product monographs and therapeutic class reviews.

RWE was infrequently cited in pharmacy and therapeutic (P&T) committee materials.

Comparative RWE studies included in P&T materials were of high quality.

Payers have to make coverage and reimbursement decisions with the available evidence. These decisions involve more diverse patient populations, broader provider networks, different care settings, and treatment comparisons than are typically addressed by the efficacy data used for product approval. For many decisions, administrative data, electronic health records, registries, and other datasets can supplement existing efficacy information, identify differences in treatment response among patients, extend findings to usual care settings, and compare treatment alternatives.

For purposes of this article, we adopt the definition of real-world evidence (RWE) as proposed by the U.S. Food and Drug Administration (FDA): “We believe it refers to information on health care that is derived from multiple sources outside typical clinical research settings, including electronic health records (EHRs), claims and billing data, product and disease registries, and data gathered through personal devices and health applications.”1 Analyzing real-world patient experiences can inform decisions on how to best use available and emerging health care technologies.

In recognition of this potential, previous surveys of managed care decision makers indicate that although RWE studies are currently used to a limited extent, their use is expected to increase in the future.2 However, barriers such as a lack of high-quality studies, lack of conclusive results, perceived legislative barriers, lack of relevant outcomes, and research design flaws need to be overcome.3,4 Other factors that may affect whether RWE is used include the timing of study results, their relevance, and the transparency of the matching or statistical techniques used to control for bias. Because of these issues, decision makers rate RWE as less important and less useful than other study designs and are likely to default to familiar sources of evidence, such as randomized controlled trials (RCTs), or to rely on expert opinion.4

Over the past several years, multiple organizations have invested in improving the collection, curation, and analysis of real-world data. For example, in the public sector, the FDA-funded Sentinel project, the Patient-Centered Outcomes Research Institute (PCORI)-sponsored National Patient-Centered Clinical Research Network, and the National Institutes of Health Precision Medicine Initiative Cohort Program have invested hundreds of millions of dollars in infrastructure to speed understanding of safety, treatment effectiveness, and personalized approaches to care. In the private sector, many large health insurers have created internal analysis groups to evaluate their own data, while other insurers and providers collaborate with, or sell data to, third parties that conduct real-world studies. In parallel, numerous bodies have issued best practices and guidelines to improve the conduct and evaluation of studies using these data sources.5-13

The field of RWE is maturing to the point where evidence from sources other than clinical trials is increasingly of the quality and quantity needed to assist decision makers in a complex and dynamic health care environment. However, little is known about the actual (vs. self-reported) use of RWE to inform coverage and reimbursement decisions. The current study builds on the literature by addressing 2 objectives: (1) Is RWE used to inform payer decision making in pharmacy and therapeutic (P&T) committee monographs? (2) When RWE is used in product monographs and therapeutic class reviews, what are the study features, and are the studies of high quality?

Methods

To distinguish actual from perceived use of RWE to inform health care delivery decisions in managed care, we evaluated the P&T committee monographs and therapeutic class reviews that health plans and other organizations use when making coverage decisions. A convenience sample of pharmacists and physicians employed by managed care organizations (MCOs), pharmacy benefit managers (PBMs), health care systems, and government agencies was invited to participate in the study. Participants were asked to provide 3 product monographs and 2 therapeutic class reviews that had been presented to their P&T committee within the previous 24 months. Owing to concerns about proprietary interests, 1 organization sent only the references from such documents.

For each document (monograph, therapeutic class review, or reference section), we collected information on the therapies of interest, therapeutic area, whether the products were considered specialty medications, the specific target population for the product(s), and the monograph author source (if available). The purpose of each document was categorized as 1 of the following: (a) scheduled formulary review, (b) update of a previous decision, (c) new coverage decision, (d) change in formulary coverage, (e) update to utilization management, or (f) unknown.

Two investigators (Hurwitz and Malone) tallied the cited references from each document, independently reviewed them, and grouped them by source of evidence. Primary literature reports classified as comparative RWE were obtained and evaluated further using a separate data collection form (available at http://cer.pharmacy.arizona.edu/news/RWE-reference-review-tool). For purposes of this study, we define a comparative observational study as one having more than 1 treatment/intervention, in which the outcome of interest is evaluated across multiple technologies. The 2 investigators independently reviewed and evaluated these comparative observational studies, noting additional features related to study relevance (e.g., patient population, care setting, interventions, primary outcomes, country of origin) and conduct (e.g., data sources, author affiliations, and funding sources). To estimate study impact, we recorded the number of times each article was cited (per Google Scholar as of May 6, 2016), as well as the journal impact factor (Web of Knowledge Journal Citation Reports) and journal type (i.e., general medicine, specialty or subspecialty medicine, managed care, health services research/policy, and other).
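The grouping step and the comparative-RWE rule described above can be sketched in Python. This is a hypothetical illustration, not the investigators' data collection form: the `Reference` record, its two fields, and the category strings are assumptions chosen to mirror the categories named in the text.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Reference:
    """Hypothetical record for one cited reference (fields are illustrative)."""
    category: str          # evidence source, e.g., "clinical trial", "RWE", "product label"
    n_interventions: int   # number of treatments/interventions compared


def is_comparative_rwe(ref: Reference) -> bool:
    """Comparative RWE: an observational (RWE) study with more than 1 treatment/intervention."""
    return ref.category == "RWE" and ref.n_interventions > 1


def tally_by_category(refs: list[Reference]) -> Counter:
    """Count cited references by evidence source."""
    return Counter(ref.category for ref in refs)


# Toy references (hypothetical, not study data).
refs = [
    Reference("clinical trial", 2),
    Reference("RWE", 1),            # single-treatment observational study: RWE, not comparative
    Reference("RWE", 3),            # observational study comparing 3 treatments
    Reference("product label", 1),
]
print(tally_by_category(refs))
print(sum(is_comparative_rwe(r) for r in refs))
```

Keeping the comparative flag separate from the evidence category mirrors the two-stage process in the text, where all RWE references are tallied first and only those comparing more than 1 treatment/intervention go on to the GRACE review.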

To evaluate the quality of the comparative RWE studies, the investigators (Hurwitz and Malone) used the 11-item Good ReseArch for Comparative Effectiveness (GRACE) Checklist (version 5.0).14 The validated GRACE Checklist assesses whether the quality of the data (6 items) and of the methods (5 items) is sufficient to address the study's purpose. The investigators then met to discuss their ratings and reach consensus on the GRACE Checklist items and general study characteristics.
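Table 1 reports GRACE results item by item, as the number and percentage of the 12 studies rated "yes," rather than as a single total score. A minimal Python sketch of that per-item summary follows. The item codes (D1 to D6, M1 to M5) follow Table 1, but the data layout (one dict of item code to response string per study), the function name, and the toy ratings are hypothetical. For simplicity, every item is treated as a single yes/no response, although Table 1 splits M5 into two "yes" subcategories.

```python
from collections import Counter

# GRACE Checklist v5.0 item codes as labeled in Table 1: 6 data items, 5 methods items.
ITEMS = [f"D{i}" for i in range(1, 7)] + [f"M{i}" for i in range(1, 6)]


def summarize_grace(ratings: list[dict[str, str]]) -> dict[str, tuple[int, float]]:
    """Return (n rated 'yes', % of all studies) for each GRACE item.

    The denominator is every study reviewed, so 'no', 'unclear', and 'N/A'
    responses (and unrated items) all count against the percentage.
    """
    n_studies = len(ratings)
    yes_counts = Counter(
        item for study in ratings for item, answer in study.items() if answer == "yes"
    )
    return {
        item: (yes_counts[item], round(100 * yes_counts[item] / n_studies, 1))
        for item in ITEMS
    }


# Hypothetical ratings for 3 studies (illustrative only, not study data).
toy = [
    {"D1": "yes", "D2": "yes", "M1": "no"},
    {"D1": "no", "D2": "yes", "M1": "yes"},
    {"D1": "yes", "D2": "yes", "M1": "unclear"},
]
summary = summarize_grace(toy)
print(summary["D1"], summary["D2"], summary["M1"])
```

Using all studies as the denominator matches how Table 1 reports items such as D3, where the single study rated "N/A" is not counted as "yes."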

Results

A total of 6 MCOs supplied 27 monographs or therapeutic class reviews. These organizations included 2 PBMs, 2 health plans, 1 quasi-governmental provider, and 1 contract pharmacy benefit consulting firm. Of the 27 documents, 15 were therapeutic class reviews and 12 were single-product monographs. Specialty pharmaceuticals were the subject of 4 single-product monographs and 4 therapeutic class reviews. The purposes of these 27 documents were new coverage decisions (10), changes in formulary coverage (7), and scheduled formulary reviews (3); the purposes of the remaining 7 documents could not be verified. The treatment areas were cardiovascular disease (5), diabetes (5), autoimmune disorders (3), chronic obstructive pulmonary disease (3), hepatitis C (3), mental health (2), and others (heart failure, weight loss, anticoagulation, contraception, epilepsy, and multicentric Castleman disease). Across the 27 documents, 565 references were cited, ranging from 1 to 110 references per document (mean = 21, standard deviation [SD] = 24).

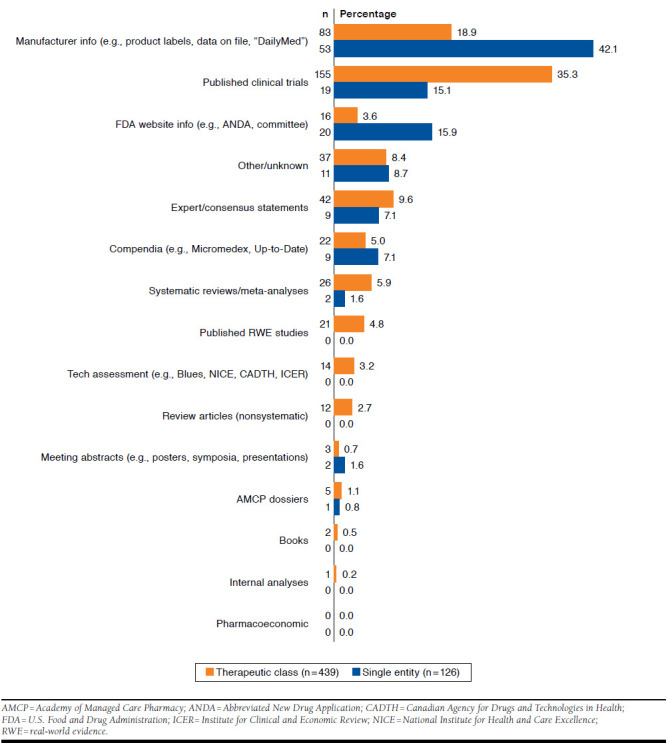

Figure 1 shows the distribution of references by evidence source and type of review (therapeutic class review or single-product monograph). Overall, the most frequently cited evidence came from clinical trials (n = 174/565, 31%), followed by manufacturer-provided information (n = 136/565, 24%; e.g., product labels, “DailyMed”). Systematic reviews, compendia, FDA reports, and expert consensus statements each made up 5%-9% of the 565 references. Published RWE accounted for 4% of references (n = 21/565) and third-party technology assessments for 3%, while nonsystematic review articles, Academy of Managed Care Pharmacy (AMCP) dossiers, books, and meeting abstracts each accounted for 2% or less of the cited references. Only 1 monograph cited internal data analyses.
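The percentages reported above and in the Abstract follow directly from the stated reference counts. A short Python check, using only counts given in the text (the labels and dictionary layout are illustrative):

```python
def share(count: int, total: int, digits: int = 1) -> float:
    """Percentage of references in a category, rounded to `digits` decimals."""
    return round(100 * count / total, digits)


# Counts reported in the text: (references in category, denominator).
reported = {
    "clinical trials, all documents": (174, 565),
    "manufacturer information, all documents": (136, 565),
    "published RWE, all documents": (21, 565),
    "published clinical trials, class reviews": (155, 439),
    "manufacturer information, single-product monographs": (53, 126),
    "published RWE, class reviews": (21, 439),
}

for label, (n, total) in reported.items():
    print(f"{label}: {n}/{total} = {share(n, total)}%")
```

Rounded to whole numbers (e.g., `share(174, 565, 0)`), the first three values give the 31%, 24%, and 4% reported above; the last three match the 35.3%, 42.1%, and 4.8% reported in the Abstract.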

FIGURE 1.

Proportions of Evidence Sources by Type of Review

Only 21 RWE studies were identified among the 565 references.15-35 Of these observational studies, 12 were considered to be comparative RWE studies (i.e., observational studies having more than 1 treatment/intervention compared).18-24,27,28,31,33,35 Ten of the 12 comparative RWE studies were from a single therapeutic class review evaluating various biological products for treating immunological disorders. The remaining 2 references came from separate therapeutic class reviews, one concerning pulmonary hypertension24 and the other involving incretin mimetic products and glucagon-like peptide-1 receptor agonists for management of type 2 diabetes mellitus.18

The characteristics of the 12 comparative RWE studies are displayed in Table 1. Most of the studies focused on narrow or restricted patient populations (10/12, 83%) in community/ambulatory settings (10/12, 83%) and included some, but not all, comparators for the therapeutic indication (7/12, 58%). The primary outcomes were long-term safety (8/12, 67%), long-term effectiveness (5/12, 42%), or a combination of these and other outcomes (e.g., short-term efficacy, short-term safety, and adherence). No study examined costs/health care utilization. Six of the 12 studies (50%) relied on registry data, 4 (33%) used electronic health record data, and 2 (17%) used MedWatch reports to the FDA. None of the studies used administrative claims data. Six of the 12 studies (50%) were conducted outside of the United States (i.e., Europe and Japan), and 11/12 (92%) included authors from the organization owning the data. The pharmaceutical industry was the most frequent source of funding (5/12, 42%), followed by federal agencies (3/12, 25%).

TABLE 1.

Characteristics of the Comparative Observational Research Studies (N = 12)

| Characteristic | % | n |
|---|---|---|
| Patient population | | |
| Broad (few restrictions) | 16.7 | 2 |
| Some restrictions | 16.7 | 2 |
| Narrow | 66.7 | 8 |
| Patient subpopulation | 0.0 | 0 |
| Care setting | | |
| Hospital | 0.0 | 0 |
| Community/ambulatory | 83.3 | 10 |
| Unknown | 16.7 | 2 |
| Other | 0.0 | 0 |
| Interventions compared | | |
| Single comparator | 0.0 | 0 |
| Some but not all comparators | 58.3 | 7 |
| All comparators for a given therapeutic indication | 33.3 | 4 |
| Unknown | 8.3 | 1 |
| Primary outcomeᵃ | | |
| Short-term efficacy | 25.0 | 3 |
| Adherence | 16.7 | 2 |
| Long-term effectiveness | 41.7 | 5 |
| Long-term safety | 66.7 | 8 |
| Costs/health care utilization | 0.0 | 0 |
| Other: short-term safety | 8.3 | 1 |
| Observational study data source | | |
| Claims | 0.0 | 0 |
| Registry | 50.0 | 6 |
| Electronic health records | 33.3 | 4 |
| Other: MedWatch reports to FDA | 16.7 | 2 |
| Study location | | |
| Europe | 41.7 | 5 |
| Japan | 8.3 | 1 |
| United States | 50.0 | 6 |
| Funding source(s) | | |
| Federal (FDA, NIH) | 25.0 | 3 |
| Pharmaceutical industry | 41.7 | 5 |
| Foundation | 8.3 | 1 |
| No funding | 8.3 | 1 |
| Unknown/not mentioned | 16.7 | 2 |
| Author affiliationsᵃ | | |
| University | 91.7 | 11 |
| Research centerᵇ | 41.7 | 5 |
| Government | 25.0 | 3 |
| Industryᶜ | 33.3 | 4 |
| Professional association | 8.3 | 1 |
| Other: hospital, private clinics, foundation | 25.0 | 3 |
| Analysis conducted by organization owning the dataᵈ | 91.7 | 11 |
| GRACE Principles-based evaluations of study data (D) and methods (M)ᵉ | | |
| D1. Treatment and/or important details of treatment exposure were adequately recorded for the study purpose in the data source(s). | 58.3 | 7 |
| D2. Primary outcomes were adequately recorded for the study purpose (e.g., available in sufficient detail through data source[s]). | 100.0 | 12 |
| D3. Primary clinical outcomes were measured objectively rather than subject to clinical judgment (e.g., opinion about whether the patient’s condition has improved).ᶠ | 91.7 | 11 |
| D4. Primary outcomes were validated, adjudicated, or otherwise known to be valid in a similar population. | 91.7 | 11 |
| D5. Primary outcomes were measured or identified in an equivalent manner between the treatment/intervention group and the comparison group(s). | 83.3 | 10 |
| D6. Important covariates that may be known confounders or effect modifiers were available and recorded. | 66.7 | 8 |
| M1. Study (or analysis) population was restricted to new initiators of treatment or those starting a new course of treatment. | 50.0 | 6 |
| M2. If 1 or more comparison groups were used, they were either (a) concurrent comparators or (b) historical comparison groups whose use the authors justified.ᵍ | 75.0 | 9 |
| M3. Important covariates, confounding, and effect modifying variables were taken into account in the design and/or analysis. | 66.7 | 8 |
| M4. Classification of exposed and unexposed person-time was free of “immortal time bias.” | 91.7 | 11 |
| M5. Meaningful analyses were conducted to test key assumptions on which primary results are based. | | |
| Yes, and primary results did not substantially change. | 25.0 | 3 |
| Yes, and primary results changed substantially. | 41.7 | 5 |

ᵃSelected all that applied (i.e., sums to more than 100%).

ᵇNongovernmental-, nonuniversity-affiliated institution.

ᶜFor-profit company, including, but not limited to, pharmaceutical companies.

ᵈAny coauthor affiliated with the organization responsible for the data.

ᵉAdapted from “GRACE Principles: A validated checklist for evaluating the quality of observational cohort studies for decision-making support (v 5.0),” from GRACE Initiative, available at https://www.graceprinciples.org/.

ᶠ“N/A: primary outcome not clinical (e.g., PROs)” was selected for the remaining study.

ᵍEvaluators rated “No” for 2 studies and “Not Applicable” for the third, although “Not Applicable” is not a response option that actually appears for this item on the GRACE Checklist.

FDA = U.S. Food & Drug Administration; NIH = National Institutes of Health.

The number of times each of the 12 comparative RWE studies was cited (a proxy for study impact) ranged from 2 to 479 (mean = 124, SD = 158). The 2015 impact factors of the journals that published these 12 studies (based on Web of Knowledge Journal Citation Reports, accessed May 6, 2016) ranged from 0 to 17.8 (mean = 6.9, SD = 5.3). For reference, at the time of this assessment the Journal of Managed Care and Specialty Pharmacy had an impact factor of 2.2, the British Medical Journal 19.7, and the New England Journal of Medicine 59.6.

Evaluation of Study Quality Using the GRACE Checklist

Data Quality.

All 12 comparative RWE studies adequately recorded their primary outcomes (100%), and in most studies the outcomes were measured objectively (92%), were validated or otherwise known to be valid (92%), and were measured in the same manner for each study group (83%). More than half of the studies (58%) provided adequate details about treatment exposure, such as medication dosages, treatment durations, or baseline disease severity. Similarly, two thirds (67%) of the studies provided sufficient information on important covariates that may be known confounders or effect modifiers.

Methods Quality.

Because treatments are not randomly assigned in observational studies, methods that adjust for the resulting potential bias are recommended (e.g., bias arising when patients who are younger or have less severe disease are more likely to receive one treatment vs. another). Half of the studies (50%) restricted the analysis population to new initiators of treatment or those starting a new course of treatment (including a washout period). Most studies (75%) used concurrent comparators or justified the use of historical comparison groups, while for the remaining studies this was unclear or could not be confirmed from the information provided. Beyond identifying important covariates, as noted earlier, 67% of studies accounted for these covariates in their designs or analyses. Eight studies (67%) also conducted analyses to test key assumptions, and the primary results changed substantially in 5 of these. Virtually all of the studies (92%) were free of “immortal time bias” (i.e., misclassification of exposed and unexposed person-time), which can bias study results.

Discussion

While other studies have assessed the perceptions of managed care decision makers, this study sought to empirically assess the use of RWE in P&T decision making. The results suggest that health care organizations make limited use of RWE to support P&T committees: RWE made up only 4% of total references in monographs and therapeutic class reviews and was cited in documents from only 2 of the 6 organizations. When comparative RWE was cited, the study methods were generally of high quality. Differences among the health care organizations in their use of RWE may be due to limited availability of RWE at the time of the decision, the quality of available RWE, or concerns about “best evidence” (i.e., RCTs) versus best available evidence (i.e., real-world studies). This study did not address whether the availability of RWE, or lack thereof, influenced decision making.

Given the timing of P&T decisions, it is not surprising that RWE was not cited in single-product monographs: RWE is not typically available at the time of product approval unless pragmatic clinical trials are part of the approval package or the product was already approved outside the United States. A study by Chambers et al. (2016) found that payers reported using clinical trials and guidelines to develop coverage policies.36 More notable in the current study was how few RWE studies were cited in therapeutic class reviews, where RWE is more likely to be available. A MEDLINE search for observational (i.e., RWE) studies published before each therapeutic class review was conducted found an average of 673 (SD = 975, minimum = 14, maximum = 3,290) observational studies per review, suggesting that although this evidence is available, it is not being widely used.

Limited citation of RWE may also reflect historical skepticism, as RWE studies have traditionally been rated lower than RCTs in evidence hierarchies. That said, there is a difference between best evidence and best available evidence. Best evidence is collected with few sources of bias, typically through randomization, and generating it requires time and money. Best available evidence reflects the notion that because decisions must be made in a timely manner, the less-than-perfect evidence available at the time of the decision is better than no evidence at all.

A recent Cochrane review comparing RCTs with high-quality observational studies found little evidence of significant differences in effect estimates between the 2 study designs.37 Consistent with these results, other guidance bodies have recognized the value of high-quality RWE. For example, the AMCP Format for Formulary Submissions, version 4.0, recognizes the value of best available evidence, regardless of study design.38 Recently, the FDA released draft guidance on the use of real-world data and RWE to support regulatory decision making for medical devices.39

Finally, available staff resources may be an important barrier. For example, reviewing the existing literature can be time consuming for organizations with limited staff time and resources. For others, the research methods used to address potential bias and confounding in real-world data can be complex, and evaluating RWE results requires new skills. To this end, tools and training such as the Comparative Effectiveness Research (CER) Collaborative and the CER Certificate Program have been shown to improve staff confidence in their ability to evaluate RWE studies and incorporate them in decision making.40 In addition, the extent to which MCOs analyze their own real-world data is difficult to assess, as such analyses are unpublished and proprietary.

Limitations

Several limitations should be considered when interpreting the results of this study. First, the findings are based on a small number of health care organizations and a limited number of P&T monographs. Eleven of the 12 comparative RWE studies cited came from 2 therapeutic class reviews conducted by the same national PBM, and the monographs and class reviews provided by 4 of the 6 organizations did not cite RWE at all. Whether this absence of RWE citations reflects the organizations' evaluation or literature search criteria, or whether the RWE studies identified were not sufficiently relevant or credible, is unknown.

Second, the findings are based on a convenience sample of P&T monographs and therapeutic class reviews rather than a systematic sample of all monographs developed in the 24-month period. Therefore, the availability of RWE may have depended on the conditions and products evaluated. Indeed, many of these 12 studies are based on long-standing registries involving autoimmune conditions (e.g., inflammatory bowel disease, psoriasis, rheumatoid arthritis) treated with biologics (e.g., adalimumab, etanercept, infliximab).

Third, our study focused on citations used to support P&T decision making. RWE may be useful in other contexts such as the development of medical policy, utilization management criteria, or quality improvement analyses. Finally, we used the GRACE Checklist to assess study quality. Other standards and guidelines for evaluating study quality exist and may differ from GRACE Checklist assessments.41 Additional research and consensus on measures of RWE study quality are needed to advance the science.

Conclusions

Efficacy information (e.g., clinical trials, product labels) was the most cited source of evidence in P&T materials. Effectiveness information, even among therapeutic class reviews where RWE is more available, was infrequently used. Although only a few P&T materials cited RWE, the comparative RWE studies were generally of high quality. More research is needed to better understand when and what types of real-world studies can inform coverage and reimbursement decisions in a more consistent manner.

ACKNOWLEDGMENTS

The authors gratefully acknowledge the representatives from the organizations who participated in this research.

REFERENCES

- 1.Sherman RE, Anderson SA, Dal Pan GJ, et al. . Real-world evidence-what is it and what can it tell us? N Engl J Med. 2016;375(23):2293-97. [DOI] [PubMed] [Google Scholar]

- 2.Holtorf AP, Brixner D, Bellows B, Keskinaslan A, Dye J, Oderda G.. Current and future use of HEOR data in healthcare decision-making in the United States and in emerging markets. Am Health Drug Benefits. 2012;5(7):428-38. [PMC free article] [PubMed] [Google Scholar]

- 3.Weissman JS, Westrich K, Hargraves JL, et al. . Translating comparative effectiveness research into Medicaid payment policy: views from medical and pharmacy directors. J Comp Eff Res. 2015;4(2):79-88. [DOI] [PubMed] [Google Scholar]

- 4.Leung MY, Halpern MT, West ND.. Pharmaceutical technology assessment: perspectives from payers. J Manag Care Pharm. 2012;18(3):256-64. Available at: http://www.jmcp.org/doi/10.18553/jmcp.2012.18.3.256. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Hickam D, Totten A, Berg A, Rader K, Goodman S, Newhouse R, eds.. The PCORI methodology report. November 2013. Available at: http://www.pcori.org/assets/2013/11/PCORI-Methodology-Report.pdf. Accessed February 24, 2017.

- 6.Agency for Healthcare Research and Quality. Methods guide for effectiveness and comparative effectiveness reviews. AHRQ Publication No. 10(14)-EHC063-EF. January 2014. Rockville, MD. Available at: http://effectivehealthcare.ahrq.gov/index.cfm/search-for-guides-reviews-and-reports/?pageaction=displayproduct&productid=318. Accessed February 24, 2017. [PubMed] [Google Scholar]

- 7.The European Network of Centres for Pharmacoepidemiology and Pharmacovigilance. ENCePP Guide on Methodological Standards in Pharmacoepidemiology. Revision 5. EMA/95098/2010. Available at: http://www.encepp.eu/standards_and_guidances/methodologicalGuide.shtml. Accessed February 24, 2017.

- 8.Garrison LP Jr,Neumann PJ, Erickson P, Marshall D, Mullins CD.. Using real-world data for coverage and payment decisions: the ISPOR Real-World Data Task Force report. Value Health. 2007;10(5):326-35. [DOI] [PubMed] [Google Scholar]

- 9.Motheral B, Brooks J, Clark MA, et al. . A checklist for retrospective database studies—report of the ISPOR Task Force on Retrospective Databases. Value Health. 2003;6(2):90-97. [DOI] [PubMed] [Google Scholar]

- 10.Berger ML, Mamdani M, Atkins D, Johnson ML.. Good research practices for comparative effectiveness research: defining, reporting and interpreting nonrandomized studies of treatment effects using secondary data sources: the ISPOR Good Research Practices for Retrospective Database Analysis Task Force Report—Part I. Value Health. 2009;12(8):1044-52. [DOI] [PubMed] [Google Scholar]

- 11.Cox E, Martin BC, Van Staa T, Garbe E, Siebert U, Johnson ML.. Good research practices for comparative effectiveness research: approaches to mitigate bias and confounding in the design of nonrandomized studies of treatment effects using secondary data sources: the International Society for Pharmacoeconomics and Outcomes Research Good Research Practices for Retrospective Database Analysis Task Force Report—Part II. Value Health. 2009;12(8):1053-61. [DOI] [PubMed] [Google Scholar]

- 12.Johnson ML, Crown W, Martin BC, Dormuth CR, Siebert U.. Good research practices for comparative effectiveness research: analytic methods to improve causal inference from nonrandomized studies of treatment effects using secondary data sources: the ISPOR Good Research Practices for Retrospective Database Analysis Task Force Report—Part III. Value Health. 2009;12(8):1062-73. [DOI] [PubMed] [Google Scholar]

- 13.CER Collaborative. Comparative effectiveness research tool. Available at: www.cercollaborative.org. Accessed February 24, 2017. [Google Scholar]

- 14.Dreyer NA, Velentgas P, Westrich K, Dubois R.. The GRACE Checklist for rating the quality of observational studies of comparative effectiveness: a tale of hope and caution. J Manag Care Spec Pharm. 2014;20(3):301-08. Available at: http://www.jmcp.org/doi/10.18553/jmcp.2014.20.3.301. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Alexander GC, Gallagher SA, Mascola A, Moloney RM, Stafford RS.. Increasing off-label use of antipsychotic medications in the United States, 1995-2008. Pharmacoepidemiol Drug Saf. 2011;20(2):177-84. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Appelbaum KL. Stimulant use under a prison treatment protocol for attention-deficit/hyperactivity disorder. J Correct Health Care. 2011;17(3):218-25. [DOI] [PubMed] [Google Scholar]

- 17.Backes JM, Venero CV, Gibson CA, et al. . Effectiveness and tolerability of every-other-day rosuvastatin dosing in patients with prior statin intolerance. Ann Pharmacother. 2008;42(3):341-46. [DOI] [PubMed] [Google Scholar]

- 18.Bounthavong M, Tran JN, Golshan S, et al. . Retrospective cohort study evaluating exenatide twice daily and long-acting insulin analogs in a Veterans Health Administration population with type 2 diabetes. Diabetes Metab. 2014;40(4):284-91. [DOI] [PubMed] [Google Scholar]

- 19.Diak P, Siegel J, La Grenade L, Choi L, Lemery S, McMahon A.. Tumor necrosis factor alpha blockers and malignancy in children: forty-eight cases reported to the Food and Drug Administration. Arthritis Rheum. 2010;62(8):2517-24. [DOI] [PubMed] [Google Scholar]

- 20.Gelfand JM, Wan J, Callis Duffin K, et al. . Comparative effectiveness of commonly used systemic treatments or phototherapy for moderate to severe plaque psoriasis in the clinical practice setting. Arch Dermatol. 2012;148(4):487-94. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21.Gniadecki R, Bang B, Bryld LE, Iversen L, Lasthein S, Skov L.. Comparison of long-term drug survival and safety of biologic agents in patients with psoriasis vulgaris. Br J Dermatol. 2015;172(1):244-52. [DOI] [PubMed] [Google Scholar]

- 22.Gomez-Reino JJ, Maneiro JR, Ruiz J, et al. . Comparative effectiveness of switching to alternative tumour necrosis factor (TNF) antagonists versus switching to rituximab in patients with rheumatoid arthritis who failed previous TNF antagonists: the MIRAR Study. Ann Rheum Dis. 2012;71(11):1861-64. [DOI] [PubMed] [Google Scholar]

- 23.Greenberg JD, Reed G, Decktor D, et al. . A comparative effectiveness study of adalimumab, etanercept and infliximab in biologically naive and switched rheumatoid arthritis patients: results from the US CORRONA registry. Ann Rheum Dis. 2012;71(7):1134-42. [DOI] [PubMed] [Google Scholar]

- 24.Hoeper MM, Markevych I, Spiekerkoetter E, Welte T, Niedermeyer J. Goal-oriented treatment and combination therapy for pulmonary arterial hypertension. Eur Respir J. 2005;26(5):858-63.

- 25.Kwan P, Brodie MJ. Early identification of refractory epilepsy. N Engl J Med. 2000;342(5):314-19.

- 26.Kwan P, Brodie MJ. Effectiveness of first antiepileptic drug. Epilepsia. 2001;42(10):1255-60.

- 27.Kwon HJ, Coté TR, Cuffe MS, Kramer JM, Braun MM. Case reports of heart failure after therapy with a tumor necrosis factor antagonist. Ann Intern Med. 2003;138(10):807-11.

- 28.Lichtenstein GR, Feagan BG, Cohen RD, et al. Serious infection and mortality in patients with Crohn’s disease: more than 5 years of follow-up in the TREAT registry. Am J Gastroenterol. 2012;107(9):1409-22.

- 29.Luciano AL, Shorvon SD. Results of treatment changes in patients with apparently drug-resistant chronic epilepsy. Ann Neurol. 2007;62(4):375-81.

- 30.Mohan N, Edwards ET, Cupps TR, et al. Demyelination occurring during anti-tumor necrosis factor alpha therapy for inflammatory arthritides. Arthritis Rheum. 2001;44(12):2862-69.

- 31.Mokuda S, Murata Y, Sawada N, et al. Tocilizumab induced acquired factor XIII deficiency in patients with rheumatoid arthritis. PLoS One. 2013;8(8):e69944.

- 32.Momohara S, Hashimoto J, Tsuboi H, et al. Analysis of perioperative clinical features and complications after orthopaedic surgery in rheumatoid arthritis patients treated with tocilizumab in a real-world setting: results from the multicentre TOcilizumab in Perioperative Period (TOPP) study. Mod Rheumatol. 2013;23(3):440-49.

- 33.Simard JF, Neovius M, Askling J, Group AS. Mortality rates in patients with rheumatoid arthritis treated with tumor necrosis factor inhibitors: drug-specific comparisons in the Swedish Biologics Register. Arthritis Rheum. 2012;64(11):3502-10.

- 34.Singh S, Chang HY, Richards TM, Weiner JP, Clark JM, Segal JB. Glucagonlike peptide 1-based therapies and risk of hospitalization for acute pancreatitis in type 2 diabetes mellitus: a population-based matched case-control study. JAMA Intern Med. 2013;173(7):534-39.

- 35.van Dartel SA, Fransen J, Kievit W, et al. Difference in the risk of serious infections in patients with rheumatoid arthritis treated with adalimumab, infliximab and etanercept: results from the Dutch Rheumatoid Arthritis Monitoring (DREAM) registry. Ann Rheum Dis. 2013;72(6):895-900.

- 36.Chambers JD, Wilkinson CL, Anderson JE, Chenoweth MD. Variation in private payer coverage of rheumatoid arthritis drugs. J Manag Care Spec Pharm. 2016;22(10):1176-81. Available at: http://www.jmcp.org/doi/10.18553/jmcp.2016.22.10.1176.

- 37.Anglemyer A, Horvath HT, Bero L. Healthcare outcomes assessed with observational study designs compared with those assessed in randomized trials. Cochrane Database Syst Rev. 2014(4):MR000034.

- 38.Academy of Managed Care Pharmacy. The AMCP Format for formulary submissions. Version 4.0. A format for submission of clinical and economic evidence in support of formulary consideration. April 2016. Available at: http://www.amcp.org/FormatV4/. Accessed February 24, 2017.

- 39.U.S. Department of Health and Human Services, Food and Drug Administration. Use of real-world evidence to support regulatory decision-making for medical devices. Draft guidance for industry and Food and Drug Administration staff. July 27, 2016. Available at: https://www.fda.gov/downloads/medicaldevices/deviceregulationandguidance/guidancedocuments/ucm513027.pdf. Accessed February 24, 2017.

- 40.Perfetto EM, Anyanwu C, Pickering MK, Zaghab RW, Graff JS, Eichelberger B. Got CER? Educating pharmacists for practice in the future: new tools for new challenges. J Manag Care Spec Pharm. 2016;22(6):609-16. Available at: http://www.jmcp.org/doi/10.18553/jmcp.2016.22.6.609.

- 41.Morton SC, Costlow MR, Graff JS, Dubois RW. Standards and guidelines for observational studies: quality is in the eye of the beholder. J Clin Epidemiol. 2016;71:3-10.