Abstract

BACKGROUND:

Understanding how treatments work in the real world and in real patients is an important and complex task. In recent years, comparative effectiveness research (CER) studies have become more widely available to health care providers to inform evidence-based decision making. The strengths and limitations of this new evidence vary, and researchers and decision makers face challenges in assessing the quality of these newer methods and of the CER studies that use them.

OBJECTIVES:

To (a) describe an online tool developed by the CER Collaborative, a partnership of the Academy of Managed Care Pharmacy, the International Society for Pharmacoeconomics and Outcomes Research, and the National Pharmaceutical Council, and (b) provide an early evaluation of the impact of the associated training program on learners' self-reported abilities to evaluate CER studies and incorporate them into their decision making.

METHODS:

To encourage greater transparency, consistency, and uniformity in the development and assessment of CER studies, the CER Collaborative developed an online tool to assist researchers, new and experienced clinicians, and decision makers in producing and evaluating CER studies. A training program that supports the use of the online tool was developed to improve individuals' ability and confidence to apply CER study findings in their daily work. Seventy-one current and future health care practitioners and researchers enrolled in 3 separate cohorts of the training program. Upon completion, learners assessed their abilities to interpret and apply findings from CER studies by completing an online evaluation questionnaire.

RESULTS:

The first 3 cohorts of learners to complete the training program consisted of 71 current and future health care practitioners and researchers. At completion, learners indicated high confidence in their CER evidence assessment abilities (mean = 4.2 on a 5-point scale). Learners reported a 27.43%-59.86% improvement in their capabilities to evaluate various CER studies and identify study design flaws (mean scores before the CER Certificate Program [CCP] = 1.86-3.14; mean scores after the CCP = 3.92-4.24). Additionally, 63% of learners indicated that they expected to increase their use of evidence from CER studies in at least 1-2 problem decisions per month.

CONCLUSIONS:

The CER Collaborative has responded to the need for increased practitioner training to improve understanding and application of new CER studies. The CER Collaborative tool and certificate training program are innovative solutions to help decision makers meet the challenges they face in honing their skills to best incorporate credible and relevant CER evidence into their decision making.

What is already known about this subject

In recent years, formulary decision makers have demonstrated an interest in comparative effectiveness research (CER) and in using CER studies to inform their coverage and reimbursement policies.

Despite this interest, barriers such as insufficient awareness and understanding of CER methods and a lack of tools to assess the quality of CER studies prevent its widespread use by formulary decision makers.

Without skills and training in new CER methodology, formulary decision makers may not feel equipped to evaluate or use CER findings for decision making, even when studies provide invaluable information.

What this study adds

The CER Collaborative tool and certificate training program are resources designed to help formulary decision makers strengthen their CER knowledge and skills in order to evaluate CER studies in a transparent and consistent fashion.

The CER Collaborative certificate training program improves participants' confidence to critically appraise diverse CER study designs and use evidence from CER studies in their decision making.

Innovative solutions are needed to help practitioners develop the skills and capabilities to incorporate evidence from CER studies in their decision making.

Health care decision makers, including pharmacists, clinicians, administrators, and pharmacy and therapeutics (P&T) committee members, align scientific evidence with therapeutic guidelines and drug coverage policies. Their responsibilities extend beyond determining whether a treatment or intervention works to evaluating how well it works compared with other treatment options in the populations they care for. Often there is a notable gap between the evidence desired and the evidence available at the time of decision making.1 Decision makers often want additional information on longer-term outcomes, comparisons of multiple treatment options, or assessments of treatment effectiveness in “real-world” settings based on patient populations or provider settings similar to their own. To help bridge this gap, interest and investment in comparative effectiveness research (CER) are increasing.2 A large part of the CER investment extends beyond traditional randomized controlled trials (RCTs) to include varying and newer study designs and analytic techniques (e.g., pragmatic clinical trials, observational studies, decision-modeling techniques, and indirect treatment comparisons/network meta-analyses) to inform decision making.3

Decision makers want and need evidence on how various interventions work compared with other treatment alternatives, and CER studies using newer study designs can be helpful when evidence from direct, head-to-head RCTs is missing.4 Evidence from other CER studies, such as indirect treatment comparisons or network meta-analyses (NMA), can fill these gaps when direct comparisons across treatment options are lacking. For example, to assess the benefits of alendronate, risedronate, and teriparatide in reducing the risks of vertebral, hip, and nonvertebral/nonhip fractures, an NMA was used to synthesize the evidence from 32 RCTs to inform benefit design.5 Understanding how to use evidence from newer techniques, such as NMA, allows decision makers to assess a broad range of comparisons with greater confidence and to improve decision making.5,6
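To illustrate how an indirect comparison fills a missing head-to-head comparison, the sketch below uses the Bucher adjusted indirect comparison, the simplest form of indirect treatment comparison. Drugs A and B have each been compared with a common comparator C (e.g., placebo) but not with each other. On the log scale, the A-vs-B effect is the difference of the two log odds ratios, and because the trials are independent their variances add. This is a minimal sketch under the assumption that the A-vs-C and B-vs-C trials are similar enough for the comparison to be valid; a full NMA, such as the osteoporosis analysis cited above, generalizes the idea to networks of many trials and treatments. The odds ratios and standard errors are hypothetical.

```python
import math


def bucher_indirect(or_ac: float, se_log_ac: float,
                    or_bc: float, se_log_bc: float, z: float = 1.96):
    """Adjusted indirect comparison of A vs B through a common comparator C.

    Inputs are odds ratios (A vs C, B vs C) and the standard errors of their
    natural logs. Returns the indirect OR for A vs B, the SE of its log, and
    a z-based 95% confidence interval.
    """
    # On the log scale the indirect effect is a simple difference.
    log_or_ab = math.log(or_ac) - math.log(or_bc)
    # Independent trials: the variances of the two log ORs add.
    se_log_ab = math.sqrt(se_log_ac ** 2 + se_log_bc ** 2)
    lower = math.exp(log_or_ab - z * se_log_ab)
    upper = math.exp(log_or_ab + z * se_log_ab)
    return math.exp(log_or_ab), se_log_ab, (lower, upper)


# Hypothetical inputs: A vs placebo OR 0.50, B vs placebo OR 0.70.
or_ab, se_ab, ci = bucher_indirect(0.50, 0.20, 0.70, 0.15)
print(f"A vs B: OR {or_ab:.2f}, 95% CI {ci[0]:.2f}-{ci[1]:.2f}")
# A vs B: OR 0.71, 95% CI 0.44-1.17
```

Because uncertainty from both sets of trials contributes, the indirect interval is wider than either direct comparison; here it spans 1, so an apparent advantage of A over B would not be established.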

In other scenarios, decision makers need to understand how various study designs may affect the relevance and credibility of the evidence used to inform care pathways and clinical practice guidelines. For example, differences exist between the cholesterol treatment guidelines from the American Heart Association and American College of Cardiology (AHA/ACC) and those from the National Lipid Association (NLA). The AHA/ACC guidelines are primarily based on evidence from RCTs, systematic reviews, and meta-analyses of trials on atherosclerotic cardiovascular disease outcomes.7 In contrast, the NLA guidelines include these trials as well as observational and genetic studies. The NLA developers recognized that, by limiting evidence to RCTs alone, the results may not be generalizable to patients who differ from the populations studied in the trials. The differences in the evidence reviewed and the guidelines followed alter recommended cholesterol treatment thresholds and modalities, such as lifestyle and drug therapy.7,8 Clinicians therefore need to determine which guidelines, and the evidence underlying them, are most relevant to their decision-making environment.

In recent years, there has been increased interest among formulary decision makers, such as the Academy of Managed Care Pharmacy (AMCP) Format Executive Committee, in using CER to inform coverage and reimbursement policies.9-11 Despite this interest, challenges exist because of the lack of perceived relevance of the evidence, insufficient awareness and understanding of the methods used to generate it, and a lack of tools to assess the quality of the studies.12-14 The lack of tools and guidance on how to evaluate and use these new or unfamiliar study designs creates the possibility of 2 undesirable outcomes: (1) misinterpretation of new study data or (2) failure to use critical information in decision making.15 To help address these challenges, various roundtables and task forces have recommended the development of tools to aid in the quality assurance and quality assessment of CER, as well as training for the producers and users of CER on how to generate, interpret, and apply CER findings.16,17

With this in mind, the CER Collaborative developed a tool and a training program to help address this need for real-world understanding and application of CER studies.18 The purpose of this article is to (a) provide an overview of the CER Collaborative online tool and training program and (b) present early evaluation data, reported by the first 3 cohorts of learners, on the program's impact on their self-reported abilities to interpret and use CER study findings.

The CER Collaborative and Tool Development

The Academy of Managed Care Pharmacy, International Society for Pharmacoeconomics and Outcomes Research, and National Pharmaceutical Council comprise the CER Collaborative. The collaborative was formed to provide greater uniformity and transparency in the evaluation and use of evidence for coverage and health care decision making, with the ultimate goal of improving patient outcomes.17 Its work is based on the premise that a uniform and well-accepted approach to CER assessment enables clear communication between researchers developing evidence and decision makers using evidence.

Development of the tool began with an extensive literature review of current CER methods, conducted by 5 task forces whose members represented stakeholders from pharmacy practice, academia, industry, and health insurance plans. Four of the task forces were charged with reviewing available good practice standards and reports for specific study designs (prospective observational, retrospective observational, decision modeling, and NMA). The elements identified in these reviews were then evaluated for their relevance from a health care decision maker's perspective. Next, questionnaires with domains and indicators were developed to assess the relevance and credibility of each study design (Table 1).19 To provide instruction on how to use the questionnaires, white papers and an online tool were developed for each study design, explaining how to determine whether the credibility and relevance of a study are sufficient for it to be considered in decision making.20-22
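The questionnaire structure described above, with domains made up of indicator questions and relevance assessed alongside credibility, can be represented as a small data structure. The sketch below is hypothetical throughout: the class names, the example questions, the "fatal flaw" flag, and both decision rules (any "no" on a relevance indicator ends the review; a "no" on a flagged credibility indicator makes credibility insufficient) are illustrative assumptions, not the CER Collaborative's published scoring logic.

```python
from dataclasses import dataclass
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    CANT_ANSWER = "can't answer"


@dataclass
class Indicator:
    question: str
    answer: Answer
    # Hypothetical flag: a NO on this indicator is treated as a fatal flaw.
    fatal_if_no: bool = False


@dataclass
class Domain:
    name: str
    indicators: list[Indicator]


def any_no(domains: list[Domain], fatal_only: bool = False) -> bool:
    """True if any indicator (or, with fatal_only, any flagged one) was answered NO."""
    return any(
        ind.answer is Answer.NO and (ind.fatal_if_no or not fatal_only)
        for dom in domains
        for ind in dom.indicators
    )


def assess_study(relevance: list[Domain], credibility: list[Domain]) -> str:
    # Assumed rule 1: relevance is checked first; any NO ends the review.
    if any_no(relevance):
        return "not relevant"
    # Assumed rule 2: a NO on a flagged credibility indicator is disqualifying.
    if any_no(credibility, fatal_only=True):
        return "insufficient credibility"
    return "sufficient to consider"


# Hypothetical example: a relevant observational study with a fatal flaw.
relevance = [Domain("Population", [Indicator("Is the study population similar to mine?", Answer.YES)])]
credibility = [Domain("Analyses", [Indicator("Was confounding adequately addressed?", Answer.NO, fatal_if_no=True)])]
print(assess_study(relevance, credibility))  # insufficient credibility
```

Separating the answers from the decision rules keeps the rules easy to swap out, which matters because the real questionnaires ask the user to weigh domains with judgment rather than apply a fixed formula.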

TABLE 1.

CER Collaborative Tool<sup>a</sup>

| Component | Description |
|---|---|
| 1. Assessment of Individual Studies | Questionnaires assessing relevance and credibility |
| 2. Synthesize a Body of Evidence | Questionnaires assessing comparative net benefit and certainty |

<sup>a</sup>See www.CERCollaborative.org.

CER = comparative effectiveness research; ICER = Institute for Clinical and Economic Review.

To further help formulary decision makers evaluate the entire body of evidence (i.e., all study findings in context), the fifth task force adapted an existing, widely used framework for synthesizing a body of evidence. The Institute for Clinical and Economic Review (ICER) Evidence Rating Matrix was selected for adaptation on the basis of its validity, reliability, prior use, practicality, ease of use, and comprehensiveness.23 The rating matrix includes questions to assess comparative net benefit and the certainty of that assessment across all of the available evidence.

The questionnaires, white papers, and adapted ICER matrix were combined into a publicly accessible, web-based tool. This tool is divided into 2 components. The first component, “Assessing Individual Studies,” uses the questionnaires to assess the credibility and relevance of the 4 different types of study designs. The second component, “Synthesize a Body of Evidence,” helps the decision maker use the ICER Evidence Rating Matrix as a framework for organizing evidence from multiple studies and study designs (Table 1).24 The online tool guides users through the analysis to reach an evidence rating, which is an important input to the decision-making process.

The CER Certificate Program

The initial work of the CER Collaborative succeeded in generating a consensus approach and a well-constructed tool. However, active participation and input from various stakeholder experts did not automatically translate into rapid adoption of the new tools and guidelines. The next step for the CER Collaborative was therefore to disseminate the tool and guidance through an educational approach. To make the CER Collaborative online tool accessible to new users, an online educational program was launched in 2014.

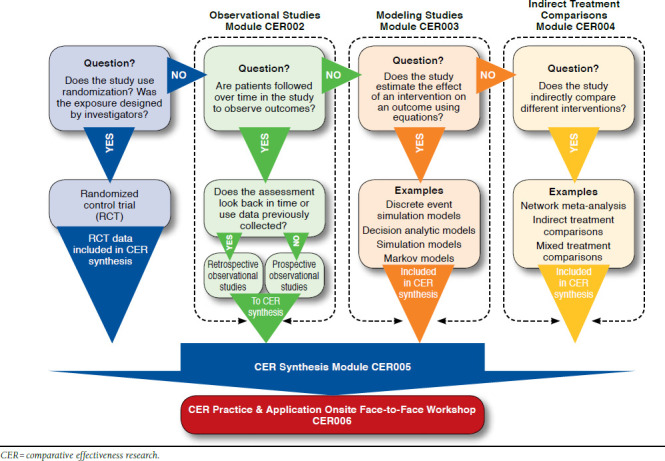

The CER Certificate Program (CCP), a web-based interactive course, was designed to teach pharmacists and other decision makers about CER study methods and how to use the CER Collaborative online tool.25 This program was developed, designed, and delivered through a partnership between the CER Collaborative and the University of Maryland School of Pharmacy. It was designed around the framework set by the CER Collaborative and the resources established as standards in the field (Figure 1).

FIGURE 1.

The CER Certificate Program Guide to the Comparative Effectiveness Research Tool

The CCP is a course approved by the Accreditation Council for Pharmacy Education and is composed of 5 online, self-paced modules with background content, step-by-step instructions, and case-based application. The 5 modules cover 4 study designs relevant to CER, as well as guidance on how to synthesize a body of evidence. Trainees remain engaged through active learning exercises and case studies. The 19 credit-hour certificate concludes with an interactive workshop among the trainees, held either in person or via a synchronous web-based teleconference (Table 2). During the workshop, teams of participants apply the knowledge and skills gained throughout the program to make an evidence-based recommendation to a P&T committee using studies from the literature. Because the methods and concepts taught in the program are broadly applicable, any health care decision maker interested in obtaining CER training can benefit.

TABLE 2.

CER Certificate Program<sup>a</sup>

| Module | Contact Hours | Topic |
|---|---|---|
| Module 1 (online) | 1 hour | Introduction to Comparative Effectiveness Research |
| Module 2 (online) | 3 hours | The Value of Prospective and Retrospective Observational Studies in Comparative Effectiveness Research |
| Module 3 (online) | 3 hours | Modeling Studies |
| Module 4 (online) | 2 hours | Indirect Treatment Comparisons |
| Module 5 (online) | 2 hours | Synthesizing a Body of Evidence in Comparative Effectiveness Research |
| Module 6 (interactive workshop) | 4 hours (self-paced); 4 hours (on-site or teleconference session) | Skills Demonstration: Assessing CER Studies and Synthesizing CER Literature |

CER = comparative effectiveness research; ICER = Institute for Clinical and Economic Review.
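As a quick consistency check on Table 2, the module contact hours can be summed. The five online modules account for 11 hours, and adding the workshop's 4 self-paced hours and 4 session hours gives the 19 credit hours stated for the certificate. The dictionary below simply transcribes the table.

```python
# Contact hours per CCP component, transcribed from Table 2.
contact_hours = {
    "Module 1 (online)": 1,
    "Module 2 (online)": 3,
    "Module 3 (online)": 3,
    "Module 4 (online)": 2,
    "Module 5 (online)": 2,
    "Module 6 (self-paced)": 4,
    "Module 6 (on-site or teleconference session)": 4,
}

# Online modules only, then the full certificate including the workshop.
online_hours = sum(h for name, h in contact_hours.items() if "(online)" in name)
total_hours = sum(contact_hours.values())
print(online_hours, total_hours)  # 11 19
```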

Methods

In October 2014 and April 2015, the CCP certified 3 cohorts comprising 71 learners (Table 3). To obtain a certificate of completion, learners completed evaluation questionnaires after the live, interactive workshop (Module 6). The questionnaire data provided an assessment of the program's impact on learners' self-assessed abilities, confidence, and use of CER studies. The questionnaires included Likert-scale and open-ended questions evaluating learners' self-assessed ability to use the online CER Collaborative tool and resources, recognize and evaluate CER study designs, apply the knowledge and skills obtained to new problem decisions in their work settings, and use transparent methods to facilitate the assessment of CER (Table 4). Learners rated their capabilities both before and after the training on this same end-of-program questionnaire (Table 5). All data were de-identified. The protocol for this study was approved by the University of Maryland, Baltimore, Institutional Review Board.

TABLE 3.

Characteristics of All Learners (N = 71)

| Cohort | Professional Identification | Number of Learners | Total |
|---|---|---|---|
| October 2014: AMCP Nexus | Pharmacists | 14 | 18 |
| | Physicians | 1 | |
| | Other<sup>a</sup> | 3 | |
| April 2015: Kaiser Permanente | Pharmacists | 31 | 32 |
| | Physicians | 0 | |
| | Other<sup>a</sup> | 1 | |
| April 2015: AMCP Annual meeting | Pharmacists | 18 | 21 |
| | Physicians | 0 | |
| | Other<sup>a</sup> | 3 | |

<sup>a</sup>Other = students, health economics and outcomes researchers, and consultants.

AMCP = Academy of Managed Care Pharmacy.
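The row and column totals in Table 3 can be tallied directly from the cohort counts. Doing so reproduces the 71 learners and shows that the "Other" category (students, health economics and outcomes researchers, and consultants) accounts for 7 learners, alongside 63 pharmacists and 1 physician. The counts below are transcribed from the table; nothing else is assumed.

```python
from collections import Counter

# Learners by cohort and professional identification, transcribed from Table 3.
cohorts = {
    "October 2014: AMCP Nexus": {"Pharmacists": 14, "Physicians": 1, "Other": 3},
    "April 2015: Kaiser Permanente": {"Pharmacists": 31, "Physicians": 0, "Other": 1},
    "April 2015: AMCP Annual meeting": {"Pharmacists": 18, "Physicians": 0, "Other": 3},
}

# Row totals per cohort (the "Total" column) and column totals per profession.
cohort_totals = {name: sum(counts.values()) for name, counts in cohorts.items()}
by_profession = Counter()
for counts in cohorts.values():
    by_profession.update(counts)

overall = sum(cohort_totals.values())
pct_pharmacists = 100 * by_profession["Pharmacists"] / overall
print(cohort_totals)
print(dict(by_profession), overall, f"{pct_pharmacists:.1f}%")
```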

TABLE 4.

Learner Self-Assessed Abilities at Completion (N = 71)

| At the conclusion of the CER Certificate Program, I was prepared to: | Likert Scale Mean (1-5) and 95% CI | Likert Scale Median (1-5) |
|---|---|---|
| Demonstrate the use of CER in health care clinical and formulary decision making. | 4.35 (4.21-4.48) | 4 |
| Evaluate appropriateness and methodological rigor of a single observational or synthesis study. | 4.32 (4.19-4.45) | 4 |
| Apply CER findings using available tools to current medication-related decisions. | 4.26 (4.12-4.41) | 4 |
| Synthesize the literature across research designs. | 4.25 (4.12-4.38) | 4 |
| Practice CER study assessment in my work. | 4.17 (4.02-4.31) | 4 |

Note: 1 = Strongly disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly agree. CER = comparative effectiveness research; CI = confidence interval.
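The article does not state how the 95% CIs in Table 4 were computed. One common approach, assumed here, is the normal approximation mean ± 1.96 × SD/√n applied to the individual Likert responses. The sketch applies it, together with the median, to a small set of hypothetical responses. With only 8 responses a t-based interval would be more appropriate; with N = 71, the two give similar results.

```python
import math
import statistics


def likert_summary(responses: list[int], z: float = 1.96):
    """Mean with a normal-approximation 95% CI, plus the median, for 1-5 ratings."""
    n = len(responses)
    mean = statistics.mean(responses)
    # Sample standard deviation (n - 1 denominator).
    sd = statistics.stdev(responses)
    half_width = z * sd / math.sqrt(n)
    return mean, (mean - half_width, mean + half_width), statistics.median(responses)


# Hypothetical responses (1 = strongly disagree ... 5 = strongly agree).
ratings = [5, 4, 4, 5, 4, 3, 5, 4]
mean, (lo, hi), median = likert_summary(ratings)
print(f"{mean:.2f} ({lo:.2f}-{hi:.2f}), median {median}")
# 4.25 (3.76-4.74), median 4.0
```

Reporting the median alongside the mean, as Table 4 does, is a useful guard with ordinal Likert data, where a mean can mask a skewed distribution.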

Results

Seventy-one learners successfully completed the CCP in 3 cohorts: 1 in October 2014 and 2 in April 2015. The majority of the learners (63 of 71, or 89%) were pharmacists; 1 physician and 7 other learners (students, health economics and outcomes researchers, and consultants) also participated (Table 3). All learners completed the program evaluation after finishing the program. Learners indicated that the CCP prepared them to demonstrate and apply CER in formulary and/or medical decision making by increasing their abilities to evaluate the methodological rigor of CER studies and to synthesize a body of evidence (Table 4). When asked to estimate the number of problem decisions in which CER evidence would be used, 63% of the learners indicated that they expected to increase their use of CER studies in at least 1-2 problem decisions per month. Comparing self-assessed abilities to apply and interpret CER studies before and after the CCP, the mean incremental change ranged from 27.43% to 59.86% across categories, with the smallest change in the ability to distinguish between observational studies and RCTs and the largest in the ability to use the CER tool for analysis and synthesis (Table 5). Overall, learners indicated that they were highly confident in their CER evidence assessment abilities at program completion.

TABLE 5.

Learner Self-Assessed Abilities Before and After CCP (N = 71)

| Rate your professional capabilities in using CER in decision making BEFORE and AFTER this training. | Mean Before (1-5) | Mean After (1-5) | Mean Incremental Change (%) and 95% CI |
|---|---|---|---|
| Use the online CER tool for analysis and synthesis. | 1.86 | 4.18 | +59.86 (53.16-66.56) |
| Evaluate indirect treatment comparison studies and their usefulness in decision making. | 2.31 | 4.06 | +43.75 (37.72-49.78) |
| Assess the value of an observational study in CER by examining its relevance and credibility. | 2.64 | 3.99 | +33.68 (28.92-38.44) |
| Use transparent methods to detect the presence of confounding in a case example. | 2.57 | 3.92 | +33.68 (28.92-38.44) |
| Confidence in my meaningful input into evidence-based decision making in my work setting. | 2.93 | 4.18 | +31.25 (26.14-36.36) |
| Evaluate observational studies and their usefulness in decision making. | 2.85 | 4.08 | +30.90 (25.55-36.25) |
| Analyze research studies for selection bias and information bias and critically examine the source, impact, and treatment of bias in CER studies. | 2.88 | 4.11 | +30.90 (25.91-35.89) |
| Distinguish among observational study designs versus randomized controlled trials and assess relative advantages and disadvantages in decision making. | 3.14 | 4.24 | +27.43 (22.67-32.19) |

Note: 1 = Strongly disagree, 2 = Disagree, 3 = Neutral, 4 = Agree, 5 = Strongly agree. For incremental differences, each 1-point increase (e.g., going from 1 to 2) was assigned a 25% change in value, so that the full 4-point range of the scale (1 to 5) corresponds to 100%.

CCP = CER Certificate Program; CER = comparative effectiveness research; CI = confidence interval.
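The conversion rule in the note to Table 5 (25% per scale point) can be written as a small function. Applied to the rounded table means, it reproduces several reported values exactly (e.g., 2.31 to 4.06 gives 43.75%, and 2.93 to 4.18 gives 31.25%). Other rows differ somewhat, which could reflect rounding of the table means or averaging of per-learner changes over learners who answered both items; that explanation is an assumption, since the article does not describe the calculation further. The paired ratings in the usage line are hypothetical.

```python
POINTS_TO_PERCENT = 25.0  # 4 one-point steps span the 1-5 scale, so 1 point = 25%


def incremental_change(before: float, after: float) -> float:
    """Percentage change under the Table 5 rule: 25% per scale point."""
    return (after - before) * POINTS_TO_PERCENT


def mean_paired_change(pairs: list[tuple[int, int]]) -> float:
    """Average of per-learner changes; each pair is (before, after)."""
    changes = [incremental_change(b, a) for b, a in pairs]
    return sum(changes) / len(changes)


# Rounded means from Table 5 (indirect treatment comparisons row).
print(round(incremental_change(2.31, 4.06), 2))  # 43.75
# Hypothetical paired ratings from three learners.
print(mean_paired_change([(2, 4), (3, 4), (1, 4)]))  # 50.0
```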

Discussion

Decision makers need to make treatment and formulary decisions based on sound evidence. To compare which treatments work best for which patients, decision makers must be equipped to evaluate the ever-expanding and increasingly diverse published CER literature. This requires familiarity with a broad range of methods beyond randomized controlled trials and an understanding of the nuances of the diverse study designs employed in CER.

The CER Collaborative online tool and CCP are examples of new and innovative approaches designed to equip decision makers with the necessary resources to critically appraise CER studies. The online tool and the training program walk the user through a step-by-step process of evidence assessment, highlighting critical elements of study design to be assessed when evaluating studies. Through these resources, decision makers who are unfamiliar with newer and diverse CER studies can apply, with more confidence, the evidence from these studies in their decision making.

Learners who completed the CCP recognized the importance and value of being able to interpret study findings adequately for sound decision making. After completing the program, they reported increased confidence in their ability to critically appraise diverse CER study designs and indicated that they would be more inclined to use the skills and resources gained through the program in their routine decision-making practices. Nearly two-thirds (63%) of learners reported that they expected to increase their use of CER evidence in at least 1-2 problem decisions per month. Although learners described the training program as time and resource intensive, the majority found it valuable.

Program evaluation data indicate that the largest improvement in self-reported ability after the program was in the ability to use the CER tool (Table 5). This most likely reflects learners' lack of familiarity with the tool before enrolling in the CCP, combined with their consistent use of the tool throughout the program. The ability to evaluate indirect treatment comparisons showed the second largest improvement (43.75%). Learners' average self-reported baseline ability in this area was 2.31, the second lowest among all assessments (Table 5), suggesting that before the program, learners were not familiar with this newer and complex study design. The smallest change in self-reported ability was in distinguishing among observational study designs versus RCTs and assessing their relative advantages and disadvantages in decision making (27.43%). This likely reflects learners' greater familiarity with these study designs, as indicated by the highest average baseline score (3.14) before participating in the CCP.

It is unknown whether the CER Collaborative tools alone can improve the transparency, clarity, and uniformity of CER evaluation. As seen in the CCP learners' self-assessments, practitioners perceived a lack of ability to assess, analyze, and evaluate new CER studies at baseline. Participants in the CCP had the chance not only to learn about these new CER study designs but also to apply that knowledge to practical case studies. The CER Collaborative tool and CCP offer benefits for both producers and users of CER. For students and managed care or drug information residents beginning their careers, the CCP provides a foundation for critically appraising and synthesizing evidence. Existing practitioners, who are often familiar with RCTs and systematic reviews, can gain confidence in using newer CER studies to apply findings to real-world settings, compare multiple treatment options, or understand effects on longer-term outcomes. In parallel, producers of research can increase the likelihood that their studies will be relevant and credible to end users when designing, conducting, and reporting them.

Limitations

This early report, based on the first 3 learner cohorts, has 2 limitations. First, the sample is small (N = 71); as additional cohorts complete the program, future analyses of their evaluation data will allow assessment with larger numbers. Second, the data are self-reported and come from a one-time evaluation at completion of the CCP, in which learners reported how they expected to use what they learned, not how they actually used it. These data therefore do not show whether learners applied the information in their day-to-day work. Learners are now being asked to complete follow-up questionnaires at 2 and 6 months after completing the CCP, and future analyses will examine their reported actual use of the knowledge and skills gained in practice.

Conclusions

To help ensure that the clinical workforce has the requisite capabilities, the CER Collaborative developed an online tool and educational program for practitioners to hone their CER knowledge and skills and thereby enhance transparency and consistency in evidence evaluation. Based on data from the first 3 cohorts of learners in the CCP, the online training program appears to have increased learners' self-reported awareness, confidence, and skills to assess and apply CER evidence in day-to-day practice. Future evaluations are needed to assess whether clinician awareness and understanding of newer CER methods are associated with proper interpretation and use of CER study results, informed decision making, and improved patient care.

References

1. Wang A, Halpert RJ, Baerwaldt T, Nordyke RJ. U.S. payer perspectives on evidence for formulary decision making. Am J Manag Care. 2012;18(5 Spec No 2):SP71-SP76.

2. Slutsky JR, Clancy CM. Patient-centered comparative effectiveness research: essential for high-quality care. Arch Intern Med. 2010;170(5):403-04. Available at: http://archinte.jamanetwork.com/article.aspx?articleid=415677. Accessed April 22, 2016.

3. Meyer AM, Wheeler SB, Weinberger A, Chen RC, Carpenter WR. An overview of methods for comparative effectiveness research. Semin Radiat Oncol. 2014;24(1):5-13.

4. Garrison LP, Neumann PJ, Erickson P, Marshall D, Mullins CD. Using real-world data for coverage and payment decisions: the ISPOR Real-World Data Task Force report. Value Health. 2007;10(5):326-35. Available at: http://www.sciencedirect.com/science/article/pii/S1098301510604706#. Accessed April 23, 2016.

5. Chambers JD, Winn A, Zhong Y, Olchanski N, Cangelosi MJ. Potential role of network meta-analysis in value-based insurance design. Am J Manag Care. 2014;20(8):641-48. Available at: http://www.ajmc.com/publications/issue/2014/2014-vol20-n8/Potential-Role-of-Network-Meta-Analysis-in-Value-Based-Insurance-Design. Accessed April 23, 2016.

6. Saret C. The CEA Registry Blog. Network meta-analysis in value-based insurance design. Cost-Effectiveness Analysis Registry. September 7, 2014. Available at: https://research.tufts-nemc.org/cear4/Resources/CEARegistryBlog/tabid/69/EntryId/385/Network-Meta-Analysis-in-Value-Based-Insurance-Design.aspx. Accessed April 23, 2016.

7. Morris PB, Ballantyne CM, Birtcher KK, Dunn SP, Urbina EM. Review of clinical practice guidelines for the management of LDL-related risk. J Am Coll Cardiol. 2014;64(2):196-206. Available at: http://www.sciencedirect.com/science/article/pii/S0735109714025911. Accessed April 23, 2016.

8. Jacobson TA, Ito MK, Maki KC, Orringer CE, et al. National Lipid Association recommendations for patient-centered management of dyslipidemia: part 1-executive summary. J Clin Lipidol. 2014;8(5):473-88. Available at: http://www.lipidjournal.com/article/S1933-2874%2814%2900274-8/full-text. Accessed April 23, 2016.

9. AMCP Format Executive Committee. The AMCP Format for Formulary Submissions, Version 3.1. A format for submission of clinical and economic evidence of pharmaceuticals in support of formulary consideration. December 2012. Available at: http://www.amcp.org/practice-resources/amcp-format-formulary-submisions.pdf. Accessed April 23, 2016.

10. WellPoint. Use of comparative effectiveness research (CER) and observational data in formulary decision making. Evaluation criteria. Available at: https://www.pharmamedtechbi.com/~/media/Images/Publications/Archive/The%20Pink%20Sheet/72/021/00720210012/20100521_wellpoint.pdf. Accessed April 23, 2016.

11. Biskupiak JE, Dunn JD, Holtorf AP. Implementing CER: what will it take? J Manag Care Pharm. 2012;18(5 Supp A):S19-29. Available at: http://www.jmcp.org/doi/abs/10.18553/jmcp.2012.18.s5-a.S19.

12. Leung MY, Halpern MT, West ND. Pharmaceutical technology assessment: perspectives from payers. J Manag Care Pharm. 2012;18(3):256-65. Available at: http://www.jmcp.org/doi/abs/10.18553/jmcp.2012.18.3.256.

13. Weissman JS, Westrich K, Hargraves JL. Translating comparative effectiveness research into Medicaid payment policy: views from medical and pharmacy directors. J Comp Eff Res. 2015;4(2):79-88.

14. Holtorf AP, Brixner D, Bellows B, Keskinaslan A, Dye J, Oderda G. Current and future use of HEOR data in healthcare decision-making in the United States and in emerging markets. Am Health Drug Benefits. 2012;5(7):428-38.

15. Bruns K, Mroz D, Hofelich A. CER Collaborative improving health outcomes through new comparative evidence tools. National Pharmaceutical Council. March 24, 2014. Available at: http://hcbd.biz/newsite/CCA-story.aspx?id=41483. Accessed April 23, 2016.

16. Holtorf AP, Watkins JB, Mullins CD, Brixner D. Incorporating observational data in formulary decision-making process—summary of a roundtable discussion. J Manag Care Pharm. 2008;14(3):302-08. Available at: http://www.amcp.org/data/jmcp/JMCPMaga_April08_302-308.pdf.

17. Murray MD. Curricular considerations for pharmaceutical comparative effectiveness research. Pharmacoepidemiol Drug Saf. 2011;20(8):797-804.

18. CER Collaborative. Advancing use of comparative evidence to improve health outcomes. 2013.

19. CER Collaborative. Tool development and methodology. 2013.

20. Berger ML, Martin BC, Husereau D, et al. A questionnaire to assess the relevance and credibility of observational studies to inform health care decision making: an ISPOR-AMCP-NPC Good Practice Task Force Report. Value Health. 2014;17(2):143-56.

21. Jansen JP, Trikalinos T, Cappelleri JC, et al. Indirect treatment comparison/network meta-analysis study questionnaire to assess relevance and credibility to inform health care decision making: an ISPOR-AMCP-NPC Good Practice Task Force Report. Value Health. 2014;17(2):157-73.

22. Caro JJ, Eddy DM, Kan H, et al. Questionnaire to assess relevance and credibility of modeling studies for informing health care decision making: an ISPOR-AMCP-NPC Good Practice Task Force Report. Value Health. 2014;17(2):174-82.

23. CER Collaborative. Synthesize a body of evidence—tool development and methodology. 2013.

24. Ollendorf D, Pearson SD. ICER Evidence Rating Matrix: a user's guide. Available at: http://www.icer-review.org/wp-content/uploads/2008/03/Rating-Matrix-User-Guide-FINAL-v10-22-13.pdf. Accessed April 23, 2016.

25. Carroll M. SOP partners with CER Collaborative to launch new certificate program. April 1, 2014. University of Maryland School of Pharmacy. Available at: https://rxsecure.umaryland.edu/apps/news/story/view.cfm?id=456&CFID=4020210&CFTOKEN=2df21564e5818c6-31A963F0-A975-3BD7-A7395EF2. Accessed April 23, 2016.