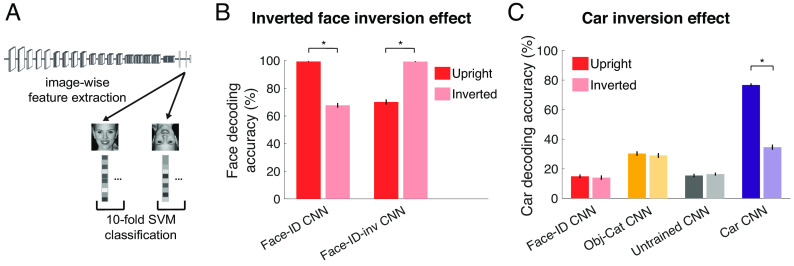

Fig. 4.

Inversion effects are not specific to upright faces per se. (A) Fine-grained category decoding accuracy was measured in CNNs trained on multiple tasks. Activations of the penultimate fully connected layer to different stimulus sets, shown upright (darker bars) and inverted (lighter bars), were extracted and used to train and test an SVM. Image credit: Wikimedia Commons/Sgt. Bryson K. Jones. (B) To measure an "inverted face inversion" effect, 100 face identities (10 images each) were decoded from two face-identity-trained CNNs: one trained on upright faces (Face-ID CNN, Left) and one trained on inverted faces (Face-ID-inv CNN, Right). The CNN trained on upright faces showed higher accuracy for upright than for inverted faces, whereas the CNN trained on inverted faces showed the opposite pattern. (C) We decoded 100 car model/make categories (10 images each) from the face-identity-trained CNN (Face-ID CNN, red), the CNN trained on object categorization (Obj-Cat CNN, yellow), the untrained CNN (Untrained CNN, gray), and a CNN trained to categorize car models/makes (Car CNN, purple). Only the car-trained CNN showed an inversion effect for cars, i.e., lower performance for inverted than for upright cars. Error bars denote SEM across classification folds. Asterisks indicate significant differences between conditions (*P < 1e-5, two-sided paired t test).
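The decoding analysis summarized in this caption can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' code. It assumes penultimate-layer activations have already been extracted (the CNNs and the extraction step are not shown). It uses scikit-learn's `LinearSVC` as the SVM, although the caption does not specify kernel or hyperparameters. It also assumes 5 stratified cross-validation folds, since the fold count is not given here, and substitutes synthetic Gaussian features for real activations. `decode_per_fold` is a hypothetical helper. The sketch computes per-fold accuracy for upright and inverted inputs, the inversion effect as the difference in mean accuracy, SEM across folds, and a two-sided paired t test across folds.

```python
import numpy as np
from scipy import stats
from sklearn.model_selection import StratifiedKFold
from sklearn.svm import LinearSVC


def decode_per_fold(features, labels, n_folds=5, seed=0):
    """Cross-validated linear-SVM decoding; returns one test accuracy per fold.

    Hypothetical helper. StratifiedKFold's splits depend only on the labels,
    the number of samples, and the seed, so calling this with the same labels
    and seed for upright and inverted features yields matched folds.
    """
    skf = StratifiedKFold(n_splits=n_folds, shuffle=True, random_state=seed)
    accs = []
    for train_idx, test_idx in skf.split(features, labels):
        clf = LinearSVC(max_iter=5000)  # assumed linear SVM, default C
        clf.fit(features[train_idx], labels[train_idx])
        accs.append(clf.score(features[test_idx], labels[test_idx]))
    return np.asarray(accs)


# Synthetic stand-in for penultimate-layer activations (NOT real data).
rng = np.random.default_rng(0)
n_classes, n_images, n_dims = 20, 10, 64
labels = np.repeat(np.arange(n_classes), n_images)
class_means = rng.normal(size=(n_classes, n_dims))
# Assumption for illustration only: upright features cluster tightly around
# their class mean, while inverted features are noisier (less category info).
upright = class_means[labels] + rng.normal(scale=0.5, size=(labels.size, n_dims))
inverted = class_means[labels] + rng.normal(scale=4.0, size=(labels.size, n_dims))

upright_accs = decode_per_fold(upright, labels)
inverted_accs = decode_per_fold(inverted, labels)

# Inversion effect: mean upright accuracy minus mean inverted accuracy.
inversion_effect = upright_accs.mean() - inverted_accs.mean()
# SEM across folds (scipy.stats.sem uses ddof=1 by default).
sem_up, sem_inv = stats.sem(upright_accs), stats.sem(inverted_accs)
# Two-sided paired t test, pairing upright and inverted accuracies by fold.
result = stats.ttest_rel(upright_accs, inverted_accs)

print(f"upright  {upright_accs.mean():.3f} +/- {sem_up:.3f} (SEM)")
print(f"inverted {inverted_accs.mean():.3f} +/- {sem_inv:.3f} (SEM)")
print(f"inversion effect {inversion_effect:.3f}, "
      f"t = {result.statistic:.2f}, p = {result.pvalue:.2g}")
```

Because the folds are matched, each fold contributes one upright/inverted pair to the paired test. This assumes that row i of `inverted` is the inverted version of the image in row i of `upright`. An "inverted face inversion" as in panel B would appear here as a negative `inversion_effect`.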