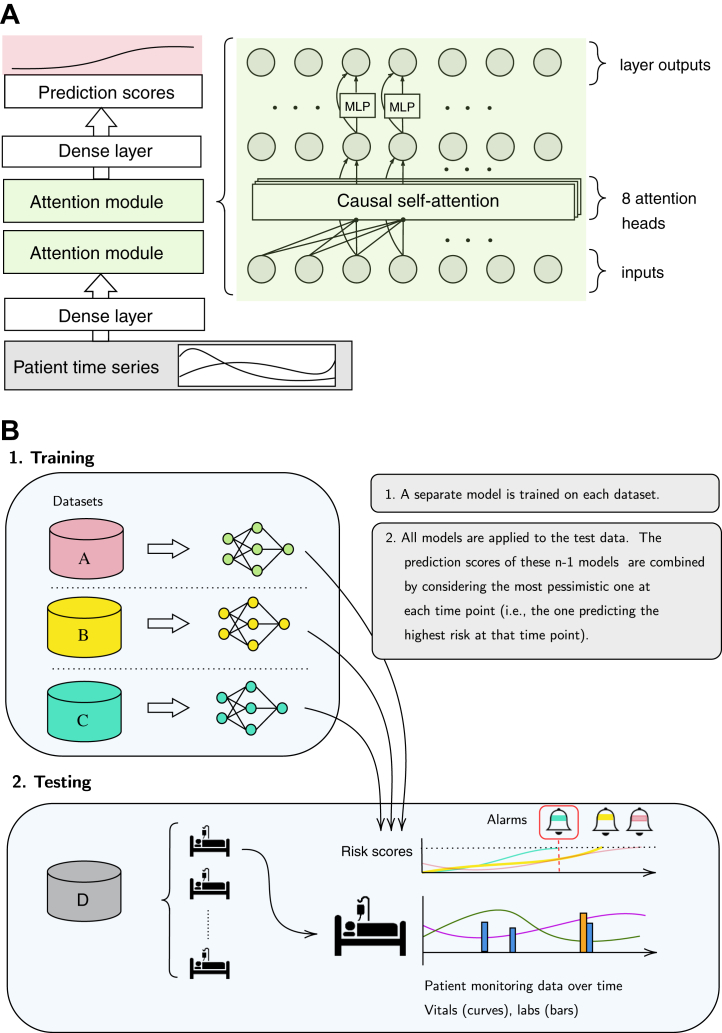

Fig. 2.

Illustration of the deep learning model and the pooling strategy. Panel A shows the deep self-attention model (attn). The input data stream is fed through an initial dense layer, followed by two attention modules, each comprising a causal self-attention layer and a multilayer perceptron (MLP). A final dense layer maps to a sequence of prediction scores. Panel B illustrates the pooling strategy. We combined information from n-1 (training) datasets to predict on the n-th (test) dataset. With n = 4 datasets, we first developed n-1 models, each optimised on a different training dataset. Second, we applied all of these models to the test dataset, resulting in n-1 prediction scores (i.e., predicted probabilities of sepsis) for each hour of each patient's stay in the test dataset. We then aggregated these n-1 predictions into a single risk score by taking the maximal value at each point in time. Hence, the pooled score raises an alarm as soon as the first (most pessimistic) model would raise one (dark green bell with red frame). We refer to this strategy as pooled predictions.
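The pooling step in Panel B can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the function names `pool_predictions` and `first_alarm_hour`, the example scores, and the alarm threshold of 0.5 are all hypothetical and chosen only to make the element-wise maximum concrete.

```python
def pool_predictions(scores_per_model):
    """Aggregate per-model risk scores by taking the maximum at each hour.

    scores_per_model: list of n-1 lists, one per model, each holding the
    predicted sepsis probability for every hour of one patient's stay.
    """
    lengths = {len(s) for s in scores_per_model}
    if len(lengths) != 1:
        raise ValueError("all models must score the same number of hours")
    # zip(*...) groups the scores of all models hour by hour.
    return [max(hourly) for hourly in zip(*scores_per_model)]


def first_alarm_hour(pooled, threshold):
    """Return the index of the first hour whose pooled score reaches the
    threshold, or None if no alarm is raised."""
    for hour, score in enumerate(pooled):
        if score >= threshold:
            return hour
    return None


# Hypothetical scores from n-1 = 3 models over 5 hours (illustrative only).
scores = [
    [0.10, 0.20, 0.30, 0.40, 0.50],
    [0.05, 0.60, 0.20, 0.30, 0.70],
    [0.15, 0.10, 0.25, 0.80, 0.40],
]

pooled = pool_predictions(scores)
alarm = first_alarm_hour(pooled, threshold=0.5)  # threshold is an assumption
print(pooled)  # [0.15, 0.6, 0.3, 0.8, 0.7]
print(alarm)   # 1
```

In this toy example only the second model exceeds the threshold at hour 1, yet the pooled score already triggers the alarm there, which mirrors the "first model to raise an alarm" behaviour described in the caption.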