Abstract

Given a Graph G = (V, E) and two vertices i, j ∈ V, we introduce Confluence(G, i, j), a vertex mesoscopic closeness measure based on short Random walks, which brings together vertices from the same overconnected region of the Graph G and separates vertices coming from two distinct overconnected regions. Confluence is a useful tool for defining a new Clustering quality function QConf(G, Γ) for a given Clustering Γ, and for defining a new heuristic, Starling, that finds a partitional Clustering of a Graph G intended to optimize QConf. We compare the accuracy of Starling with that of three state-of-the-art Graph Clustering methods: Spectral-Clustering, Louvain, and Infomap. These comparisons are done, on the one hand, with artificial Graphs, (a) Random Graphs and (b) a classical Graph Clustering Benchmark, and, on the other hand, with (c) Terrain-Graphs gathered from real data. We show that with (a), (b) and (c), Starling is always able to obtain equivalent or better accuracies than the three other methods. We also show that with the Benchmark (b), Starling obtains accuracies equivalent to, and sometimes even better than, those of an Oracle that would only know the expected overconnected regions from the Benchmark, ignoring the concretely constructed edges.

1 Introduction

Terrain-Graphs are real world Graphs that model data gathered by field work, in diverse fields such as sociology, linguistics, biology, or Graphs from the internet. Most Terrain-Graphs contrast with artificial Graphs (deterministic or Random) and share four similar properties [1–3]. They exhibit:

- p1: Not many edges: m is O(n log(n)) (where m is the number of edges and n the number of vertices);

- p2: Short paths (L, the average number of edges on the shortest path between two vertices, is low);

- p3: A high Clustering rate (many overconnected local subGraphs in a globally sparse Graph);

- p4: A heavy-tailed degree distribution (the distribution of the degrees of the vertices of the Graph can be approximated by a power law).

Clustering a Terrain-Graph consists of grouping together in Modules vertices that belong to the same overconnected region of the Graph (property p3), while keeping separate vertices that do not (property p1). These groups of overconnected vertices form an essential feature of the structures of most Terrain-Graphs. Their detection is central in a wide variety of fields, such as biology [4], sociology [5], linguistics [6] or computer science [7], for many tasks such as the grouping of the most diverse entities [8–13], pattern detection in data [14], link prediction [15], model training [16], label assignment [17], recommender Algorithms [18], data noise removal [19], or feature matching [20].

In section 2 we place in the context of the state of the art the methods with which we compare our results: in section 2.1 we present Spectral-Clustering, one of the most popular and efficient Graph Clustering methods; in section 2.2.1 Louvain, one of the most used Graph Clustering methods, which optimizes Modularity, the most popular Graph Clustering quality function; and in section 2.2.2 Infomap, one of the most efficient Graph Clustering methods, which optimizes the most elegant Graph Clustering quality function.

In section 3 we present Confluence, a vertex mesoscopic closeness measure, and a new Clustering quality function QConf based on Confluence. In section 4 we compare optimality for Modularity and optimality for QConf. In section 5 we propose to consider a Clustering method as a Binary Edge-Classifier By nodes Blocks (BECBB) trying to classify each pair of vertices into two classes: the edges and the non-edges. In section 6 we propose a heuristic, Starling, for optimizing the objective function QConf.

In section 7, we compare the accuracies, as BECBB, of Starling, Louvain, Infomap and Spectral-Clustering. These comparisons are done, on the one hand, with artificial Graphs, (a) Random Graphs and (b) a classical Graph Clustering Benchmark, and, on the other hand, with (c) Terrain-Graphs gathered from real data. We show that with (a), (b) and (c), Starling is always able to obtain equivalent or better accuracies than the three other methods. We also show that with the Benchmark (b), Starling obtains accuracies equivalent to, and sometimes even better than, those of an Oracle that would only know the expected overconnected regions from the Benchmark, ignoring the concretely constructed edges that are to be predicted by the Oracle as BECBB.

In section 8 we discuss the choice of parameters, and conclude in section 9.

2 Previous work

The literature on Graph Clustering is too extensive for a comprehensive review here. We concentrate on placing in the state of the art the methods to which we compare our results.

Let G = (V, E) be a Graph with n = |V| vertices and m = |E| edges.


Degree: The degree of a vertex i in G is dG(i) = |{j ∈ V : {i, j} ∈ E}|;

Module: A Module γ of G is a non-empty subset of the Graph’s vertices: γ ≠ ⌀ and γ ⊆ V;

Clustering: A Clustering Γ of G is a set of Modules of G such that ⋃γ∈Γ γ = V;

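Partitional Clustering: If $\forall \gamma_i, \gamma_j \in \Gamma$, $\gamma_i \neq \gamma_j \Rightarrow \gamma_i \cap \gamma_j = \emptyset$, then Γ is a Partitional Clustering of G, where Modules of G are not allowed to overlap. Given such a Γ we can define an equivalence relation on the set of vertices: $i \sim_\Gamma j \Leftrightarrow \exists \gamma \in \Gamma$ such that $i \in \gamma$ and $j \in \gamma$.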

2.1 Spectral Graph Clustering

Spectral Graph Clustering is one of the most popular and efficient Graph Clustering Algorithms. It generally uses the classical k-means Algorithm, whose original idea was proposed by Hugo Steinhaus [21]. Spectral Graph Clustering Algorithms work as follows (see [22]):

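Algorithm 1 SGC: Spectral Graph Clustering

Input:

G = (V, E) an undirected Graph with |V| = n

κ ∈ ℕ such that 0 < κ ≤ n (κ is the desired number of Modules).

Output: A Partitional Clustering of G with κ Modules

(1) Form the Adjacency Matrix $A = (a_{i,j}) \in \{0, 1\}^{n \times n}$ with $a_{i,j} = 1$ if $\{i, j\} \in E$ and $a_{i,j} = 0$ otherwise.

(2) Form the Degree Matrix $D = (d_{i,j}) \in \mathbb{N}^{n \times n}$ with $d_{i,i} = d_G(i)$ and $d_{i,j} = 0$ if $i \neq j$.

(3) Let the Normalized Graph Laplacian be $L = I - D^{-1}A$ (where I is the n × n identity matrix).

(4) Compute the first κ eigenvectors u1, …, uκ of L (see [23]).

(5) Let $U \in \mathbb{R}^{n \times \kappa}$ be the matrix containing the vectors u1, …, uκ as columns.

(6) For i = 1, …, n, let $y_i \in \mathbb{R}^{\kappa}$ be the vector corresponding to the i-th row of U.

(7) Cluster the points $(y_i)_{i = 1, \dots, n}$ with the k-means Algorithm into κ clusters C1, …, Cκ.

(8) For i = 1, …, κ, let $c_i = \{j \in V : y_j \in C_i\}$.

Return {c1, …, cκ}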
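The steps of Algorithm 1 can be illustrated in the special case κ = 2. The sketch below is not the SGC implementation used in this paper: it is a simplified variant, assuming a connected Graph without isolated vertices, in which the eigen-solver of step (4) is replaced by a power iteration and the k-means of step (7) is replaced by a split on the sign of the second eigenvector. The function name `spectral_bisection` and its parameters are hypothetical.

```python
def spectral_bisection(n, edges, iterations=200):
    """Split a connected graph into two modules (kappa = 2 variant of Algorithm 1)."""
    # Steps (1)-(2): adjacency lists and degrees.
    neighbours = [set() for _ in range(n)]
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)
    degree = [len(nb) for nb in neighbours]
    total_degree = sum(degree)

    # Steps (3)-(4): eigenvectors of L = I - D^{-1}A with the smallest eigenvalues
    # are eigenvectors of D^{-1}A with the largest ones. The first one is constant,
    # so we look for the second one by power iteration on the lazy walk
    # (I + D^{-1}A) / 2, removing the constant component at every step.
    x = [float(i) for i in range(n)]
    for _ in range(iterations):
        x = [0.5 * x[i] + 0.5 * sum(x[j] for j in neighbours[i]) / degree[i]
             for i in range(n)]
        mean = sum(degree[i] * x[i] for i in range(n)) / total_degree
        x = [value - mean for value in x]
        norm = max(abs(value) for value in x)
        x = [value / norm for value in x]

    # Steps (5)-(8): with a single coordinate per vertex, cluster by sign
    # (a stand-in for k-means with two clusters).
    left = {i for i in range(n) if x[i] < 0}
    return [left, set(range(n)) - left]


# Two triangles joined by the edge {2, 3}.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
print(spectral_bisection(6, edges))
```

On this small Graph the two triangles are separated, but the number of Modules still has to be fixed beforehand.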

Note that Spectral Graph Clustering, as in Algorithm 1, needs the number κ of groups of vertices to be known in advance as an Input. This is an advantage, because it gives a handle on the desired number of Modules, but how should one choose κ when the structure of the Graph is unknown? The choice of κ is fundamental and is not a simple problem (see [23–31]), and the quality of the results varies greatly depending on κ, which we confirm in section 7.2.1 with Figs 7 and 8.

2.2 When we don’t know the number of groups in advance

Let G = (V, E) be a Graph and Γ a Partitional Clustering of its vertices.

Clustering quality function: A Clustering quality function Q(G, Γ) is an ℝ-valued function designed to measure the adequacy of the Modules with the overconnected regions of Terrain-Graphs (property p3).

When we don’t know κ, the number of groups of vertices, in advance, given a Clustering quality function Q, in order to establish a good Partitional Clustering for a Graph G = (V, E), it would be sufficient to build all the possible partitionings of the set of vertices V, and to pick a partitioning Γ such that Q(G, Γ) is optimal. This method is however impractical, since the number of partitionings of a set of size n = |V| is equal to the nth Bell number, a sequence known to grow faster than exponentially [32]. Many Graph Clustering methods therefore consist in defining a heuristic that can find in a reasonable amount of time a Clustering Γ that tentatively optimises Q(G, Γ) for a given Clustering quality function Q.
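To give an idea of how quickly exhaustive search becomes infeasible, the following sketch computes the first Bell numbers with the classical Bell triangle. The function name `bell_numbers` is ours and is only illustrative.

```python
def bell_numbers(count):
    """Return the first `count` Bell numbers B_0, B_1, ... using the Bell triangle."""
    bells = []
    row = [1]
    for _ in range(count):
        bells.append(row[0])
        # The next row starts with the last entry of the current row; each further
        # entry is the sum of its left neighbour and the entry above that neighbour.
        next_row = [row[-1]]
        for value in row:
            next_row.append(next_row[-1] + value)
        row = next_row
    return bells


print(bell_numbers(6))       # [1, 1, 2, 5, 15, 52]
print(bell_numbers(11)[10])  # 115975 partitionings for only 10 vertices
```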

With methods optimizing a quality function Q, we do not need to know κ the number of vertices groups in advance in the input, because κ is then a direct consequence of the quality function Q: κ will be automatically built by the optimisation of Q.

2.2.1 Louvain

The Louvain method, proposed in 2008 by Blondel, Guillaume, Lambiotte, and Lefebvre in [33], is a heuristic for tentatively maximizing the quality function Modularity proposed in 2004 by Newman and Girvan [34]. The modularity of a Partitional Clustering for a Graph G = (V, E) with m = |E| edges is equal to the difference between the proportion of links internal to Modules of the Clustering, and the same quantity expected in a null model, where no community structure is expected. The null model is a Random Graph Gnull with the same number of vertices and edges, as well as the same distribution of degrees as G, where the probability of having an edge between two vertices x and y is equal to dG(x)·dG(y)/2m.

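Let G = (V, E) be a Graph with m edges and Γ a partitioning of V. The definition of modularity given by Newman and Girvan in [34] is equivalent to the one we propose here in Formula 1:

$$Modularity(G, \Gamma) = \frac{1}{2m} \sum_{\gamma \in \Gamma} \sum_{i, j \in \gamma} \big(P_{edge}(G, i, j) - P_{edge}(G_{null}, i, j)\big) \tag{1}$$

Where Pedge(G, x, y) is a symmetrical vertex closeness measure equal to the probability of {x, y} being an edge of G, that is:

$$P_{edge}(G, x, y) = \begin{cases} 1 & \text{if } \{x, y\} \in E \\ 0 & \text{otherwise} \end{cases} \tag{2}$$

$$P_{edge}(G_{null}, x, y) = \frac{d_G(x) \cdot d_G(y)}{2m} \tag{3}$$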

In Eq 1, the first term is purely conventional, so that the modularity values all live in the [−1, 1] interval, but plays no role when maximizing modularity, since it is constant for a given Graph G.

We then define as Newman and Girvan’s quality function, to be maximized:

| (4) |

| (5) |

For Louvain, a good Partitional Clustering Γ as per Eq 5 is one that groups in the same Module vertices that are linked (especially ones with low degrees, but also to a lesser extent ones with high degrees), while avoiding as much as possible the grouping of non-linked vertices (especially ones with high degrees, but to a lesser extent ones with low degrees).
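As an illustration, the following sketch computes the standard Newman and Girvan modularity of a partitioning on a simple Graph (no self-loops), summing over ordered pairs of vertices inside each Module the difference between the observed adjacency and the null-model probability dG(i)·dG(j)/2m. The function name `modularity` and the toy Graph are ours; this is not the code used for the experiments.

```python
def modularity(edges, modules):
    """Newman-Girvan modularity of a partitioning of a simple undirected graph."""
    m = len(edges)
    degree = {}
    for u, v in edges:
        degree[u] = degree.get(u, 0) + 1
        degree[v] = degree.get(v, 0) + 1
    edge_set = {frozenset(e) for e in edges}

    total = 0.0
    for module in modules:
        for i in module:
            for j in module:  # ordered pairs, including i == j
                linked = 1.0 if frozenset((i, j)) in edge_set else 0.0
                expected = degree[i] * degree[j] / (2 * m)
                total += linked - expected
    return total / (2 * m)


# Two triangles joined by the edge {2, 3}.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
print(modularity(edges, [{0, 1, 2}, {3, 4, 5}]))  # 5/14, about 0.357
print(modularity(edges, [{0, 1, 2, 3, 4, 5}]))    # 0.0 for a single Module
```

Grouping the two linked low-degree vertices of each triangle is rewarded, while putting all six vertices together brings no gain over the null model.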

However, several authors [35, 36] showed that optimizing Modularity leads to merging small Modules into larger ones, even when those small Modules are well defined and weakly connected to one another. To address this problem, some authors [37, 38] defined multiresolution variants of Modularity, adding a resolution parameter to control the size of the Modules.

For instance [37] introduces a parameter in Eq 5:

| (6) |

where λ is a resolution parameter: the higher the resolution λ, the smaller the Modules get.

Nevertheless, in [39], the authors show that “… multiresolution Modularity suffers from two opposite coexisting problems: the tendency to merge small subGraphs, which dominates when the resolution is low; the tendency to split large subGraphs, which dominates when the resolution is high. In benchmark networks with heterogeneous distributions of cluster sizes, the simultaneous elimination of both biases is not possible and multiresolution Modularity is not capable to recover the planted community structure, not even when it is pronounced and easily detectable by other methods, for any value of the resolution parameter. This holds for other multiresolution techniques and it is likely to be a general problem of methods based on global optimization.

[…] real networks are characterized by the coexistence of clusters of very different sizes, whose distributions are quite well described by power laws [40, 41]. Therefore there is no characteristic cluster size and tuning a resolution parameter may not help.”

The Louvain method (https://github.com/10XGenomics/louvain) is non-deterministic, i.e. each time Louvain is run on the same Graph, the results may vary slightly. In the rest of this paper, all the results concerning the Louvain method on a given Graph are the result of a single run on this Graph.

2.2.2 Infomap

The Infomap method is a heuristic for tentatively maximizing the quality function described in 2008 by Rosvall and Bergstrom [42]. This quality function is based on the minimum description length principle [43]. It consists in measuring the compression ratio that a given partitioning Γ provides for describing the trajectory of a Random walk on a Graph. The trajectory description happens on two levels. When the walker enters a Module, we write down its name. We then write the vertices that the walker visits, with a notation local to the Module, so that an identical short name may be used for different vertices from different Modules. A concise description of the trajectory, with a good compression ratio, is therefore possible when the Modules of Γ are such that the walker tends to stay in them, which corresponds to the idea that the walker is trapped when it enters a good Module, which is supposed to be an overconnected region that is only weakly connected to other Modules.

For Infomap, a good Partitional Clustering Γ is then one that groups in the same Module vertices allowing a good compression ratio for describing the trajectory of a Random walker on G.

However, as we will see in section 7, Infomap only identifies a single Module when the overconnected regions are only slightly pronounced.

The Infomap method (https://github.com/mapequation/) is non-deterministic. In the rest of this paper, all the results concerning the Infomap method on a given Graph are the result of a single run on this Graph.

3 Confluence, a vertices mesoscopic closeness measure

The definition of Confluence proposed in this section is an adaptation of the one proposed in [44] to compare the structures of two Terrain-Graphs.

In Eq 5, with regards to a Graph G:

Pedge(G, i, j) is a local (microscopic) vertices closeness measure relative to G;

dG(i)·dG(j)/2m is a global (macroscopic) vertices closeness measure relative to G.

To avoid the resolution limits of Modularity described in [35–39], we introduce here Confluence(G, i, j), an intermediate mesoscopic vertices closeness measure relative to a Graph G, that we define below.

If G = (V, E) is a reflexive and undirected Graph, let us imagine a walker wandering on the Graph G: at time t, the walker is on one vertex i ∈ V; at time t + 1, the walker can reach any neighbouring vertex of i, with a uniform probability. This process is called a simple Random walk [45]. It can be defined by a Markov chain on V with an n × n transition Matrix [G]:

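$$[G] = (g_{i,j})_{i,j \in V} \quad \text{with} \quad g_{i,j} = \begin{cases} \dfrac{1}{d_G(i)} & \text{if } \{i, j\} \in E \\ 0 & \text{otherwise} \end{cases} \tag{7}$$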

Since G is reflexive, each vertex has at least one neighbour (itself) and [G] is therefore well defined. Furthermore, by construction, [G] is a stochastic Matrix: ∀i ∈ V, ∑j∈V gi,j = 1. The probability of a walker starting on vertex i and reaching vertex j after t steps is:

$$P^t_G(i \rightsquigarrow j) = ([G]^t)_{i,j} \tag{8}$$
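The simple Random walk described above can be sketched as follows: every vertex receives a self-loop (reflexive Graph), each row of the transition Matrix spreads a uniform probability over the neighbours, and the probability of reaching j from i after t steps is obtained by applying the Matrix t times to the starting distribution. The function names are ours and purely illustrative.

```python
def transition_matrix(n, edges):
    """Row-stochastic matrix of the simple random walk on the reflexive graph."""
    neighbours = [{i} for i in range(n)]  # self-loop on every vertex
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)
    return [[1.0 / len(neighbours[i]) if j in neighbours[i] else 0.0
             for j in range(n)]
            for i in range(n)]


def walk_probabilities(matrix, start, steps):
    """Distribution of the walker after `steps` steps when starting on `start`."""
    n = len(matrix)
    dist = [1.0 if k == start else 0.0 for k in range(n)]
    for _ in range(steps):
        dist = [sum(dist[k] * matrix[k][j] for k in range(n)) for j in range(n)]
    return dist


# Path 0 - 1 - 2, made reflexive by transition_matrix.
matrix = transition_matrix(3, [(0, 1), (1, 2)])
print(walk_probabilities(matrix, 0, 1))  # [0.5, 0.5, 0.0]
print(walk_probabilities(matrix, 0, 2))  # vertex 2 is now reachable with probability 1/6
```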

Proposition 1 Let G = (V, E) be a reflexive Graph with m edges, and Gnull = (V, Enull) its null model such that the probability of the existence of a link between two vertices i and j is dG(i)·dG(j)/2m.

| (9) |

Proof by induction on t:

(a) True for t = 1:

(b) If true for t then true for t + 1:

(a) & (b) ⇒ 9

On a Graph G = (V, E) the trajectory of a Random walker is completely governed by the topology of the Graph in the vicinity of the starting node: after t steps, any vertex j located at a distance of t links or less can be reached. The probability of this event depends on the number of paths between i and j, and on the structure of the Graph around the intermediary vertices along those paths. The more short paths exist between vertices i and j, the higher the probability of reaching j from i.

On the Graph Gnull the trajectory of a Random walker is only governed by the degrees of the vertices i and j, and no longer by the topology of the Graph in the vicinity of these two nodes.

We want to consider as “close” each pair of vertices {i, j} having a probability of reaching j from i after a short Random walk in G, greater than the probability of reaching j from i in Gnull. We therefore define the t-confluence Conft(G, i, j) between two vertices i, j on a Graph G as follows:

| (10) |

Proposition 2 Let G = (V, E) be a reflexive Graph with m edges, and Gnull its null model such that the probability of the existence of a link between two vertices i and j is dG(i)·dG(j)/2m.

| (11) |

Proof:

To prove that Conft(G, ⋅, ⋅) is symmetric, we first need to prove proposition 3.

Proposition 3 Let G = (V, E) be a reflexive Graph.

| (12) |

Proof by induction on t:

(a) True for t = 1:

(b) If true for t then true for t + 1:

(a) & (b) ⇒ 12

Proposition 4 Let G = (V, E) be a reflexive Graph.

| (13) |

Proof:

Most Terrain-Graphs exhibit the properties p2 (short paths) and p3 (high Clustering rate). With a classic distance such as the shortest path between two vertices, all vertices would be close to each other in a Terrain-Graph (because of property p2). On the contrary, Confluence allows us to identify vertices living in the same overconnected region of G (property p3):

- If i, j are in the same overconnected region: Conft(G, i, j) > 0 (14)

- If i, j are in two distinct overconnected regions: Conft(G, i, j) < 0 (15)

Where the notion of region varies according to t:

When t = 1: Confluence is a microscopic vertices closeness measure relative to G. The notion of region in this case has a radius = 1, it is the notion of neighborhood. Confluence is then independent of the intermediate structures between the two vertices i and j in G;

When 1 < t < ∞: Confluence is a mesoscopic vertices closeness measure relative to G. The notion of region in this case has a radius t with 1 < t < ∞; it is no longer a local notion like the notion of neighborhood. Confluence is then sensitive to the t-intermediate structures (t-mesoscopicity) between the two vertices i and j in G (see 14 and 15);

When t → ∞: limt→∞ Conft(G, i, j) = 0, and Confluence is no longer sensitive to any structure in G. Indeed, we can prove with the Perron-Frobenius theorem [46] that if G is reflexive and strongly connected, then the Matrix [G] is ergodic [47], so the probability of reaching j from i after t steps in G converges, when t → ∞, to the probability of reaching j from i in Gnull. So by definition 10 and proposition 1: limt→∞ Conft(G, i, j) = 0.

Confluence actually defines an infinity of mesoscopic vertex closeness measures, one for each Random walk of length 1 < t < ∞. For clarity, in the rest of this paper, we set t = 3 and define Conf(G, i, j) = Conf3(G, i, j).
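The behaviour described above can be explored with the following sketch. It rests on two assumptions that we state explicitly: the comparison between the two probabilities is taken as their normalized difference (value in [−1, 1], positive when the walk in G is more likely to reach j than in the null model), and the null probability of reaching j is taken as the degree of j in the reflexive Graph divided by the sum of all degrees. The function name `confluence` is hypothetical and the case i = j is not treated specially.

```python
def confluence(n, edges, i, j, t=3):
    """Normalized difference between the t-step walk probability in G and in its null model."""
    neighbours = [{k} for k in range(n)]  # reflexive graph: self-loop on every vertex
    for u, v in edges:
        neighbours[u].add(v)
        neighbours[v].add(u)
    degree = [len(nb) for nb in neighbours]

    # Distribution of the walker after t steps when starting on i.
    dist = [1.0 if k == i else 0.0 for k in range(n)]
    for _ in range(t):
        nxt = [0.0] * n
        for k in range(n):
            share = dist[k] / degree[k]
            for nb in neighbours[k]:
                nxt[nb] += share
        dist = nxt

    p_graph = dist[j]
    p_null = degree[j] / sum(degree)  # assumption: degree-proportional null probability
    return (p_graph - p_null) / (p_graph + p_null)


# Two triangles joined by the edge {2, 3}.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
print(confluence(6, edges, 0, 1))  # positive: same triangle
print(confluence(6, edges, 0, 5))  # negative: opposite triangles
```

With these assumptions the measure is symmetric, because the walk on an undirected Graph is reversible with respect to the degrees.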

3.1 Using a mesoscopic scale with Confluence for a new Clustering quality function

We propose here QConf, a new Clustering quality function, which introduces a mesoscopic scale through Confluence with a resolution parameter τ ∈ [0, 1] to promote density of the Modules:

| (16) |

| (17) |

| (18) |

In Eq 16, with regard to a Graph G, the term Pedge(G, i, j) is a local (microscopic) vertices closeness measure, and the term dG(i)·dG(j)/2m is a global (macroscopic) vertices closeness measure, whereas in Eq 17 the term Conf(G, i, j) is an intermediate local/global (mesoscopic) vertices closeness measure.

Therefore in Eq 18, QConf gives a weight of τ to the microscopic and macroscopic structure of Γ with regards to the Graph G and a weight of (1 − τ) to the mesoscopic structure. The closer the τ ∈ [0, 1] parameter is to 1, the less Confluence is taken into account.

4 Optimality

A Partitional Clustering Δ is optimal for a quality function Q iff for every partitioning Γ of V, Q(G, Δ) ≥ Q(G, Γ). Computing a Δ that maximizes Modularity is NP-hard [48], and the same holds for computing a Clustering that maximizes QConf. However, when the number of vertices of a Graph G = (V, E) is small, the problem of maximizing the modularity can be turned into a reasonably tractable Integer Linear Program (see [48]): we define n² decision variables Xij ∈ {0, 1}, one for each pair of vertices i, j ∈ V. The key idea is that we can build an equivalence relation on V (i ∼ j iff Xij = 1) and therefore a partitioning of V. To guarantee that the decision variables give rise to an equivalence relation, they must satisfy the following constraints:

Reflexivity: ∀i ∈ V, Xii = 1;

Symmetry: ∀i, j ∈ V : Xij = Xji;

Transitivity: ∀i, j, k ∈ V : Xij + Xjk − Xik ≤ 1.

With the following objective functions to maximize:

| (19) |

| (20) |

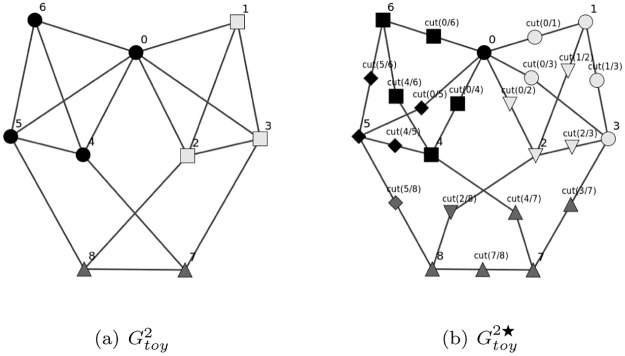

The method SGC described in Algorithm 1 does not optimize a quality function, and the quality function used by Infomap cannot be expressed as a sum, over the Modules γ ∈ Γ, of the similarities sim(G, i, j) of the pairs i, j ∈ γ, with sim(G, ., .) an ℝ-valued symmetric similarity measure between vertices of G. We therefore left these two methods out of our study of optimality, being unable to define a corresponding objective function to maximize, as was done with formulas 19 and 20. In Fig 1, on a small artificial Graph , we compare the optimal Clusterings , , and (with the Graph , if 0.50 < x < 1 then ) where:

Fig 1. Optimal Clusterings for and QConf on .

If two vertices have the same color, then they are in the same Module, with 〈P, R, F〉 where P = Prec(Γ, E), R = Rec(Γ, E), F = Fscore(Γ, E).

, , ;

, , ;

, , , ;

, , , , .

We can already notice that growing τ does not imply a simple splitting of the Modules (an approach that would be hierarchical), which we can see by going from τ = 0.25 to τ = 0.50 where there is no such that .

5 Binary edge-classifier by nodes blocks

What metric should we use to estimate the accuracy of the four Clusterings in Fig 1? Much literature addresses this fundamental question [49–51]. Here we propose the definition of Binary Edge-Classifier By nodes Blocks (BECBB). To measure the quality of a Clustering Γ on a Graph G = (V, E), an intuitive, simple and efficient approach is to consider a Clustering Γ (with or without overlaps) as a BECBB trying to predict the edges of a Graph: classifying each pair of vertices into two classes, PositiveEdge and NegativeEdge.

Definition: A BECBB is a binary classifier of pairs of nodes trying to predict the edges of a Graph. It is not allowed to give two complementary sets of pairs of nodes, one for its predictions as PositiveEdge and the complementary set for its predictions as NegativeEdge, but is forced to provide its predictions in the form of nodes blocks Bi ⊆ V: classifying as PositiveEdge a pair {x, y} if ∃i such that x, y ∈ Bi, else classifying it as NegativeEdge. If blocks are allowed to overlap then it is a BECBBOV, else it is a BECBBNO.

Let Γ be a Clustering (with or without overlaps) of a Graph G = (V, E).

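$$\widehat{E}_\Gamma = \{\{x, y\} : x, y \in V,\ x \neq y,\ \exists \gamma \in \Gamma \text{ such that } x, y \in \gamma\} \tag{21}$$

$TP = \widehat{E}_\Gamma \cap E$ are the True Positives of Γ according to E;

$TN = \overline{\widehat{E}_\Gamma} \cap \overline{E}$ are the True Negatives (complements taken in the set of pairs of distinct vertices);

$FP = \widehat{E}_\Gamma \setminus E$ are the False Positives;

$FN = E \setminus \widehat{E}_\Gamma$ are the False Negatives.

We can then measure the accuracy of Γ with the classical measures in diagnostic binary Classification [52, 53]:

$$Prec(\Gamma, E) = \frac{|TP|}{|TP| + |FP|} \tag{22}$$

$$Rec(\Gamma, E) = \frac{|TP|}{|TP| + |FN|} \tag{23}$$

$$Fscore(\Gamma, E) = \frac{2 \cdot Prec(\Gamma, E) \cdot Rec(\Gamma, E)}{Prec(\Gamma, E) + Rec(\Gamma, E)} \tag{24}$$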

We can use these three measures indifferently on Clusterings with or without overlaps, because the Eq 21 makes sense with Clusterings with or without overlaps.
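The evaluation of a Clustering as a BECBB can be sketched as follows, assuming a simple Graph (no self-loops): every pair of distinct vertices sharing at least one block is predicted as PositiveEdge, and Precision, Recall and their harmonic mean are computed against the edges. The function name `becbb_scores` is ours; blocks may overlap.

```python
from itertools import combinations


def becbb_scores(edges, blocks):
    """Precision, Recall and F-score of a clustering seen as an edge classifier."""
    edge_set = {frozenset(e) for e in edges}
    predicted = set()
    for block in blocks:
        for x, y in combinations(sorted(block), 2):
            predicted.add(frozenset((x, y)))

    tp = len(predicted & edge_set)  # pairs in a block that are edges
    fp = len(predicted - edge_set)  # pairs in a block that are not edges
    fn = len(edge_set - predicted)  # edges not covered by any block
    precision = tp / (tp + fp) if predicted else 0.0
    recall = tp / (tp + fn) if edge_set else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    return precision, recall, 2 * precision * recall / (precision + recall)


# Two triangles joined by the edge {2, 3}.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
print(becbb_scores(edges, [{0, 1, 2}, {3, 4, 5}]))  # Precision 1, Recall 6/7
print(becbb_scores(edges, [{0, 1, 2, 3, 4, 5}]))    # Precision 7/15, Recall 1
```

The two calls show the tension between Precision and Recall for blocks without overlaps: merging everything recovers the missing edge but adds eight non-edges.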

BECBBOV: For any Graph G = (V, E), the set of all edges Γ = E can be considered as a BECBBOV. Then Γ = E is optimal because: Prec(Γ = E, E) = 1 (Γ does not include any non-edge in its Modules) and Rec(Γ = E, E) = 1 (Γ includes all the edges in its Modules). It is also true for Γ = the set of all the maximal cliques.

BECBBNO: The metric Precision measures the ability of a method not to include non-edges in the Modules it returns, whereas the metric Recall measures its ability to include the edges in the Modules it returns. For a BECBBNO, a good Precision and a good Recall are two abilities that oppose each other (because a BECBBNO is forced to provide its classifications in the form of blocks Bi without overlaps) but are both desirable for a good Clustering method. The whole point of a good Clustering method, as BECBBNO, is therefore to favor Precision without disfavoring Recall too much, or to favor Recall without disfavoring Precision too much, which is what the metric Fscore measures (it is the harmonic mean of Precision and Recall).

5.1 Properties

As shown in [51], it is better that a metric σ(Γ), to estimate the accuracy of a Clustering Γ, has the Homogeneity and Completeness [50] properties (see Fig 2 inspired by Figs 1 and 3 in [51]).

Fig 2. Homogeneity and Completeness: {x, y} ∈ E iff x and y have same color.

Fig 3. Binary classifiers of nodes pairs by nodes blocks.

- A metric σ to estimate the accuracy of Clusterings has the Homogeneity property iff: (25)

- A metric σ to estimate the accuracy of Clusterings has the Completeness property iff: (26)

It is clear that the metric has these two properties, for any Clustering Γ with or without overlaps. Moreover the metric is independent of any extrinsic expectation to the Graph, we only need to trust the Graph itself. It is a good objective way to evaluate and compare Clusterings. So, to estimate the accuracy of Clustering methods Methodi and compare them on a Graph G = (V, E), we will use the three metrics:

Precision(Methodi(G = (V, E)), E): Measuring the ability of the Methodi not to include non-edges in the Modules it returns;

Recall(Methodi(G = (V, E)), E): Measuring its ability to include the edges in the Modules it returns;

Fscore(Methodi(G = (V, E)), E): Measuring the harmonic mean of its Precision and Recall.

Fig 1 shows the accuracy of , , and considering these Clusterings as BECBB.

6 Starling, a heuristic for maximizing QConf

In this section we describe Starling, a heuristic for tentatively maximizing QConf. Confluence gives us an ordering on the edges of the Graph G = (V, E): sorting the edges {i, j} ∈ E by descending Confluence forms the basis of a new Module merging strategy, described in Algorithm 2, intended to optimize QConf.

Algorithm 2 Starling: Graph Partitional Clustering

Input:

G = (V, E) an undirected Graph

τ ∈ [0, 1]

Output: Cout a Partitional Clustering of G

for i ∈ V do ▸ Initialization

modi ↢ {i} ▸ One vertex per Module

Mi ↢ i ▸ Vertex i is in Module i

ϒ ↢ ⌀

While ϒ ≠ E do

{i, j} ↢ an edge of E ∖ ϒ with the highest Conf(G, i, j) ▸ Line 1: Strategy based on Confluence

ϒ ↢ ϒ ∪ {{i, j}}

if Mi ≠ Mj then ▸ modi and modj have not yet been merged together

if 0 ≤ profit then

modi ↢ modi ∪ modj ▸ modj merge with modi in modi

modj ↢ ⌀ ▸ modj is dead

for k ∈ V do ▸ Updating the membership list

if Mk = j ▸ Vertex k was in modj

Mk ↢ i ▸ Vertex k is now in modi

Cout ↢ ⌀

for i ∈ V do

if modi ≠ ⌀ ▸ modi is alive

Cout ↢ Cout ∪ {modi}

return Cout

Different edges {i1, j1} ∈ E and {i2, j2} ∈ E might happen to have the exact same Confluence value (Conf(G, i1, j1) = Conf(G, i2, j2)), making the process (in Line 1) non-deterministic in general, because of its sensitivity to the order in which the edges with identical Confluence values are processed. A simple solution to this problem is to sort edges by first comparing their Confluence values and then using the lexicographic order on the words i1j1 and i2j2 when Confluence values are strictly identical.
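The merging strategy can be sketched in Python for any quality function that is a sum, over the Modules, of a symmetric similarity between the vertices of each Module. This is not our C++ implementation: the function name `greedy_merge`, the pluggable `sim` argument and the definition of the profit as the sum of the similarities between the two Modules (the cross terms that a merge adds to such a quality function) are assumptions made for the illustration. The example uses the modularity term as similarity, both for ordering the edges and for the profit, instead of Confluence.

```python
def greedy_merge(n, edges, sim):
    """Greedy module merging driven by edges sorted by descending similarity."""
    # Descending similarity, ties broken by the lexicographic order of the pair.
    ordered = sorted((tuple(sorted(e)) for e in edges),
                     key=lambda e: (-sim(*e), e))
    module_of = list(range(n))            # vertex -> label of its module
    members = {i: {i} for i in range(n)}  # label of a live module -> its vertices
    for i, j in ordered:
        a, b = module_of[i], module_of[j]
        if a == b:
            continue  # already merged together
        # Merging a and b only adds the similarities between the two modules.
        profit = sum(sim(x, y) for x in members[a] for y in members[b])
        if profit >= 0:
            for k in members[b]:
                module_of[k] = a
            members[a] |= members.pop(b)
    return sorted(sorted(module) for module in members.values())


# Two triangles joined by the edge {2, 3}.
edges = [(0, 1), (0, 2), (1, 2), (2, 3), (3, 4), (3, 5), (4, 5)]
m = len(edges)
edge_set = {frozenset(e) for e in edges}
degree = [0] * 6
for u, v in edges:
    degree[u] += 1
    degree[v] += 1


def modularity_term(x, y):
    """Hypothetical similarity for the example: adjacency minus null-model probability."""
    linked = 1.0 if frozenset((x, y)) in edge_set else 0.0
    return linked - degree[x] * degree[y] / (2 * m)


print(greedy_merge(6, edges, modularity_term))  # [[0, 1, 2], [3, 4, 5]]
```

The last edge processed, {2, 3}, is rejected because merging the two triangles would add more expected links than observed ones.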

We coded this Algorithm in C++ and in the following we used this program to analyze Starling’s results. With , find the optimal Clusterings for : , , .

7 Performance

In this section we estimate the accuracy of Starling and compare it with the methods Louvain, Infomap and SGC. We can estimate the accuracy of Clustering Algorithms on:

Real Graphs: A set of Terrain-Graphs built from real data;

A Benchmark: A set of computer-generated Graphs together with its gold standard, i.e. its expected Modules as expected overconnected regions.

Because we do not need to know κ the number of vertex groups in advance in the input of Louvain and Infomap, whereas we need it with SGC, for greater clarity, we compare on the one hand Starling versus Louvain, and Infomap, and on the other hand Starling versus SGC.

7.1 Starling versus Louvain and Infomap

7.1.1 Performance on Real Terrain-Graphs

In this section we estimate the accuracy of Algorithms with three Terrain-Graphs:

GEmail: The Graph was generated using email data from a large European research institution [54, 55]. The Graph contains an undirected edge {i, j} if person i sent person j at least one email (https://snap.stanford.edu/data/email-Eu-core.html).

GDBLP: The DBLP computer science bibliography provides a comprehensive list of research papers in computer science [56]. Two authors are connected if they have published at least one paper together (https://snap.stanford.edu/data/com-DBLP.html).

GAmazon: The Graph was collected by crawling the Amazon website. It is based on the Customers Who Bought This Item Also Bought feature of the Amazon website [56]. If a product i is frequently co-purchased with product j, the Graph contains an undirected edge {i, j} (https://snap.stanford.edu/data/com-Amazon.html).

Table 1 gives the pedigrees of these Terrain-Graphs and Table 2 shows the accuracies of Louvain, Infomap and Starling, considering each Clustering as a BECBB. We also show the number of Modules, the size of the biggest Module and the computation time in seconds (all times are based on computations with a Quad Core Intel i5 and 32 GB of RAM).

Table 1. Pedigrees: n and m are the number of vertices and edges, 〈k〉 is the mean degree of vertices, C is the Clustering coefficient of the Graph, Llcc is the average shortest path length between any two nodes of the largest connected component (largest subGraph in which there exist at least one path between any two nodes) and nlcc the number of vertices of this component, λ is the coefficient of the best fitting power law of the degree distribution and r2 is the correlation coefficient of the fit, measuring how well the data is modelled by the power law.

| Graph | n | m | 〈k〉 | C | Llcc(nlcc) | λ(r2) |
|---|---|---|---|---|---|---|
| GEmail | 1005 | 16064 | 31.97 | 0.27 | 2.59(986) | −1.02(0.81) |
| GDBLP | 317080 | 1049866 | 6.62 | 0.31 | 6.79(317080) | −2.71(0.95) |
| GAmazon | 334863 | 925872 | 5.53 | 0.21 | 11.95(334863) | −2.81(0.93) |

Table 2. Graph Clustering as BECBBs: With 〈P, R, F〉 where P = Prec(Γ, E), R = Rec(Γ, E), F = Fscore(Γ, E), with [N, M] where N is the number of Modules of Γ and M the size of the biggest Module of Γ, and with (T) the computation time of Γ in seconds.

| Method | G = GEmail | G = GDBLP | G = GAmazon |
|---|---|---|---|
| Louvain | 〈0.11, 0.62, 0.18〉 | 〈0.00, 0.84, 0.00〉 | 〈0.00, 0.94, 0.00〉 |
| | [26, 334] (0s) | [212, 22422] (12s) | [237, 12810] (6s) |
| Infomap | 〈0.13, 0.60, 0.21〉 | 〈0.13, 0.72, 0.22〉 | 〈0.11, 0.82, 0.20〉 |
| | [43, 319] (0s) | [16997, 811] (2165s) | [17265, 380] (1567s) |
| Starling0.000 | 〈0.16, 0.57, 0.24〉 | 〈0.08, 0.70, 0.15〉 | 〈0.10, 0.80, 0.18〉 |
| | [63, 213] (9s) | [20044, 433] (752s) | [20479, 486] (160s) |
| Starling0.125 | 〈0.18, 0.51, 0.27〉 | 〈0.10, 0.70, 0.17〉 | 〈0.11, 0.80, 0.20〉 |
| | [72, 140] (5s) | [21809, 396] (714s) | [22400, 435] (147s) |
| Starling0.250 | 〈0.26, 0.45, 0.33〉 | 〈0.12, 0.69, 0.20〉 | 〈0.13, 0.78, 0.23〉 |
| | [102, 98] (2s) | [24852, 296] (584s) | [25906, 374] (134s) |
| Starling0.375 | 〈0.36, 0.40, 0.37〉 | 〈0.16, 0.67, 0.26〉 | 〈0.17, 0.76, 0.28〉 |
| | [154, 84] (1s) | [29545, 252] (465s) | [31374, 308] (121s) |
| Starling0.500 | 〈0.49, 0.35, 0.41〉 | 〈0.25, 0.63, 0.36〉 | 〈0.26, 0.72, 0.38〉 |
| | [235, 72] (1s) | [40905, 171] (347s) | [44597, 199] (108s) |
| Starling0.625 | 〈0.63, 0.29, 0.40〉 | 〈0.61, 0.52, 0.56〉 | 〈0.52, 0.59, 0.55〉 |
| | [284, 52] (1s) | [87286, 116] (298s) | [80104, 32] (101s) |
| Starling0.750 | 〈0.69, 0.27, 0.38〉 | 〈0.83, 0.45, 0.58〉 | 〈0.70, 0.49, 0.57〉 |
| | [319, 47] (1s) | [121392, 116] (252s) | [115637, 19] (78s) |
| Starling0.875 | 〈0.75, 0.23, 0.35〉 | 〈0.87, 0.43, 0.58〉 | 〈0.75, 0.45, 0.56〉 |
| | [327, 30] (0s) | [124338, 113] (246s) | [121999, 16] (74s) |
| Starling1.000 | 〈0.77, 0.22, 0.35〉 | 〈0.94, 0.40, 0.56〉 | 〈0.86, 0.38, 0.52〉 |
| | [378, 30] (0s) | [142371, 113] (239s) | [153712, 13] (68s) |

Louvain: This is the fastest method, however its Precision is small, producing very few Modules, one of which is very large;

Infomap: It gets a good Fscore, higher than that of Louvain.

Starlingτ: ∃τ ∈ [0, 1] such that Starling(G, τ) gets the highest Fscore. By default τ = 0.25 is a good compromise to obtain at the same time a good Precision and a good Recall. If we want to promote Recall (more edges in Modules) then we can decrease τ, and if we want to promote Precision (fewer non-edges in Modules) then we can increase τ.

7.1.2 Performance on BenchmarkER

BenchmarkER is the class of Random Graphs studied by Erdős and Rényi [57, 58] with parameters N, the number of vertices, and p, the connection probability between two vertices. Random Graphs do not have a meaningful group structure, and they can be used to test whether the Algorithms are able to recognize the absence of Modules. Therefore, we set N = 128, and we study the accuracy of the methods with BenchmarkER according to p.
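Such Random Graphs are straightforward to generate: each of the N(N − 1)/2 pairs of vertices becomes an edge independently with probability p. The sketch below is only illustrative (the function names and the `seed` parameter are ours) and is not the generator used for the experiments.

```python
import random
from itertools import combinations


def erdos_renyi(n, p, seed=None):
    """Edges of a random graph where each pair of vertices is linked with probability p."""
    rng = random.Random(seed)
    return [(i, j) for i, j in combinations(range(n), 2) if rng.random() < p]


def density(n, edges):
    """Proportion of the pairs of distinct vertices that are edges."""
    return len(edges) / (n * (n - 1) / 2)


edges = erdos_renyi(128, 0.25, seed=7)
print(len(edges), density(128, edges))  # the density is close to p on average
```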

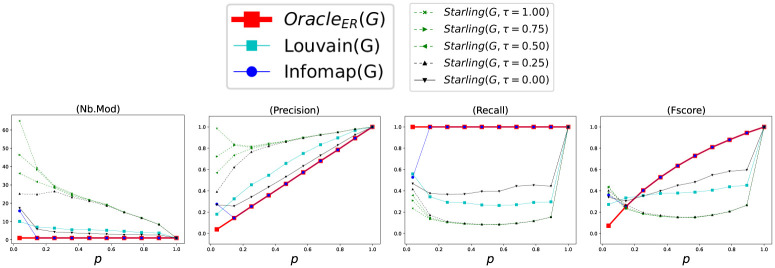

Fig 4. Performance with BenchmarkER.

Each point (x, y) is the average over 100 Graphs with p = x.

Let GER be a Random Graph built by BenchmarkER, ΓER = {V} its Clustering with only one Module, and OracleER(GER) = ΓER = {V} the Oracle method that knows ΓER. Fig 4 shows the accuracy of the methods according to p, considering each Clustering as a BECBB. We can see that:

OracleER knows ΓER, but does not know the concretely constructed edges E. Its number of Modules is always 1. Its Precision increases when p increases, because the density increases. Its Recall is always 1. Its Fscore therefore increases with p;

The best Precisions are obtained with Starlingτ=0.25 (but with a lot of Modules);

The best Recalls are obtained with Infomap;

The best Fscores are obtained with Infomap, except when p ≲ 0.2, where they are obtained with Starlingτ=0.25.
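The behavior of OracleER can be made concrete with a small calculation. The sketch below assumes the pair-counting reading of the measures suggested by the text (Precision is the share of intra-Module pairs that are edges, Recall is the share of edges that fall inside a Module) and an Fscore equal to their harmonic mean; the function name is hypothetical. With the single Module V, Precision is simply the density of the Graph and Recall is 1:

```python
from typing import Tuple


def oracle_er_scores(n: int, m: int) -> Tuple[float, float, float]:
    """Scores of the single-Module Clustering {V} on a graph with
    n vertices and m > 0 edges (assumed pair-counting definitions)."""
    pairs = n * (n - 1) // 2      # every pair of vertices lies inside V
    precision = m / pairs         # share of those pairs that are edges: the density
    recall = 1.0                  # every edge lies inside V
    f = 2 * precision * recall / (precision + recall)
    return precision, recall, f


# Illustration with n = 128 and m = 2077, the sample Graph of section 7.1.2.1.
precision, recall, f = oracle_er_scores(128, 2077)
print(f"Precision = {precision:.2f}, Recall = {recall:.2f}, Fscore = {f:.2f}")
```

Since the Fscore here is 2d/(1 + d) for a density d, it grows with p, in line with the observation above.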

7.1.2.1 Starling detects the slightly-overconnected regions. To observe the behavior of Starling more closely, we draw at Random one of the 100 Graphs with p = 0.25 used to construct Fig 4. This Graph has n = 128 vertices, m = 2077 edges, a mean vertex degree 〈k〉 = 32.45, a density d = 0.26, a Clustering coefficient C = 0.27, and an average shortest path length between any two nodes L = 1.74.

In this Random Graph with (n = 128, d = 0.26), Starlingτ=0.00 finds four Modules: δ1 with (n1 = 51, d1 = 0.33), δ2 with (n2 = 39, d2 = 0.35), δ3 with (n3 = 31, d3 = 0.34), and δ4 with (n4 = 7, d4 = 0.76), where ni is the number of vertices and di the edge density of δi. So, the four Modules δi found by Starlingτ=0.00 each have a density greater than that of the entire Graph, especially δ4: d4 = 0.76 > d = 0.26.
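The figures quoted for this Graph are mutually consistent, which can be verified with the standard definitions of mean degree (2m/n) and density (m divided by the number of vertex pairs). The helper names below are illustrative, and the edge count inferred for δ4 is our own reading of the rounded density, not a value reported in the text:

```python
def mean_degree(n: int, m: int) -> float:
    """Average number of edges incident to a vertex."""
    return 2 * m / n


def density(n: int, m: int) -> float:
    """Share of the n(n-1)/2 vertex pairs that are edges."""
    return m / (n * (n - 1) / 2)


n, m = 128, 2077
assert round(mean_degree(n, m), 2) == 32.45   # reported <k>
assert round(density(n, m), 2) == 0.26        # reported d

# The four Modules found by Starling partition the 128 vertices.
module_sizes = [51, 39, 31, 7]
assert sum(module_sizes) == n

# delta_4 has 7 vertices, hence 21 possible pairs; a density of 0.76 is
# consistent with 16 of them being edges (inferred, not stated in the text).
assert round(density(7, 16), 2) == 0.76
```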

The phenomenon of overconnected regions is particularly clear in Terrain-Graphs, but it also occurs in Erdős-Rényi Random Graphs. Indeed, such Graphs are not completely uniform: they present an embryo of structure, with slightly-overconnected regions resulting from Random fluctuations (for example the Module δ4, which is clearly overconnected in this Graph).

It is these slightly-overconnected regions present in Random Graphs that are exploited and amplified in [59] to transform a Random Graph into a Graph shaped like a Terrain-Graph, and that Starling detects in a Random Graph and so accepts as Modules (especially if τ increases). This is why, in Fig 4, the Precision of Starling is greater than that of OracleER: the densities of the Modules found by Starling are greater than the density of the single Module V of OracleER (which increases with p). However, the number of edges between the Modules found by Starling remains large, which is why the Recall of Starling stays small (especially if τ increases).

7.1.2.2 Behavior.

(i) Infomap usually returns Γ = {V}, which means that Infomap identifies the absence of strong structures;

(ii) Starlingτ returns Modules which have a density greater than that of the entire Graph, namely the slightly-overconnected regions (especially if τ increases), which means that Starlingτ identifies the presence of weak structures.

7.1.3 Performance on BenchmarkLFR

In most Terrain-Graphs, the distribution of degrees is well approximated by a power law. Similarly, in most Terrain-Graphs, the distribution of community sizes is well approximated by a power law [40, 60]. Therefore, in order to produce artificial Graphs with a meaningful group structure similar to most Terrain-Graphs, Lancichinetti, Fortunato and Radicchi proposed BenchmarkLFR [61] (Code to generate BenchmarkLFR Graphs can be downloaded from Andrea Lancichinetti’s homepage https://sites.google.com/site/andrealancichinetti/home). The Graphs in BenchmarkLFR are parameterized with:

N their number of vertices;

k their average degree;

γ the power law exponent of their degree distribution;

β the power law exponent of their community sizes distribution;

μ ∈ [0, 1] their mixing parameter: Each vertex shares a fraction 1 − μ of its links with the other vertices of its community and a fraction μ with the other vertices of the Graph.

With BenchmarkLFR, when the mixing parameter μ is small, the overconnected regions are well separated from each other, and when μ increases, the overconnected regions become less clear. Therefore, we set N = 1000, with k = 15 or k = 25, and (γ = 2, β = 1) or (γ = 2, β = 2) or (γ = 3, β = 1), and for each of these six configurations we study the accuracy of the methods according to μ.
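To make the role of μ concrete, the following sketch measures, for each vertex of a Graph with known communities, the fraction of its links that leave its community. It is not the BenchmarkLFR generator; the function name and the toy data are assumptions made for illustration:

```python
from typing import Dict, Hashable, Set


def mixing_fractions(
    adj: Dict[Hashable, Set[Hashable]],
    community: Dict[Hashable, int],
) -> Dict[Hashable, float]:
    """For each non-isolated vertex, the fraction of its links that go
    to vertices outside its own community (an empirical mu)."""
    fractions = {}
    for v, neighbours in adj.items():
        if not neighbours:
            continue  # the fraction is undefined for an isolated vertex
        external = sum(1 for u in neighbours if community[u] != community[v])
        fractions[v] = external / len(neighbours)
    return fractions


# Toy Graph: two triangles {0, 1, 2} and {3, 4, 5} joined by the edge 2-3.
adj = {0: {1, 2}, 1: {0, 2}, 2: {0, 1, 3}, 3: {2, 4, 5}, 4: {3, 5}, 5: {3, 4}}
community = {0: 0, 1: 0, 2: 0, 3: 1, 4: 1, 5: 1}
mu = mixing_fractions(adj, community)
print(mu)
```

In this toy Graph only vertices 2 and 3 have an external link, so the communities are well separated; raising the fractions towards 1 blurs them, as described above.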

Let GLFR be a Graph built by BenchmarkLFR, ΓLFR its expected Modules (its expected overconnected regions), and OracleLFR(GLFR) = ΓLFR the Oracle method that knows the ΓLFR of each GLFR.

We show in Figs 5 and 6 the accuracy of the methods according to μ, considering each Clustering as a BECBB. We can see that:

OracleLFR knows the expected Modules of each GLFR, but does not know its concretely constructed edges E. Its number of Modules is always the number of expected Modules. Its Precision decreases when μ increases, because there are more and more non-edges in the expected Modules, but OracleLFR does not know it. Its Recall decreases when μ increases, because there are more and more edges outside the expected Modules, but OracleLFR does not know it. Its Fscore decreases when μ increases, because both its Precision and its Recall decrease;

The best Precisions are obtained with Starlingτ=0.25, but with a lot of Modules when the overconnected regions are less clear (because here again (see section 7.1.2.2) Starling identifies as Modules the large number of small slightly-overconnected regions present in these Graphs);

The best Recalls are obtained with Infomap, but with very few Modules, and often only one, when the overconnected regions are less clear (because there is no way to compress the description of the path of a Random walker in these Graphs);

The best Fscores are obtained with Infomap and Starlingτ=0.25, except when the overconnected regions are less clear, where they are obtained with Starlingτ=0.25 alone.

Fig 5. Performance with BenchmarkLFR (k = 15).

Each point (x, y) is the average over 100 Graphs with μ = x. Fig 5(a)–5(c) are zooms on the Fscores when the overconnected regions are less clear (i.e. when the expected Modules can no longer be trusted).

Fig 6. Performance with BenchmarkLFR (k = 25).

Each point (x, y) is the average over 100 Graphs with μ = x. Fig 6(a)–6(c) are zooms on the Fscores when the overconnected regions are less clear (i.e. when the expected Modules can no longer be trusted).

7.2 Starling versus SGC

7.2.1 Performance on Real Terrain-Graphs

In this section, we compare Starling(G, τ) with SGC(G, κ), κ varying, on three small Terrain-Graphs:

GEmail: The Graph seen in section 7.1.1;

The dblp subGraph: The subGraph of Gdblp induced by the vertices of the largest Module of Infomap(Gdblp), which has 811 vertices;

The amazon subGraph: The subGraph of Gamazon induced by the vertices of the largest Module of Infomap(Gamazon), which has 380 vertices.

Table 3 gives the pedigrees of these Terrain-Graphs.

Table 3. Pedigrees: The notations are identical to those of Table 1.

| Graph | n | m | 〈k〉 | C | Llcc(nlcc) | λ(r2) |
|---|---|---|---|---|---|---|
| GEmail | 1005 | 16064 | 31.97 | 0.27 | 2.59(986) | −1.02(0.81) |
| dblp subGraph | 811 | 3774 | 9.31 | 0.19 | 3.33(811) | −1.35(0.91) |
| amazon subGraph | 380 | 959 | 5.06 | 0.06 | 2.92(380) | −1.11(0.66) |

The dataset describing GEmail contains “ground-truth” community memberships of the nodes. Each individual belongs to exactly one of 42 departments D = {d1, …, d42} at the research institute from which the emails are extracted. Let ΓDep be the Gold-Standard partition of the vertices by department, in which two individuals are in the same Module if and only if they belong to the same department.

We can therefore evaluate the quality of a Clustering by partition on GEmail according to two kinds of truths:

Intrinsic-Truth: The edges of GEmail, as we did in the previous sections, with Precision, Recall and Fscore respectively defined by the formulas 22, 23 and 24;

Extrinsic-Truth: By replacing E in the three formulas 22, 23 and 24 with the set of pairs of vertices that belong to the same department in ΓDep.
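The two kinds of truths can be sketched with one evaluation routine that only changes the set of "true" pairs. The code below assumes the pair-counting reading of formulas 22 to 24 (Precision is the share of intra-Module pairs that are true pairs, Recall is the share of true pairs that are intra-Module, Fscore is their harmonic mean); the function names and the toy data are hypothetical:

```python
from itertools import combinations
from typing import FrozenSet, Hashable, Iterable, Set, Tuple

Pair = FrozenSet[Hashable]


def intra_module_pairs(clustering: Iterable[Set[Hashable]]) -> Set[Pair]:
    """All unordered pairs of vertices that share a Module."""
    return {frozenset(p) for module in clustering for p in combinations(module, 2)}


def precision_recall_fscore(
    clustering: Iterable[Set[Hashable]], truth: Set[Pair]
) -> Tuple[float, float, float]:
    """Score a Clustering against a set of true pairs (assumed definitions)."""
    predicted = intra_module_pairs(clustering)
    hits = len(predicted & truth)
    precision = hits / len(predicted) if predicted else 0.0
    recall = hits / len(truth) if truth else 0.0
    f = 2 * precision * recall / (precision + recall) if hits else 0.0
    return precision, recall, f


# Toy data: a triangle 0-1-2 with a tail 2-3-4, and a two-department partition.
edges = {frozenset(e) for e in [(0, 1), (1, 2), (0, 2), (2, 3), (3, 4)]}
clustering = [{0, 1, 2}, {3, 4}]
departments = [{0, 1}, {2, 3, 4}]

# Intrinsic-Truth: the true pairs are the edges of the Graph.
intrinsic = precision_recall_fscore(clustering, edges)
# Extrinsic-Truth: the true pairs are those sharing a department.
extrinsic = precision_recall_fscore(clustering, intra_module_pairs(departments))
print(intrinsic, extrinsic)
```

The same Clustering can therefore score well against one truth and poorly against the other, which is the situation examined below for GEmail.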

Fig 7 shows the performances of SGC(GEmail, κ), on the one hand according to the Intrinsic-Truth in Fig 7(a), and on the other hand according to the Extrinsic-Truth in Fig 7(b). We can see that:

- According to the Intrinsic-Truth in Fig 7(a): Starling(GEmail, τ = 0.25), with 102 Modules, gets Precision = 0.26, Recall = 0.45, Fscore = 0.33. The maximum Fscore of SGC is obtained for κ = 54, with Precision = 0.36, Recall = 0.30, Fscore = 0.33. On the other hand, for τ ∈ {0.50, 0.75, 1.00}, Starling gets a better Fscore than the best Fscore of SGC. As BECBB: ∃τ ∈ [0, 1] such that Starling gets a better Fscore than the best Fscore of SGC.

- According to the Extrinsic-Truth in Fig 7(b): Starling(GEmail, τ = 0.25) gets Precision = 0.51, Recall = 0.61, Fscore = 0.56. The maximum Fscore of SGC is obtained for κ = 24, with Precision = 0.46, Recall = 0.60, Fscore = 0.52. According to the Extrinsic-Truth: ∃τ ∈ [0, 1] such that Starling gets a better Fscore than the best Fscore of SGC.

Fig 7. Performance of SGC(GEmail, κ), κ varying.

According to the Intrinsic-Truth in Fig 7(a), and according to the Extrinsic-Truth in Fig 7(b).

Fig 8 shows the performances, as BECBBs, of SGC on the dblp subGraph and on the amazon subGraph, according to their respective Intrinsic-Truths (their edge sets). We can see that: ∃τ ∈ [0, 1] such that Starling gets a better Fscore than the best Fscore of SGC.

Fig 8. Performance of SGC(G = (V,E), κ) according to the intrinsic truth E, κ varying.

7.2.1.1 Extrinsic-Truth according to Intrinsic-Truth of GEmail. Evaluated as a BECBB against the Intrinsic-Truth, ΓDep is less efficient than Starling(GEmail, τ = 0.25) or than SGC(GEmail, κ = 54), the best BECBB of SGC.

That is to say, Gold-Standards are not always the best BECBBs: we cannot always trust Gold-Standards provided by Benchmarks or built using human assessors, who, as shown in [62], do not always agree with each other, even when their judgements are based on the same protocol.

In our present example with GEmail, one may think that two individuals from the same department communicate in real life more often than two individuals from different departments; even so, two individuals from the same department do not necessarily need to communicate more by email than two individuals from different departments.

7.2.2 Performance on BenchmarkER

Because SGC needs κ, the number of groups of vertices, in advance as input, we define the following in order to compare Starling with SGC: SGCτ(G) = SGC(G, κ = |Starling(G, τ)|).

Let GER be a Random Graph built by BenchmarkER, ΓER = {V} its Clustering with only one Module, and OracleER(GER) = ΓER = {V} the Oracle method that knows ΓER.

Fig 9 shows the accuracy of the methods according to p, considering each Clustering as a BECBB. We can see that: ∀τ ∈ {0.00, 0.25, 1.00} on these Figures, the Fscores obtained by Starling(G, τ) are always equal to or greater than the Fscores obtained by SGCτ(G).

Fig 9. Performance with BenchmarkER.

Each point (x, y) is the average over 100 Graphs with p = x.

7.2.3 Performance on BenchmarkLFR

Let GLFR be a Graph built by BenchmarkLFR, ΓLFR its expected Modules (its expected overconnected regions), and OracleLFR(GLFR) = ΓLFR the Oracle method that knows the ΓLFR of each GLFR.

We show in Figs 10 and 11 the accuracy of the methods SGCτ(G) and Starling(G, τ) according to μ, considering each Clustering as a BECBB. We can see that ∀τ ∈ {0.00, 0.25, 1.00} on these Figures, the Fscores obtained by Starling(G, 0.25) are always equal to or greater than the Fscores obtained by SGCτ(G).

Fig 10. Performance with BenchmarkLFR (k = 15).

Each point (x, y) is the average over 100 Graphs with μ = x. Fig 10(a)–10(c) are zooms on the Fscores when the overconnected regions are less clear (i.e. when the expected Modules can no longer be trusted).

Fig 11. Performance with BenchmarkLFR (k = 25).

Each point (x, y) is the average over 100 Graphs with μ = x. Fig 11(a)–11(c) are zooms on the Fscores when the overconnected regions are less clear (i.e. when the expected Modules can no longer be trusted).

8 Discussion

8.1 Choosing the τ parameter of Starling

When using a Benchmark to evaluate the performance of methods on a Graph G = (V, E), the Oracle method knows the expected overconnected regions but does not know the concretely constructed edges E. Therefore, when the overconnected regions are less clear, as BECBB (with E as Intrinsic-Truth), some methods may outperform the Oracle method. This happens especially with the Starlingτ method if the τ parameter has been chosen appropriately.

We have seen in Formula 17 that the closer the τ ∈ [0, 1] parameter is to 1, the less Confluence is taken into account in QConf. With Terrain-Graphs, we propose using τ = 0.25 by default as a first approach, then decreasing τ if we want to promote Recall (because this decreases the number of Modules and increases their sizes) or increasing τ if we want to promote Precision (because this increases the number of Modules and decreases their sizes).

8.2 Length of Random walks

For clarity and simplicity, we restricted the Random walks used by Confluence to a length of t = 3. A first study of the impact of the length of those Random walks, in the context of transforming a Random Graph into a Graph shaped like a Terrain-Graph, was done in [59], but a deeper one should be carried out to understand how the length influences the mesoscopicity of Confluence and its effect on QConf and Starling.

For example, we can build a new Graph from an existing one by inserting a new vertex in the middle of each edge. Fig 12 illustrates the optimal Clusterings on the original Graph and on the Graph built in this way, for QConf with t = 3 and also with t = 6, allowing us to see that:

Fig 12. Optimal Clusterings for QConf with t = 3 and with t = 6: Shapes describe an optimal Clustering for QConf with t = 3, colors describe an optimal Clustering for QConf with t = 6.

On with t = 6:

;

;

.

On with t = 3:

;

;

.

The length of Random walks t could be advantageously chosen taking into account L, the average number of edges on the shortest path between two vertices.
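The construction used in this example, and its effect on L, can be sketched as follows. Inserting a vertex in the middle of each edge turns every edge into a path of two edges, so distances between original vertices exactly double. The function names, the labels given to the inserted vertices and the toy Graph are illustrative assumptions; L is computed by breadth-first search over all pairs of connected vertices:

```python
from collections import deque
from typing import Hashable, List, Tuple

Edge = Tuple[Hashable, Hashable]


def subdivide(edges: List[Edge]) -> List[Edge]:
    """Insert one new vertex in the middle of each edge."""
    new_edges: List[Edge] = []
    for u, v in edges:
        mid = ("mid", u, v)  # hypothetical label for the inserted vertex
        new_edges += [(u, mid), (mid, v)]
    return new_edges


def average_shortest_path_length(edges: List[Edge]) -> float:
    """Mean number of edges on a shortest path, over connected pairs."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)
    total = pairs = 0
    for source in adj:
        # Breadth-first search gives shortest distances from `source`.
        dist = {source: 0}
        queue = deque([source])
        while queue:
            x = queue.popleft()
            for y in adj[x]:
                if y not in dist:
                    dist[y] = dist[x] + 1
                    queue.append(y)
        total += sum(dist.values())
        pairs += len(dist) - 1
    return total / pairs


# Toy Graph: a triangle 0-1-2 with a tail 2-3.
toy = [(0, 1), (1, 2), (0, 2), (2, 3)]
L_before = average_shortest_path_length(toy)
L_after = average_shortest_path_length(subdivide(toy))
print(f"L before = {L_before:.2f}, L after = {L_after:.2f}")
```

A walk length t chosen relative to L would then scale with such a transformation, which is one way to read the suggestion above.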

8.3 Weighted and directed Graphs

If G is a Graph positively weighted by W = {wi,j such that {i, j} ∈ E}, then we can apply QConf and Starling by replacing Eqs 7 and 10 with Eqs 27 and 28 respectively:

| (27) |

| (28) |

If G is a directed Graph, one can also consider using a variant of PageRank [63–65] in place of Eq 8.

9 Conclusions and perspectives

In this paper, we defined Confluence, a mesoscopic vertex closeness measure based on short Random walks, which brings together vertices from the same overconnected region, and separates vertices coming from two distinct overconnected regions. Then we used Confluence to define QConf, a new Clustering quality function, where the τ ∈ [0, 1] parameter is a handle on the Precision and Recall, and on the size and the number of Modules. On a small toy Graph, we showed that optimal Clusterings for QConf improve on the Fscore of the optimal Clusterings for Modularity.

We then introduced Starling(G, τ), a new heuristic based on the Confluence of edges and designed to optimize QConf on a Graph G. On the same small toy Graph, we showed that Starling(G, τ) finds an optimal Clustering for QConf.

Comparing Starling(G, τ) to SGC(G, κ), Infomap, and Louvain, we show that:

- Performance with the Terrain-Graphs studied in this paper:

  - Louvain(G): Returns Clusterings with a low Fscore, caused by a much too low Precision despite a large Recall;

  - Infomap(G): Tends to favor Recall, with a good Fscore;

  - SGC(G, κ): Returns Clusterings with a good Fscore if we know the right number of groups of vertices κ in advance;

  - Starling(G, τ): Tends to favor Precision, with a good Fscore. ∃τ ∈ [0, 1] (usually around τ ≈ 0.25) such that the Fscore of the Clusterings returned by Starling is greater than the Fscores of the Clusterings returned by Infomap and greater than the best Fscores of the Clusterings returned by SGC.

- Performance with BenchmarkER:

  - SGC(G, κ = |Starling(G, τ)|): Fscore(SGC(G, κ = |Starling(G, τ)|), {V}) ≈ Fscore(Starling(G, τ), {V});

  - (i) Infomap usually returns Γ = {V}, which means that Infomap identifies the absence of strong structures;

  - (ii) Starlingτ returns Modules which have a density greater than that of the entire Graph, namely the slightly-overconnected regions (especially if τ increases), which means that Starlingτ identifies the presence of weak structures.

- Performance with BenchmarkLFR:

  - When the overconnected regions become less clear, Starling favors Precision while Infomap favors Recall:

    - (1) On the one hand, Starling(G, τ = 0.25) then gets greater Fscores than those of Infomap (see Figs 5(a)–5(c) and 6(a)–6(c)). This is because even in Graphs that are not Erdős-Rényi Graphs, Starlingτ identifies the presence of weak structures thanks to its behavior (ii), whereas Infomap identifies the absence of strong structures because of its behavior (i). On the other hand, Starling(G, τ = 0.25) then gets equivalent or greater Fscores than those of SGC(G, κ = |Starling(G, τ = 0.25)|) (see Figs 10(a)–10(c) and 11(a)–11(c)).

    - (2) Often (depending on τ), Starling(G, τ), thanks to its behavior (ii), is able to get larger Fscores than those of Oracles that would only know their expected overconnected regions (concretely slightly-overconnected), ignoring E, their concretely constructed edges. SGC(G, κ = |Starling(G, τ = 0.25)|) can also succeed (see Fig 10(a) and 10(c)), but remains weaker than Starling(G, τ = 0.25), whereas Infomap can never succeed, because of its behavior (i).

To sum up: If we know the right number of groups of vertices κ in advance, then we can use SGC. If we do not know it, then we can use Infomap on the one hand and Starling on the other hand, which are complementary:

- Infomap tends to favor Recall, with a good Fscore, and is able to identify the absence of strong structures;

- Starlingτ=0.25 by default tends to favor Precision, with a good Fscore, and is able to identify the presence of weak structures. Then, if we want to promote Recall with a smaller number of larger Modules, we can decrease τ, and if we want to promote Precision with a greater number of smaller Modules, we can increase τ.

Our follow-up work will focus on the role that the length of the Random walks used to compute Confluence plays in the outputs of Starling, as well as on the development of a Confluence-based Clustering method that accounts for edge directions and edge weights and returns possibly overlapping communities.


Acknowledgments

I thank the editors and the anonymous reviewers for their professional and valuable suggestions. I thank also Korantin Auguste for his suggestions and proofreading.

Data Availability

All relevant data are within the manuscript.

Funding Statement

Financed by Centre National de la Recherche Scientifique.

References

- 1. Watts DJ, Strogatz SH. Collective Dynamics of Small-World Networks. Nature. 1998;393:440–442. doi: 10.1038/30918

- 2. Albert R, Barabasi AL. Statistical Mechanics of Complex Networks. Reviews of Modern Physics. 2002;74:47–97. doi: 10.1103/RevModPhys.74.47

- 3. Newman MEJ. The Structure and Function of Complex Networks. SIAM Review. 2003;45:167–256. doi: 10.1137/S003614450342480

- 4. Aittokallio T, Schwikowski B. Graph-based methods for analysing networks in cell biology. Briefings in bioinformatics. 2006;7(3):243–255. doi: 10.1093/bib/bbl022

- 5. Bonacich P, Lu P. Introduction to mathematical sociology. Princeton University Press; 2012.

- 6. Steyvers M, Tenenbaum JB. The Large-Scale Structure of Semantic Networks: Statistical Analyses and a Model of Semantic Growth. Cognitive Science. 2005;29(1):41–78. doi: 10.1207/s15516709cog2901_3

- 7. Korlakai Vinayak R, Oymak S, Hassibi B. Graph Clustering With Missing Data: Convex Algorithms and Analysis. In: Ghahramani Z, Welling M, Cortes C, Lawrence N, Weinberger KQ, editors. Advances in Neural Information Processing Systems. vol. 27. Curran Associates, Inc.; 2014.

- 8. Nugent R, Meila M. An overview of clustering applied to molecular biology. Statistical methods in molecular biology. 2010; p. 369–404. doi: 10.1007/978-1-60761-580-4_12

- 9. Wolf FA, Hamey FK, Plass M, Solana J, Dahlin JS, Gottgens B, et al. PAGA: graph abstraction reconciles clustering with trajectory inference through a topology preserving map of single cells. Genome biology. 2019;20(1):1–9. doi: 10.1186/s13059-019-1663-x

- 10. Zesch T, Muller C, Gurevych I. Using wiktionary for computing semantic relatedness. In: Proceedings of the 23rd national conference on Artificial intelligence - Volume 2. Chicago, Illinois: AAAI Press; 2008. p. 861–866.

- 11. de Jesus Holanda A, Pisa IT, Kinouchi O, Martinez AS, Ruiz EES. Thesaurus as a complex network. Physica A: Statistical Mechanics and its Applications. 2004;344(3-4):530–536. doi: 10.1016/j.physa.2004.06.025

- 12. Li HJ, Wang L, Zhang Y, Perc M. Optimization of identifiability for efficient community detection. New Journal of Physics. 2020;22(6). doi: 10.1088/1367-2630/ab8e5e

- 13. Tran C, Shin WY, Spitz A. Community Detection in Partially Observable Social Networks. ACM Transactions on Knowledge Discovery from Data (TKDD). 2017;16:1–24. doi: 10.1145/3461339

- 14. Frey BJ, Dueck D. Clustering by passing messages between data points. Science. 2007;315(5814):972–976. doi: 10.1126/science.1136800

- 15. Grover A, Leskovec J. node2vec: Scalable Feature Learning for Networks. CoRR. 2016;1607.00653.

- 16. Yang Y, Wei H, Sun ZQ, Li GY, Zhou Y, Xiong H, et al. S2OSC: A Holistic Semi-Supervised Approach for Open Set Classification. ACM Trans Knowl Discov Data. 2021;16(2). doi: 10.1145/3468675

- 17. Cantini R, Marozzo F, Bruno G, Trunfio P. Learning Sentence-to-Hashtags Semantic Mapping for Hashtag Recommendation on Microblogs. Association for Computing Machinery. 2021;16(2).

- 18. Zhang Z, Zhang L, Yang D, Yang L. KRAN: Knowledge Refining Attention Network for Recommendation. Association for Computing Machinery. 2021;16(2).

- 19. Wu H, Ma T, Wu L, Xu F, Ji S. Exploiting Heterogeneous Graph Neural Networks with Latent Worker/Task Correlation Information for Label Aggregation in Crowdsourcing. Association for Computing Machinery. 2021;16(2).

- 20. O’hare K, Jurek-Loughrey A, De Campos C. High-Value Token-Blocking: Efficient Blocking Method for Record Linkage. Association for Computing Machinery. 2021;16(2).

- 21. Steinhaus H. Sur la division des corps matériels en parties. Bulletin de l’academie polonaise des sciences. 1957;4(12):801–804.

- 22. Luxburg U. A Tutorial on Spectral Clustering. Statistics and Computing. 2007;17(4):395–416. doi: 10.1007/s11222-007-9033-z

- 23. Chung FRK. Spectral Graph Theory. American Mathematical Society; 1997.

- 24. Bolla M. Relations between spectral and classification properties of multigraphs. DIMACS, Center for Discrete Mathematics and Theoretical Computer Science; 1991.

- 25. Hahn G, Sabidussi G. Graph symmetry: algebraic methods and applications. vol. 497. Springer Science & Business Media; 2013.

- 26. Still S, Bialek W. How Many Clusters? An Information-Theoretic Perspective. Neural Computation. 2004;16:2483–2506. doi: 10.1162/0899766042321751

- 27. Fraley C, Raftery AE. Model-Based Clustering, Discriminant Analysis, and Density Estimation. Journal of the American Statistical Association. 2002;97:611–631. doi: 10.1198/016214502760047131

- 28. Tibshirani R, Walther G, Hastie T. Estimating the number of clusters in a dataset via the Gap statistic. 2000;63:411–423.

- 29. Ben-Hur A, Elisseeff A, Guyon I. A Stability Based Method for Discovering Structure in Clustered Data. Pacific Symposium on Biocomputing. 2002; p. 6–17.

- 30. Lange T, Roth V, Braun ML, Buhmann JM. Stability-Based Validation of Clustering Solutions. Neural Computation. 2004;16:1299–1323. doi: 10.1162/089976604773717621

- 31. Ben-David S, von Luxburg U, Pal D. A Sober Look at Clustering Stability. In: COLT 2006. Max-Planck-Gesellschaft. Berlin, Germany: Springer; 2006. p. 5–19.

- 32. Knuth DE. The Art of Computer Programming: Fundamental algorithms. The Art of Computer Programming. Addison-Wesley; 1968.

- 33. Blondel VD, Guillaume JL, Lambiotte R, Lefebvre E. Fast unfolding of communities in large networks. Journal of Statistical Mechanics: Theory and Experiment. 2008;2008(10). doi: 10.1088/1742-5468/2008/10/P10008

- 34. Newman MEJ, Girvan M. Finding and evaluating community structure in networks. Physical Review E. 2004;69(2). doi: 10.1103/PhysRevE.69.026113

- 35. Fortunato S, Barthelemy M. Resolution limit in community detection. Proceedings of the National Academy of Sciences. 2006;104(1):36–41. doi: 10.1073/pnas.0605965104

- 36. Kumpula JM, Saramaki J, Kaski K, Kertesz J. Limited resolution in complex network community detection with Potts model approach. The European Physical Journal B. 2007;56(1):41–45. doi: 10.1140/epjb/e2007-00088-4

- 37. Reichardt J, Bornholdt S. Statistical mechanics of community detection. Physical Review E. 2006;74(1). doi: 10.1103/PhysRevE.74.016110

- 38. Arenas A, Fernández A, Gómez S. Analysis of the structure of complex networks at different resolution levels. New Journal of Physics. 2008;10(5):053039. doi: 10.1088/1367-2630/10/5/053039

- 39. Lancichinetti A, Fortunato S. Limits of modularity maximization in community detection. Physical Review E. 2011;84(6). doi: 10.1103/PhysRevE.84.066122

- 40. Clauset A, Newman MEJ, Moore C. Finding community structure in very large networks. Physical Review E. 2004;70(6). doi: 10.1103/PhysRevE.70.066111

- 41. Radicchi F, Castellano C, Cecconi F, Loreto V, Parisi D. Defining and identifying communities in networks. Proceedings of the National Academy of Sciences. 2004;101(9):2658–2663. doi: 10.1073/pnas.0400054101

- 42. Rosvall M, Bergstrom CT. Maps of random walks on complex networks reveal community structure. Proceedings of the National Academy of Sciences. 2008;105(4):1118–1123. doi: 10.1073/pnas.0706851105

- 43. Grunwald PD. The minimum description length principle. MIT press; 2007.

- 44. Gaume B, Duvignau K, Navarro E, Desalle Y, Cheung H, Hsieh SK, et al. Skillex: a graph-based lexical score for measuring the semantic efficiency of used verbs by human subjects describing actions. vol. 55 of Revue TAL: numéro spécial sur Traitement Automatique des Langues et Sciences Cognitives (55-3). ATALA (Association pour le Traitement Automatique des Langues); 2016. Available from: https://hal.archives-ouvertes.fr/hal-01320416.

- 45. Bollobas B. Modern Graph Theory. Springer-Verlag New York Inc.; 2002.

- 46. Stewart GW. Perron-Frobenius theory: a new proof of the basics. College Park, MD, USA; 1994.

- 47. Gaume B. Random walks in lexical small worlds. Revue I3 - Information Interaction Intelligence. 2004;4(3).

- 48. Brandes U, Delling D, Gaertler M, Gorke R, Hoefer M, Nikoloski Z, et al. On Modularity Clustering. IEEE Transactions on Knowledge and Data Engineering. 2008;20(2):172–188. doi: 10.1109/TKDE.2007.190689

- 49. Meila M. Comparing clusterings. In: Proc. of COLT 03; 2003. Available from: http://www.stat.washington.edu/mmp/www.stat.washington.edu/mmp/Papers/compare-colt.pdf.

- 50. Rosenberg A, Hirschberg J. V-Measure: A Conditional Entropy-Based External Cluster Evaluation Measure. In: Proceedings of the 2007 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning (EMNLP-CoNLL). Prague, Czech Republic: Association for Computational Linguistics; 2007. p. 410–420. Available from: https://aclanthology.org/D07-1043.

- 51. Amigo E, Gonzalo J, Artiles J, Verdejo F. A Comparison of Extrinsic Clustering Evaluation Metrics Based on Formal Constraints. Inf Retr. 2009;12(4):461–486. doi: 10.1007/s10791-008-9066-8

- 52. Hubert L, Arabie P. Comparing partitions. Journal of Classification. 1985;2(1):193–218. doi: 10.1007/BF01908075

- 53. Chakraborty T, Dalmia A, Mukherjee A, Ganguly N. Metrics for Community Analysis: A Survey. ACM Comput Surv. 2017;50(4). doi: 10.1145/3091106

- 54. Benson AR, Kumar R, Tomkins A. Sequences of Sets. In: Proceedings of the 24th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. KDD’18. New York, NY, USA: Association for Computing Machinery; 2018. p. 1148–1157.

- 55. Leskovec J, Kleinberg J, Faloutsos C. Graph Evolution: Densification and Shrinking Diameters. ACM Trans Knowl Discov Data. 2007;1(1):2–es. doi: 10.1145/1217299.1217301

- 56. Leskovec J, Krevl A. SNAP Datasets: Stanford Large Network Dataset Collection; 2014.

- 57. Erdős P, Rényi A. On Random Graphs I. Publicationes Mathematicae Debrecen. 1959;6:290–297.

- 58. Erdős P, Rényi A. On the evolution of random graphs. Publ Math Inst Hungary Acad Sci. 1960;5:17–61.

- 59. Gaume B, Mathieu F, Navarro E. Building Real-World Complex Networks by Wandering on Random Graphs. vol. 10. Revue I3 - Information Interaction Intelligence, Cepadues; 2010. p. 73–91.

- 60. Palla G, Derényi I, Farkas I, Vicsek T. Uncovering the overlapping community structure of complex networks in nature and society. Nature. 2005;435(7043):814–818. doi: 10.1038/nature03607

- 61. Lancichinetti A, Fortunato S, Radicchi F. Benchmark graphs for testing community detection algorithms. Physical Review E. 2008;78(4). doi: 10.1103/PhysRevE.78.046110

- 62. Murray GC, Green R. Lexical Knowledge and Human Disagreement on a WSD Task. Computer Speech & Language. 2004;18(3):209–222. doi: 10.1016/j.csl.2004.05.001

- 63. Gaume B, Mathieu F. PageRank Induced Topology for Real-World Networks; 2016. Available from: https://hal.archives-ouvertes.fr/hal-01322040.

- 64. Chen F, Zhang Y, Rohe K. Targeted sampling from massive block model graphs with personalized PageRank. Journal of the Royal Statistical Society: Series B (Statistical Methodology). 2019;82(1).

- 65. Kloumann IM, Ugander J, Kleinberg J. Block models and personalized PageRank. Proceedings of the National Academy of Sciences. 2016;114(1):33–38. doi: 10.1073/pnas.1611275114
